The futures of climate modeling

Where we are today

In the mid-20th century, with combined advances in computing and numerical modeling, the first general circulation models of the atmosphere came into being. These models quickly developed along two strands: one devoted to global weather prediction on short timescales and one devoted to simulating and predicting the longer-term climate. The latter played an influential role in the 1960s in verifying that anthropogenic greenhouse gas emissions warm the climate, a development that would lead to Syukuro Manabe winning the Nobel Prize in Physics in 2021. These climate models have since solidified our theoretical understanding of global warming and have allowed us to understand changes in the climate system as they have unfolded.

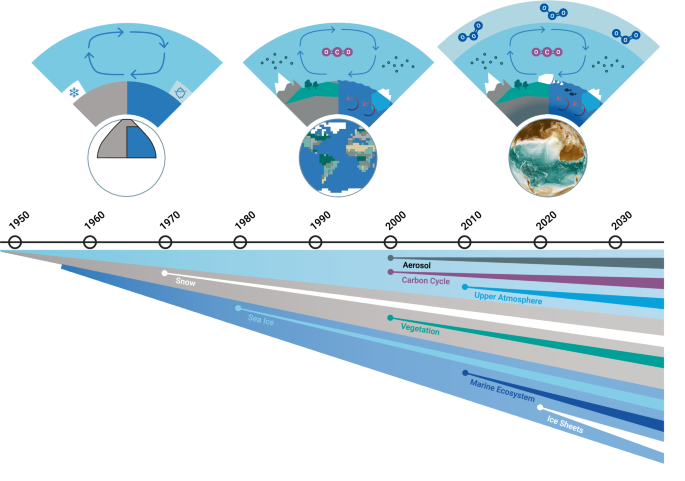

Climate models began with just the underlying physics of the atmosphere and ocean but have since expanded massively in complexity to become the Earth System Models (ESMs) of today (Fig. 1), which simulate not only the physical aspects of the climate system but also the biogeophysical and chemical aspects of the fully coupled atmosphere-ocean-land-sea ice-land ice system1. Projects such as the Coupled Model Intercomparison Project (CMIP) have allowed the global community to work together on the question of what future climate change we should expect and to understand how our models behave. Furthermore, initial-condition large ensembles, that is, simulations produced with a single climate model and identical external forcing but started from different initial conditions, have highlighted the importance of natural variability as a source of uncertainty in regional climate predictions across a range of timescales. Global ESMs such as those used in CMIP or in initial-condition large ensembles are an important source of understanding of the impacts of climate change and one of the primary sources of information for stakeholders about what the future might hold, either directly or as the large-scale boundary conditions for various downscaling methodologies. The increasingly pressing need for regional-scale climate information means that the demand for accurate information from climate models is now greater than ever before.

Fig. 1: Progression of model components from early climate models to the latest Earth System Models.

Climate modeling has traditionally improved predictions through advances in theoretical understanding and in the representation of physical processes. This is because, in contrast to weather predictions, climate predictions cannot be verified in real time. However, climate modeling has entered a unique time. The satellite-based observational record, combined with in-situ measurements and reanalysis products, now provides a global view of regional climate change that is more than 40 years long. For some features of the climate system and in some regions, climate change has been observed for even longer. This longer record, combined with the growing climate change signal, means that signals are starting to emerge from the noise across many regions and seasons. Recent studies comparing observed and modeled trends across different variables have revealed successes but also a number of discrepancies, e.g., modeled sea surface temperature (SST) trends in the tropical Pacific that are inconsistent with observations2. These discrepancies call into question whether climate models have the sensitivity to forcing needed to make accurate future predictions. In addition, the coarse resolution of current global models limits their ability to provide reliable information at the regional scales needed by stakeholders. These issues of model fidelity and fitness for purpose raise serious (existential) questions about the future of climate modeling and have also sparked a broader conversation on the methodological and epistemic significance of climate modeling3,4,5. At the same time, new tools such as km-scale models and AI/ML methods are becoming available. Therefore, while the climate is now changing rapidly, so too is the field of climate science, leading many to ask where the field should move next.
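The idea of a signal emerging from the noise can be made concrete with an initial-condition ensemble: the ensemble mean approximates the forced signal, and the spread across members approximates natural (internal) variability. The Python sketch below is illustrative only; the function names, the default 2-sigma threshold, and the rule that the signal-to-noise ratio must stay above the threshold for the rest of the record are assumptions for this example, not a method taken from the studies cited here.

```python
from statistics import mean, stdev

# Hypothetical helpers: a minimal sketch of signal-to-noise emergence,
# not a published time-of-emergence method.

def ensemble_signal_to_noise(members):
    """Return (signal, noise, ratio) for each time step of an ensemble.

    members: list of equal-length lists (at least two members), each holding
    anomalies of one ensemble member, e.g. regional temperature vs. a baseline.
    The ensemble mean estimates the forced signal; the sample standard
    deviation across members estimates internal variability.
    """
    n_steps = len(members[0])
    out = []
    for t in range(n_steps):
        values = [m[t] for m in members]
        signal = mean(values)
        noise = stdev(values)
        if noise > 0:
            ratio = abs(signal) / noise
        else:
            # No spread: the signal is either perfectly clear or absent.
            ratio = float("inf") if signal != 0 else 0.0
        out.append((signal, noise, ratio))
    return out


def time_of_emergence(times, members, threshold=2.0):
    """First time at which |signal|/noise reaches `threshold` and stays at or
    above it for the rest of the record; None if that never happens."""
    ratios = [r for _, _, r in ensemble_signal_to_noise(members)]
    for i, t in enumerate(times):
        if all(r >= threshold for r in ratios[i:]):
            return t
    return None
```

Published emergence studies define signal, noise, and threshold in various ways, so these choices should be read as placeholders.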

It has been argued that climate modeling is at a crossroads and that a step change is required to accelerate progress. Several divergent paths have been proposed for this step change, each leveraging a different tool. Here we reflect on the current state of climate modeling and discuss these different future paths, including their pros and cons. To inform the future, we review the history of successful Earth system predictions across timescales (weather, interannual, and climate) and highlight how multiple tools and steps were involved. We argue that the past is prologue for climate modeling and that embracing multiple futures is important as we work toward convergent paths for advances in climate modeling. We recognize that translating climate predictions into actionable and useful information requires attention to criteria beyond credibility and accuracy (that is, the technical quality of the information), such as salience (perceived relevance) and legitimacy (perceived objectivity)6,7. Our focus here, however, is on credibility and accuracy and on the tools needed to produce such information.

The proposed paths for a step change

Climate modeling has always been used as a tool for understanding and predicting large-scale climate change and has more recently evolved into a tool for accurately predicting its local impacts for policymakers. The diversity of stakeholders and end users highlights the need to serve a broad community with different needs and interests in different questions, which will require different tools and different model data. While these considerations have always been important, new technological opportunities have led to recent papers outlining different paths toward accelerating progress:

- Km-scale models8,9: in addition to providing more granular information that might be useful at the local scale, high-resolution models might allow us to improve numerics and reduce the number of parameterizations needed. At the kilometer scale, essential climate processes, such as precipitating deep convection, ocean mesoscale eddies, orography, and land-sea heterogeneity, are represented more explicitly and, arguably, more physically. This might also allow the focus to shift toward other aspects of complexity that still require parameterizations (e.g., cloud microphysics, ice sheets, and biogeochemical processes). High resolution alone, however, does not guarantee improved fidelity or fitness for climate simulations. In fact, long-standing large-scale biases still persist in km-scale simulations9, but further understanding and refinement as we gain experience with these models will likely lead to further progress10, much as has occurred over the decades with lower-resolution models. Additionally, the high computational cost of km-scale models limits calibration, the development of new subgrid-scale parameterizations, and the creation of large ensembles for uncertainty quantification.

- Physics augmented by parameter calibration11: parameter tuning (or calibration) continues to be central, even at high resolution, and has re-emerged with advanced techniques. Some of the most promising recent strategies include the systematic comparison of high-resolution and single-column simulations, history-matching techniques to rule out unrealistic solutions, process-oriented metrics targeting processes believed to dominate the climate change response to anthropogenic forcing, and nudged or initialized simulations12,13. However, while successful at tuning individual parameterizations or model components, these techniques still suffer from the curse of dimensionality in multiscale and multiphysics systems and can compensate for model errors rather than correct them. In addition, transparent, rigorous, and reproducible practices have yet to emerge12,13.

- Hybrid physics + AI14,15,16: despite increased resolution and improved understanding, many parameterizations are ad hoc, and tuning their free parameters might not be sufficient. AI approaches hold promise for rapid progress in discovering new data-driven parameterizations that capture the complexity of multiscale physics while significantly reducing computational cost. The potential drawbacks are that the resulting parameterizations can cause numerical instabilities through interactions with the resolved dynamics, might struggle to generalize to a different climate (past or future), and might not be amenable to physical interpretation. In addition, this approach requires high-quality datasets that capture the targeted subgrid fluxes to be parameterized.
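The history-matching technique mentioned under parameter calibration can be sketched as follows. Each candidate parameter setting is scored with an implausibility measure: the distance between an observed metric and the model's (typically emulated) prediction, scaled by the combined prediction, observational, and structural-discrepancy variances. Settings whose worst score across metrics exceeds a cutoff (3 is a common choice) are ruled out. This is a minimal sketch assuming a cheap emulator already supplies a predicted mean and variance for each metric; the function names and data layout are hypothetical.

```python
import math

# Hypothetical helpers: a minimal history-matching sketch, assuming an
# emulator has already produced (mean, variance) predictions per metric.

def implausibility(obs, pred_mean, pred_var, obs_var, disc_var):
    """Standardized distance between an observed metric and the model
    prediction, scaled by all acknowledged sources of uncertainty."""
    return abs(obs - pred_mean) / math.sqrt(pred_var + obs_var + disc_var)


def history_match(candidates, targets, cutoff=3.0):
    """Return the parameter settings that are not ruled out.

    candidates: list of (params, predictions), where predictions is a list of
        (pred_mean, pred_var) pairs, one per target metric.
    targets: list of (obs, obs_var, disc_var) tuples, one per metric.
    A setting is ruled out if its largest implausibility over all metrics
    exceeds `cutoff`.
    """
    kept = []
    for params, predictions in candidates:
        worst = max(
            implausibility(obs, m, v, ov, dv)
            for (m, v), (obs, ov, dv) in zip(predictions, targets)
        )
        if worst <= cutoff:
            kept.append(params)
    return kept
```

In practice this is applied in successive waves, refitting the emulator within the surviving region each time. The procedure rules settings out rather than selecting a single best one, which is why it is described above as a way to rule out unrealistic solutions.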

While each approach has merits for tackling important problems, each comes with caveats. To date, no approach has produced a step change in climate modeling or addressed the breadth of problems with the accuracy required by varied stakeholders. As one increases resolution, adds complexity, or includes new parameterizations, one uncovers new physics but also new issues. For example, recent work reported increased fidelity in simulating historical SST trends in initialized predictions with a high-resolution ocean model17; however, the improvement is not seen across models18. Global storm-resolving models demonstrate how increased resolution can improve the representation of tropical cyclone intensity relative to lower-resolution models, but aspects of model formulation other than resolution still lead to differences among storm-resolving models in how they represent the structure of tropical cyclones19. Similarly, the rise of super-parameterization (multi-scale modeling) in the early 2000s was considered a potential step change by many20,21. While it advanced our understanding of the Madden-Julian Oscillation and aerosol-cloud interactions, and it now provides training data for machine learning algorithms, it also uncovered new issues and limitations of column modeling. The reality is that there will likely be steps back before large steps forward are possible, and it is important for prediction and understanding to advance together. We can expect a range of tools and approaches to remain crucial for building reliable models.

Successful predictions

The ultimate objective of climate modeling is accurate prediction of the future statistical behavior of the climate system. At this critical moment in climate modeling, it is useful to review the history of successful Earth system predictions across different timescales and their underlying ingredients. Importantly, successful predictions on different timescales have required different ingredients because their predictability comes from different sources: initial conditions for numerical weather prediction (an initial-value problem) and boundary conditions for climate prediction.

Numerical weather prediction

Over the past 40 years, the forecast skill of Numerical Weather Prediction (NWP) systems has steadily increased, a trend that has been referred to as the quiet revolution2. The increased skill of weather forecasts is arguably one of the most important scientific achievements of the past century. The success has been attributed to multiple paths or approaches rather than a single step change, in particular increased resolution, improved representation of small-scale processes (physical parameterizations), larger ensembles, and more observations that lead to more accurate initial conditions through data assimilation. More recently, the quiet revolution of NWP has been upended by AI/ML emulators that show skill comparable to, if not higher than, that of the most sophisticated physics-based high-resolution NWP models. Weather emulators can be run by graduate students on their laptops, in contrast to the most sophisticated physics-based high-resolution NWP models, which involve many scientists at large weather centers and require state-of-the-art supercomputers. The successful predictions of weather emulators seem to leverage reduced complexity, or at least decreased compute time. However, the success of these emulators depends critically on training data produced by physics-based models that require state-of-the-art supercomputers.

Interannual variability

The El Niño-Southern Oscillation (ENSO) is the dominant mode of climate variability on interannual timescales. It affects weather and agriculture globally. The first successful forecast of ENSO was of the 1986 El Niño, by Cane et al.22. The ingredients of this prediction were a coupled model of atmosphere-ocean dynamics23 that combined a Gill model24 for the atmosphere with a reduced-gravity model for the ocean. The ocean model was linearized about a background state given by observations. Further development of this model led to a second successful prediction, of the 1991 El Niño, with a 1-year lead time. This is a clear case where the innovative combination of theoretical understanding and idealized modeling has led to valuable predictions of a feature of the climate system. Of course, more complex dynamical and statistical models are now routinely used in ENSO prediction, but, to this day, the Cane-Zebiak model is included in the NOAA CPC ENSO forecast, and it remains competitive against these more complex models (NOAA CPC).

Response to CO2 increase

In 2021, the Nobel Prize in Physics was awarded, in part, to Dr. Syukuro Manabe for “reliably predicting global warming”. Manabe’s predictions are another case where simulation was successfully combined with understanding25,26. Manabe and colleagues simplified the physics and added complexity one step at a time to understand the mechanisms underlying the emergent response to increased CO2. This approach contrasts with the perception that increasing model complexity improves the representation of Earth’s climate. Starting with radiative-convective equilibrium, Manabe and Wetherald27 predicted tropospheric warming and stratospheric cooling. Upon adding atmospheric dynamics and the sea-ice albedo feedback, they predicted the latitudinal pattern of global warming: polar-amplified warming and tropical upper-tropospheric warming28,29. Finally, upon adding land processes and ocean dynamics, they predicted the longitudinal pattern of global warming: land warms more than the ocean, and the Southern Ocean can cool transiently30. By adding physics sequentially, they could identify which emergent responses each added process controlled. All of these predictions have since been observed31, and this represents a clear case where incremental additions in complexity toward an all-encompassing, complex simulation led to improved understanding of, and confidence in, the model behavior (Fig. 2).

Fig. 2: Spatial distribution of surface air temperature change (a) in response to a doubling of CO2, during years 60–80, and (b) in observations, between 1961–1990 and 1991–2015.

Future convergence/The path forward

A common theme that emerges from the above successes in predicting aspects of the Earth system is that multiple tools and approaches were involved (Fig. 3). There were methodological advances, such as improvements in observations, models, and assimilation methods. There were also advances in fundamental understanding obtained through a hierarchy of approaches, such as an understanding of the basic ingredients required for ENSO prediction and of the signals produced in the first climate projections, which lent credence to their validity. Past is prologue: heeding these lessons, and retaining and enhancing the wide variety of tools in our toolbox, is a clear path toward improved understanding and prediction of climate change.

Fig. 3: Embracing different approaches, including hierarchical modeling, machine learning, and high-resolution modeling, will ultimately lead to improved climate projections.

Climate modeling has entered a critical era in which regional signals have emerged from the noise and accurate predictions of the changes we will experience in the coming decade are more important than ever before. The growing climate change signal and lengthening observational record highlight areas where prior climate predictions have worked well, but they also uncover discrepancies that need to be resolved for both understanding and prediction. The escalating urgency with which society needs accurate climate information is leading to a variety of proposals for the future of climate modeling8,11,15,32 (Fig. 3). These proposals involve divergent paths and different tools, and some call for massive investment in a single avenue, which understandably raises concerns that resources for other avenues may suffer. However, none of the proposed approaches alone has yet proven sufficient to address the scientific challenges that the climate science community faces. Indeed, these paths are also highly dependent on one another. For example, machine learning approaches require high-resolution model data for training, and, with limited computational resources, high-resolution modeling efforts will almost certainly need to be augmented with emulators that can produce realistic probability distributions accounting for both forced signals and internal variability. Furthermore, confidence in projections from any modeling approach requires an underpinning of fundamental understanding of why the projected changes are being produced, which will almost certainly involve hierarchical modeling approaches alongside basic theory25,26,33. Indeed, we have learned from cases where models warmed too much34,35, had bugs that were later discovered36, or did not behave as expected37. Finding a problem and diagnosing the error is not a sign of failure; it is a sign of success and progress.

Our view is that all approaches have a role to play and that the community needs to identify and understand the synergies between them to determine how best to maximize their benefits for understanding unfolding climate change signals and providing actionable climate information for the coming decades. The past, and indeed many other areas of life, have taught us that collectively we are enriched by diversity. A wide range of approaches and tools, alongside strategies that allow them to inform one another, will likely be the best and most rapid path toward advances in climate modeling. A large body of literature in the philosophy of science supports the benefits of approaches that leverage diverse and interdependent tools to build theoretically grounded, quantitative predictions of complex systems38,39,40. Last, but not least, it is important to remember that climate modeling involves training young scientists, who benefit from diverse approaches41, and that we need to work together across generations to shape the direction of climate modeling.
