Deep learning models can predict violence and threats against healthcare providers using clinical notes

Introduction

Workplace violence and harassment toward healthcare providers is a major challenge for healthcare organizations around the world1. Evidence suggests that up to 38% of healthcare providers suffer violence in the workplace at some point in their careers, and incidents of physical intimidation, threats, verbal abuse, and sexual harassment are also common2. Research also indicates that the frequency of violent events and harassment of healthcare providers has continued to increase since the COVID-19 pandemic3,4,5, exacerbating provider burnout and leading to increased stress, staff turnover, absenteeism, and reduced job satisfaction and taking an overall toll on mental and physical health1. For medical inpatients, psychiatrists may be consulted to diagnose, treat, or de-escalate when staff perceive possible risk, but staff-initiated requests for consultation are often too late or never begun. At the same time, recent advances in machine learning and natural language processing (NLP) have demonstrated significant potential in training and fine-tuning sophisticated screening models capable of matching or even surpassing the capabilities of human experts6,7,8,9, and often doing so automatically, far faster, and at greater scale. We hypothesized that NLP models trained on corpora of clinical notes preceding past violent events could be utilized to prospectively determine patients and staff at risk of violence, enabling intervention and prevention.

Predicting violence against healthcare workers is challenging for a number of reasons. Actuarial models of suicidal or recidivistic criminal violence are notoriously poor, lacking dynamic input. Real-time data allows for better violence prediction, but requires time for universal screening to capture dynamic risks and complex interplay among patients, wards, hospitals, and social factors. Patients’ histories, illnesses, perceptions, and behavioral symptoms clearly define risks, as captured in screening measures such as the ABRAT10. Violence is more likely against individual healthcare workers with less experience, who are themselves insecure11 and anxious12, often in conditions of overwhelming traumatic stress. Violence is more prevalent in clinical environments where staff are too busy to respond to these acute needs13, reflecting institutional inequities and management decisions. The concentration of patients with a high static risk for violence in under-resourced clinical environments staffed by inexperienced and traumatized healthcare workers is a recipe for driving burnout and turnover14, compounding the deficit in experienced and behaviorally skillful staff. The use of force (whether through security staff, physical restraints, or chemical sedation) is typically initiated by frontline healthcare workers who may have the least skills and experience, and are influenced by dynamic processes of bias and fear perception which are strongly determined by patients’ race, poverty, and psychiatric labeling. Additionally, many incidents of violence or intimidation go unreported to hospital administrators15, limiting quality improvement and safety initiatives16.

For violence prediction tools to be valuable, they must encompass these complexities and provide identification early enough for a clinical response. Clinical services must be tailored to the different phenotypes of patient-level violent behavior and the needs of frontline healthcare workers at risk. These interventions must also be considered at the level of management units and healthcare systems in order to address the training, retention, and resilience of effective healthcare workers, as well as the inequities and biases that can exacerbate dynamic risks for violence in ill and injured patients. A few published studies have attempted to develop models capable of predicting violence against healthcare providers. Airakinsen et al. surveyed public sector workers in Finland, including nurses, to create logistic regression models for predicting violence or threats for a given worker over time17. Kowalenko et al. followed over 200 healthcare providers in an emergency department over 9 months and developed a linear regression model for prediction18. In both studies, as prediction focused on survey-based education, experience, and demographic characteristics of a given worker, the resulting models were not predictive of violence for a given patient or moment in time, limiting their utility for prospective interventions. Van Le et al. evaluated risk prediction using a curated dictionary approach to create features for SVM, Random Forest, logistic regression, and other models using case report forms19. Lata and Navel used logistic regression and other methods for predicting non-violent workplace behavior, though this was not specific to healthcare and limited to incidents of non-violent threats. Lee et al. used structured data from ED visits from a tertiary hospital in the Republic of Korea and achieved an F1 score using a random forest model of 0.8420. However, the study was conducted in a hospital setting under uniquely severe overcrowding and predicted violence likelihood across the entire time span of an encounter (potentially days or weeks), rather than a specific moment in time. Locality-specific EHR workflows, data quality, and cultural factors may further limit generalizability.

To the best of our knowledge, no published studies have attempted to use the contents of clinical notes for predicting violence in a healthcare setting or predicting violence for a specific moment in time. While the unstructured, free-text nature of clinical notes makes them somewhat challenging for analysis, narratives within clinical notes are also an incredibly rich source of psychosocial information, containing nuance and detail often absent from corresponding structured data21,22. We hypothesized that training a deep learning model using clinical notes could yield novel insights and high predictive performance.

This study contributes the following:

-

1.

A novel benchmark and clinical note corpus of predictions of forthcoming violence by human experts trained in psychiatry.

-

2.

A high-quality double annotation of the same corpus for violence risk factors, such as aggressive behavior, cognition, psychosis, and substance abuse.

-

3.

A baseline regression model using largely structured data achieves an F1 of 0.72.

-

4.

A named entity recognition (NER) model for predicting violence risk factors in clinical notes achieves an overall 0.7 F1.

-

5.

The first document classification model was able to predict violence against healthcare workers for a specific patient and moment in time, with 0.75 F1, exceeding that of our baseline regression model (F1 0.72) and human psychiatry team (F1 0.5).

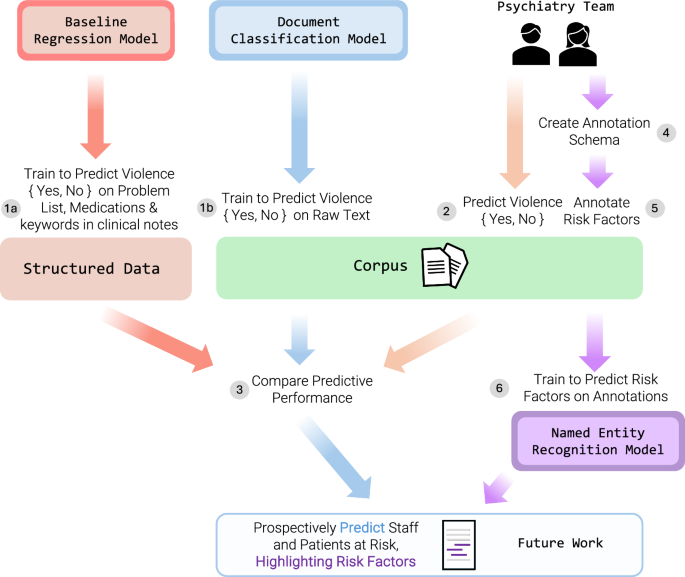

Figure 1 shows our overall study design.

1 We labeled our corpus with 1 (cases) or 0 (controls) (1a) trained a regression model using largely structured data and (1b) trained a Clinical-Longformer model for document classification as a regression task. 2 In pairs, our psychiatry team reviewed each document and predicted {Yes, No} that a document preceded a violent event. If a pair disagreed, a third psychiatrist reviewed it as a tie-breaker. 3 We compared the combined predictive performance of the psychiatry team across the entire corpus (Train + Test) to that of the document classification model (Test only). 4 Our psychiatry team leaders developed an annotation schema for risk factors for violence. 5 As the psychiatry team reviewed documents, they additionally annotated risk factors. The annotation schema was iteratively revised based on team feedback. 6 We trained a named entity recognition model for predicting risk factors.

Methods

Dataset

With the approval of our hospital leadership, we obtained two datasets of incidents from March 2021 to October 2023 (2.5 years) involving hospital security.

-

1.

Code grays are security staff response incidents of patients attempting to leave or resist care when this poses an imminent danger to themselves or others.

-

2.

Staff patient safety net (PSN) incidents involve violence against hospital staff, often leading to significant staff distress.

The two events often coincide. We first combined, and then de-duplicated the two datasets in order to ensure a given incident was recorded only once. Many Code Gray and PSN events are preceded by staff taking precautions when patients appear potentially violent or threatening, often mentioned in clinical notes. As we aimed to limit our predictions to only cases where the event was surprising and unanticipated—and thus prediction beforehand would be of most value—we filtered out any events in which, in clinical notes in the 3 days preceding the timestamp of the event, we found any mention of terms indicative of security monitoring, such as “1:1”, “sitter”, “detained”, “against medical advice”, and so on. This resulted in 280 cases with unique timestamps from 246 unique patients. Using these 280 cases, we extracted clinical notes in the 3 days preceding a given timestamp. As patients have many clinical documents written, some less potentially useful for our analysis, we limited clinical notes to only those of “H&P” (history and physical) and “Nursing Note” types. H&P notes are typically longer and describe the patient history and reason for hospitalization, recent labs and medication orders, and so on, and plan for care. Nursing notes tend to be shorter, more frequent updates on patient status. For each of the unique events, we concatenated the notes into a single long document.

Case-control matching

We achieved a 1:1 matching of cases and control patients. To do so, we wrote an algorithm querying our data warehouse to match cases to a control patient of the same biological sex, same age within ±5 years, admitted in the same 2.5-year time window, and with the highest number of matching ICD-10 diagnosis codes for the encounter, limited to patients with an H&P note written. For example, a male case patient who was 46-years-old at the time of the violent event and admitted with diagnosis codes for Altered Mental Status (R41.82), Wheezing (R06.2), and Tachycardia (R00.00), would be matched to the first male control patient aged 41–51 and with the same admitting diagnoses found. If no patient had all 3 diagnosis codes, the first patient with 2 of the 3 would be selected, and so on. This resulted in 280 corresponding control patients. We used the time 3 days after the initial H&P note as an artificial timestamp for controls and similarly created a long document for each. Our combined case + control dataset thus had 560 total documents.

Risk factor annotation schema and annotation

Because our long-term goal is to prospectively identify at-risk providers and patients in order to intervene and de-escalate potential violent events, ranking risk as a regression task alone (i.e., predicting a normalized value between 0.0 and 1.0) does not lend sufficient explainability. For example, if a psychiatry team received a daily report of providers and patients at risk but the report showed only a single predicted continuous value alongside each patient (e.g., “John Doe: 0.387”), it would be impossible to understand why a model output such a prediction without actually reviewing a patient’s chart—with the caveat that even then, human understanding and factors influencing model prediction may not align. While possible solutions such as SHAP23 could be utilized to highlight segments of clinical notes correlated to a given model output, highlights across an entire set of long concatenated notes could be time-consuming to read, when instead a short summary would be preferable. We, therefore, reasoned that developing an annotated corpus of risk factors for violence from the perspective of psychiatrists could be used to train an NER model and aid in explainability and summary for such a future report. Risk factor identification could thereby guide actionable, targeted interventions such as substance use treatment, suicide precautions, or delirium management.

Our lead psychiatry team members developed an annotation guideline for the following eight categories:

-

1.

Aggressive behavior—Aggressive actions observed during hospitalization and those performed prior to admission. Observed behaviors were based on the validated Broset Violence Checklist24, a validated, short-term violence prediction instrument. Previous actions included interpersonal violence perpetration and victimization, such as the history of assaults or suicide attempts.

-

2.

Cognition—The six neurocognitive functioning domains: (1) memory and learning, (2) language, (3) executive functioning, (4) complex attention, (5) social cognition, and (6) perceptual and motor functioning. In clinical documentation, cognitive impairments are often reported through a patient’s levels of alertness, orientation status, and ability to comprehend medical care and communicate medical decisions.

-

3.

Mood symptoms—Based on DSM-5 symptomatology for depressive, anxious, and manic conditions, this category identified disordered alterations in patients’ emotional states during the current hospitalization such as suicidal ideation, hopelessness, rumination, panic, and grandiosity.

-

4.

Psychotic symptoms—A loss of contact with reality. This category included positive symptoms such as delusions, hallucinations, and disorganized thoughts and behaviors, and negative symptoms such as emotional blunting, avolition, and poverty of thought.

-

5.

Acute substance use—The recent recreational use of mood-altering substances, both legal (such as nicotine, alcohol, and cannabis) and illicit (such as opioids and stimulants). Recent use prior to or during admission could be self-reported or referenced through toxicology results. In addition, this category included signs and symptoms of active substance intoxication, withdrawal, and craving.

-

6.

Unmet needs/interpersonal conflict—Patient-reported dissatisfactions with care. This included concrete complaints such as poorly controlled pain, interrupted sleep, rescheduled procedures, and premature or delayed discharge. It also included abstract patient perceptions of mistreatment by medical providers, such as feeling ignored or judged.

-

7.

Noncompliance—Patient refusal to participate in medically necessary care. This included refusing to take scheduled medications, following physical restrictions, and participating in clinical interviews, exams and diagnostic interventions such as lab draws and imaging studies. It also included the purposeful removal of medical equipment such as braces or bandages.

-

8.

High care utilization—A disproportionate burden on the healthcare system due to elevated resource use. This included references to bounce-back medical admissions, frequent emergency room visits, past psychiatric hospitalizations (for either voluntary or involuntary treatment), past incarcerations, and past or current treatment with community mental health organizations.

For our 560 total documents, we enlisted the help of six total annotators of various levels of experience in psychiatry: two attending psychiatrists, three psychiatry residents, and one medical student entering psychiatry residency. In addition to annotating risk factors, we also utilized our psychiatry team to establish a baseline of how well human experts can predict an upcoming violent event by reading clinical notes. To do so, at the end of each document, we added the text << CODE_GRAY_OR_PSN_WILL_OCCUR >>, which annotators were instructed to annotate as Yes or No based on their experience and intuition. In order to ensure a high quality of annotation, we first trained all annotators on the same 20 randomly selected documents, then copied all annotation variations for each training document into a “differential” file from which the annotation team used to reconcile differences. We then double-annotated the remaining documents by pairing annotators and randomly assigning 135 documents to each group, split into batches. After each round of annotation was complete, we generated differential files for each pair and completed the reconciliation. Two of the annotators were paired twice, and thus annotated approximately twice the number of documents as others. If a pair differed in their Yes/No prediction of violence in a given reconciled document, a 3rd “tie-breaker” psychiatrist from our annotation leaders reviewed the document and determined a final Yes/No prediction. Among annotators, prior to reconciliation, the mean pairwise inter-annotator agreement measured by F1 score using a relaxed scoring method requiring matching labels and overlapping (though not identical) token spans was 0.71, indicating reasonably high agreement.

Baseline regression model

In addition to our human psychiatry group, we also aimed to create an additional baseline regression model using largely structured data elements. We based the inputs to this model on the MEND Screening Model at the University of Pennsylvania25, using patient age at prediction, psychiatric diagnoses associated with medication orders, presence of active mood disorders and anxiety-related diagnoses on the problem list or past billing diagnosis codes, whether antidepressants were administered in the encounter, and prior ED visits with the psychiatric complaint within past two years. Additionally, we explored the inclusion of keyword items in preceding clinical notes relating to anxiety, abuse, psychiatry, depression, withdrawal, ativan, alprazolam, and intravenous drug use.

Evaluation

At a high level, our evaluation sought to answer the following questions:

-

1.

How well can a document classification model predict violence? How does this compare to human experts or models using structured data? Alongside our human and structured data baseline, we aimed to create a deep learning model capable of matching or surpassing human experts in prediction, trained on an unannotated raw text dataset. As documents in our dataset tended to be long, we fine-tuned the Clinical-Longformer base model26 for document classification after randomly splitting our dataset into an 80/20 split of train and test documents. Clinical-Longformer builds upon the findings of the Longformer model27, which replaces the standard full attention mechanism in Transformer models with a combination of sliding window and sparse global attention, and in doing so reduces memory consumption and allows for a larger context window. As discussed, our documents were concatenations of multiple clinical notes prior to a given timestamp for a single patient. In cases where a given document exceeded the length of the context window allowed by Clinical-Longformer or our GPU memory constraints, after some experimentation, we found that cropping the document by taking only the beginning and ending (up to 50% of characters allowed in the context window on each side), effectively removing the middle of the document, to work reasonably well.

-

2.

How well can a NER model predict risk factors? Using the risk factor annotations by our psychiatry team, we trained an NER model to predict an output label for each token in a given document. In order to evaluate every word in a given document, we used a moving-window strategy, splitting each document based on the maximum allowed tokens for a given model’s context window, then evaluating each window of text using the Bio_ClinicalBERTbase model28.

We use the F1 score as our primary evaluation metric in both tasks, where F1 = 2 × (precision × recall)/(precision + recall).

All experiments were approved by our institutional review board (IRB #00018889).

Results

The results of our first experiment are shown in Table 1. After annotations and tie-breaks of predictions were completed, the psychiatry team had an overall precision of 0.62, recall of 0.41, and F1 of 0.5, with the top individual at 0.62, 0.53, and 0.57, respectively. Some psychiatry team members annotated more documents than others based on availability. Our Clinical-Longformer model, adapted for a regression task, outputs a value between 0 and 1 which we normalize and compare to an optimized threshold for prediction. Table 1 shows results for 2 thresholds, one optimized for recall (0.75) and one for precision (0.78), both of which achieve an F1 of 0.75, exceeding that of our human psychiatrist team. Our baseline regression model also achieved reasonably good performance and surpassed the psychiatrist team with a somewhat lower F1 of 0.72. Using McNemar’s test29, differences between the Clinical-Longformer model and the baseline regression model were not, however, found to be statistically significant.

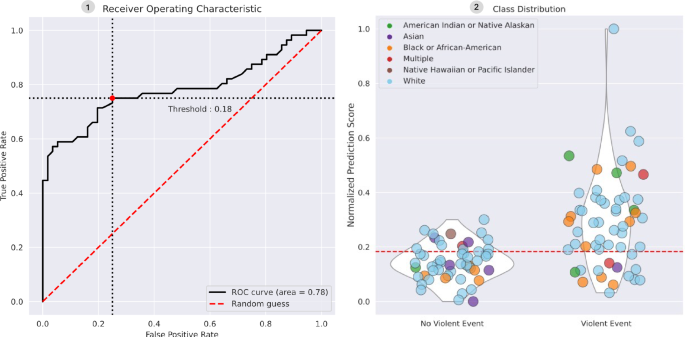

We focused subsequent analysis on our Clinical-Longformer model as it achieved the highest performance. Figure 2 shows the receiver operating characteristic (ROC) curve using a threshold at 0.18, favoring precision, as well as the distribution of test set patients by ethnic heritage. Table 2 similarly shows P/R/F1 and accuracy for test set patients by ethnic heritage.

Left—ROC curve for the Clinical-Longformer model with a threshold at 0.18, which favors precision. Right—violin and bubble plots of test set patients, with the Y-axis showing normalized prediction scores. Bubbles are color-coded by patient ethnic identification. The test (shown) and train sets do not reflect the true distribution of demographics among all violent patients and should not be interpreted as such.

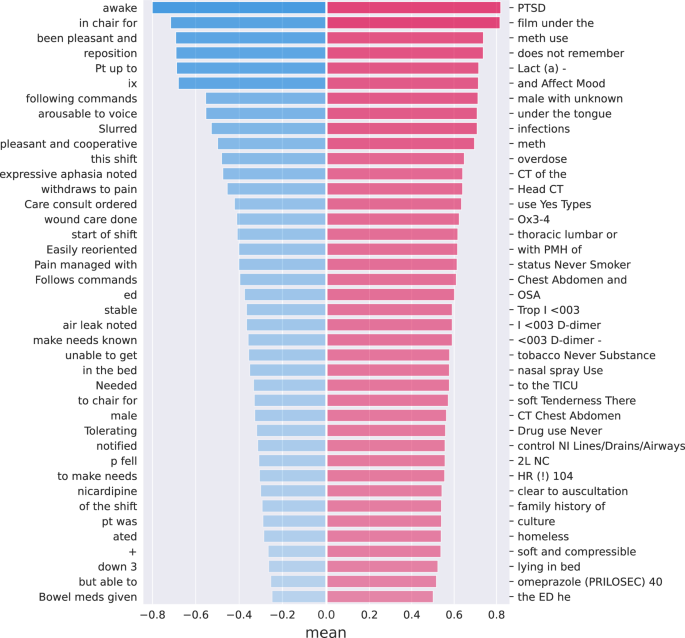

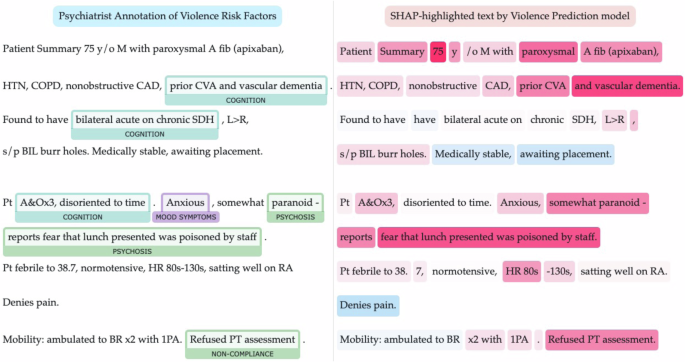

We additionally conducted an analysis using SHAP values to better understand phrases and documents correlated with higher and lower normalized prediction scores. Figure 3 shows mean sorted SHAP values from our Clinical-Longformer model extracted as unigrams, bigrams, and trigrams from the test set. Many, though not all, strongly correlated phrases are reasonably intuitive, with phrases correlated toward non-violent documents often reflecting reassuring nursing language (e.g., “pleasant and cooperative”), while phrases such as “PTSD” or relating to methamphetamine use correlated with violence. Other phrases, such as “family history of” or “of the shift” appear erroneous or irrelevant, though this is difficult to demonstrate empirically. Other phrases may be predictive of violence in ways that are possibly counter-intuitive or surprising to a human reader, though we leave a deeper analysis to future study. Figure 4 shows an example test set document with risk factor annotations by a psychiatrist on the left and SHAP values from the Clinical-Longformer model for the same document on the right. We found SHAP values often overlap with human-annotated risk factors, despite training the model on only raw, unannotated document text and violence outcomes.

The top 40 phrases we found to influence model predictions toward violent events (red) and not (blue), as measured by mean SHAP values across the test set.

The automatically learned phrases strongly predictive of Code Gray or PSN by the model (right) are in certain areas similar to psychiatrist annotations (left), despite being trained on raw clinical note text rather than annotations. In other cases, psychiatrist-annotated risk factors, such as “bilateral acute on chronic SDH”, appear to not strongly influence prediction for the model. This example has been abbreviated and modified to prevent patient re-identification.

Results of our second experiment for named entity recognition using the Bio_ClinicalBERTbase model are shown in Table 3. We calculated both a strict scoring strategy, requiring exact matching of annotated and predicted token indices and categories, as well as a relaxed scoring strategy, which requires that categories match and 50% or more of gold tokens be overlapped with predicted tokens. Our strict scoring strategy resulted in an overall F1 score of 0.65 and relaxed an F1 of 0.7. As we envision future prospective reports highlighting risk factors to include surrounding text context (e.g., the full sentence where a given risk factor was predicted), we find a relaxed scoring strategy to be a reasonable measure of model performance. Among risk factor categories, “cognition” showed the highest F1 (strict: 0.71 and relaxed: 0.75) while “unmet needs” showed the lowest (strict: 0.44 and relaxed: 0.48).

Discussion

Even for trained human experts with extensive knowledge of a local patient population and documented risk factors, prediction of violence remains challenging. However, our experiments demonstrate that deep learning models can predict forthcoming violent events toward healthcare providers with reasonably strong recall and precision. Our Clinical-Longformer model performed somewhat better than our baseline regression model using mostly structured data, with both achieving an F1 of over 0.7, compared to 0.5 for our psychiatrist team.

Our risk factor prediction NER model trained on psychiatrist annotations also serves as a novel means of providing visual context alongside a numerical risk score. While our NER model may not capture the same information presented in a SHAP visualization and does not necessarily provide insight into the decision-making of the Clinical-Longformer model, we believe it presents the most salient and readily actionable information from the perspective of psychiatrists and lends itself more easily toward summary and clarity than SHAP. We hope the outputs from our two models together therefore may enable a future psychiatry team viewing risk scores on recently admitted patients to quickly understand the risk factor context and select appropriate interventions.

As we intend to prospectively deploy our models to detect forthcoming violence and enable intervention and de-escalation, we also considered concerns around model bias based on patients’ ethnic heritage and racial identity. As can be seen in Table 2, using the threshold optimized for precision on the entire test set of 112 documents, the model performed reasonably well for patients identifying as American Indian or Native Alaskan (F1 0.86), Black or African-American (F1 0.82) and White (F1 0.77), but poorly for Asian and Native Hawaiian patients (F1 0.0 for both), though given the relatively small sample size further study on a larger set of patients is needed.

This study had a number of limitations. First, the clinical notes and timestamps used were from only one institution and a relatively small window of time (timestamps spread across 2.5 years, using clinical notes for prediction 0–3 days prior to each timestamp). External validation is needed to test if our results will generalize to other hospitals. In the future, we intend to work with colleagues at other safety net hospital systems and utilize techniques such as federated learning30 to leverage multiple sites’ data and improve models without sharing data directly with one another. We also explored and compared SHAP values and token highlights correlated to model predictions, though correlation does not imply causation. Our comparison to psychiatrist-annotated risk factors, while visually similar in many cases, nonetheless requires further study and empirical analysis to better understand how model learnings relate to psychiatric training and evaluation methods—especially when they appear divergent or even counter-intuitive. Future studies may additionally evaluate how the temporality of certain phrasings, such as current violent tendencies versus those shown in the past, may be used or interpreted by models such as ours, as our SHAP-based analysis does not take this into account. And while in the future we intend to use our named entity recognition model to provide summary information of a given document, as a separate model, it does not necessarily provide insight into the Clinical-Longformer model’s reasoning, and may surface different information in certain situations. In addition, we used a baseline regression model based on the MEND Screening Model, which uses the presence of structured data elements, such as medication orders, diagnosis codes, problem list items, and prior ED visits which may precede an index hospital encounter by weeks, months, or even years. In contrast, our Clinical-Longformer model uses clinical notes in the preceding 3 days prior to a given timestamp, though those notes in turn may describe narrative events that may long predate a given encounter. The different modalities of these two formats make them somewhat difficult to compare directly. To the best of our knowledge, however, there are few published models which would enable direct comparison, and as such we felt the MEND model to be the most reasonable choice as a baseline comparison. Our baseline regression model results (F1: 0.72) did not have a statistically significant difference from our Clinical-LongFormer model (F1: 0.75), but given the relatively small number size of our test set (n = 112), further study and additional data may be needed to definitively compare the two models.

We intend to explore methods for further improving predict performance, including exploration of models that combine structured and clinical note data as inputs, as well as preprocessing and integrating risk factor predictions into the document classification inputs to evaluate whether that improves our models as well. Additionally, we intend to explore the piloting of our models for prospective violence prediction and possible intervention, as well as robust evaluation of such predictions for bias and possible harm before deployment.

Responses