Boosted Bell-state measurements for photonic quantum computation

Introduction

Photonic fusion-based quantum computing (FBQC) is a promising platform for scalable, fault-tolerant quantum computation [1–5]. The two primitives of FBQC are entangling two-photon measurements, referred to as fusions, and small, pre-entangled resource states of constant size [1,6,7]. Quantum gates are implemented by performing fusions between photons of different resource states. The choice of measurement bases in which these fusions are performed dictates the computation. The success of the computation can then be deduced from the outcomes of the fusions. Topological fault tolerance can be achieved by choosing the resource states as well as the fusion network such that the fusion results allow for error detection through parity checks [1].

The required fusions can be implemented by Bell-state measurements (BSMs), in which the measured photons are projected onto the Bell basis [3,8]. Since the success probability of these fusions is directly given by the efficiency of the BSM, high BSM efficiencies are desirable for FBQC. Furthermore, efficient BSMs along with encoded fusions (see Fig. 7) can be used to improve the tolerance of FBQC schemes to photon loss [1,5,9].

BSMs using only linear-optical elements offer scalability due to their low experimental overhead. They are, however, inherently limited to a maximum efficiency of 50% [10]. To overcome this 50% bound while staying in the linear-optical regime, schemes that use ancillary photons to perform arbitrarily complete BSMs can be realized [11–14]. These ancilla-boosted BSM schemes are promising candidates for FBQC, as they enable an efficient, scalable implementation of fusions while relying only on additional photons and linear-optical components [15].

In this work, we demonstrate such a boosted linear-optical BSM scheme based on entangled ancillary photon pairs [12]. By using a fiber-based 4 × 4 multiport interferometer, BSM success probabilities of up to 75% can be achieved. Using fiber-integrated components circumvents the need for free-space interferometers, thus drastically reducing the experimental complexity in a resource-efficient manner and allowing the integration into existing fiber-based infrastructures. We report experimental BSM efficiencies of (69.3 ± 0.3)% using this scheme.

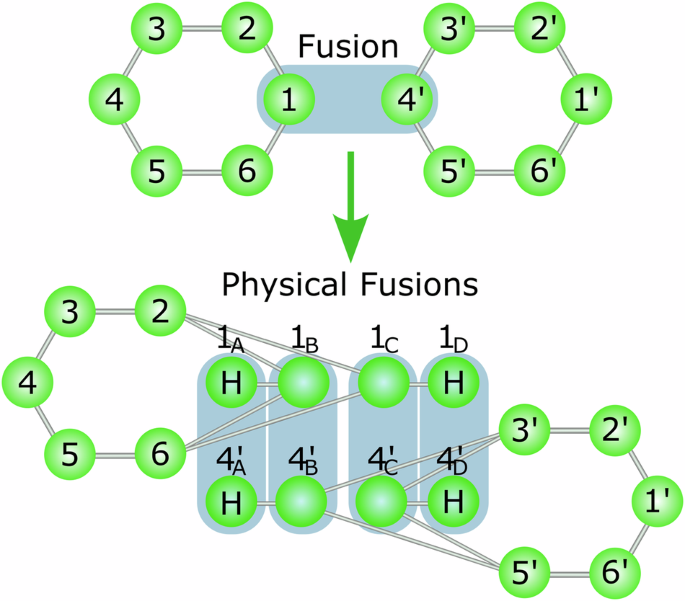

Furthermore, we investigate the effects of the boosted BSM on an encoded six-ring fusion network as proposed in ref. [1]. Here, the resource state consists of a six-qubit graph state in a ring configuration (see Fig. 1), in which each qubit is encoded in four physical photons (see Fig. 7). This fusion network has been chosen due to its robustness to imperfect fusions and photon losses [1,16]. By simulating photon-loss thresholds for this fusion network, we compare the robustness to photon loss of our boosted BSM with that of non-boosted BSMs. We show that the boosted BSM tolerates an individual photon-loss probability of 1.4%, while the photon-loss threshold for the non-boosted BSM is calculated to be 0.45%, a roughly threefold improvement for the boosted BSM.

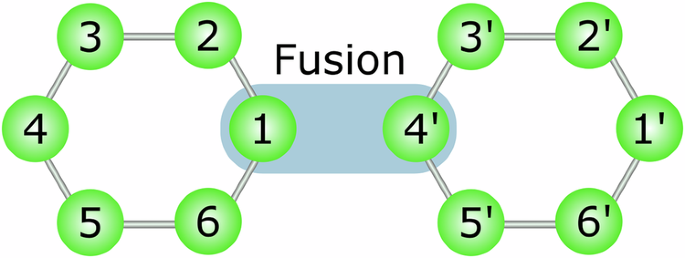

The resource states can be represented as a graph with six qubits in a ring configuration. The blue connection represents a fusion operation.

Results

Theoretical introduction

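A standard linear-optical BSM can be performed by sending two photons to the inputs of a balanced beam splitter and examining the spatial mode as well as the polarization (horizontal, H, or vertical, V) of the two photons as they exit the beam splitter. Based on the observed output pattern, a successful BSM projects the input photons into one of the four Bell states \(|\Psi^{+}\rangle\), \(|\Psi^{-}\rangle\), \(|\Phi^{+}\rangle\) and \(|\Phi^{-}\rangle\), where

\[ |\Psi^{\pm}\rangle = \frac{1}{\sqrt{2}}\left(\hat{a}_{1H}^{\dagger}\hat{a}_{2V}^{\dagger} \pm \hat{a}_{1V}^{\dagger}\hat{a}_{2H}^{\dagger}\right)|\mathrm{vac}\rangle \]

and

\[ |\Phi^{\pm}\rangle = \frac{1}{\sqrt{2}}\left(\hat{a}_{1H}^{\dagger}\hat{a}_{2H}^{\dagger} \pm \hat{a}_{1V}^{\dagger}\hat{a}_{2V}^{\dagger}\right)|\mathrm{vac}\rangle \]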

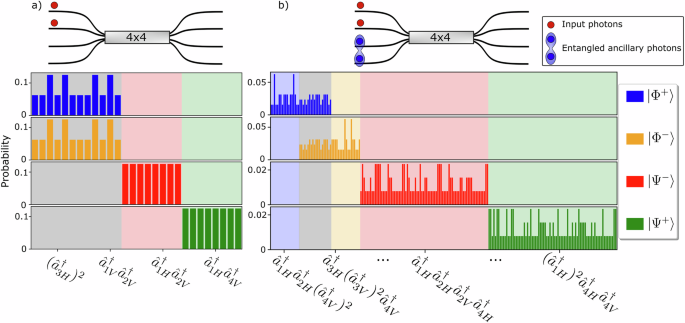

with \(\hat{a}_{i}^{\dagger}\) denoting the photonic creation operator with mode number \(i\) acting on the vacuum state \(|\mathrm{vac}\rangle\). However, in this configuration, only the Bell states \(|\Psi^{+}\rangle\) and \(|\Psi^{-}\rangle\) produce unique measurement outcomes, whereas the possible outcomes for the Bell states \(|\Phi^{+}\rangle\) and \(|\Phi^{-}\rangle\) are completely identical. This leads to the established standard linear-optical BSM success probability of \(p_c = 50\%\) [10,13,17]. The same BSM can be performed by using two inputs of a 4 × 4 multiport splitter, again leading to a success probability of \(p_c = 50\%\), as depicted in Fig. 2a.

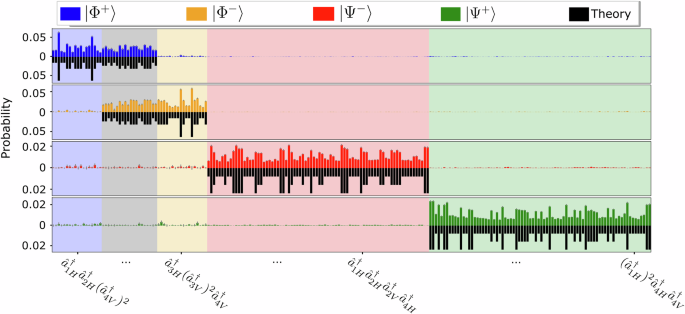

a Schematic and output statistics of the standard BSM scheme using two photons (red circles) as an input for the 4 × 4 splitter. Each bar corresponds to the probability of measuring a certain detector click pattern. For example, the bar at \((\hat{a}_{3H}^{\dagger})^{2}\) represents the probability of measuring two horizontally polarized photons in output 3. The probabilities are derived by applying the matrix \(\hat{U}_{4\times 4}\) from equation (4) to the different Bell-state inputs. Only the states \(|\Psi^{+}\rangle\) and \(|\Psi^{-}\rangle\) lead to distinct measurement outcomes, whilst the states \(|\Phi^{+}\rangle\) and \(|\Phi^{-}\rangle\) cannot be distinguished. The background colors indicate whether a measurement of the corresponding state allows an unambiguous identification of one of the Bell states, with grey denoting ambiguous measurement outcomes. b Schematic and output statistics of the boosted BSM scheme using an ancillary Bell state (blue circles). The states \(|\Psi^{+}\rangle\) and \(|\Psi^{-}\rangle\) still lead to distinct measurement patterns. Furthermore, the additional interference with the ancillary Bell state gives rise to outcomes that are unique to either \(|\Phi^{+}\rangle\) or \(|\Phi^{-}\rangle\). These unambiguous outcomes allow the identification of these states with a probability of 50%, consequently leading to a BSM success probability of 75%.

For implementing a boosted BSM scheme, an ancillary photon pair in the state

is prepared [12]. The input photons and the ancillary photon pair are then sent to the 4 × 4 multimode splitter. The multimode splitter implements a discrete Fourier transform matrix in the form of [18]

A schematic of the boosted scheme is depicted in Fig. 2b. Now, additional terms in the output arise and allow for discrimination between the Bell states \(|\Phi^{+}\rangle\) and \(|\Phi^{-}\rangle\) in 50% of the cases [12]. The expected output statistics for different Bell-state inputs in the 4 × 4 interferometer are depicted in Fig. 2b.
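As a minimal sketch of what a 4 × 4 discrete Fourier transform splitter does to single photons, the snippet below builds a DFT unitary and checks two properties: it is unitary, and it distributes a photon from any input evenly over all four outputs. The phase convention \(U_{jk} = e^{2\pi i jk/4}/2\) and the port ordering are assumptions made here for illustration; the exact form used for the fiber splitter may differ by conjugation or port relabeling.

```python
import cmath


def dft_unitary(n: int = 4) -> list[list[complex]]:
    """n x n DFT matrix with U[j][k] = exp(2*pi*i*j*k/n) / sqrt(n).

    The sign of the exponent and the port ordering are assumed conventions.
    """
    omega = cmath.exp(2j * cmath.pi / n)
    return [[omega ** (j * k) / n ** 0.5 for k in range(n)] for j in range(n)]


def is_unitary(u: list[list[complex]], tol: float = 1e-12) -> bool:
    """Check that the rows of u are orthonormal, i.e. U U^dagger = identity."""
    n = len(u)
    for a in range(n):
        for b in range(n):
            inner = sum(u[a][k] * u[b][k].conjugate() for k in range(n))
            expected = 1.0 if a == b else 0.0
            if abs(inner - expected) > tol:
                return False
    return True


U = dft_unitary(4)

# Single-photon splitting ratios |U_jk|^2: every entry should equal 1/4.
splitting = [[abs(entry) ** 2 for entry in row] for row in U]

print(is_unitary(U))
print(splitting[0])
```

The equal splitting ratios only describe single-photon behavior; the Bell-state discrimination itself relies on multi-photon interference, which this sketch does not model.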

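To quantify the quality of the implemented BSM, we use a set of parameters that can be extracted from experimental data, as introduced in ref. [13]. We determine the probabilities \(p_c\) and \(p_f\) as the probability of identifying the correct or an incorrect input Bell state, respectively. By calculating the average over the four possible input Bell states \(i \in \{\Psi^{+}, \Psi^{-}, \Phi^{+}, \Phi^{-}\}\), we obtain \(p_{c,\mathrm{total}}\) and \(p_{f,\mathrm{total}}\) with

\[ p_{c,\mathrm{total}} = \frac{1}{4}\sum_{i} p_{c,i}, \qquad p_{f,\mathrm{total}} = \frac{1}{4}\sum_{i} p_{f,i}. \]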

In the implemented boosted BSM scheme, \(p_{c,\mathrm{total}}\) can reach a maximum of 75%. The quantities \(p_c\) and \(p_f\) allow the definition of the measurement discrimination fidelity (MDF) as [19]

which denotes the probability that a measurement uniquely and correctly identifies the prepared Bell state. Another quantity used to describe the quality of the performed BSM is the total variation distance \(D\) with [20]

where \(q_i\) and \(f_i\) denote the theoretical and measured probability of the click pattern \(i\), respectively.
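The figures of merit above can be sketched in a few lines. The per-state success probabilities are the values reported in the Results; treating \(p_c\) as zero for \(|\Phi^{\pm}\rangle\) in the standard scheme (where it is not defined) is an assumption made here purely for averaging. The total variation distance is written in its common textbook form with a prefactor of 1/2, which is also an assumption, and the two click-pattern distributions are hypothetical numbers chosen only to exercise the function.

```python
def average_over_bell_states(p_per_state: list[float]) -> float:
    """Average a per-state probability over all input Bell states."""
    return sum(p_per_state) / len(p_per_state)


def total_variation_distance(q: list[float], f: list[float]) -> float:
    """Total variation distance, assuming D = 1/2 * sum_i |q_i - f_i|."""
    if len(q) != len(f):
        raise ValueError("q and f must cover the same click patterns")
    return 0.5 * sum(abs(qi - fi) for qi, fi in zip(q, f))


# Reported p_c for Psi+, Psi-, Phi+, Phi- (boosted scheme).
pc_boosted = [0.924, 0.927, 0.461, 0.462]
# Standard scheme: Phi+/- cannot be identified; 0.0 is an assumed stand-in.
pc_standard = [0.9812, 0.9809, 0.0, 0.0]

pc_total_boosted = average_over_bell_states(pc_boosted)
pc_total_standard = average_over_bell_states(pc_standard)

# Hypothetical theoretical (q) and measured (f) click-pattern distributions.
q_example = [0.5, 0.5, 0.0, 0.0]
f_example = [0.48, 0.5, 0.02, 0.0]
d_example = total_variation_distance(q_example, f_example)

print(pc_total_boosted, pc_total_standard, d_example)
```

The two averages agree, within rounding, with the reported total success probabilities of 69.3% and 49.05%.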

Using the measured values of \(p_{c,\mathrm{total}}\) for the boosted and non-boosted BSM, FBQC simulations can be performed to determine thresholds for the fusion erasure probability \(p_{\mathrm{er}}\). A fusion is erased if at least one photon used in the fusion is lost, leading to a loss of the measurement outcome. \(p_{\mathrm{er}}\) can be written in terms of individual photon-loss probabilities as

\[ p_{\mathrm{er}} = 1 - \left(1 - p_{\mathrm{loss}}\right)^{n_{\mathrm{BS}} + n_{\mathrm{anc}}}, \]

where \(n_{\mathrm{BS}}\) corresponds to the number of photons to be projected into a Bell state and \(n_{\mathrm{anc}}\) is the number of ancillary photons. \(p_{\mathrm{loss}}\) corresponds to the probability of losing an individual photon, which is used as the quantity of interest for the comparison of the different BSM schemes. The simulation is based on a fusion network consisting of six-ring graph states as depicted in Fig. 1.
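A short sketch of the relation between single-photon loss and fusion erasure follows. It assumes that losses are independent and identical for every photon, so that a fusion survives only if all \(n_{\mathrm{BS}} + n_{\mathrm{anc}}\) photons survive. The function name and the evaluation at the threshold values quoted in this work are illustrative choices.

```python
def fusion_erasure_probability(p_loss: float, n_bs: int = 2, n_anc: int = 0) -> float:
    """Probability that at least one of the n_bs + n_anc photons is lost.

    Assumes independent, uniform loss on every photon involved in the fusion.
    """
    if not 0.0 <= p_loss <= 1.0:
        raise ValueError("p_loss must be a probability")
    return 1.0 - (1.0 - p_loss) ** (n_bs + n_anc)


# Standard BSM: two photons, no ancillae, at the 0.45% loss threshold.
p_er_standard = fusion_erasure_probability(0.0045, n_bs=2, n_anc=0)

# Boosted BSM: two photons plus an ancillary pair, at the 1.4% loss threshold.
p_er_boosted = fusion_erasure_probability(0.014, n_bs=2, n_anc=2)

print(f"standard: {p_er_standard:.5f}, boosted: {p_er_boosted:.5f}")
```

The boosted scheme involves twice as many photons per fusion, so the same single-photon loss produces a larger erasure probability; the comparison in terms of \(p_{\mathrm{loss}}\) takes this overhead into account.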

Details on the simulation methods are outlined in “Methods”.

Experimental setup

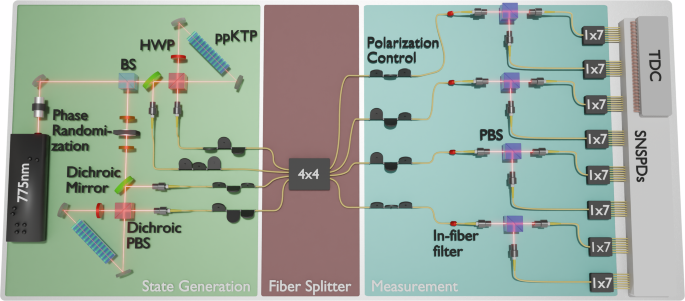

In order to experimentally realize the boosted BSM and investigate its performance in the context of FBQC, we generate two entangled photon pairs through spontaneous parametric downconversion. The sources can be adapted to emit any of the four Bell states. These Bell states are then sent to a fused fiber 4 × 4 splitter and analysed in a measurement stage. The full experimental setup is shown in Fig. 3.

The setup consists of a pulsed 775 nm pump laser (2 ps pulse duration, 76 MHz repetition rate), which is split using a balanced beam splitter (BS) to pump two Sagnac-type Bell-state sources. The sources are based on spontaneous parametric downconversion (SPDC) in periodically poled potassium titanyl phosphate (ppKTP) and generate polarization-entangled photon pairs at λ = 1550 nm. For setting the polarization of the pump laser, half-wave plates (HWP) are used. The generated photonic Bell states are then coupled into single-mode fibers and sent to the fused fiber 4 × 4 splitter. The relative phase between the Sagnac sources is randomized using two quarter-wave plates and a motorized HWP. To ensure that the polarization of the input photons is maintained, fiber polarization paddles are used. In the measurement stage, the photons are sorted by polarization using polarizing beam splitters (PBS), where each output mode is connected to a 1 × 7 splitter with seven superconducting nanowire single-photon detectors (SNSPD) to allow for probabilistic photon number resolution. For the implementation of this detection scheme, a total of 56 SNSPDs and time-to-digital converters (TDC) are needed.

To analyse the output statistics of the 4 × 4 fiber splitter, the photons are sent through polarizing beam splitters (PBS). Each output is then probabilistically split up using a 1 × 7 splitter and sent to superconducting nanowire single-photon detectors (SNSPDs) with an average efficiency of about 80.0% to achieve pseudo-photon-number resolution (PNR) (see “Methods”).

The statistics for the implemented BSM are measured by recording coincidence events at the detectors. For the improved scheme, a background correction has been performed to account for the probabilistic nature of the Bell-state sources (see “Methods”).

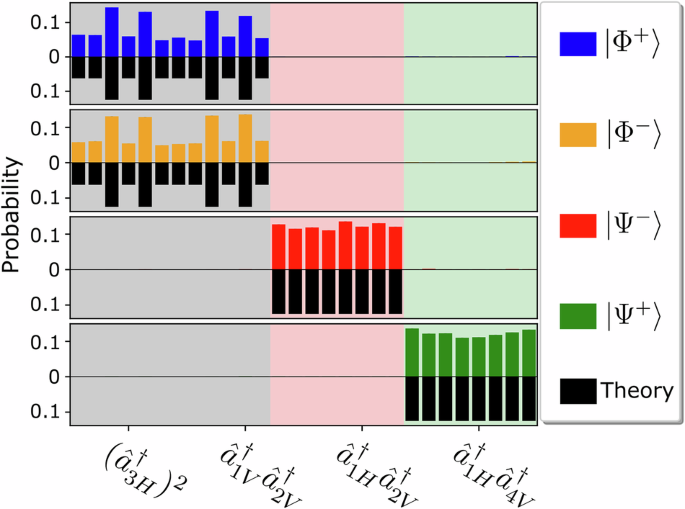

Standard Bell-state measurement

In order to compare the boosted BSM scheme to the standard scheme, we first perform a standard BSM by blocking the ancillary Bell-state source, routing only photons from a single Bell state to the 4 × 4 splitter. Using this scheme, the states \(|\Psi^{+}\rangle\) and \(|\Psi^{-}\rangle\) can be identified with probability \(p_c\) of (98.12 ± 0.03)% and (98.09 ± 0.1)% and MDF of (98.32 ± 0.03)% and (97.0 ± 0.1)%, respectively (see Fig. 4 and Table 1). As the measured click patterns perfectly overlap between the states \(|\Phi^{+}\rangle\) and \(|\Phi^{-}\rangle\), it is not possible to distinguish them in the standard scheme, hence \(p_c\) is not defined. The average distance \(D\) is calculated to be \(D_{\mathrm{avg}} = 0.0447 \pm 0.0001\), indicating a good overlap between experimental data and theory. Note that in Fig. 4, only measurement patterns corresponding to at least one of the Bell states are shown, while the measurement patterns not corresponding to any of the Bell states are discarded as a failed measurement. These events account for 1.37% of all outcomes.

Only the \(|\Psi^{\pm}\rangle\) states show unambiguous click patterns, while the \(|\Phi^{\pm}\rangle\) states perfectly overlap. Only states that correspond to at least one of the Bell states are depicted. Here, an average success probability of \(p_{c,\mathrm{total}} = (49.05 \pm 0.02)\%\) is achieved. The error on the individual probabilities is smaller than 0.001.

Boosted Bell-state measurement

In order to experimentally verify the boosting of the BSM, the improved scheme with the ancillary Bell state is performed. Due to interference with the ancillary Bell state, additional measurement patterns arise. These allow the identification of \(|\Phi^{+}\rangle\) with a probability of (46.1 ± 0.8)% and \(|\Phi^{-}\rangle\) with a probability of (46.2 ± 0.6)%, leading to an overall BSM efficiency of \(p_{c,\mathrm{total}} = (69.3 \pm 0.3)\%\) (see Fig. 5 and Table 1). This is a significant improvement over the maximum of 50% using the standard scheme. The reduction in \(p_c\) and MDF for the states \(|\Psi^{\pm}\rangle\) compared to the standard scheme is mostly caused by noise from either of the Bell-state sources generating multiple photon pairs at once, as well as by a decreased indistinguishability between photons generated from independent Bell-state sources (see “Methods”). The number of discarded measurement results that do not correspond to any of the four Bell states amounts to 5.45% of all outcomes.

Each bar corresponds to the probability of measuring a specific detector click pattern. The states \(|\Psi^{+}\rangle\) and \(|\Psi^{-}\rangle\) can be correctly identified with a probability of (92.4 ± 0.5)% and (92.7 ± 0.5)%, respectively. The improved scheme leads to measurement results that are exclusive to either \(|\Phi^{+}\rangle\) or \(|\Phi^{-}\rangle\). These states can be correctly identified with a probability of (46.1 ± 0.8)% and (46.2 ± 0.6)%, respectively.

FBQC simulations

We can now investigate the robustness of FBQC architectures to photon loss while comparing the standard BSM to our boosted BSM. For this simulation, we consider a six-ring fusion network with (2, 2)-Shor encoded six-ring resource states, similar to the one proposed in ref. [1]. In the (2, 2)-Shor encoding, each logical qubit is encoded in four physical qubits [21] (see Fig. 7). The simulation methods are explained in “Methods”.

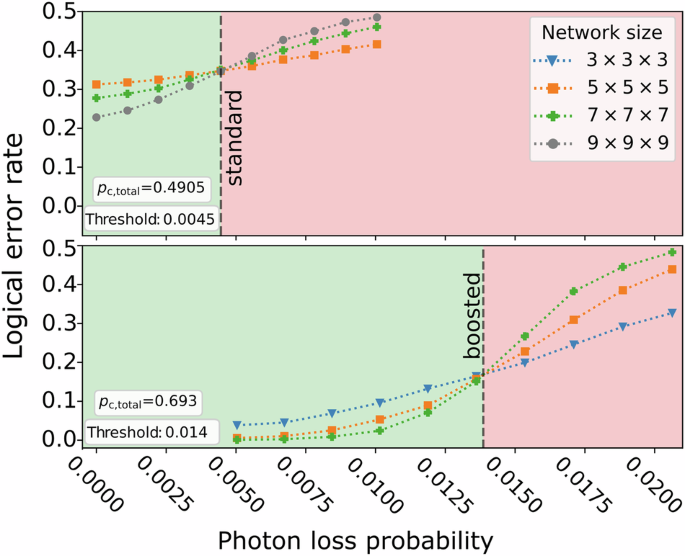

As a benchmark for robustness, we investigate the photon-loss threshold. This is done by simulating logical error rates for different fusion-network sizes, where the size is given by the number of unit lattice cells of the network in each direction, as discussed in ref. [1]. Each unit cell consists of two resource states. These simulations are performed while varying the photon-loss probability \(p_{\mathrm{loss}}\). The threshold for performing FBQC is given by the photon-loss probability below which the logical error rate decreases with increasing network size. When the photon loss is kept below this threshold, arbitrarily low logical error rates can be achieved by scaling the network size accordingly. The simulation results for network sizes three, five, and seven for a fixed BSM success probability of \(p_{c,\mathrm{total}} = 69.3\%\) for the boosted scheme, and for network sizes five, seven, and nine for a BSM success probability of \(p_{c,\mathrm{total}} = 49.05\%\) for the non-boosted BSM, can be seen in Fig. 6. Here, the photon-loss thresholds are determined to be \(p_{\mathrm{loss}}^{B} = 1.4\%\) for the boosted BSM and \(p_{\mathrm{loss}} = 0.45\%\) for the standard BSM. Therefore, using the boosted BSM scheme, the robustness of FBQC schemes to photon loss is improved by more than a factor of three. Furthermore, compared to the high logical error rates (20%–30%) simulated for the standard BSM even at low photon loss, the boosted BSM shows a significantly reduced logical error rate. This means that the boosted BSM demonstrated in this work can be used to achieve meaningfully low logical error rates that could unlock near- to mid-term applications of fault-tolerant photonic quantum computers.

Logical error rate for different network sizes and photon-loss probabilities for \(p_{c,\mathrm{total}} = 0.4905\) (top) and \(p_{c,\mathrm{total}} = 0.693\) (bottom). The simulation is performed for different sizes of the fusion network. The intersections mark the threshold at which arbitrarily low logical error rates can be achieved by increasing the size of the fusion network (green background). These photon-loss thresholds are found to be \(p_{\mathrm{loss}}^{B} = 0.014\) for the boosted scheme and \(p_{\mathrm{loss}} = 0.0045\) for the standard scheme.

Discussion

We present the implementation of a boosted BSM scheme using entangled ancillary photon states. We significantly surpass the usual 50% BSM efficiency limit for linear-optical schemes and find improved photon-loss thresholds for FBQC schemes. With an average success probability of (69.3 ± 0.3)%, we reach the highest ancilla-assisted linear-optical BSM success probability that has been demonstrated to our knowledge. We show that using the boosted BSM, fusion-based photonic quantum computation could be performed with an individual photon-loss probability as high as \(p_{\mathrm{loss}}^{B} = 1.4\%\), compared to \(p_{\mathrm{loss}} = 0.45\%\) with the standard BSM scheme. Thus, a notable increase in the loss robustness for fusion-based architectures can be achieved. Furthermore, the boosted BSM shows significantly reduced logical error rates compared to the standard BSM, even for small fusion network sizes, making it suitable for near- to mid-term applications of fusion-based schemes. Using fiber-integrated multiport splitters, we eliminate the need for complex free-space interferometers and thus greatly reduce the experimental complexity as well as the number of required components compared to bulk-optical implementations of similar schemes.

Our results show the benefit of using pre-entangled ancillary photon pairs as a resource to increase the success probability of Bell-state measurements, surpassing schemes in which non-entangled ancillary photons are used [11,13]. Due to the significance of efficient Bell-state measurements in photonic quantum computation, this work marks a milestone towards the viability of such architectures in real-world applications [22–26].

Methods

Photon sources

In order to verify that the generated photons are indistinguishable in their spectral and temporal degrees of freedom, Hong-Ou-Mandel experiments [27] have been performed between two photons emitted from the same source as well as between heralded single photons emitted from different sources. Here, we achieved interference visibilities of \(V_{\mathrm{HOM1}} = (99.2 \pm 0.3)\%\) and \(V_{\mathrm{HOM2}} = (99.0 \pm 0.4)\%\) for photon pairs emitted from the first and second source, respectively, while pumping the sources with 50 mW. For heralded single photons from each of the sources, we achieved an interference visibility of \(V_{\mathrm{HOM12}} = (94.0 \pm 0.6)\%\), indicating the generation of pairwise indistinguishable photons from both sources. The two-source visibility is slightly lower than the single-source visibility due to additional noise from higher-order emissions as well as residual spectral correlations. The average coincidence rates are about 40,000 twofold events and 10 fourfold events per second.

Background correction

The Bell-state sources used in this work are based on SPDC and as such generate two-mode squeezed vacuum states [28]. Consequently, higher-order emissions may occur, making a background correction necessary. The background is corrected by measuring and then subtracting the output statistics while blocking either source. Furthermore, the relative phase between the two sources is actively randomized to circumvent induced coherence effects [29]. Employing a background correction can lead to small negative values for measurement outcomes close to zero. In these cases, the absolute values are taken, leading to a slight underestimation of the reported BSM efficiencies. The need for background correction can be eliminated by using Bell-state sources based on deterministic single-photon sources [30] or heralded Bell-state generation schemes [31] in future implementations.

Pseudo photon-number resolution

To achieve pseudo-photon-number resolution, we probabilistically split each output mode into 7 modes. The outputs are split using fiber-based balanced 1 × 7 splitters, with each splitter output connected to an SNSPD. The probability of \(n\) input photons being routed to different detectors in the case of \(k\) outputs can be calculated as

\[ P(n, k) = \frac{k!}{(k-n)!\,k^{n}}. \]

Since this scheme is inherently probabilistic, the measured probabilities of events in which multiple photons are sent into one 1 × 7 splitter need to be corrected. This correction is done by multiplying each measured probability \(p_m\) by a factor of \(1/P_{\mathrm{PPNR}}(p_m)\). Here, \(P_{\mathrm{PPNR}}(p_m)\) is the probability that all photons contributing to \(p_m\) are measured in separate outputs of the 1 × 7 splitters. This is given by

\[ P_{\mathrm{PPNR}}(p_m) = \prod_{i} P\big(n_i(p_m), k\big), \]

where \(n_i(p_m)\) is the number of photons incident on the 1 × 7 splitter in mode \(i\) for the measurement outcome corresponding to \(p_m\). In this experiment, we have \(k = 7\) due to the employed 1 × 7 splitters.
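The pseudo-photon-number-resolution correction can be illustrated with a small sketch. It assumes ideal, perfectly balanced 1 × k splitters, for which the probability that \(n\) photons exit through \(n\) different outputs is the combinatorial factor \(k!/((k-n)!\,k^{n})\), and it ignores detector efficiency. The function names and the example click patterns are hypothetical.

```python
from math import factorial, prod


def prob_all_separate(n: int, k: int = 7) -> float:
    """Probability that n photons leave a balanced 1 x k splitter via n distinct outputs."""
    if n < 0:
        raise ValueError("photon number must be non-negative")
    if n > k:
        return 0.0  # more photons than outputs: at least two must share a detector
    return factorial(k) / (factorial(k - n) * k ** n)


def ppnr_correction_factor(photons_per_mode: list[int], k: int = 7) -> float:
    """Factor 1 / P_PPNR by which a measured probability is multiplied.

    photons_per_mode[i] is the number of photons entering the splitter in mode i.
    """
    p_ppnr = prod(prob_all_separate(n, k) for n in photons_per_mode)
    return 1.0 / p_ppnr


# Two photons in the same mode: detected as two clicks with probability 6/7.
p_two = prob_all_separate(2)

# Hypothetical patterns: one photon in each of two modes, and two photons bunched in one mode.
factor_split = ppnr_correction_factor([1, 1])
factor_bunched = ppnr_correction_factor([2, 0])

print(p_two, factor_split, factor_bunched)
```

Patterns with at most one photon per mode need no correction, whereas a bunched two-photon event is scaled up by 7/6 under these assumptions.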

Uncertainty calculations

The data presented in this work are generated by repeatedly measuring coincidence events in 120 s intervals. In order to achieve statistical significance, these measurements are repeated at least 500 times. In order to calculate the uncertainty for the number \(N_i\) of events in which click pattern \(i\) is measured, the standard deviation

is calculated and used as the uncertainty \(\Delta N_i\). In order to determine the uncertainty of all values calculated from \(N_i\), Gaussian error propagation is used.
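The uncertainty treatment can be sketched as follows. The use of the sample standard deviation (with the \(M-1\) normalization) over the repeated intervals is an assumption, as is the independence of the per-state uncertainties in the propagation step; the count values are hypothetical. The propagation example applies the Gaussian rule to the average over the four Bell states, using the per-state uncertainties reported for the boosted scheme.

```python
from math import sqrt
from statistics import stdev


def count_uncertainty(counts_per_interval: list[float]) -> float:
    """Sample standard deviation of the counts over repeated intervals (assumed estimator)."""
    return stdev(counts_per_interval)


def propagate_mean_uncertainty(sigmas: list[float]) -> float:
    """Gaussian error propagation for the mean of independent quantities.

    For y = (1/n) * sum_i x_i, the propagated uncertainty is sqrt(sum_i sigma_i^2) / n.
    """
    n = len(sigmas)
    return sqrt(sum(s ** 2 for s in sigmas)) / n


# Hypothetical counts of one click pattern in three measurement intervals.
delta_n = count_uncertainty([10, 12, 14])

# Reported uncertainties (in percentage points) of p_c for Psi+, Psi-, Phi+, Phi-.
sigma_pc_total = propagate_mean_uncertainty([0.5, 0.5, 0.8, 0.6])

print(delta_n, round(sigma_pc_total, 3))
```

Under these assumptions the propagated value is about 0.31 percentage points, in line with the quoted uncertainty of ±0.3% on the boosted success probability.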

Simulation methods

We performed FBQC simulations using Plaquette, an end-to-end fault-tolerance simulation software capable of modeling hardware imperfections in photonic and matter-based hardware platforms.

The six-ring fusion network (FN) simulated in this paper corresponds to the one discussed in ref. [1], where we assume periodic boundary conditions. The arrangement of the resource states in this network can be described by two distinct lattices: the primal and the dual lattice. These lattices are useful to detect the different error mechanisms. For example, an X (or Z) error that affects the primal lattice will not affect the dual lattice and vice versa, whereas a Y Pauli error will affect both lattices at the same time. In this way, the checks of the six-ring FN discussed in ref. [1] can be separated into two decoupled graphs, the primal and dual decoding graphs, which are automatically extracted by Plaquette. In each decoding graph, each node corresponds to a check of the FN, and each edge connecting two nodes corresponds to the fusion measurement outcome whose error will flip the parity of the two associated nodes. During the simulation, for each fusion, we decide which measurement outcome is lost upon fusion failure, so that its related edge in the decoding graph will be erased (i.e., randomized). In the case of fusion erasure, both edges (one in the primal and the other in the dual graph) are erased. Therefore, in the presence of both fusion failure and erasure, a subset of edges in both the primal and the dual decoding graph are erased, thus changing the parity of the checks connected to them. The checks with inverted parity constitute the syndrome, which provides information on where errors might have occurred. This syndrome is passed to the FusionBlossom [32] decoder available in Plaquette, which provides a possible correction. To assess whether a logical error has occurred, the parity of the correlation surfaces in the absence of errors and after the error correction procedure is compared: if the two parities are different, then a logical error has occurred.

For the simulations related to the (2, 2)-Shor encoded six-ring fusion network, we mapped fusion failure and erasure probabilities on physical fusions to fusion failure and erasure probabilities on the encoded fusions. This mapping was performed in a similar fashion to the mapping presented in ref. [1]. The main difference in our simulation is that we consider the specific pattern of fusion measurements that maximizes the fusion success probability of the encoded fusion for the given type of encoding chosen. An implementation of such an encoded fusion from physical fusions is shown in Fig. 7.

(Figure adapted from ref. [1]). Using the (2, 2)-Shor encoding, each logical qubit is encoded in four entangled physical qubits, denoted with index A to D. All physical fusions measure the parity of the stabilizer generators XX and ZZ on the physical qubits \(1_{i}\) and \(4_{i}^{\prime}\). Upon fusion failure, we lose the parity of ZZ in fusions A and C and the parity of XX in fusions B and D, guaranteeing a fusion failure probability of the encoded fusion of \(p_{\mathrm{fail,enc}} = p_{\mathrm{fail,phys}}^{2}\), where \(p_{\mathrm{fail,phys}}\) denotes the failure probability of the physical fusion. If there are no other sources of error (apart from fusion failure), we always lose the ZZ measurement outcome of the encoded fusion if it fails.
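The quadratic suppression of the fusion failure probability by the (2, 2)-Shor encoding can be made concrete with a short calculation. The sketch assumes that, in the absence of photon loss, the physical failure probability is simply one minus the BSM success probability; this identification and the function name are illustrative assumptions, not a description of the full simulation.

```python
def encoded_failure_probability(p_success_phys: float) -> float:
    """Failure probability of the encoded fusion, p_fail_enc = p_fail_phys ** 2.

    Assumes p_fail_phys = 1 - p_success_phys, i.e. no photon loss or other errors.
    """
    if not 0.0 <= p_success_phys <= 1.0:
        raise ValueError("p_success_phys must be a probability")
    p_fail_phys = 1.0 - p_success_phys
    return p_fail_phys ** 2


# Measured success probabilities of the boosted and the standard BSM.
p_fail_enc_boosted = encoded_failure_probability(0.693)
p_fail_enc_standard = encoded_failure_probability(0.4905)

print(f"boosted: {p_fail_enc_boosted:.4f}, standard: {p_fail_enc_standard:.4f}")
```

Under these assumptions the encoded fusion fails in roughly 9% of cases with the boosted BSM, compared to roughly 26% with the standard BSM.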

For the specific threshold plots that are depicted in Fig. 6, we fix the fusion success probabilities \(p_{c,\mathrm{total}}\) and scan over erasure probabilities. In more detail, we choose a set of physical erasure probabilities, which we scan over. These probabilities, along with the fusion success probabilities, are then translated into effective erasure probabilities on encoded fusions. Logical error rates are computed repeatedly for these effective erasure rates for different sizes of FBQC lattices (comprising n × n × n unit cells for \(n \in \{3, 5, 7\}\)). In the end, the obtained logical error rates are plotted against the different photon-loss probabilities and a threshold is obtained in the presence of fusion failure. Here, we assume uniform loss acting on all photons involved in the fusion, namely two in the case of unboosted BSMs and four in the case of boosted BSMs.

Based on this analysis, the loss threshold for the (2, 2)-Shor encoded six-ring fusion network with a fusion success probability of 69.3% is \(p_{\mathrm{loss}}^{B} = 1.4\%\), and the loss threshold for the same fusion network with a fusion success probability of 49.05% is \(p_{\mathrm{loss}} = 0.45\%\).
