A deep learning approach predicting the activity of COVID-19 therapeutics and vaccines against emerging variants

Introduction

Viruses can accumulate sequence changes under immune selection pressure and due to natural genetic variation1,2,3. Such mutations can permit evasion of host immune responses, leading to the emergence of new viral variants that reduce the efficacy of vaccines and antibody-based treatments4,5. With the ongoing evolution of a virus, there arises an uncertainty as to whether monoclonal antibodies and vaccines will be effective in neutralizing novel strains. Because of this, it is crucial to monitor viral strains’ potential for antibody escape to revise clinical and public health guidelines and develop more effective therapeutic and vaccine strategies.

Cell-based assays are a widely used tool for assessing the antibody evasion potential of viral strains6,7. These assays involve exposing a viral strain to an antibody agent in cell culture and evaluating the level of viral replication, infectivity, or virulence in vivo or in vitro. However, these assays have certain limitations, particularly when the virus is evolving rapidly. This is due to the fact that they rely on a limited number of viral isolates, which may not adequately represent the full diversity of circulating strains8,9,10. Consequently, it becomes challenging to monitor viral escape entirely and develop effective treatments that can target a wide range of viral strains. This challenge has been particularly evident during the COVID-19 pandemic, as the SARS-CoV-2 virus continues to evolve and produce new lineages and sub-lineages, with over 1.7 million unique sequences recorded to date11. Therefore, it is essential to complement these assays with surveillance efforts and realistic models to ensure that emerging viral strains and underlying antibody escape properties are entirely detected and monitored in real-time.

Modeling approaches, such as phylogenetic analysis, structural modeling, and machine and deep learning, offer valuable insights into understanding viral behavior, assessing antibody and vaccine efficacy, and predicting the impact of mutations. Recent studies have successfully modeled the temporal and geographic evolution of SARS-CoV-2, determined phyletic lineages of SARS-CoV-2 variants, and predicted the impact of mutations on ACE2 binding12,13,14. The arrival of new influenza strains each year has also driven the development of models that predict antigenic variation of influenza, helping aid the creation of annual flu vaccines15,16,17. Furthermore, in an era where there is a significant amount of viral surveillance data18,19,20, modeling approaches can be used to extract valuable knowledge about viral properties and antibody escape that may not be entirely captured by conventional methods.

Here, we propose a deep learning-based method to predict changes in neutralizing antibody activity of COVID-19 therapeutics and vaccine-elicited sera/plasma against emerging SARS-CoV-2 variants. Our method utilizes a variational autoencoder (VAE) to encode spike protein sequences into a latent space embedding, allowing viral sequences to be inputted into a predictive model. Using compiled in vitro assay data, we trained a neural network model to predict fold changes in the neutralization activity of COVID-19 therapeutics and vaccine-elicited sera/plasma against spike protein variants, relative to their activity against the ancestral strain (Wuhan-Hu-1). This work presents a comprehensive analysis of the spike protein variants and corresponding antibody resistance of SARS-CoV-2, augmenting the insights derived from experimental assays. Through this research, advancements can be made towards developing more effective therapeutic and vaccine strategies against rapidly evolving viruses. Additionally, it can facilitate the detection of viral variants that may evade current approved treatments and the discovery of antibodies that have regained their effectiveness against new variants.

Results

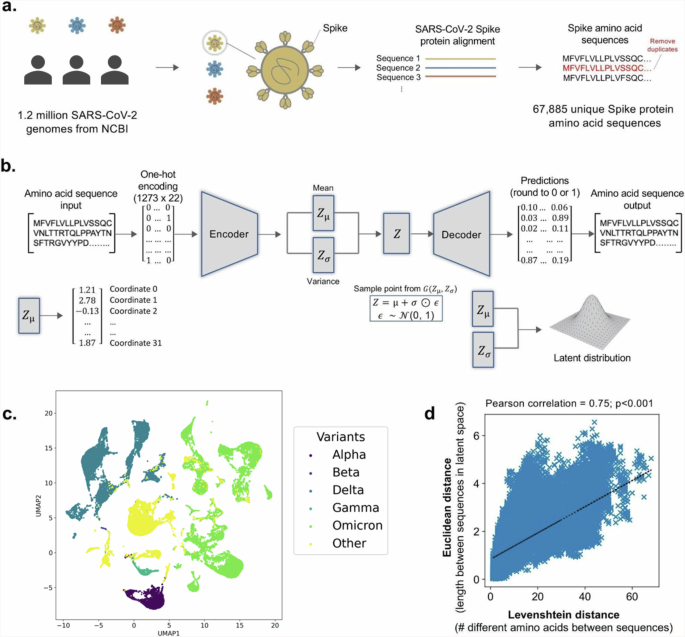

Encoding SARS-CoV-2 spike protein sequences using VAE

A VAE was first developed to encode SARS-CoV-2 spike protein sequences and create a latent space that captures mutational patterns and relationships between sequences. The dataset comprised 67,885 unique spike protein sequences extracted from the NCBI Virus Database as of October 31, 2022 (Fig. 1a). To train the VAE, 54,308 sequences were fed into the encoder, which compressed them into a 32-dimensional latent space (Fig. 1b, c). The decoder then reconstructed the sequences from their latent embedding. Following training, a difference score was calculated between the input and output sequences, indicating how well the decoder reconstructed the sequences from their latent embedding. For the test set (N = 13,577), the average difference score was 2.29 amino acid mistakes after reconstruction (standard deviation = 1.54) out of 1273 amino acid positions. Rates of reconstruction error for individual positions in the Spike protein sequence are reported in Supplementary Fig. 1.

a SARS-CoV-2 genomes from the NCBI Virus Database are collected and translated into spike protein amino acid sequences. b Illustration of VAE architecture. The spike protein amino acid sequences are used to train the autoencoder, where the encoder compresses the sequences into 32 latent space vectors and the decoder reconstructs the encoded sequences back to their original input. c The uniform manifold approximation and projection (UMAP) plot visualizes SARS-CoV-2 variant clustering along the first two principal dimensions. Colors differentiate variants within the reduced dimensional space. d Pearson correlation of Levenshtein distances of each sequence pair and Euclidean distances of the corresponding latent vectors, with least-squares line of best fit shown.

To validate the VAE’s accuracy in capturing similarities and differences between spike protein sequences, Levenshtein distances between sequences were compared with Euclidean distances between encoded versions of sequences using Pearson’s correlation (Fig. 1d). Levenshtein distance is defined as the minimum number of single-character edits (substitutions, deletions, insertions) required to change one sequence to another. Euclidean distance is defined as the length of the line segment between two sequences in latent space. The two variables were found to be correlated (ρ = 0.75, p < 0.001), demonstrating the VAE’s ability to accurately capture relationships between sequences in latent space.

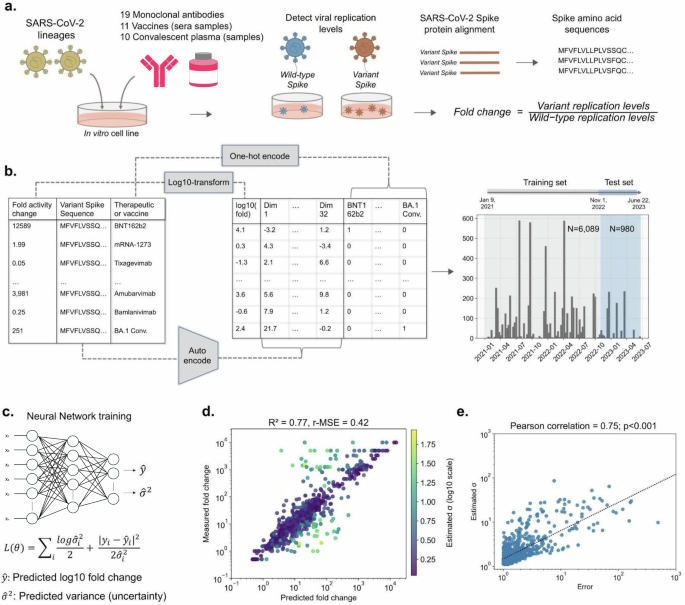

Uncertainty-based neural network prediction of fold changes in neutralization activity against SARS-CoV-2 variants

NCATS OpenData Portal’s curated dataset of 7069 results from in vitro assays was used to train a neural network model to predict fold changes in the neutralization activity of therapeutics and vaccine-elicited sera/plasma against SARS-CoV-2 variants (Fig. 2a). Fold changes are relative to the neutralization activity against the wild-type ancestral Wuhan-Hu-1 strain and were log10 transformed to ensure normality of the ratios. Spike protein sequences of viral isolates subjected to the assays were encoded into 32 latent dimensions using the VAE model, and the 40 therapeutics and vaccine-elicited sera/plasma tested against viral isolates were one-hot encoded (Fig. 2b). The training set comprised data collected between January 9, 2021, and October 31, 2022 (N = 6089), and the test set comprised data collected between November 1, 2022, and June 22, 2023 (N = 980). We integrated a custom loss function into the model, based on Bayesian inference, to provide reliable estimates of prediction uncertainty (Fig. 2c). This loss function enabled the estimation of variance associated with each prediction, providing a quantifiable metric that could be used to construct prediction intervals.

a SARS-CoV-2 isolates were subjected to therapeutic and vaccine in vitro assays, and resultant neutralization activity fold change ratios between the wild-type and variants were compiled and log10 transformed. b Variant spike protein sequences were VAE encoded and corresponding therapeutics and vaccines were one-hot encoded. Data collected from January 9, 2021, to October 31, 2022, was used as training data (N = 6089), and data collected from November 1, 2022, to June 22, 2023, was used as test data (N = 980). c A neural network model was trained to predict log10 fold change in neutralization activity and estimate the uncertainty (variance) in each prediction. d Comparison between model predictions and actual measurements for the test set, highlighting yellow points for higher prediction uncertainty. e Correlation between prediction error and estimated uncertainty for the test set, with least-squares line of best fit shown.

Performance evaluation of the uncertainty-based neural network model

Following training, the model demonstrated satisfactory performance on the test set, with an R2 of 0.77 and a r-MSE of 0.42 (Fig. 2d). To ensure that variance estimates serve as a dependable indicator of prediction uncertainty, we compared the absolute prediction error with the estimated variance for the test set using Pearson’s correlation (Fig. 2e). We observed a statistically significant correlation between the two variables (ρ = 0.75, p < 0.001), implying that higher prediction uncertainty is associated with a greater likelihood of prediction inaccuracy. To ensure that the observed predictive capacity was not due to overlap between the training and testing sets, introduced by our date-based splitting of the data, model performance was also assessed using only therapeutic-sequence pairs not part of training. This subset of the test set comprised 746 data points (76.1%) that were not seen by the model during training. The model’s performance on these novel data points remained robust, yielding an R2 of 0.76 and a r-MSE of 0.47 (Supplementary Fig. 2).

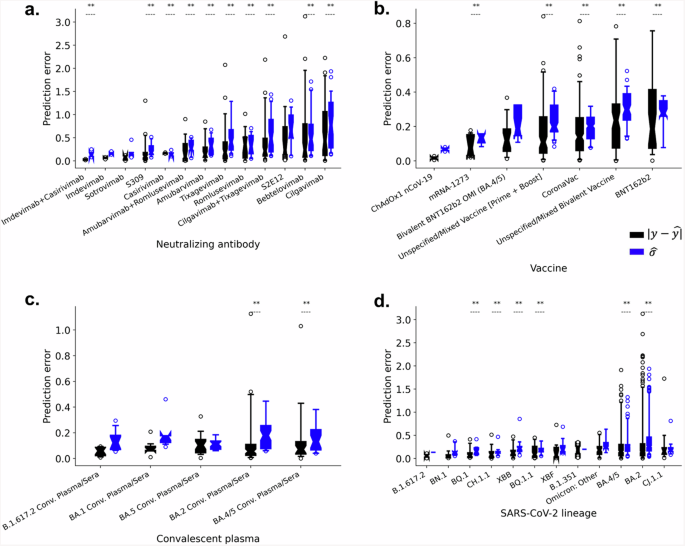

Assessment of the variability in prediction accuracy

We further evaluated the model’s prediction accuracy across the different COVID-19 therapeutic agents and SARS-CoV-2 lineages. Figure 3 demonstrates that prediction error varies among the therapeutic agents, with higher mean absolute error (MAE) observed for specific monoclonal antibodies such as Cilgavimab (MAE = 0.62), Bebtelovimab (MAE = 0.55), and S2E12 (MAE = 0.52) (Fig. 3a). Conversely, prediction error was lower for COVID-19 vaccine-elicited sera/plasma and convalescent plasma therapies (Fig. 3b, c). Increased prediction error was also associated with select SARS-CoV-2 lineages, such as CJ.1.1 (MAE = 0.30), BA.2 (MAE = 0.29), and BA.4/5 (MAE = 0.23) (Fig. 3d). Additional analysis of predictive accuracy for each therapeutic and lineage pair showed higher predictive error for CJ.1.1, BA.2, and BA.4/5 lineages when tested against Cilgavimab, Bebtelovimab, and S2E12 antibodies (Supplementary Table 1). In contrast, these lineages exhibited lower error against other therapeutics. Therapeutic and lineage pairs with high predicted error were found to exhibit high standard deviation in the experimentally measured log10 fold change (Supplementary Table 2). Importantly, estimated uncertainty values significantly correlated with prediction error for these specific antibodies and lineages with larger error, indicating the model’s capacity to provide robust uncertainty estimates when prediction inaccuracy is likely.

The distribution of absolute prediction error was assessed for each (a) monoclonal antibody, (b) vaccine, and (c) convalescent plasma sample, as well as for each (d) SARS-CoV-2 lineage within the test set, with progressive ordering (left to right) from lowest to highest mean absolute error. Absolute error was compared with the model’s predicted uncertainties (σ̂) using Spearman’s correlation, with corresponding p-values of less than 0.05 considered statistically significant and annotated within the figures.

Temporal generalization and performance

We further conducted a temporal analysis to assess the extent to which the model can generalize predictions for prospective variants. Specifically, we evaluated the model’s predictive performance for test sequences assayed within 0–4 months and 4–8 months after the end of the training period of October 31, 2022 (Supplementary Fig. 3). The model displayed exceptional predictive capability for sequences assayed 0-4 months after the training period (N = 696), achieving an R2 of 0.84 and a r-MSE of 0.34. Despite a decrease in performance, the model still exhibited good predictive capability for sequences assayed 4–8 months after the training period (N = 284), with an R2 of 0.64 and a r-MSE of 0.58.

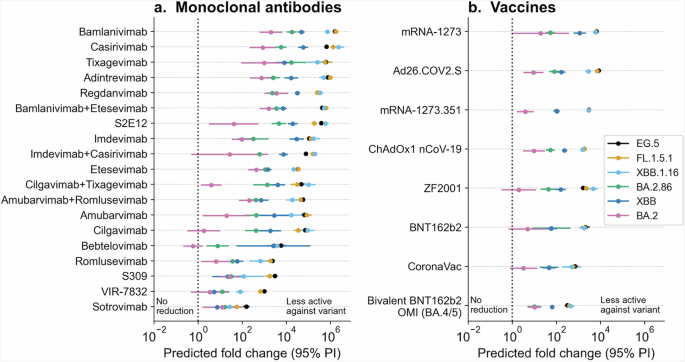

Predicted effects for emerging SARS-CoV-2 lineages

As a proof of concept, we present in Fig. 4 the predicted fold changes in neutralizing activity of vaccine-elicited sera/plasma and monoclonal antibodies against newly designated SARS-CoV-2 lineages: EG.5, FL.1.5.1, XBB.1.16, and BA.2.86. The predicted effects for parent lineages BA.2 and XBB are also shown as a point of reference.

The presented graphs showcase the estimated fold changes in neutralization activity of (a) monoclonal antibodies and (b) vaccines against the newly emerged SARS-CoV-2 lineages: EG.5, FL.1.5.1, XBB.1.16, and BA.2.86. Parent lineages XBB and BA.2 are also depicted in the figures. Error bars represent the 95% prediction intervals derived from the variance estimates, and the color-coding of the data points and error bars correspond to the lineage to which the prediction was made for.

For this analysis, we trained the VAE and neural network on all available data as of August 18, 2023, to demonstrate the usefulness of our model for surveying new variants in a real-world scenario. The model predicted that both newer XBB descendants (EG.5, FL.1.5.1 and XBB 1.16) and BA.2.86 induce lower neutralizing activity for existing COVID-19 vaccine-elicited sera/plasma and monoclonal antibodies, compared to their parental lineages (XBB and BA.2, respectively). However, BA.2.86 is predicted to exhibit a smaller reduction in neutralizing activity to all vaccine-elicited sera/plasma and most monoclonal antibodies, compared to newer XBB descendants.

In particular, although bivalent BNT162b2 (OMI BA.4/5) and Sotrovimab are predicted to retain some level of activity against these viral lineages, the predicted fold reduction for bivalent BNT162b2 is 315-fold against EG.5 (95% PI: [247, 401]), 384-fold against FL.1.5.1 (95% PI: [300, 491]), 443-fold against XBB.1.16 (95% PI: [296, 662]), and 38-fold against BA.2.86 (95% PI: [24, 62]). For Sotrovimab, the predicted fold reduction is 155-fold against EG.5 (95% PI: [111, 218]), 56-fold against FL.1.5.1 (95% PI: [30, 106]), 27-fold against XBB.1.16 (95% PI: [8, 97]), and 57-fold against BA.2.86 (95% PI: [30, 106]).

We then conducted a comprehensive analysis of the acquired spike mutations, focusing on identifying those predicted to have the greatest impact on activity of select monoclonal antibodies and vaccine-elicited sera/plasma (Supplementary Figs. 4 and 5). To accomplish this, we first calculated the distinct core mutations distinguishing each new lineage (EG.5, FL.1.5.1, XBB.1.16, and BA.2.86) from their parental lineages. Subsequently, we systematically introduced each mutation, one at a time, into the core spike protein sequence of parent lineage (XBB) to understand the predicted partial impact of each mutation. Notably, the spike mutation F456L, which is present in EG.5 and FL.1.5.1 sequences, is predicted to have the most significant effect on the neutralization activity of therapeutic agents shown in Supplementary Fig. 4. Moreover, the largest predicted partial effect of this mutation, relative to XBB parent, is seen for therapeutic agents S309 (precursor of Sotrovimab), VIR-7832, and ZF2001. We observed that the newly acquired mutations within the BA.2.86 lineage did not result in substantial changes in the therapeutic effectiveness (Supplementary Fig. 5).

Discussion

In this study, we outline a novel deep learning approach to monitoring the impact of SARS-CoV-2 variants on the neutralizing activity of COVID-19 antibody therapeutics and vaccine-elicited sera/plasma. First, we developed a variational autoencoder (VAE) capable of encoding spike protein sequences into a latent space while preserving the integrity of spike protein information. This resulted in an average reconstruction loss of 2.29 amino acids per 1273 positions on the spike protein. Subsequently, we trained a neural network model to predict fold changes in the neutralization activity of 40 different COVID-19 antibody therapies and vaccine-elicited sera/plasma against spike protein sequence variants, relative to their activity against the ancestral strain (Wuhan-Hu-1). To assess the model’s generalizability to predict prospective sequences, we evaluated its performance on sequences tested eight months after the training data cutoff date of October 31, 2022. Our findings indicate that the model can accurately predict the impact of prospective spike protein mutants on the neutralization activity of therapeutics and vaccine-elicited sera/plasma (R2 = 0.77), making it a valuable tool for identifying emerging viral variants that are likely to evade current COVID-19 treatments.

Our model’s predictions for emerging SARS-CoV-2 lineages align with current research findings. Consistent with recent data21,22,23, the model predicts that the newer XBB descendants, specifically EG.5, FL.1.5.1, and XBB.1.16, have significantly reduced in vitro susceptibility to both vaccine-elicited sera/plasma and monoclonal antibodies, rising concerns for evading vaccine-driven immunity and lack of efficacy to clinically relevant monoclonal antibodies. On further analysis of mutations linked to reduced responsiveness, our model identifies the spike mutation F456L, present in EG.5 and FL.1.5.1, as a primary contributor to reduced neutralization by selected COVID-19 antibodies and vaccine-elicited sera/plasma. This observation aligns remarkably with recent studies using pseudovirus neutralization assays22,24, and deep mutational scanning25, which highlighted the mutation’s role in ACE2 receptor binding. The F456L mutation emerged independently in XBB descendant strains such as EG.5, FL.1.5.1, and XBB.1.16.6 and was found in 40% of newly uploaded SARS-CoV-2 sequences by August 202322. Continuous shifts at this position and its vicinity, highlighted by deep mutational scanning25, suggest the virus is evolving to dodge antibody responses while effectively binding to its target receptors. Remarkably, our deep learning framework successfully discerned the robust immune escape properties of this mutation, despite not seeing it within training sequences for the uncertainty-based neural network, although the mutation was encountered in the VAE training sequences.

On August 17, 2023, the World Health Organization (WHO) designated BA.2.86, a highly mutated subvariant originating from BA.2, as a variant under monitoring due to potential immune evasion risks. However, recent studies employing live and pseudovirus neutralization assays indicate that BA.2.86 elicits a higher neutralizing antibody response to mRNA bivalent boosters than newer XBB descendants21,23,26, though slightly lower than its parental lineage (BA.2). Despite over 30 amino acid mutations in its spike region compared to the parental lineage (BA.2)26, many of which are novel to both the VAE and neural network, our model accurately predicted BA.2.86’s behavior, underscoring its performance in emerging lineages. Additionally, consistent with recent studies21,27,28, our model predicted that monoclonal antibodies including Sotrovimab, Cilgavimab, and Bebtelovimab that previously retained some activity against parental BA.2 variants have reduced neutralization activity against BA.2.86. Regarding mutations linked to reduced effectiveness, our findings align with a recent deep mutational scanning study25, which suggests that BA.2.86 mutations do not significantly impact neutralization activities.

This study leverages a VAE architecture for its generative capabilities and adept handling of non-linear data relationships29. Unlike traditional dimensionality reduction methods that compress data into lower-dimensional spaces using deterministic approaches, VAEs utilize a probabilistic framework based on variational inference and Bayesian statistics. Expressly, instead of mapping input data (e.g., viral sequences) to fixed vectors, VAEs represent the data as distributions characterized by means and standard deviations29,30. By iterative sampling and minimizing the loss function, which includes reconstruction loss and KL divergence, VAEs learn data relationships29,30. We believe that this probabilistic approach is particularly relevant to our study for two main reasons. First, given the high dimensionality of sequencing data, VAEs enable the capture of complex, non-linear relationships within large biomedical datasets, preserving important information during dimensionality reduction and thereby enhancing the performance of downstream predictive models. Second, the generative nature of VAEs facilitates the prediction of unseen viral sequences. However, employing VAEs involves significant computational demands and presents challenges in interpreting the latent space. VAE primarily focuses on entire data distribution rather than individual features. Each dimension in the latent space is composite of features and their interactions, compressed into a form allowing the model to reconstruct original input data. This configuration complicates direct correlation with the original features, thereby limiting a straightforward interpretation of feature importance30,31.

We employed chronological splitting to test the model’s adaptability to unseen viral sequences and evolving treatment trends as the model’s predictive accuracy can be impacted by the limited representativeness of training data for aligning viral sequences with treatments. Bayesian inference aims to mitigate this challenge, and the model performance is promising for future use of the algorithm to monitor therapeutic effectiveness of existing immune-based therapies against emerging viral strains. As more viral sequence and laboratory-based data continue to accumulate, this approach could be extended to other viral agents, such as influenza and HIV, where antigenic evolution is a critical factor in the development of effective treatments and vaccines. Overall, the current study showcases the proficiency of deep learning to detect patterns within viral sequence and assay-based data, allowing for the prediction of therapeutic effectiveness. Moreover, we demonstrate the use of Bayesian modeling to quantify prediction uncertainty, enabling the identification of viral sequences and treatments for which the model can confidently make predictions, as well as those with limited assurance where assays would be most informative.

There are several limitations to this study. First, the in vitro assay data used for model development is subject to experimental bias. Representation of viral sequences and therapeutic agents are based on availability of laboratory-based experiments. Consequently, this may result in reduced model accuracy for select therapeutic agents and lineages that are underrepresented in experiments, or for which there is much variation in experimental measurements (Supplementary Table 2). It is worth noting that the model does output uncertainty values, which improves prediction reliability and helps address data imbalance issues (Supplementary Table 1). Second, the extent to which the test set differs from the training set is not completely novel. A significant proportion of the test set sequences (56.9%, or 558 out of 980 sequences) are identical to sequences in the training set (Supplementary Fig. 5). However, it is important to note that only 23.9% (234 out of 980 data points) of the test set data has the same therapeutic and sequence combination as the training set. Moreover, the uniqueness of the test set goes beyond the comparison of sequences, with ~75% of therapeutic and sequence pairs not found in the training set. Third, the performance of our model depends on the accuracy and consistency of previously conducted in vitro neutralization assays. While we used fold change ratios to standardize results across different assays, various factors, such as assay type (live virus replication vs. pseudovirus), selected cell lines and alterations in viral protein processing, can influence assay readouts and result in inconsistent findings32. Fourth, it is critical to recognize that the model’s predictions regarding relative changes in in vitro neutralizing activity for therapeutics and vaccine-elicited sera/plasma do not directly translate to actual treatment effectiveness. In vivo studies are indispensable for more precise assessment in this regard, as they encompass a broader array of physiological factors. Lastly, although our model demonstrated satisfactory performance on sequences assayed shortly after the training period, its performance declined for sequences assayed further afterward (Fig. 4). This suggests that our model is most suited for making predictions for sequences emerging shortly after model training. It is important to note that future endeavors would involve iteratively training the model on all new available data to ensure the most reliable predictions for novel variants.

Methods

Variational autoencoder (VAE) architecture, training, and optimization

SARS-CoV-2 genomes present in the NCBI Virus Database were downloaded. Sequences collected up to October 31, 2022, were used to train the VAE. The dataset consisted of 1,208,321 spike protein amino acid sequences. We aligned and translated the sequences using NextClade33. Duplicate spike protein sequences were removed, which resulted in a final dataset of 67,885 unique spike protein sequences. The sequences were one-hot encoded as 22 by 1273 arrays, as there are 1273 amino acid positions in the Wuhan-Hu-1 spike protein and 22 options at each site: any one of the 20 amino acids, an insertion, or a deletion. In the case of an insertion, we only encoded that there was an insertion following the current position, without encoding the identity of the inserted fragment.

The VAE consists of an encoder and a decoder. The encoder compresses the spike protein sequence data (one-hot encoded amino acid sequences) to its latent embedding and the decoder reconstructs the input sequence data from its latent embedding. In the encoder, the number of latent dimensions is set to 32 because additional gains in loss after decoding were minimal when increasing the number of latent dimensions beyond 32. The latent space is modeled as a multivariate normal distribution with a defined latent mean and variance (log-transformed for numerical stability). A standard normal distribution was used as the prior distribution for the latent space. A sampling layer is present in the encoder, where data is randomly sampled from the latent space distribution before being passed to the decoder.

The VAE was compiled with an Adam optimizer. The loss function for the VAE is the sum of the reconstruction loss and the Kullback–Leibler (KL) divergence between the distribution returned by the encoder and a standard normal distribution. The reconstruction loss is calculated using binary cross-entropy. The VAE loss function is defined as follows:

where xi represents the i-th input sequence (one-hot encoded), x̂i represents the reconstructedsequence for input xi, i, j2 represents the variance of the latent distribution for the i-th inputsequence and j-th latent dimension, and z represents the mean of the latent distribution for the i-th input sequence and j-th latent dimension. The reconstruction loss quantifies the ability toreconstruct the input sequence data from its latent embedding, while the KL-divergence ensuresthat the learned latent space distribution follows a standard normal distribution. This helps theVAE learn smooth latent representations where distance in latent space reflects similaritybetween inputted sequences. The VAE was trained on 80% of the sampled sequences (N = 54,308) and evaluated on the remaining 20% of sampled sequences (N = 13,577). We trainedthe VAE for 50 epochs with a batch size of 32, while also providing an early stopping function witha patience of five to stop training once the validation loss stopped improving for five consecutiveepochs.

OpenData Portal curation of neutralizing activity against SARS-CoV-2 variants

The SARS-CoV-2 variant therapeutic data on the OpenData Portal have been curated by the National Center for Advancing Translational Sciences (NCATS) in collaboration with the National Institutes of Health (NIH) Accelerating COVID-19 Therapeutic Interventions and Vaccines (ACTIV) Preclinical Working Group and Tracking Resistance and Coronavirus Evolution (TRACE) initiative with support from the Foundation for the National Institutes of Health (FNIH). The data shared on the OpenData Portal have been manually curated from publications (both preprints and peer-reviewed articles) or data directly submitted. Curation efforts prioritized publications on advanced stage therapeutic agents (those approved and/or in clinical trials), with an emphasis on studies conducted 1) by each agent’s parent pharmaceutical company or 2) with a government partner. Curation efforts collated neutralizing activity of vaccine-elicited sera/plasma and therapeutics against SARS-CoV-2 variants, in addition to related metadata, from in vitro assays utilizing live or pseudotyped viruses. Fold-reductions and raw values provided via publication or direct submission can be accessed via download on the OpenData Portal web browser (https://opendata.ncats.nih.gov/variant/activity).

Preprocessing and encoding of in vitro neutralization assay data

SARS-CoV-2 in vitro neutralization activity data compiled by the National Center for Advancing Translational Sciences (NCATS) on its COVID-19 OpenData Portal34 were used for model development. The dataset consists of in vitro assays collected between January 9, 2021, and June 22, 2023. Both live virus replication assays and pseudotyped virus assays were considered for this analysis. Neutralization activity fold change ratios between the SARS-CoV-2 wild-type strain (Wuhan-Hu-1) and spike protein variants were compiled and log10 transformed. COVID-19 therapeutics and vaccine-elicited sera/plasma with less than 20 data points were excluded from our study to ensure high confidence in the predictions. Spike protein sequences from variants tested were auto-encoded into 32 latent dimensions using the previously described VAE. The specific therapeutics and vaccine-elicited sera/plasma evaluated in each assay were one-hot encoded into 40 additional dimensions, consisting of 19 monoclonal antibodies, 11 vaccines (vaccine sera samples) and 10 convalescent plasma samples (Table 1). To enable quantification of performance against future data, we divided the available data into training and test sets based on the data collection date. Specifically, the training set consisted of sequences tested between January 9, 2021, and October 31, 2022 (N = 6089), and the remaining test set consisted of sequences tested between November 1, 2022, and June 22, 2023 (N = 980). Table 2 reports the number and percentage of sequences belonging to a particular SARS-CoV-2 viral lineage in both the training and test sets.

Uncertainty-based neural network model architecture, training, and optimization

A neural network model underwent training, hyperparameter tuning, and cross-validation of the training set to predict fold changes in neutralization activity for COVID-19 therapeutics and vaccine-elicited sera/plasma against variant spike protein sequences. The model was compiled with an Adam optimizer and a custom loss function (Eq. (2))35. The activation function for the input and hidden layers is a leaky rectified linear unit function (LReLU) and the activation function for the output layer is a linear function. We trained the model for 100 epochs with a batch size of 32, while also applying an early stopping function with a patience of ten to stop training once the validation loss stopped improving for ten consecutive epochs.

A custom loss function, based on prior literature, was used to optimize our neural network (Eq. (2)), where the output layer of the model consists of two neurons to predict the mean and variance of each observation35. The loss function can be described as:

Where yi is the prediction for the i-th observation, i2 is the estimated variance for the i-th observation, and yi is the true target value for the i-th observation. Typically, a Bayesian neural network is trained to predict the log variance, si=logi2 (Eq. (3)):

This is because the loss avoids a potential division by zero35. The exponential mapping also allows us to regress unconstrained scalar values, where exp -si is resolved to the positive domain giving valid values for variance35. Non-Bayesian neural network training methods usually ignore the variance term in this equation, assuming it is constant across all observations in the data. However, by adding the variance term, the variance can be implicitly learned as a function of the data and can be used as a measure of uncertainty inherent in the observations. Including the variance term also allows the model to be more robust to noisy data because observations with higher variance (i.e., higher uncertainty) will have a smaller effect on the loss.

Responses