A physics-enforced neural network to predict polymer melt viscosity

Introduction

Additive Manufacturing (AM) enables the rapid creation of metal or polymer parts with previously unimaginable features and topologies and is therefore poised to disrupt a variety of industries1,2. For polymers, achieving desired properties in the final component is determined by the appropriate choices of material chemistries with suitable rheological properties, as well as conditions adopted during the AM process such as temperature, extrusion rates, etc. At present, a limited palette of chemistries, properties, and conditions is utilized, generally guided by experience, intuition, and empiricism.

In this contribution, we adopt an informatics approach relevant to AM across the chemical and process condition space, to predict one critical rheological property of polymers, namely, the melt viscosity η. Informatics approaches have made major inroads in recent years within materials research3,4,5, leading to accelerated means for property predictions and providing guidance for the design of new materials6,7,8,9,10. These methods start with available materials data on properties of interest. The materials are then represented numerically to capture and encode their essential features in a machine-readable format. The numerical representations, or fingerprints, are then mapped to available property data using machine learning (ML) algorithms, ultimately producing predictive models for the property considered6,11,12,13,14,15. Within the AM space, similar methods have been used for final component property prediction16, process monitoring17, geometric configuration18, composition optimization19, and optimization of printing parameters (albeit mainly for powder-bed AM1,18, but not as much for polymer melt extrusion AM2). Extrusion AM relies on the precise control of polymer melts, which currently requires data from extensive rheological experiments for each new chemistry. This is a bottleneck in the ink development process2. Therefore, predictive capabilities for rheological properties, such as η, are useful to reduce the number of physical experiments aimed at optimization and design.

Melt viscosity of polymers, beyond being a critical property, is attractive to model with ML because there is a reasonable amount of related literature data, although with limited chemical diversity compared to other polymer property datasets5,12,15,20,21. Additionally, there are known physical equations (albeit with empirical parameters) that describe the dependence of η on its governing conditions: temperature (T), average molecular weight (Mw), and shear rate (({dot{gamma }})) (Fig. 1A). For instance, it is known that η increases with increasing Mw (via piece-wise power law dependencies), decreases (non-linearly) with increasing ({dot{gamma }}), and decreases (exponentially) with increasing T. Explicit functional forms and additional background on the behaviors are provided in the Methods section. Molecular weight distributions, quantified by the polydispersity index (PDI), are also known to affect melt viscosity22,23,24. With this situation in mind, previous works have also addressed the modeling of η using ML25,26,27,28. While promising, the majority of these works have focused on specific scenario or are shown to predict unphysical results, making them difficult to apply.

A Depictions of the functions used to describe the behavior of η with respect to temperature (T), molecular weight (Mw), and shear rate (({dot{gamma }})). The functions are parametrized by empirical parameters with physical significance, elaborated in Table 1 and in the Methods section. The η dependence on Mw is given by (log {eta }_{{M}_{w}}) (Eq. (8) in the Methods section). Empirical parameters define the slopes of the relationship at low Mw (α1) and high Mw (α2), the critical molecular weight (Mcr), the y-intercept of ({eta }_{{M}_{w}}) (k1), and the rate of transition from low to high Mw regions (({beta }_{{M}_{w}})). The η dependence on T and Mw is given by (log {eta }_{0}(T,{M}_{w})) (Eq. (5) in the Methods section), and is parameterized by reference temperature (Tr) and empirical fitting parameters (C1 and C2). The effects of C1 and C2 are visualized by comparing the trends with different sampled values. The η dependence on ({dot{gamma}}) is given by (log eta (T,{M}_{w},{dot{gamma}})) (Eq. (4) in the Methods section). The relevant parameters include shear thinning slope (n), the critical shear rate (({dot{gamma }}_{cr})), and the rate of transition from η0 to shear thinning (({beta }_{dot{gamma }})). B The Physics-Enforced Neural Network (PENN) architecture starts with an input containing the polymer fingerprint and the PDI. A Multi-Layer Perceptron (MLP) uses the concatenated input to predict the empirical parameters. Next, the computational graph uses the predicted empirical parameters to calculate η, via the encoded (log {eta }_{{M}_{w}}), (log {eta }_{0}(T,{M}_{w})), and (log eta (T,{M}_{w},{dot{gamma}})) functions. The physical condition variables (log {M}_{w}), (log dot{gamma }) and T are input to their respective functions. C Physics unaware Artificial Neural Network (ANN) and a Gaussian Process Regression (GPR) are baselines to compare with the PENN model. The input features to the ANN and GPR models are the concatenated polymer fingerprint, T, Mw, ({dot{gamma}}), and PDI.

In the present work, we create a physics-enforced neural network (PENN) framework that produces a predictive model of polymer melt viscosity which explicitly encodes the known physical equations while also learning the empirical parameters for new chemistries directly from available data. Physics-informed ML frameworks have shown great promise recently in many application spaces, including atomic modeling, chemistry-informed materials property prediction, and Physics Informed Neural Networks (PINNs) that solve partial differential equations29,30,31,32,33. Our PENN for polymer melt viscosity prediction involves a Multi-Layer Perceptron (MLP) that takes as input the polymer chemistry (fingerprinted using our Polymer Genome approach6) along with the PDI of the sample, and predicts the empirical parameters as a latent vector (listed in Table 1), used to estimate η as a function of T, Mw, and ({dot{gamma }}). A computational graph then encodes the dependence of Mw, ({dot{gamma }}), and T on η (see Fig. 1A) using the equations described in the Methods section. The entire framework (Fig. 1B) is trained on our dataset (elaborated in the Dataset section). The detailed architecture of this framework is described in the Methods section.

We find that this strategy is critical to obtain results that are physically meaningful in extrapolative regimes (e.g., ranges of T, Mw and ({dot{gamma }}) where there is no training data for chemistries similar to the queried new polymer). This ability is vital given our benchmarking dataset’s sparsity, containing only 93 unique repeat units, although the total number of datapoints is 1903 (including T, Mw, ({dot{gamma }}), and composition variations. As baselines to assess this PENN, we trained artificial neural network (ANN) and Gaussian process regression (GPR) models without any physics encoded. We find that the PENN model is more useful in obtaining credible extrapolative predictions. Our results indicate that informatics-based data-driven and physics-enforced (when possible) strategies can aid and accelerate extrusion AM innovations in sparse data situations.

Results

Dataset

Melt viscosity data was collected from the PolyInfo repository34 and from the literature cited by PolyInfo. Cited literature data was extracted from tables and figures with the help of the WebPlotDigitizer tool35. The final dataset shown in Fig. 2 includes a total of 1903 datapoints composed of 1326 homopolymer datapoints, 446 co-polymer datapoints, and 113 miscible polymer blend datapoints. The dataset spans a total of 93 unique repeat units with variations in Mw, ({dot{gamma }}), T, and PDI. For datapoints without a recorded PDI, we impute 2.06, the median PDI of the dataset. Due to inconsistencies with the reporting of polymer structures such as branching and crosslinking for a wide variety of chemistries, the dataset only includes linear polymers.

The joint distributions of (A) molecular weight (Mw), (B) shear rate (({dot{gamma }})), (C) temperature (T), and (D) polydispersity index (PDI) with respect to melt viscosity (η) are presented. The single distributions for the physical conditions are given on the top axes and the distribution of η is given on the right-most axis. Each subplot contains all 1903 datapoints from the dataset. (A–C) have highlighted samples in red that exemplify the dependencies depicted in Fig. 1A. E Visual depiction of train-test splitting across chemical space and physical spaces for N monomers in the dataset.

We found that η at low Mw were underrepresented when compared to η measurements at high Mw. Using the zero-shear viscosity (η0) relationship with Mw (Fig. 1A), we added 126 datapoints at low Mw (included in the 1903 datapoints). This was achieved by identifying polymer chemistries with more than five η0 datapoints at high Mw and a recorded Mcr36. Equation (6) (Methods Section) was fit to each chemistry and extrapolated to estimate η values at low Mw.

Because the viscosity values span several orders of magnitude (Fig. 2), we use the Order of Magnitude Error (OME) to assess ML model accuracy. OME is calculated by taking the Mean Absolute Error of the logarithmically scaled η values. Models with lower OME exhibit more accurate predictions.

Overall assessment of physical intuition with sparse chemical knowledge

An important future use case of our ML models is to estimate the melt viscosity in new physical regimes, given a small amount of knowledge of a given polymer and other chemistries. For example, given a few costly tests of a new polymer at a few molecular weights, one should be able to predict the viscosity at remaining molecular weights, and, likewise, across different shear rates and temperatures. Figure 2E depicts how this ability was tested through a unique splitting of data into test/train sets across the chemical and physical regimes. First, the monomers were split into train (90%) and test (10%) sets. Within the test monomers, the median of the distributions of the test monomers with respect to a variable in the physical space was calculated. The median was used to split all datapoints containing that monomer: half for a final test split, and the other half for training. The upper or lower half going to testing was randomly chosen. This approach ensures that all the test data focuses on predicting in new physical regimes given a sparse amount of monomer data. This process was repeated three times for each of Mw, ({dot{gamma }}), and T to ensure that diverse tests were used for evaluation.

Figure 3 shows the combined results of three trials for splits across all three physical variables. Supplementary Fig. 1 shows parity plots that specify the results from each trial. The GPR, ANN, and PENN predictions have acceptable OMEs, indicating that all three can capture some chemical information and physical trends. The PENN results in a distinct decrease in OME (an average of 35.97% improvement), and an increase in R2 (up to 79% for the ({dot{gamma }}) split) from the ANN. The PENN also outperforms the GPR for the Mw and T splits, but the GPR is more accurate on the test set for ({dot{gamma }}). In further analysis, we show how the physical viability of these predictions is scrutinized beyond the high-level trends of the parity plot.

Parity plots are used to assess the models’ overall predictive capabilities in new physical regimes based on the physical variable split for molecular weight (Mw), shear rate (({dot{gamma }})), and temperature T. Results are compared between Gaussian Process Regression (GPR), Artificial Neural Network (ANN), and Physics Enforced Neural Network (PENN) models. Each plot compares experimental values for melt viscosity (η) to the predicted η across 3 unique test-train splits for each physical variable. The top row (A–C) contains GPR results for (A) the Mw split, (B) the ({dot{gamma }}) split (C) the T split. The middle row (D–F) contains ANN results for (D) the Mw split, (E) the ({dot{gamma }}) split (F) the T split. The bottom row (G–I) contains PENN results for (G) the Mw split, (H) the ({dot{gamma }}) split I) the T split. The dotted black lines represent perfect predictions. The coefficient of determination (R2) and Order of Magnitude Error (OME) are reported over these test sets.

Distribution of predicted empirical parameters

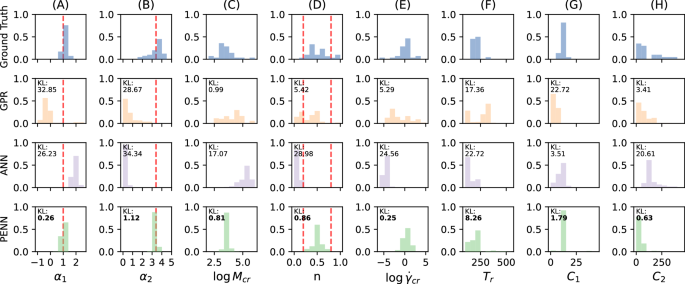

Despite the high overall performance of all three models, only the PENN model can produce physically credible predictions in regimes with restricted and sparse data. A comparison of the GPR, ANN, PENN models in estimating crucial empirical parameters (found in Table 1) from sparse data in the held-out set is detailed in Fig. 4.

Normalized distributions of empirical parameter values found in the dataset (Ground Truth) are compared to parameter values predicted by Gaussian Process Regression (GPR), Artificial Neural Network (ANN) and Physics Enforced Neural Network (PENN) models. Each column compares a different parameter for the melt viscosity (η) relationship with molecular weight (Mw), shear rate (({dot{gamma }})), and temperature (T). The examined parameters include: (A) α1, the slope of zero-shear viscosity (η0) vs. Mw correlation at low Mw (accepted value of 1 depicted by the red dashed line) (B) α2, the slope of η0 vs. Mw at high Mw (accepted value of 3.4 depicted by the red dashed line), (C) critical molecular weight (Mcr), (D) n, the rate of shear thinning (accepted range of 0.2–0.8 depicted by the dashed red lines), (E) critical shear rate (({dot{gamma }}_{cr})), (F) reference temperature (Tr) of a polymer. (G, H) show distributions for the C1 and C2 fitting parameters for the η–T trend. The ground truth distributions represent 41 samples for Mw parameters, 33 samples for ({dot{gamma }}) parameters, and 22 samples for T parameters. The Kullback–Leibler (KL) divergence of the model estimation distributions from the ground truth is given in the top left of each histogram. The lowest KL divergence among the three models is bolded for each parameter.

To establish a benchmark for comparing the three models, we obtained ground truth values of the parameters from the dataset. We did this by identifying subsets of our dataset involving the same polymer with measured η of several T, Mw, or ({dot{gamma }}). If a subset contained at least five points, we fitted the corresponding equation (Eqs. (5), (6), or (2)) to obtain empirical parameters. The distributions of these ground truth parameter values are shown in the first row of Fig. 4. There are a limited number of ground truth values because a small number of datapoints satisfy the above conditions. Nevertheless, this small sample set allowed us to make a few inferences about expected viscosity trends. The ground truth values of α1 and α2 are close to the theoretical values of 1 and 3.4, respectively37 (background provided in Methods). α2 values were occasionally less than the expected 3.4, possibly due to outliers or errors in fitting a small number of datapoints. The fitted (log {M}_{cr}) values fell within a range of 102.5 − 105 g/mol. For shear parameters, the majority of samples are found to have n in a range of 0.2 − 0.8, which is typical for polymer melts38. The obtained ({dot{gamma }}_{cr}) values were found in the range of 10−3 − 104 1/s. The fitted Tr values are mostly in a range of Tr < 250K. This is low when compared to Tg values found in thermal property datasets12. In our dataset, the datapoints that could be fitted to the η–T relationship were observed at T < 475K, so low Tr values could be overrepresented in the ground truth. The C1 parameter average was 11.8 and the C2 parameter average was 159.42 K. This analysis of the ground truth data suggests the desired parameter values our models should predict.

We used two different methods to obtain parameter estimations from the models: one method is unique to the PENN model, and another approach for the purely data-driven ANN and GPR. The PENN model automatically predicts each of the empirical parameters (see Fig. 1B), which are used in the computational graph to predict η. The ANN and GPR do not directly predict the parameters, so we used a fixed extrapolation procedure. The procedure involved selecting an unseen data point and varying a physical variable (one of Mw, (dot{gamma }), and T) within a predetermined range while holding the other two constant. The ranges for each variable encompass similar orders of magnitude as those present in the training dataset (Fig. 2). For Mw extrapolation, a range of 102 − 107 g/mol was used to encompass low and high Mw. For shear rate extrapolation, a range of 10−5 − 106 1/s was used to model behaviors in zero-shear and shear-thinning regimes. For temperature extrapolation, ranges of ± 20 K from the original data point’s temperature were used to stay within the boundary constraints of Eq. (5). Using this procedure, sets of predictions were made on every unseen datapoint and fit using Eqs. (5), (6), or (2), yielding estimated values of the empirical parameters.

In Fig. 4, we show the feasibility of the models’ empirical parameter predictions evaluated against the ground truth values and accepted values (elaborated in the Methods section). For parameters where a theoretical value is well-defined, the Root Mean Square Error (RMSE) of the predictions’ deviation from this value is calculated. The parameter prediction distribution is also compared to the ground truth distribution through a discrete Kullback-Leibler (KL) divergence,

Intuitively, the KL divergence is a measure of how one probability distribution P deviates from a reference distribution Q over a set of intervals i. A lower divergence indicates that the predicted parameter distribution is closer to the ground truth. The KL divergence was calculated by finding the entropy between the discretized probability distributions of the ground truth and the ML prediction.

From Fig. 4, it can be seen that GPR struggles to predict expected parameter values. The GPR predictions for α1 deviate from 1 by an RMSE of 1.26. For some polymers, GPR predicts α1 ≤ 0. The GPR predictions for α2 deviate from 3.4 by an RMSE of 2.87, and are significantly lower than the ground truth values in the dataset. Most predicted values for (log {M}_{cr}) are within the same range as the ground truth, but the proper low and high entanglement behavior is not captured which decreases the credibility of these fittings. For the shear thinning parameter n, some values fall within the expected range of 0.2 − 0.838 for polymer melts, but others are closer to 0, indicating that the expected shear thinning behavior is not always predicted. The predicted ({dot{gamma }}_{cr}) distribution is lower than the ground truth, indicating that the GPR forecasts the onset of shear-thinning at a significantly lower (dot{gamma }) than observed (if shear thinning is predicted at all). On temperature dependence, some Tr values are predicted higher than what is seen in the dataset.

The ANN’s failure to capture correct physical trends is also evident in the distributions of its fitted parameters. The RMSEs for the ANN’s estimated α1 and α2 values are 1.31 and 2.79, respectively. ANN overestimates α1 and underestimates α2 and therefore does not capture the effects of high Mw chain entanglement. The ANN predictions estimate a low n for a subset of polymers, which goes against the definition of shear thinning. The predicted ({dot{gamma }}_{cr}) values are lower than the ground truth distribution, indicating that the ANN struggles to capture the shear-thinning transition region from the dataset. The ANN predictions for the T trend are closest to the ground truth in comparison to its trends of the other variables, because T is a smoother, exponential function (Fig. 1A), enabling an easier average fitting.

The PENN outperforms the ANN in estimating feasible empirical parameters as depicted by lower KL Divergence values in the last row of Fig. 4. The RMSEs of the predicted α1 and α2 values are 0.05 and 0.17, which are substantially smaller than that of the ANN. Moreover, all the predicted values of (log {M}_{cr}) are within the ground truth range of 2.5 − 5. The PENN model can also learn the correct shear thinning phenomenon by predicting n values between 0.2 − 0.838 and a ({dot{gamma }}_{cr}) distribution that mirrors the dataset. The PENN’s predicted range of Tr is closest to the ground truth. For the C1 parameter, the PENN predicted distribution is closest to the proposed value of C1 = 7.60 (detailed in the Methods section), also having the lowest divergence from the ground truth. For C2 predictions, although the KL Divergence of the PENN is lower than the ANN, the PENN is confined to much lower values of C2, and has an average much lower than some experimentally derived values, such as C2 = 227.3 K39.

Overall, the average KL divergence across all parameter distributions for the GPR, ANN, and PENN are 14.59, 22.24, and 1.74, respectively. The overall distributions of empirical parameters points to the PENN having greater capabilities for producing physically correct results, than a purely data-driven model.

Performance in extrapolative regimes

In Table 2, we summarized the performance of predicted η profiles over wide ranges of Mw (256 extrapolations), (dot{gamma }) (71 extrapolations), and T (127 extrapolations) for all three models considered. We define a successful extrapolation as a model that is able to predict the correct trends while maintaining accuracy over the train and test points. In this study, a prediction is considered accurate for an experimental point if the experimental data falls reasonably within the predicted uncertainty bounds. However, in practice the required precision may vary depending on the specific practical use case. Overall, the PENN successfully predicts 80.4% of Mw extrapolations, 49.2% of (dot{gamma }) extrapolations, and 54.1% of T extrapolations. The ANN rarely achieves correct physical trends for Mw or (dot{gamma }) extrapolations in the span of the dataset and only predicts successful profiles for 17.2% of T extrapolations. The GPR model also exhibits a low performance in extrapolation. There are several instances (given in the brackets in Table 2) where the ANN and GPR successfully fit the data points but fail to extrapolate correctly beyond the dataset. This underscores the need for information beyond experimental data to enable extrapolation to new physical regimes.

Figure 5 shows a few examples of the extrapolation results summarized in Table 2. A much larger set of examples of both successful and unsuccessful extrapolations by the PENN compared to the GPR and the ANN are provided in Supplementary Figs. 2–7. Figure 5A–C shows examples of the PENN correctly extrapolating η given a small amount of information about a monomer in another part of the physical regime in unseen regimes. The ANN and GPR models are uncertain in these unseen regimes, resulting in large confidence intervals. In Fig. 5A, the PENN model accurately predicts the region near Mcr where the η–Mw relationship transitions from unentangled to entangled, and can therefore accurately predict η values at high Mw, despite not having seen any data in this region. The errors for the ANN and GPR in Fig. 5A are low, within approximately an order of magnitude of error. However, the ANN predictions have a near-constant slope around Mcr (implying α1 ≈ α2) and are inconsistent with the effects of polymer chain entanglements at high Mw. The GPR model also fails to predict a higher α2 slope. In Fig. 5B, only the PENN model predicts a zero-shear and shear-thinning region when predicting the η–(dot{gamma }) relationship of the given copolymer. The GPR model fits the training points but mispredicted shear-thinning at high shear rates. The ANN model predicts a decreasing relationship consistent with shear-thinning but doesn’t predict the zero-shear region. This could be an example of spectral bias within neural networks, which describes how ANNs prioritize global or “low frequency” patterns in data over local or “high frequency” patterns40. The general decreasing trend of η–(dot{gamma }) is “low frequency” and is captured by the ANN. In contrast, the transition regions are of a “higher frequency” and are not captured by the ANN. In Fig. 5C, the PENN model predicts the correct η–T relationship. The ANN model also predicts an exponential relationship but with a higher inaccuracy. The GPR model fits both the training and unseen datapoints, but predicts an unphysical trend beyond this. Overall, the PENN model makes predictions that follow the expected behaviors (Fig. 1A) of polymer melts.

Examples of successful (A–C) and unsuccessful (D–F) melt viscosity (η) and zero-shear melt viscosity (η0) predictions over wide ranges of molecular weight (Mw), shear rate ((dot{gamma })), temperature (T) by the Physics Enforced Neural Network (PENN) models. The extrapolated predictions are compared to those by Gaussian Process Regression (GPR) and Artificial Neural Network (ANN) models given the same training information. A is a good η0–Mw extrapolation for [*]CCCCCCCCCCOC(=O)CCCCC(=O)O[*] at T = 382.15 K. B is a good η–(dot{gamma }) extrapolation for a copolymer of [*]CC([*])CC(C)C and [*]CC([*])CCCCCCCC (0.968:0.032) (Mw = 290000 g/mol, PDI = 7.8) at T = 543.15 K. C is a good η–T extrapolation for [*]CCOCCOCCOC(=O)CCCCCCCCC(=O)O[*] (Mw = 2000 g/mol, (dot{gamma }) = 60 1/s). D is an unsuccessful η0–Mw extrapolation for [*]C=CCC[*] at T = 490.15 K, with possible mispredictions of Mcr and k1. E is an unsuccessful η–(dot{gamma }) extrapolation for a copolymer of [*]C[*] and [*]CC([*])OC(C) (0.72:0.28) (Mw = 60000 g/mol), with possible misprediction of ({hat{dot{gamma }}}_{cr}) and η0. F is an unsuccessful η–T extrapolation for [*]CC(O)COc1ccc(C(C)(C)c2ccc(O[*])cc2)cc1 (Mw = 1696 g/mol, (dot{gamma }) = 0.0 1/s) with a possible misprediction of Tr.

Correctly extrapolated samples by the PENN model, such as the ones in Fig. 5A–C make up 67.5% of the extrapolated test cases, which is a significant improvement relative to both the ANN and GPR. The PENN model also has room for improvement, especially when applied to datasets with low chemical diversity. Overfitting to a small set of chemistries in training can lead to the inaccurate prediction of parameters when making predictions for unseen chemistries. This behavior is demonstrated in Fig. 5D–F, where the PENN predicts a plausible rheological trend but incorrect values for unseen polymers. However, the PENN model introduces a layer of interpretability unavailable to physics-unaware models. Based on the predictions we can reasonably infer which parameters were over- or under-estimated. In Fig. 5D, the PENN model predicts near-correct α1 and α2 slopes, but the predicted Mcr and k1 values are underestimated. Figure 5E depicts how an underestimated η0 (caused by inaccuracies in predicted Mcr, α1, α2 and/or k1) can cause inaccurate η predictions for all other (dot{gamma }) values. We also see this phenomenon in Fig. 5F, where Tr is likely underestimated. The propagating error causes the PENN model to predict an inaccurate trend across the entire spectrum of T. Despite these errors, the pinpointing of the PENN’s weak spots can be used to add targeted training data to improve the model. This level of interpretation is unique to the PENN and cannot be done for the GPR and ANN.

These examples of extrapolations provide insights into the applicability of PENN versus pure data-driven methods when using datasets that contain limited chemistries. The equations used in the PENN are based on assumptions and generalizations, and may not account for all physical nuances. These must be considered when applying PENNs to future material design and process optimization problems.

Discussion

In this study, we introduce a Physics Enforced Neural Network (PENN), a strategy that combines data-driven techniques with established empirical equations, to predict the melt viscosity of polymer melts with better physics-guided generalization and extrapolation. The PENN makes predictions across many chemical compositions and relevant physical parameters, including molecular weight, shear rate, temperature, and polydispersity index. We compared our PENN approach against the purely data-driven, physics-unaware, Artificial Neural Network and Gaussian Process Regression. In extrapolative regimes, our PENN model outperforms the physics-unaware counterparts and offers an elevated level of interpretability and generalizability. To enhance generalizability across chemistries, future work could increase the chemical space in the dataset through new experiments, molecular dynamics simulations, and/or more aggressive data acquisition from literature.

This work has profound implications for additive manufacturing (AM) and materials informatics. The PENN model’s capability to guide the rheological control of diverse polymer resins accelerates the development of new printing materials, thereby expanding AM’s utility. Our methodology offers a blueprint for modeling other properties governed by empirical equations. The initial success of the PENN architecture for melt viscosity is a powerful demonstration of how data-driven insights combined with established knowledge can propel us into a new era of rapid advancements in materials science and engineering.

Methods

Fingerprinting and feature engineering

The chemical attributes of a polymer are represented by a unique fingerprinting scheme. The fingerprints (FPs) contain features derived from atomic-level, block-level, chain-level, and morphological descriptors of a polymer as described at length earlier6. The dataset contains homo- and co-polymers, and miscible polymer blends. Co-polymers and blends contain multiple repeating units, each with a separate FP. For co-polymers, the FP of each unit was aggregated to a single copolymer FP using a weighted average (with weight equal to composition percentage)12. Similar to previous work12, all co-polymers were treated as random. For miscible polymer blends, the FP of each unit was aggregated to a single FP using a weighted harmonic average (with the weight equal to composition percentage)20. For blends containing units with different Mw and/or PDI, the weighted average over each unit was used.

Enforced polymer physics trends

In this section, we detail the physics-based correlations included within the Physics Enforced Neural Network (PENN).

We enforce dependencies of η on temperature (T), molecular weight (Mw), and shear rate ((dot{gamma })) through (eta ({M}_{w},T,dot{gamma })), which we derive below.

Preamble: smoothing of piecewise functions

When going from one function g(a, b) in a low regime (a < b) to another function h(a, b) in a high regime (a > b), we can use the smoothened Heaviside step function,

where β is a tunable rate of transition.

A function f(a, b) that transitions from g(a, b) to h(a, b) is given by

η dependence on (dot{gamma }), T, and M

w

The η dependence on (dot{gamma }) follows the physics of shear-thinning fluids41,42,43. In these fluids, at low (dot{gamma }), there is not enough force between chains to break entanglements and cause movement, so η remains constant at η0. At a critical shear rate, ({dot{gamma }}_{cr}), the shear force is high enough to cause chain alignment, making chain diffusion easier. Beyond ({dot{gamma }}_{cr}), η decreases according to a shear-thinning linear power law41. This trend can be represented by a function (Equation (2)) across both the zero-shear and shear thinning regimes41,44,45,46,

where the parameter n describes the sensitivity to shearing47. For shear-thinning fluids, n < 1. For most polymer melts, n is empirically known to be in the range 0.2–0.838.

Equation (2) is unfavorable to use directly because (dot{gamma }) spans several orders of magnitude, so (log dot{gamma }) must be used as an input. Eq. (2) cannot be adapted to use (log dot{gamma }) as an input (due to the +1 in the denominator), so we depict the relationship across the low (dot{gamma }) and high (dot{gamma }) regimes as a piecewise function on the log-scale,

We smooth Eq. (3) with ({H}_{{beta }_{dot{gamma }}}) to get (log eta ({M}_{w},T,dot{gamma })) (Eq. (4)),

where ({beta }_{dot{gamma }}) is a parameter that dictates the rate of shift from zero-shear to shear-thinning. For our implementation, we found that optimization over the (dot{gamma }) domain was optimal when ({beta }_{dot{gamma }}=30).

(log {eta }_{0}({M}_{w},T)) is defined by the T dependence. As temperature increases, so does the rate of molecular self-diffusion, resulting in lower η seen in fluidic polymer melts43. The William–Landel–Ferry (WLF) equation39,48 describes the exponential decrease in η as the temperature increases. Therefore, we can encode temperature dependence as

where Tr is a reference temperature and C1 and C2 are material-dependent empirical parameters. The values for these are dependent on polymer chemistry. C1 = 7.60 and C2 = 227.3 K are examples of values that have been proposed39 from observations of experiments on a small subset of polymers. The reference temperature Tr is within a few degrees of the glass transition temperature Tg. It has been proposed that the WLF relationship holds within the range of Tg to Tg + 200K39.

({eta }_{{M}_{w}}) is defined by the Mw dependence. Longer and heavier polymer chains experience increased entanglements, which hinder chain reptation in the polymer melt at low shear37,43. Equation (6) is a piece-wise power law that describes this phenomenon.

where

Mcr is the critical molecular weight, above which entanglement density is high enough to increase the impact of Mw on η0. The two power laws intersect at Mw = Mcr37. Mcr is found to be approximately 2-4 times the molecular weight at which chain entanglement starts, but the exact value is polymer dependent43. α1 is the slope of the (log {eta }_{0})–(log {M}_{w}) curve if Mw < Mcr and α2 is the slope if Mw≥Mcr. Typically, α1 is theoretically and empirically determined to be about 1, while α2 is found to be about 3.437,43, but the exact value is dependent on the polymer. k1 and k2 are the y-intercepts of each power law and are polymer-dependent.

Mw and η0 span several orders of magnitude, so we use Eq. (6) in the log-scale to get Eq. (7),

Smoothing Eq. (7) with ({H}_{{beta }_{{M}_{w}}}) gives Eq. (8),

where ({H}_{{beta }_{{M}_{w}}}) is the smoothened Heaviside step function using ({beta }_{{M}_{w}}), a parameter which dictates the rate of shift from α1 to α2.

Therefore, Eqs. (4), (5), and (8) determine the (log eta ({M}_{w},T,dot{gamma })). The predicted parameters n, ({dot{gamma }}_{cr}), ({beta }_{dot{gamma }}) determine the (dot{gamma }) dependence in (log eta ({M}_{w},T,dot{gamma })), which is also a function of η0(Mw, T). The predicted parameters C1, C2, and Tr determine the T dependence in η0(Mw, T), which is also a function of ({eta }_{{M}_{w}}). The predicted parameters α1, α2, Mcr, ({beta }_{{M}_{w}}), and k1 determine the Mw dependence in ({eta }_{{M}_{w}}). The parameter outputs of the MLP have physically appropriate bounding ranges (reported in Supplementary Table 1).

η dependence on P

D

I

The dispersity of molecular weights in a polymer melt affects the bulk motion of polymer chains43. For example, a short and long chain may diffuse differently compared to two medium-sized chains. Therefore, using just the Mw without any knowledge of dispersity can mislead the ML model. We account for dispersity by using the polydispersity index (PDI),

where Mn is the number average molecular weight. Empirical models for this relationship22,24 may require detailed information on the specific shape of the molecular weight distribution of a polymer melt. Not all of our data points contain proper information on PDI (as discussed in the Results section), so we do not directly encode η-PDI trends within the computational graph. Instead, the PDI could affect the transitions in the critical regimes of the η0–Mw relationship and the η–(dot{gamma }) relationship (when (dot{gamma }={dot{gamma }}_{cr}) or Mw = Mcr)22,23,24. We incorporate this effect through the parameters ({beta }_{{M}_{w}}) and ({beta }_{dot{gamma }}) (described in Table 1). A higher value of ({beta }_{{M}_{w}}) or ({beta }_{dot{gamma }}) creates a quicker transition within their respective critical regimes.

PENN training

This entire PENN architecture is trained, in part, to minimize the error of viscosity predictions. The sum of these errors across all n training points is called the viscosity loss ({{mathcal{L}}}_{eta }), defined in Eq. (9). Each data point is denoted by its index i.

During training, we add loss terms (see Eq. (10)) to penalize the predicted α1 and α2 for the ith training point ((hat{{alpha }_{1,i}}) and (hat{{alpha }_{2,i}}), respectively) for deviating from their average values. The viscosity loss plus the penalty terms form the total loss ({mathcal{L}}).

wα is a hyperparameter that controls the impact that known values of the α1 and α2 parameters have on the final loss.

Machine learning approaches

The PENN and ANN models were implemented in PyTorch49. All models were trained on the same 9:1 (Train:Test) split. Before training, the features and η were scaled to a range of (−1,1). The polymer fingerprint, PDI, and temperature were scaled with the Scikit-Learn MinMaxScaler50 to a range of (−1,1). The (dot{gamma }) was scaled by first adding a small value of 10−5, taking the ({log }_{10}), and then scaling to (-1,1). Mw was scaled by taking the ({log }_{10}) value and then scaling to (−1,1). For the PENN, (log {M}_{w}) and (log dot{gamma }) use the same scaling bounds as η.

Within the training set, a 10-fold cross-validation (CV) was used to ensure that the models did not overfit the training set. The ANN and PENN models also had separate models trained for each CV split. Hyperparameter optimization was performed using the Hyperband51 optimization algorithm over each CV fold for both the ANN and the PENN models, with RayTune52 implementations, respectively. The ANN and PENN models, both containing 4 layers (including 2 hidden layers), involved optimization of the same hyperparameters: layer 1 size (64, 128, 256, 512), layer 1 dropout (0,0.01, 0.015,0.02,0.025,0.03), layer 2 size (64, 128, 256, 512), layer 2 dropout (0,0.01,0.015,0.02,0.025,0.03), and weight decay (0.00001, 0.00005, 0.0001, 0.0005, 0.001). For the PENN, wα (0.001, 0.005, 0.01, 0.03, 0.05) was also optimized. The value corresponding to the lowest ({{mathcal{L}}}_{eta }) (Eq. (9)) of the CV test split was used.

The Adam optimizer was used to train the models with a learning rate (LR) reduction by a factor or 0.5 on the plateau of the validation loss given a patience of 20 epochs. An initial LR of 0.0001 was used for the PENN. Empirically, we found that the PENN tuning was sensitive to high LR. The initial LR for the ANN was 0.001. Training was stopped with an Early Stopping patience of no improvement in the validation loss after 25 epochs.

The GPR model was implemented using Scikitlearn50 trained using Bayesian optimization to tune key hyperparameters. The hyperparameters optimized include the noise level (alpha) with a range of [10−2, 101], the length scale of the RBF kernel (length_scale) with a range of [10−2, 102], and the constant value used in the kernel (constant_value) with a range of [10−2, 102], each with a logarithmic uniform prior. The optimization was performed over 50 iterations each over the 10-fold cross-validation, with the best-performing model parameters selected based on the results. The scaling for the inputs and outputs of the GPR were the same as the ANN.

Responses