Business Intent and Network Slicing Correlation Dataset from Data-Driven Perspective

Background & Summary

Future networks will face increasingly complex and diverse demands and challenges with the rapid development of emerging technologies such as the Internet of Things (IoT), cloud computing, and big data1. In this context, it is essential for network management systems to embrace automation and intelligence to ultimately achieve closed-loop autonomy2, which would enable self-learning and evolution capabilities. However, without sufficient data support, closed-loop autonomous systems would struggle to make effective decisions and adjustments. Data serves as the cornerstone of system operation, providing real-time network state information and historical operational records, which are crucial for enabling systems to self-optimize and self-protect3. The core value of data-driven approaches lies in leveraging in-depth data analytics to inform decisions and actions, and closed-loop autonomous systems serve as a key platform for realizing this value proposition. By integrating data analytical insights into actual network operations, closed-loop autonomous systems can automatically execute adjustments and optimization actions, thereby fully demonstrating the advantages of data-driven approaches4. Thus, data-driven network reform is the necessary pathway to addressing network complexity, optimizing resource allocation, enhancing network security, promoting business innovation, and achieving network intelligence5. As technology continues to advance and application scenarios expand, data-driven network reform will play an increasingly pivotal role in the future.

In the process of intent-based network management, users or administrators typically express specific requirements regarding network performance, quality of service, and resource allocation through natural language6. These requirements are first parsed by a business intent recognition system and translated into machine-understandable business intent data. Based on this, the network management system orchestrates resources and generates corresponding network slices to flexibly respond to different business needs7. Throughout this process, the network management system relies on intent data and the associated data to link business intent to network slices, and achieve end-to-end service management from demand to delivery8. First, an appropriate entity set is needed for intent recognition, which is fundamental to the effective operation of intent-based network management systems. Business intent extraction is typically achieved using natural language processing9 techniques, such as BiLSTM10 (bidirectional long short-term memory) model or by fine-tuning Google’s BERT11 (Bidirectional Encoder Representations from Transformers) model. To enhance the accuracy and generalization capability of these models, it is essential to design entity sets tailored to specific tasks. Second, labeled business intent description data is required. Most existing network and business datasets describe only one aspect of characteristics, making it difficult to adapt to the rapidly evolving network scenarios. This leads to a shortage of training data for intent recognition systems. Directly obtaining research data from relevant institutions is often difficult, as these organizations are typically reluctant to share such data12, and such data are rarely collected and organized. Furthermore, public network datasets are rendered unreliable and sometimes outdated due to the rapid changes in network scenarios and patterns13. Finally, the generation and optimization of network slice data should be dynamically adjusted based on business requirements. However, current network slice generation still predominantly relies on standard service models based on network communication functions, which involve selecting appropriate slices from pre-existing ones. To achieve more flexible and business-driven network slice orchestration, it is essential to construct intent datasets that can dynamically generate and optimize slice data according to business needs.

In this paper, we create a new Business Intent and Network Slicing dataset (BINS) to bridge the gap between the agent need for such a dataset and the lack of the dataset. The BINS dataset provides richly structured and meticulously curated descriptive intent data for intent-based networking (IBN)14, with its data sourced from three primary channels. The first data source comes from China Telecom Sichuan Branch (Sichuan Telecom), consisting of real network operational data monitored and recorded within their intent-based network. The second part of the dataset is generated through manual construction by professional network engineers and by professionals simulating network operators expressing network intents. The third part of the BINS dataset is derived from parsing relevant academic papers, industry standards, and publicly available network documents from official websites of universities and research institutions, enterprises, and telecom operators. Within these network scenarios, we encompass multiple application contexts, including campus environments, industrial intelligent networking under the Industrial Internet, and real-time monitoring, covering over 200 distinct network intents. In this context, “intent” is defined as high-level goals or requirements expressed by network stakeholders, indicating the anticipated services, performance, and behavior of the network. By manually annotating these intent entities and relationships, the final dataset contains more than 100,000 intent entities and over 40,000 unique triples. These triples can be used to construct knowledge graphs15 for intent recognition, providing critical support for further development of IBN systems.

The primary objective of the BINS dataset is to advance the development of IBN, which is crucial for the applications of fully autonomous networks16 in the future. This is particularly important for emerging network scenarios stemming from various technologies, such as smart environments17, IoT18, vehicular networks (V2X)19, healthcare20, and next-generation 6G networks21. In these contexts, intelligent and advanced automation software mechanisms replace error-prone manual network configurations, thereby enhancing the efficiency of network configuration and reducing costs. Furthermore, the BINS dataset holds significant potential for broader applications, such as information retrieval and application interaction in natural language processing. In these fields, using intent datasets to train and evaluate natural language understanding models can effectively identify user requests and commands, enabling the provision of relevant information or actions and improving the overall user experience. Overall, the BINS dataset not only offers vital support for intent recognition tasks in closed-loop autonomous systems but also serves as a valuable resource for the intelligent development of other applications across various domains.

Methods

This section outlines the entire process of data generation, covering the various data sources, the data processing workflows and annotation methods, and providing an explanation of the included label categories and their definition methods.

Data collection methods

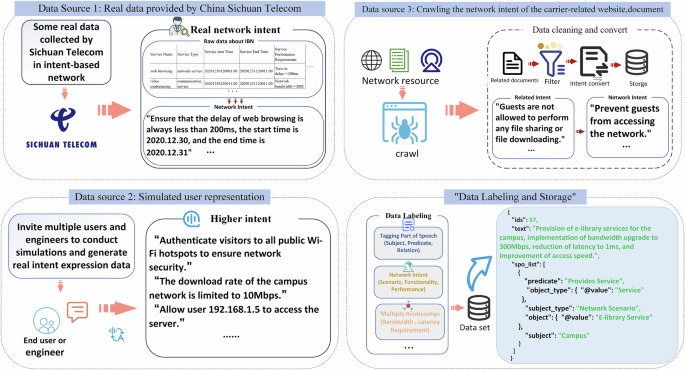

Figure 1 illustrates the three data sources of BINS and their corresponding data preprocessing processes used in this study. The data sources include:

The three sources of data, along with the data preprocessing and annotation process, all three data sources undergo corresponding annotation processing, with an annotation example provided in the lower-right corner.

Data source 1

As shown in Fig. 1, the first source of data comes from real-world operational data collected during intent-based network operations provided by Sichuan Telecom, Sichuan, China. This data was monitored and recorded within the intent-based network, covers network demands and performance metrics across various business scenarios, with a total of 10,001 records. The data metrics include service name, service type, start and end times, and network performance requirements. Based on these metrics, the data can be transformed into corresponding expressions of network intent. For instance, a record with a service name “web browsing”, service type “network service”, start time “20201230”, end time “20201231”, and a performance requirement of “latency ≤200 ms” is translated into a user intent expression such as: “Ensure that the latency for web browsing is ≤200 ms from 20201230 to 20201231” or “Maintain web browsing latency consistently ≤200 ms”. Through such transformations, irrelevant data was filtered out, yielding over 14,000 intent expressions from real business scenarios as part of the BINS dataset.

Data source 2

As illustrated in Fig. 1, some data was manually generated by network engineers based on their practical work experience. These intent data samples were derived from common scenarios in network configuration, troubleshooting, and business requests. Since these network engineers possess specialized knowledge, the intents they created are more detailed than those expressed by end users, often including more specific network parameters, such as bandwidth and latency, which are particularly beneficial for building intent-based network systems.

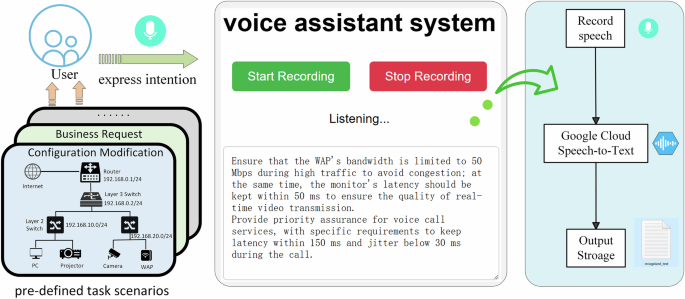

Volunteers were invited to simulate user network requests, generating user intent data through interaction with a voice assistant system. This process was primarily facilitated through a voice interface, where volunteers engaged with pre-defined task scenarios, such as network configuration modifications, business requests, and performance optimization, using voice inputs. The user interface of the voice assistant was developed using HTML, CSS, and JavaScript, and incorporates the Google speech recognition API Google Cloud Speech-to-Text22. This API is renowned for its high accuracy and real-time processing capabilities, capable of rapidly and accurately converting spoken input into text, ensuring the smoothness and accuracy of the data collection process. Figure 2 provides an example of this process.

An example of the intent collection API, where the simulator interacts with the voice assistant system in a predefined task scenario, generating real business intent expression data through voice input.

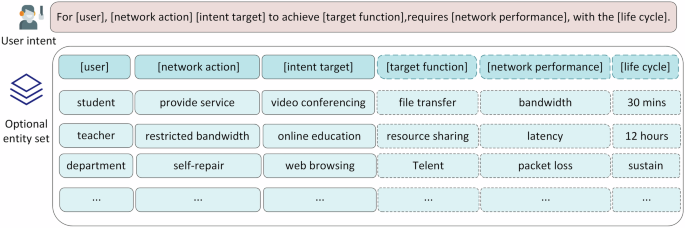

On the other hand, different intent expression paradigms can significantly impact the accuracy of intent recognition systems and the subsequent generation of intent strategies. Therefore, formalizing the grammar of intent expressions is crucial23. Additionally, Some simulated users may be limited by their limited network expertise, which could result in ineffective intent expressions. To more accurately simulate end-user network requests, this paper refers to the intent classification provided in IETF RFC931624 and introduces a reference model for intent expressions, simulated users limited by network knowledge can refer to this template, as shown in Fig. 3. This model standardizes intent expressions and is composed of the following entity label sets:

-

Intent user (customer, or network or service operators). The “user” entity is the declarer of the intent, and can include technical users such as network experts, network administrators, service providers, or non-technical end users, such as teachers and students in a school, or administrative departments in an enterprise.

-

Network action. This entity represents the manner in which the required service or the network should achieve a certain state, such as providing a service, limiting bandwidth, or self-healing.

-

Intent target. This entity collects the user’s needs, services, or objectives that need to be fulfilled, such as web browsing, online education, or video conferencing.

-

Target device or function. This entity denotes the network function (e.g., data communication, resource sharing) or physical device (e.g., routers, switches) used to deploy the intent. This is an optional entity.

-

Network performance. This entity collects the desired network performance metrics specified by the user, such as bandwidth, latency limitations, jitter requirements, etc. This is also an optional entity.

-

Lifecycle (persistent, transient). This entity refers to the duration of the intent, which is an optional entity and can range from minutes to hours or longer.

The reference model provided for standardizing intent expression allows participants to select and combine relevant entity labels based on their needs, forming key entity groups to accurately express business intent.

It is noted that the standardized intent expression process illustrated in Fig. 3 serves as a reference guide only and does not require strict adherence in practical applications. Participants may flexibly adjust the sequence of entity label usage and selectively employ partial or complete entity label sets according to specific application scenarios and practical requirements, thereby achieving diversified and effective intent expression. For instance, in a campus network scenario, a network administrator may express the following intent: “For students, providing web browsing services to achieve resource sharing, requires network bandwidth exceeding 100Mbps with the service being available for a minimum of 6 hours.” This can also be simplified to: “For students, providing web browsing services to achieve resource sharing.” The corresponding entity labels are: [user]: “student”, [network action]: “provide service”, [intent target]: “web browsing”, [target function]: “resource sharing”, [network performance]: “bandwidth exceeding 100Mbps”, [lifecycle]: “6 hours”. In this case, [target function], [network performance], and [lifecycle] are optional entities.

Data source 3

As shown in Fig. 1, network intents were parsed by analyzing academic papers, industrial standards, and websites related to operators, educational institutions, and enterprises. Telecom operators and technical support websites typically contain a wealth of information, including network issues raised by researchers, intent examples, and corresponding solutions. These data encompass diverse types of intents and data. Data is collected through methods such as keyword search, topic search, and the snowball method. Subsequently, noise is removed and irrelevant information is filtered manually, while PySpellChecker25 is used for spell checking to ensure high data quality. Subsequently, through a combination of automated tools and manual review, this data was transformed into valid intent expression data. For example, in a journal26 case study on IBN, the authors presented the following intent: “Transfer a common-level video service from user A in Beijing to user B in Nanjing.” After being formatted, this intent was passed on to the intelligent policy mapping module, which parsed the intent and broke it down into a specific service function chain (SFC), such as network address translation (NAT) and firewall functions, then constructed the corresponding SFC request. In this case, user A and user B can be represented by their respective IP addresses. Hence, the intent could be translated into: “Transfer a common-level video service from user 220.15.2.10 in Beijing to user 210.59.4.15 in Nanjing” (with assumed IP addresses). Through this process of data collection and transformation, a total of 274 intent entries were gathered from relevant documents and websites. After effective conversion, 257 verified intent data entries were obtained.

Data processing and annotation

To ensure data accuracy and reliability, targeted preprocessing measures were implemented based on different data sources. For the real-world data provided by Sichuan Telecom, consistency and data completeness were manually verified, irrelevant network metrics were removed, and the data was transformed into structured intent expressions. For the data constructed by network engineers, manual reviews were conducted to ensure data relevance and reliability, guaranteeing that the constructed data accurately reflected actual network operational demands and intents. Data generated by simulated users was transcribed into text via the automated voice assistant system, after which it underwent redundancy and noise checks, as well as language normalization. Due to potential inaccuracies or irrelevant content in the speech recognition process, careful manual filtering of valid intent data was required. For network-parsed data, the processing was more complex, involving manual filtering, redundancy removal, noise reduction, and extraction of content related to network intents, which were then converted into structured intent descriptions to ensure data relevance and usability.

During network data processing, raw data collected from multiple sources could be affected by various distortions, including semantic redundancy, non-ASCII encoded characters, spelling errors, and unrelated expressions. These distortions are categorized as follows:

-

Semantic repetition. Sentences that convey the same meaning but differ in linguistic expression. For instance, “Ensure that there is no buffering while playing high-definition video” and “Ensure stable network performance during HD video streaming without any delays” express the same intent, which is to guarantee a stable and buffer-free network connection while watching HD videos. Although the wording differs, the core intent is the same: ensuring smooth video playback of streaming media videos.

-

Non-ASCII encoding. Sentences containing special characters, such as Greek letters, mathematical symbols, or consecutive punctuation marks.

-

Spelling errors. To ensure linguistic accuracy, an automated Python-based spell-checking tool, PySpellChecker25 was used to detect and correct spelling errors within the data.

-

Unrelated expressions. These are statements that contain additional information unrelated to the core intent. For example, in the sentence, “The company has recently expanded its workforce, so we need to ensure that video conferencing for all employees has no network latency,” the unrelated portion, “The company has recently expanded its workforce,” should be removed, leaving the key intent as “Ensure no network latency for video conferencing.”

All preprocessed data underwent entity annotation, relationship annotation, and slice type annotation to make it suitable for natural language processing tasks, such as Named Entity Recognition (NER)27 and relation extraction. Entity labels are used to identify key elements in the text, such as business types, network performance, or functionalities; relationship labels describe the interconnections between business entities, such as “provides” or “ensures”; slice type labels specify the corresponding network slice type for the requirements expressed in the intent, based on domain-specific needs. These slice types include eMBB, URLLC, and mMTC, which are used to differentiate various network service types. To further enhance the utility of the data, the BIO (Begin, Inside, Outside)28 annotation scheme was applied to label the raw data, where the BIO annotation is applicable to tokenized text. The BIO annotation scheme is widely used in sequence labeling tasks, such as NER, tokenization, and syntactic analysis. It assigns a label to each word to indicate its entity type, with “B” indicating the beginning of an entity, “I” the middle part, and “O” representing non-entity words. The annotated structured data was stored in JSON format, ensuring consistency and usability, and providing a high-quality data foundation for the subsequent training and deployment of intent recognition models.

Data Records

The dataset files are publicly accessible through the data platform figshare29. The data is organized into three folders. The raw data collected is stored in the CSV format, while the annotated and structured data is stored in the JSON format.

Personnel and user interface description folder

volunteer.csv is an informational file recording the basic details of the volunteers who participated in the experiment, including their ID, age, and occupation. Table 1 presents the distribution of volunteers. The files “App.js”, “index.html” and “styles.css” form the user interface used for volunteers to collect network intent data. These files are developed using HTML, CSS, and JavaScript, and the integrated with the Google Cloud Speech-to-Text API to convert the network intents expressed by volunteers into text (source code is detailed in the “Code Availability” section).

Raw intent data folder

This folder comprises multiple files containing preprocessed data from various sources. Each record includes user ID, intent description, and creation time. For simulated or engineer-constructed data, refer to the files “engin_ori.csv” and “volu_api.csv”. For document-parsed data, including network requirements, source URL, and collection times, refer to the files “docu.csv” and “sichuan.csv”.

Labeled intent data folder

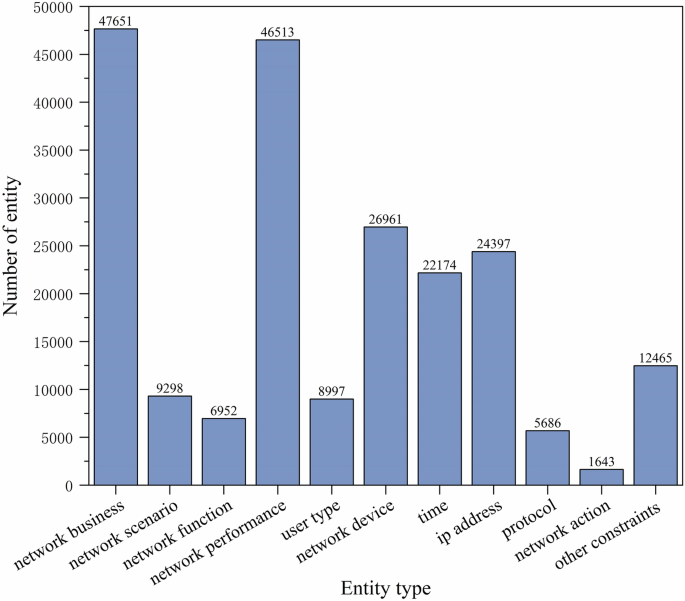

After processing the raw data as described earlier, the annotated data is stored in four JSON files: “engin.json,” “volu.json,” “docu.json,” and “sichuan.json”. Each record in these files consists of text, entity type, entity, relationship, slicing type and the BIO annotations of entities. These annotated structured data files are not only used for training intent recognition models, but also for common natural language processing tasks, such as entity extraction and relationship extraction. Additionally, each text in the files is labeled with a BIO sequence based on the position of its entities within the text, making it more suitable for training intent extraction models. Figure 4 shows the statistical information on the number of major entity categories included in the BINS dataset. Table 2 lists the total number of entity, unique relationships, and slice types contained in each file. It also includes the average span length of entities and relationships, as well as the count of unique triples for each data source.

The statistical information on the number of major entity categories included in the BINS dataset.

Technical Validation

To ensure the availability and assess the reliability of the data for network intent recognition, the training samples should comprehensively cover the network domain as much as possible, enabling the efficient identification of business intents within networks. To achieve this, it is essential to ensure that the data has been preprocessed, and is consistent and complete in order to assess its reliability. Data preprocessing is critical for reducing the impact of data noise and irregularities on model training, which can otherwise affect the reliability analysis of the data. This aspect has already been described in the data processing subsection of the methods section.

Data consistency refers to whether the data follows a unified standard and maintains a uniform format30. Initially, after the annotation of entity categories and relationships, the data is stored in a structured manner to facilitate reuse. Additionally, each text is labeled in the BIO format, making it suitable for the primary task or other natural language processing tasks.

Other aspects of detection utilized Capital One’s DataProfiler31, a powerful, flexible, and easy-to-use tool for data quality and metadata analysis. It is capable of automatically identifying file types, analyzing data quality, and generating easily interpretable visual reports. The main quality metrics used are described in Table 3.

To validate whether this dataset can be used to train intent recognition models and to assess the performance of these models, this paper employs the named entity recognition method based on BERT11 to verify if the data can be applied in intent recognition scenarios. By utilizing NER to extract key entities and related parameters from unstructured text, the model can effectively recognize users’ network intents. BERT is a pre-trained model introduced by Google in 2018, which includes 12 Transformer blocks, each equipped with multi-head self-attention mechanisms and feedforward neural network layers, make it particularly effective in natural language processing tasks. In the experiments, for comparison, the BERT-CRF32 and BERT-BiLSTM-CRF33 models were used to train the intent recognition system. The BERT-CRF model adds a Conditional Random Field (CRF) layer on top of the BERT output to capture dependencies between labels. Specifically, given the BERT output (overrightarrow{H}) for the input sequence, the CRF layer calculates the score (sleft(X,Yright)) for each possible label sequence using the following scoring function:

where ({w}_{{y}_{i-1},{y}_{i}}) is the transition score between labels, ({P}_{{y}_{i}}) is the feature weight matrix, and hi is the BERT output. The BERT-BiLSTM-CRF model further introduces a BiLSTM layer between the BERT output and the CRF layer. The BiLSTM layer captures bidirectional contextual information of the input sequence through forward and backward LSTM units, with its output ({H}^{{prime} }) serving as the input to the CRF layer: ({H}^{{prime} }=left[{H}_{f};{H}_{b}right]). All BERT-based models are trained using the Adam optimizer, with the learning rate set to 5 × 10−5. The output layer of the models uses Softmax as the activation function, and the model loss is computed using cross-entropy loss. Additionally, we experiment with fine-tuning Spacy (https://spacy.io/) NER models over the pre-trained DeBERTa-v3-large MLM34 to quantify the performance of the BINS dataset on the latest model. DeBERTa’s powerful pre-training capabilities help capture contextual information effectively, thereby enhancing entity recognition in complex contexts. Spacy NER is a deep learning-based tool designed to identify and classify entities from text. It utilizes CNN, RNN, or Transformer architectures, and incorporates a CRF layer to optimize the prediction of entity labels. Spacy NER supports various entity types and offers pre-trained models in multiple languages. The models are trained on large-scale annotated corpora, providing excellent out-of-the-box performance, while also supporting customization for domain-specific training needs. Spacy NER is widely applied in fields, such as information extraction, knowledge graph construction, and chatbot development. By fine-tuning Spacy NER over the DeBERTa MLM, the model’s entity recognition capabilities in IBN scenarios can be enhanced, thereby validating the generalization ability of the data.

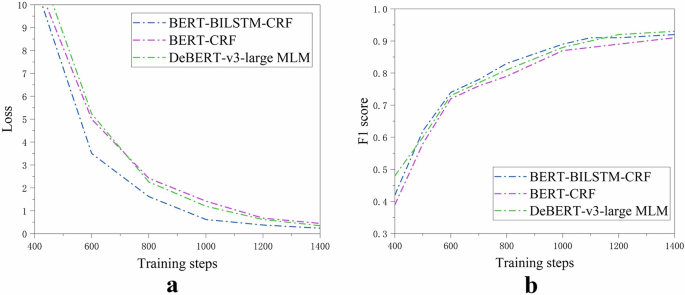

To evaluate the performance of the models, precision, recall, and F1-score35 were selected as evaluation metrics, which are commonly used for named entity recognition tasks. Precision refers to the proportion of true positive samples among those predicted as positive by the model. Recall indicates the proportion of actual positive samples that were correctly predicted by the model. F1-score considers the harmonic mean of precision and recall, providing a balanced assessment of both. When precision and recall are both high, the F1-score are high. When evaluating F-scores, precision, and recall, exact matching36 was used. This is because in the IBN systems, there is often a strict correspondence between entities and categories. Using exact matching ensures the evaluation results accurately reflect the model’s performance under stringent conditions. Exact matching measures the model’s adaptability to the datasets, avoiding biases in generalization ability and data quality that may arise from relaxed matching methods. In the experiment, the labeled “engin.json” dataset was used as the foundation to quantify the performance of the model. This dataset contains 14,466 entries, divided into 80% training data and 20% testing data. To observe the convergence of the models during training, the performance of both models was plotted in Fig. 5. The training steps represent the ratio of the number of training samples to the batch size, multiplied by the number of training epochs. In the experiments, the batch size was set to 8, and the epoch was set to 1. As shown in Fig. 5, the BERT-BiLSTM-CRF model converged at 1,000 training steps with an F1-score of approximately 93%, while the BERT-CRF model converged at 1,200 training steps with an F1-score of 90%. In contrast, the fine-tuned DeBERTa-v3-large MLM model achieved convergence at 1200 training steps, with an F1 score of 94%.

The performance of the three models on the “engin.json” dataset is shown. (a) Presents the convergence of each model with the number of training steps, while (b) illustrates the change in the F1 score of each model throughout the training process.

To evaluate the contribution of the BINS dataset to model generalization, we used the remaining three datasets with 80% allocated for the training data and 20% for the testing data in the experiments. Due to the smaller sample size of the “docu.json” dataset, it was combined with the “volu.json” dataset and used together in the experiments. Table 4 shows the performance of the three models upon convergence across various data sources. The BERT-BiLSTM-CRF model outperforms the BERT-CRF model of all metrics and convergence speed on all datasets, indicating that adding a BiLSTM layer to the BERT model helps capture richer contextual information, thereby improving the named entity recognition performance. Although the BERT-BiLSTM-CRF model performs excellently on BINS dataset, the DeBERTa-v3-large MLM model, with its more efficient long-range dependency capturing capability and context processing mechanism, can better adapt to different domains and tasks. It excels particularly in handling complex or variable entity annotations. As a result, the DeBERTa-v3-large model significantly outperforms the BERT-BiLSTM-CRF model across all datasets.

The experiments demonstrated that the labeled dataset used in model training and testing performed well, indicating its suitability for intent recognition tasks. This also validates the dataset’s effectiveness and applicability, providing a high-quality data foundation for building efficient network intent recognition models.

Usage Notes

The collected intent-based network dataset in this paper can be used to train and optimize intent recognition systems. The system precisely determines users’ network intent by interpreting and analyzing their natural language input. It then translates this meaning into appropriate network strategies, including configuration, troubleshooting, and performance improvement. For example, in terms of network configuration, when a user inputs commands like “configure VLAN” or “set firewall rules”, the system can efficiently interpret the request and convert it into the appropriate network policy. After validation, the system generates the corresponding network slice and deploys it to the relevant network infrastructure, thereby enhancing the automation and intelligence of the network management system.

Additionally, these datasets can be extended to applications in other fields, such as information retrieval and application interaction. In information retrieval, users’ queries often carry explicit intent. By training intent recognition models using our constructed datasets, search engines can better understand the intent behind user queries, thereby providing more relevant and precise search results. For example, when a user queries “how to improve network speed,” the intent recognition model can interpret that the user is seeking better network performance, and thus provide results that meet the user’s needs. In application interaction, understanding user intent is crucial for enhancing user experience. By training intent recognition models with our constructed datasets, applications can better predict and respond to user actions. For example, in a smart assistant application, when a user issues a voice command to “set a reminder for a video conference at 9 am tomorrow,” the intent recognition model can accurately understand the command and perform the corresponding operation, thereby improving the application’s intelligence and user satisfaction.

To further process this dataset for other tasks or domains, we recommend using popular Python libraries, such as Pandas and NumPy.

Responses