Contextual subspace variational quantum eigensolver calculation of the dissociation curve of molecular nitrogen on a superconducting quantum computer

Introduction

Quantum chemistry has been investigated as an application of quantum computing for two decades1. Instances of the electronic structure problem are intrinsically quantum mechanical, are challenging for classical methods at the few-hundred qubit scale and are of scientific and commercial importance. Considerable development of quantum algorithms for quantum chemistry has taken place. Progress in hardware has lead to many small demonstrations of quantum chemical calculations on noisy intermediate-scale quantum (NISQ) devices. These demonstrations evaluate both the practicality of quantum algorithms when deployed on real hardware and also evaluate NISQ hardware against a real-world application benchmark. Variational quantum algorithms have been studied extensively for their shallow circuits, making them appealing for NISQ applications where modest coherence times limit the depth of circuits that can be executed successfully. The current state of the art, summarized by a representative sample of Variational Quantum Eigensolver (VQE) realizations in the Supplementary Information (SI), shows that much progress is required before quantum computers can challenge their classical counterparts.

The goal of quantum computing for quantum chemistry is to achieve quantum advantage. This implies the existence of problems where all classical heuristics fail to produce adequate results, while a quantum algorithm succeeds in rendering its chemical features to sufficiently high accuracy to be scientifically useful. This means larger systems and/or basis sets than are classically tractable, although candidate advantage applications are not easy to find, as recently brought to attention2,3. The threshold of “chemical accuracy” is used as a typical indication of success which, in NISQ demonstrations, is taken to mean the quantum calculation achieves an absolute error below 43 meV with respect to the numerically exact result in the chosen basis. This is an abuse of terminology as small basis set calculations will not typically be chemically-accurate when compared against experiment, which is the ultimate arbiter of computational utility; it has been suggested that algorithmic accuracy is more appropriate terminology4. The point of interest is whether NISQ devices are able to achieve sufficient resolution such that, with realistic basis sets, we may address chemically relevant questions and many demonstrations fail to meet this standard.

The NISQ hardware available today limits demonstrations to small basis set calculations on small molecules that do not challenge the classical state-of-the-art. Continued hardware development should enable a sequence of demonstrations of increasing size whose end point is quantum advantage. Quantum simulations that address small-scale versions of problems whose large-scale realizations are believed to be challenging or out-of-reach of classical chemistry methods are therefore good targets. One may evaluate progress in NISQ demonstrations by considering various desiderata.

It is prudent to question whether a particular NISQ calculation is strictly quantum mechanical in some fundamental conceptual sense. VQE instances have been evaluated from the point of view of contextuality5, relevant to the present work. We employ a hybrid quantum-classical method, in which part of the result is solved classically6 and a quantum correction is calculated on a NISQ device7. This reduces the quantum resource requirements, enabling us to address larger problems and also ensures the quantum calculation is not susceptible to description by some conceptually classical model.

For small problem instances, it is of interest to evaluate the performance of NISQ devices against various classical heuristics. A standard benchmark problem for many conventional quantum chemistry techniques is molecular nitrogen N28,9, which is of particular interest during bond-breaking. Density matrix renormalization group (DMRG) and coupled cluster calculations were performed on N2 in the Dunning cc-pVDZ basis set10, and more recently using heat-bath (HCI) and quantum-selected (QSCI) configuration interaction11. In the dissociation limit static correlation dominates9 and single-reference methods such as Restricted Open-Shell Hartree-Fock (ROHF) break down; in this regime, the ground state wavefunction is not well-described by a single Slater determinant. Despite the inadequacy of the single-reference state, in the limit of all excitations post-Hartree-Fock methods such as Møller-Plesset Perturbation Theory (MP), Configuration Interaction (CI) and Coupled Cluster (CC) are still exact; however, each method requires truncation to be computationally feasible, which induces error. Furthermore, perturbation and coupled cluster approximations suffer from non-variationality12,p. 292,320], which is observed in the minimal STO-3G basis for the N2 potential energy curve (PEC) in Fig. 1.

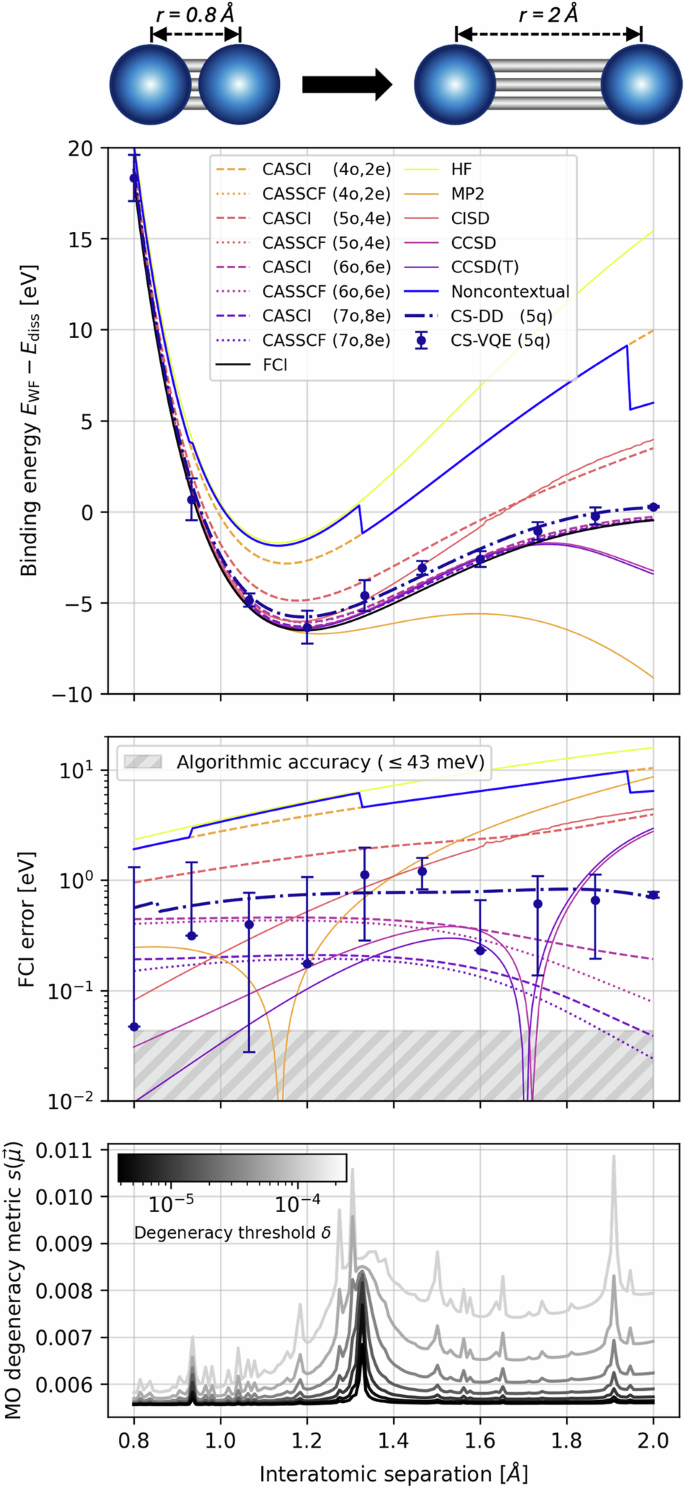

The CS-VQE data points were evaluated on IBM Quantum hardware, while CS-DD corresponds with direct diagonalization of the five-qubit contextual subspaces. The quantum simulations maintain good agreement with the Full Configuration Interaction energy throughout the entire range of interatomic separations, outperforming all the single-reference methods in the dissociation limit and remaining competitive with CASCI/CASSCF at a considerable saving of qubits. Discontinuities in the noncontextual energy coincide with peaks in our molecular orbital degeneracy detection function in Equation (1). Bars indicate standard error on the mean.

In such scenarios, multiconfigurational methods are commonly utilized such as complete-active-space configuration interaction (CASCI) and self-consistent field (CASSCF)13, which account for all determinants that correlate electrons in a specified number of active orbitals and thus have the flexibility to describe mixing between nearly degenerate configurations (i.e. static correlation)14. In Fig. 1 we include CASCI/CASSCF calculations, in each case selecting the active space from MP2 natural orbitals; an occupation number close to zero or two indicates the corresponding spatial orbital is mostly unoccupied/occupied and can therefore be considered inactive, which naturally maximizes the correlation entropy of the wavefunction in the active space. This yields improved treatment of the bond-breaking behaviour for active spaces (6o, 6e) and (7o, 8e), while coupled cluster is more accurate around the equilibrium geometry where is is expected to perform favourably. An issue with these CAS methods is that the computational cost scales exponentially with the size of the active space and dynamical correlations outside of the active space are excluded. The missing dynamical correlation can be recovered, for example through low-order perturbations such as complete-active-space (CASPT2) or n-electron valence state (NEVPT2) second-order perturbation theory. A further problem with all these techniques is that the quality of the calculation, namely the amount of correlation energy captured, is substantially affected by the choice of active space15, while keeping the problem computationally tractable.

Another commonly used approach to treating bond-breaking is Unrestricted Hartree-Fock (UHF), in which spin-up and spin-down orbitals are addressed separately. Sometimes, this can qualitatively describe bond dissociation; however, solutions no longer exhibit the correct spatial/spin symmetry16, i.e. they are no longer eigenstates of the S2 = ∣∣S∣∣2 operator where S = (Sx, Sy, Sz) describes the axial spin components. Since the molecular wavefunction is important to obtain observables other than energy, this represents a drawback of UHF as spin-contaminated or symmetry-broken wavefunctions are inappropriate in such cases.

In this work we invoke the Contextual Subspace approach7,17,18 to quantum chemistry running on superconducting devices. While we employed this technique previously for the equilibrium ground state preparation of HCl on noisy hardware19, the variational circuit was preoptimized classically. One other work utilized the Contextual Subspace method on noisy hardware for the purposes of testing a pulse-based ansatz by calculating equilibrium energies20. However, only the smallest of their simulations, NH STO-3G in a 4-qubit subspace, was able to recover the Hartree–Fock energy, with correlated wavefunction methods a more challenging target. By contrast, in this work we aim to calculate the entire PEC of N2—not just a single point estimate—with the Contextual Subspace Variational Quantum Eigensolver (CS-VQE) running on a quantum computer; each VQE routine consists of many state preparation and gradient calculations.

We compare our methodology against a suite of quantum chemistry techniques in the section Results, namely ROHF, MP2, CISD, CCSD, CCSD(T), CASCI and CASSCF. Given that we describe the N2 system in a minimal STO-3G basis set, chosen for its modest size and feasibility on the available quantum hardware, the exact Full Configuration Interaction (FCI) energy can be calculated. The goal here is not to achieve quantum advantage, which is precluded by the limited basis set, but rather to demonstrate how the Contextual Subspace approach on superconducting hardware can be competitive with and challenge a set of classical benchmarks. This framework is fully scalable and, as quantum computers mature, should provide a realistic path to quantum advantage and facilitate practical quantum simulations at large scales.

To realize this goal, we designed a robust simulation methodology that combines various quantum resource reduction tools together with a flexible error mitigation/suppression strategy. An overview of the qubit subspace framework is given in the section Contextual Subspace, while measurement reduction is achieved through a Qubit-Wise Commuting (QWC) decomposition of the reduced Hamiltonians, provided explicitly in SI. Furthermore, to demonstrate the compatibility of Contextual Subspace with contemporary simulation techniques, we construct ansätze via our modification to qubit-ADAPT-VQE21 which enforces hardware-awareness through a penalizing contribution in the excitation pool scoring function, presented in the section Ansatz Construction. Details pertaining to the quantum error mitigation/suppression techniques employed for this simulation are provided in the section Quantum Error Mitigation/Suppression, while software/hardware considerations are outlined in the section Software and Hardware Implementation Details.

Results

We perform Contextual Subspace Variational Quantum Eigensolver (CS-VQE) experiments for ten points along the binding potential energy curve (PEC) of N2 STO-3G, evenly spaced between 0.8Å − 2Å. The results of this may be viewed in Fig. 1. Alongside our experimental results, we include the following classical benchmarks: ROHF, MP2, CISD, CCSD, CCSD(T), CASCI and CASSCF. The active spaces of the latter two were selected using MP2 natural orbitals for fairness, since this is comparable to how the contextual subspaces were chosen as described in the section Contextual Subspace. We included active spaces of varying sizes, specifically (4o,2e), (5o,4e), (6o,6e) and (7o,8e) where (Mo,Ne) denotes N electrons correlated in M spatial orbitals. A crucial point to note when comparing our CS-VQE results to the CAS methods is that, for an active subspace of M spatial orbitals, one needs 2M qubits to represent the problem on a quantum computer; therefore, our chosen active spaces range from 8 to 14 qubits in size, while the contextual subspace consists of just 5 qubits. This is important to bear in mind when interpreting the results.

In Fig. 1 we see the single-reference quantum chemistry techniques – ROHF, MP2, CISD, CCSD and CCSD(T) – struggling to capture the FCI energy for N2. This holds especially true in the dissociation limit where there is no agreement between the different approaches. While the conventional techniques yield relatively low error around the equilibirum length at 1.192Å (albeit not within the target algorithmic accuracy of 43 meV), they incur large error at stretched bond lengths due to a failure of Restricted Open-Shell Hartree-Fock to describe static correlation. Furthermore, we see instances of non-variationality, which becomes apparent at 1.140 Å for MP2, 1.706 Å for CCSD(T) and 1.728 Å CCSD. For the CAS methods, we do not capture the bond breaking appropriately until the active space is expanded to (6o,6e) or (7o,8e), corresponding with 12 and 14 qubit subspaces.

On the other hand, our 5-qubit CS-VQE hardware experiments produce mean errors between 47 meV and 1.2 eV throughout the evaluated interatomic separations and remain below 1 eV for all but two of the bond lengths (1.333 Å and 1.467 Å). From direct diagonalization (the CS-DD curve in Fig. 1) we see a true error range of 0.5 eV to 0.8 eV along the N2 PEC. Our quantum simulations outperform all the single-reference techniques in capturing the bond dissociation behaviour; indeed, beyond an interatomic separation of 1.351 Å, the 5-qubit contextual subspace calculation yields lower errors than CISD and MP2, and outperforms CCSD(T) after 1.834Å, the ‘gold standard’ of quantum chemistry.

Turning now to the multiconfigurational approaches, our CS-VQE experiments produce lower error than CASCI/CASSCF (4o,2e) and (5o,4e) for every bond length, despite them corresponding with 8- and 10-qubit subspaces. In order for CAS to capture the dissociation satisfactorily, it needs at least the (6o,6e) space to describe the triple bond between nitrogen atoms appropriately; this is precisely what we find in Fig. 1. While the (6o,6e) and (7o,8e) calculations do yield improved errors, particularly towards the dissociation limit, we stress that they correspond with 12- and 14-qubit subspaces. Contextual subspaces of the same size yield considerably lower error than CAS in this instance (assessed through direct diagonalization), albeit caveated with the added challenge of running hardware experiments of that scale, which could prohibit us from achieving this in practice.

Our energy advantage in the dissociation limit can be attributed to the noncontextual energy component of the CS-VQE simulations. Around the equilibrium length there is negligible difference between the Hartree-Fock and noncontextual energy, but as the bond is stretched we see the noncontextual approximation outperforming Hartree-Fock, even before the inclusion of contextual corrections obtained from VQE simulations. Interestingly, the noncontextual energy coincides exactly with the CASCI/CASSCF (4o,2e) curve between bond lengths 1.328 Å and 1.909Å. This is because the noncontextual problem can accommodate a ground state that is multireference in nature, thus capturing the separated atom limit more appropriately than the single-reference ROHF state here. We note that the CS-VQE optimization is still initialized in the Hartree-Fock state and therefore does not receive an unfair advantage from this feature of the method; instead, the noncontextual contribution is included in the construction of the contextual Hamiltonian as a constant shift.

Curiously, the noncontextual PEC is not continuous and these error improvements are encountered in sharp decreases of energy, as seen in Fig. 1 for interatomic separations 0.936 Å, 1.328 Å and 1.909 Å. In order to probe this effect, we search for degeneracy in the energy levels between Molecular Orbitals (MO), which is known to cause issues for MP22,23. This is achieved by detecting near-zero energy differences between elements of μ, a vector with length the number of orbitals M whose entries are the canonical MO energies computed through Hartree–Fock. Our candidate MO degeneracy detection function is

where δ≥0 acts as a filtering parameter determining the threshold of near-degeneracy between energy levels, noting (mathop{lim }limits_{delta to 0}frac{sqrt{pi }delta {rm{erf}}(x/delta )}{2x}={delta }_{x,0}) and thus for δ = 0 this will detect exact degeneracy. The metric satisfies 0≤sδ(μ)≤1 and for (delta < {delta }^{{prime} }) we have ({s}_{delta }({boldsymbol{mu }})le {s}_{{delta }^{{prime} }}({boldsymbol{mu }})). The maximum depth ({D}_{max }le M) allows one to truncate the outer sum since the number of inner terms increases as ({M-1 choose i}); in the N2 STO-3G case M = 10 so we may include all MO degeneracy contributions, but for larger systems we may truncate for ease of computation. This may be viewed in the lower subplot of Fig. 1, where peaks indicate the presence of degenerate MOs. Encouragingly, these peaks coincide exactly with discontinuities in the noncontextual energy approximation, thus giving us confidence that the success of our Contextual Subspace techniques stems from its ability to describe static correlation in the noncontextual component. In SI we supplement this new diagnostic tool with traditional approaches to detecting static correlation in wavefunction methods such as T1, D1 and correlation entropy. Finally, while we identified the occurrence of noncontextual discontinuities to coincide with peaks in our MO degeneracy metric of Equation (1), one might consider the converse implication of our noncontextual problem as itself a test for detecting non-dynamical correlation. This is closely related to the Coulson-Fischer point, characterized by a divergence between restricted and unrestricted Hartree-Fock calculations24, indicating a break-down of spin symmetry.

Discussion

This study unifies many contemporary techniques of quantum computation to deliver experimental results that challenge conventional quantum chemistry methods of a similar computational overhead. In particular, it demonstrates the effectiveness of the Contextual Subspace method in surpassing classical single-reference techniques (ROHF, MP2, CISD, CCSD, CCSD(T)) and its competitiveness with more computationally demanding multiconfigurational approaches (CASCI, CASSCF). While we do not claim to realize any form of quantum advantage (due to the problem being solvable at the FCI level), this work exemplifies the ability for our simulation methodology to capture static correlation effects (encountered in systems undergoing bond dissociation, for example). This stems from the noncontextual ground state being able to describe a superposition of electronic configurations, as opposed to a single Slater determinant. This is analogous to how multiconfigurational methods such as CASCI and CASSCF are able to treat non-dynamical correlations; however, we showed that a significantly reduced contextual subspace could describe the bond dissociation of N2 at a level comparable to active spaces more than twice the size in CAS methods. A benefit of the Contextual Subspace technique is the active space is not explicitly selected15,25 and the multi-reference character is informed by the choice of noncontextual Hamiltonian.

Future work will look into increasing the size of problem studied by expanding the basis set and/or molecular system. As we move to study systems outside the realm of FCI, we wish to assess whether our methodology is able to maintain its competitive advantage of significant reduction in quantum resource requirements, while still facilitating a similar level of accuracy as classical multiconfigurational techniques. Furthermore, it is also possible to make a frozen core and/or active space approximation within the Contextual Subspace approach, a further consideration in which it will be interesting to explore the efficacy of this method. The Contextual Subspace methodology describes a flexible approach to quantum simulations that is scalable to large molecular systems. This should provide a practical route to quantum advantage and thus provide answers to scientifically meaningful questions in the chemical domain.

Methods

Contextual subspace

Contextuality gives us perhaps the broadest conceptual picture of quantum correlations that defy classical description26,27,28,29. In the restricted setting of Pauli measurements, it manifests in the non-transitivity of commutation amongst non-symmetry elements of the Hamiltonian, implying outcomes may not be assigned consistently without contradiction5,30. Conversely, the absence of contextuality gives way to a class of Hamiltonians whose spectra are described by a classical objective function that parametrizes an underlying hidden-variable model6. Therefore, partitioning the target Hamiltonian into contextual and noncontextual components gives us a hybrid quantum/classical algorithm for calculating eigenvalues with reduced quantum resources. In fact, by enforcing noncontextual symmetries over the contextual Hamiltonian, we may identify a so-called contextual subspace7.

The qubit reduction mechanism in the Contextual Subspace approach7,17,18, also in Qubit Tapering31,32, is effected by the stabilizer subspace projection framework17; scalable implementations of these techniques are available through the Symmer Python package33. Such subspace methods exploit various symmetries of the problem Hamiltonian—physical in the case of Qubit Tapering and artificial for Contextual Subspace—to yield a reduced effective Hamiltonian, with correspondingly reduced quantum resource requirements as measured by number of qubits, number of Hamiltonian terms and Hamiltonian norm, the latter of which dictates the sampling overhead in VQE.

Since Qubit Tapering operates on ({{mathbb{Z}}}_{2})-symmetries of the full system, the full and projected Hamiltonians are isospectral up to a change of eigenvalue multiplicities. Indeed, degeneracies of the full system may be lifted under this procedure; any remaining degeneracy implies the existence of non-({{mathbb{Z}}}_{2}) symmetry. All molecular systems possess at least two ({{mathbb{Z}}}_{2})-symmetries which enforce the parity of spin up or down particles. Additional symmetry arises from the molecular point group that describes the geometrical symmetry of the system. In the setting of diatomic molecules there are two relevant point groups: the cyclic point group C∞v consisting of continuous rotations around the inter-nuclear axis and the dihedral point group D∞h that also includes the reflection and inversion symmetries of the diatomic. Heteronuclear molecules, consisting of two distinct atomic centres, lie within the former group, while homonuclear molecules such as N2 fall under the latter.

The specific ({{mathbb{Z}}}_{2})-symmetries one exploits through tapering come from abelian subgroups of the above point groups that describe a restriction to 2-fold symmetry. In particular, the relevant group generators of C2v ⊂ C∞v are 180∘ rotations around the bond axis, denoted C2, and vertical reflections σv. In the case of D2h ⊂ D∞h we have the same rotational symmetry C2, in addition to the group generators σh, corresponding with horizontal reflections, and the inversion symmetry i. In all, qubit tapering enables the removal of four qubits from heteronuclear molecules (two point group generators C2, σv plus spin up/down parity) and five from homonuclear molecules (three point group generators C2, σh, i plus spin up/down parity). This reduces our N2 Hamiltonian from 20 to 15 qubits without incurring any error.

The Contextual Subspace methodology is more general than Qubit Tapering. The reduced Hamiltonians produced via this technique need not preserve the spectrum of the full Hamiltonian, which makes the selection of stabilizers (one might think of these as ‘pseudo’-symmetries) that define the subsequent contextual subspace more nuanced. We motivate this selection from the MP2 wavefunction (specifically, the excitation generator) just as in the CASCI and CASSCF calculations so that the methods may be compared fairly. While the latter selects an active space informed by the MP2 natural orbitals, we maximize the ℓ1-norm of the MP2 expansion coefficients whose corresponding Pauli-terms commute with the chosen stabilizers.

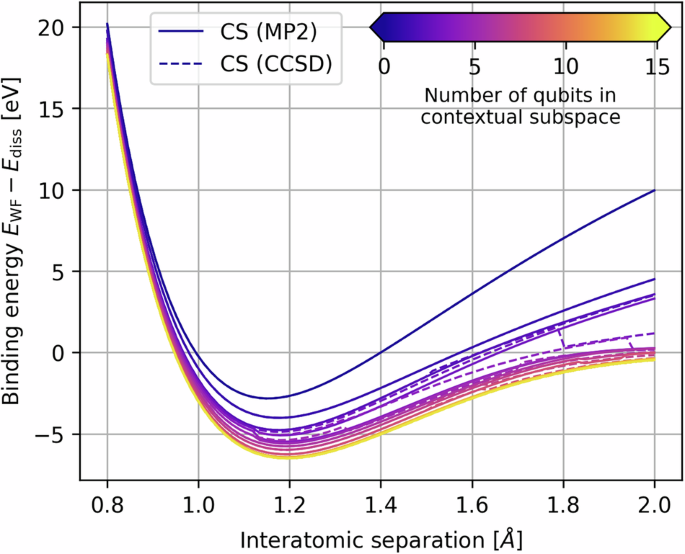

In the development of this work we also compared subspaces motivated by the CCSD excitation generators; interestingly, we found discontinuities in the resulting PEC, which may be observed in Fig. 2. This was encountered not just in the contextual subspace, but also for the CASCI/CASSCF calculations as seen in SI. Hence, we deferred to the MP2 wavefunction where such discontinuities were largely alleviated, at the cost of the absolute error generally being greater than that found in the CCSD-motivated subspaces.

In the latter we encounter a large number of discontinuities that are largely absent for the MP2-informed subspaces, although the absolute error is typically lower. At 15-qubits we recover the full space and the exact FCI energy is obtained.

We may construct contextual subspace approximations for any number of qubits between 1 and 14, given that we first taper the molecule so a contextual subspace on 15 qubits corresponds with performing full-system VQE. Not only does the Contextual Subspace method allow us to reduce the number of qubits required to represent a Pauli Hamiltonian, it also has an impact on the number of terms and ℓ1-norm of the resulting Pauli coefficients, Λ = ∑i∣hi∣, as seen in Fig. 3. This has implications on the sampling overhead required in VQE, which scales asymptotically as ({mathcal{O}}({Lambda }^{2}{epsilon }^{-2})) to achieve a desired error ϵ > 034,35.

Proportion of the number of terms and ℓ1-norm of the reduced contextual subspace Hamiltonians versus the full system at the equilibrium bond length r = 1.192Å.

It can be argued that reducing the number of qubits not only allows us to simulate larger systems on quantum hardware, but may also render such systems classically-tractable. However, the fact that Λ is additionally reduced, thus alleviating the quantum overhead further, provides a strong motivation for its use as a quantum resource reduction technique. In addition, this feature will benefit Hamiltonian simulation techniques such as qDRIFT36, where there is an explicit quadratic scaling dependence on Λ in the resulting circuit depths.

The Contextual Subspace approximation is also compatible with more advanced measurement reduction methodologies; in previous work we studied its use in combination with Unitary Partitioning18, although we did not implement it for this experiment as a unitary must be applied in-circuit to realize the measurement of each anticommuting clique37,38,39. If combined with additional techniques for reducing the number of terms in the Hamiltonian, such as Tensor Hypercontraction40, this could present a compelling quantum resource management framework.

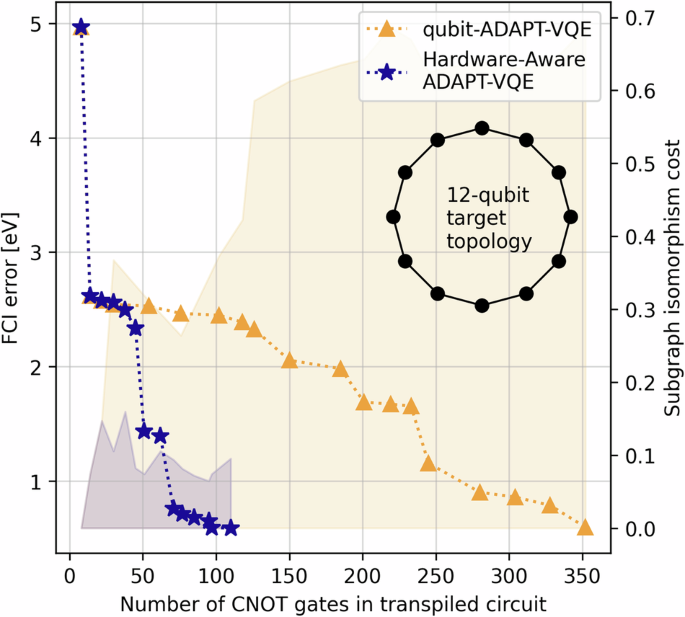

Increasing the number of qubits in the contextual subspace increases the accuracy of the method. For N2, in order to achieve algorithmic accuracy (terminology introduced in4 and taken here to mean errors within 43 meV of FCI, with chemical accuracy a common misnomer when working within minimal basis sets since it implies agreement with experimental results) throughout the full PEC under the contextual subspace approximation, we need 11/12-qubits motivated by the CCSD/MP2 wavefunctions, respectively. However, increasing the number of qubits in the contextual subspace also increases the depth of the ansatz circuit and hence exposes us to the vulnerabilities of hardware noise. In Fig. 4 of the following section we present the results of running noiseless qubit-ADAPT-VQE21,41 over a 12-qubit subspace and observe the decay of error against the number of CNOT gates in the ansatz circuit; such circuits are too deep to obtain satisfactory results on the available hardware. There is a trade-off between a sufficiently large contextual subspace to represent the problem accurately and a sufficiently shallow ansatz circuit such that the output is not overly contaminated by noise.

We show the FCI error per ADAPT cycle against the number of CNOT operations in the corresponding circuits transpiled for a closed loop of the 27-qubit Falcon topology as in Fig. 5. We compare the standard qubit-ADAPT-VQE algorithm versus our hardware-aware approach and observe considerably reduced depths. The ‘subgraph isomorphism cost’ of embedding the ansatz graph in the target is computed as s(n)/W.

We have been able to achieve algorithmic accuracy on quantum hardware in previous work for the equilibrium ground state of HCl19, where just 3-qubits were sufficient and hence a shallow ansatz was possible. A 12-qubit ansatz circuit would be too deep—and consequently too noisy—to achieve this level of accuracy on current hardware. Since algorithmic accuracy is too challenging a target for a 12-qubit simulation on real hardware, we relax this requirement. Instead we choose a contextual subspace that is sufficiently large to challenge a set of classical methods for N2. We compare against ROHF, MP2, CISD, CCSD, CCSD(T), CASCI and CASSCF for active spaces of varying size. In reproducing the PEC of N2 we find that a 5-qubit contextual subspace, while not algorithmically accurate, yields errors that do not exceed 1 eV, as shown in Fig. 1. It should be highlighted that the above classical techniques do not maintain algorithmic accuracy throughout the PEC either.

Ansatz construction

Algorithm 1

qubit-ADAPT-VQE; our hardware-aware scoring function f of Equation (3) is described in Algorithm 2.

We adopt qubit-ADAPT-VQE21,41 to build sufficiently shallow circuits for our N2 PEC experiment, with a new modification that facilitates a hardware-aware approach to adaptive circuit construction. The general ADAPT framework is provided in Algorithm 1; the central component is a pool of Pauli operators ({mathcal{P}}), from which one builds an ansatz circuit (leftvert psi rightrangle) iteratively by appending the term that maximizes some scoring function f at each step. In the standard approach we take the partial derivative at zero after appending a given pool element (Pin {mathcal{P}}), specifically

which may be evaluated either with the parameter shift rule42 or by measuring the commutator [H, P]41. By calculating the pool scores (f({mathcal{P}})) and identifying the maximal term, we extend (leftvert psi rightrangle to {e}^{itheta P}leftvert psi rightrangle) and re-optimize the ansatz parameters via regular VQE before repeating. For our particular application, we take ({mathcal{P}}) to be the set of single and double coupled-cluster excitations.

We modify the pool scoring function (2) to enforce hardware-awareness in the adaptive circuit construction, thus minimizing the number of SWAP operations incurred through transpilation. We achieve this by ranking approximate subgraph isomorphisms in the hardware topology, described by a graph ({{mathcal{G}}}_{{rm{target}}}=({{mathcal{N}}}_{{rm{target}}},{{mathcal{E}}}_{{rm{target}}})) where ({{mathcal{N}}}_{{rm{target}}}) is the set of available qubits and ({{mathcal{E}}}_{{rm{target}}}subset {{mathcal{N}}}_{{rm{target}}}^{times 2}) is the edge-set indicating that two qubits may be natively coupled via some nonlocal operation on the hardware. We define an isomorphism between two graphs ({mathcal{G}}) and ({mathcal{H}}) to be a bijective map (g:{{mathcal{N}}}_{{mathcal{G}}}mapsto {{mathcal{N}}}_{{mathcal{H}}}) such that, if ((u,v)in {{mathcal{E}}}_{{mathcal{G}}}), then ((g(u),g(v))in {{mathcal{E}}}_{{mathcal{H}}}). In other words, an isomorphism is a mapping from nodes of ({mathcal{G}}) onto nodes of ({mathcal{H}}) that preserves the adjacency structure. Furthermore, two graphs are said to be subgraph isomorphic if ({mathcal{G}}) is isomorphic to a subgraph of ({mathcal{H}}); we use the VF2++ algorithm43 as implemented in the NetworkX Python package44 for subgraph isomorphism matching.

Algorithm 2

Hardware-aware biasing function evaluation

In order to reweight the standard score assigned to a given pool operator (Pin {mathcal{P}}), we construct a weighted graph ({{mathcal{G}}}_{{rm{circuit}}}=({{mathcal{N}}}_{{rm{circuit}}},{{mathcal{E}}}_{{rm{circuit}}})) for the circuit ({e}^{itheta P}leftvert psi rightrangle) and bias with respect to a notion of distance from the nearest subgraph isomorphism, described in Algorithm 2. This works by iteratively deleting collections of qubits ({boldsymbol{n}}in {{mathcal{N}}}_{{rm{circuit}}}^{times d}) from the ansatz circuit and any associated edges in the corresponding coupling graph, terminating once a subgraph isomorphism is identified. Here, d is the search-depth, which begins at d = 0 with no qubits deleted and is incremented at each step; since the number of distinct n is ({| {{mathcal{N}}}_{{rm{circuit}}}| choose d }), we truncate at some maximum depth D and any pool operator for which no subgraph isomorphism was found with d≤D receives a score of zero. Otherwise, with the function s(n) that sums edge-weights connected to the nodes n, our new Hardware-Aware ADAPT-VQE scoring function becomes

where (W={sum }_{(u,v,w)in {{mathcal{E}}}_{{rm{circuit}}}}w) is the total sum of edge-weights and b > 0 is the biasing strength. This allows one to control the severity with which non-subgraph-isomorphic circuits are penalized. While the depth d does not explicitly appear in equation (3), since ∣n∣ = d we will have more edge-weights included in s(n) for larger depths and hence will be penalized more.

We test our new Hardware-Aware ADAPT objective function by constructing a 12-qubit contextual subspace ansatz for N2 at a stretched bond length of 2Å. In Fig. 4 we compare error against the number of CNOT gates in the transpiled circuit for our new scoring function, versus the standard qubit-ADAPT-VQE approach. For the target topology we choose a 12-qubit ring, which is found as a subgraph of the Falcon chip layout presented in Fig. 5. Transpilation is the mapping of a given circuit onto the target quantum device, which may not natively support the required entangling operations and thus expensive SWAP operations are incurred to compensate for discrepancies in the qubit connectivity45. The number of two-qubit gates required to transpile the ansatz circuit for the chosen 12-qubit ring is seen to be dramatically reduced, while maintaining similar errors compared with the hardware-agnostic approach. For fairness, both techniques were transpiled using a basic level of circuit optimization (e.g. cancellation of inverse gates).

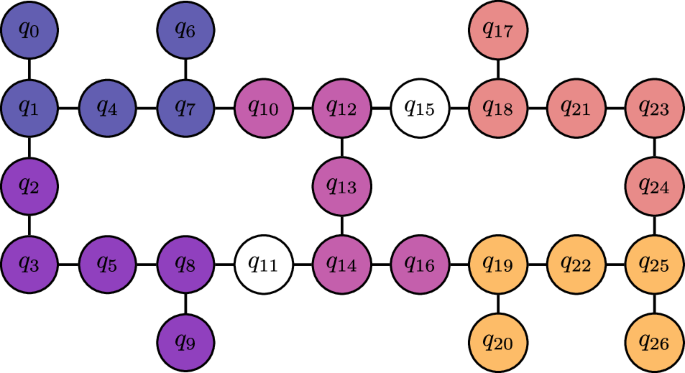

The different colours indicate replica ansatz circuits tiled across 5-qubit clusters. Not only does this increase the effective number of measurements extracted from the hardware 5-fold, but it also serves as a form of passive quantum error mitigation whereby noise is averaged over the device.

In SI we present the 5-qubit quantum circuits produced via Hardware-Aware ADAPT-VQE that were subsequently executed on IBM hardware to produce the results of the section Results. We note that the circuits presented there have received a high level of optimization in order to yield the lowest possible depth and are not the untreated circuits obtained directly from our ansatz construction routine.

Quantum error mitigation/suppression

The handling of errors in quantum computation can be categorized as suppression, mitigation or correction. The first of these is implemented close to the hardware itself and attempts to deal with flaws in the operation and control of the device. Mitigation, on the other hand, serves to reduce bias in some statistical estimator of interest by executing ensembles of circuits that have been carefully designed to exploit a feature of the quantum noise; this typically comes at the cost of increased uncertainty in the resulting expectation values. Finally, error correction schema engineer redundancy into the system, forming ‘logical qubits’ from many physical qubits such that errors may be detected and corrected on-the-fly during computation.

The error handling strategy deployed for this work deploys methods of suppression and mitigation and is motivated by the results of our previous benchmarking effort, in which we estimated the ground state energy of the HCl molecule to algorithmic accuracy (within 43 meV of FCI)19. For our N2 simulations, we adopt a combination of Dynamical Decoupling and Measurement-Error Mitigation with Zero-Noise Extrapolation. In our previous work we were also able to exploit Symmetry Verification46,47,48, however the non-({{mathbb{Z}}}_{2})-symmetries (particle and spin quantum number) reduce to the identity under our qubit subspace procedure in this case and therefore do not permit error mitigation opportunities. We also employ a circuit parallelization scheme that averages over hardware noise.

Dynamical decoupling

The original mechanism underpinning the Dynamical Decoupling (DD) error suppression technique, in which a carefully applied sequence of pulses may prolong the coherence of a spin system, predates quantum computing49. In our case, we apply periodic spin echos on idling qubits to suppress undesirable coupling between the system and its environment50,51. We use a simple uniform sequence of π-pulses to effect the decoupling; different sequences with non-uniform spacing (such as Uhrig DD52) might yield improvements.

Measurement-error mitigation

In Measurement-Error Mitigation (MEM) we apply an inverted transition matrix representing the probability of a bitflip (leftvert 0rightrangle leftrightarrow leftvert 1rightrangle) occurring for a given qubit53. More specifically, for a qubit k we evaluate a 2 × 2 matrix where element ({A}_{ij}^{(k)}) represents the probability of preparing state (leftvert irightrangle in {leftvert 0rightrangle ,leftvert 1rightrangle }) and measuring state (leftvert jrightrangle in {leftvert 0rightrangle ,leftvert 1rightrangle }). Tensoring qubits together allows us to infer the joint probabilities of preparing (leftvert {boldsymbol{i}}rightrangle in {{leftvert 0rightrangle ,leftvert 1rightrangle }}^{otimes N}) and measuring (leftvert {boldsymbol{j}}rightrangle in {{leftvert 0rightrangle ,leftvert 1rightrangle }}^{otimes N}) by taking products over the individual qubit marginals

applying A−1 to any subsequent noisy measurement results provides a rectified readout distribution.

This makes a strong assumption that quantum measurements are independent; Nation et al. demonstrate a breakdown of this technique in the presence of correlated measurements54, however constructing the fully coupled transition matrix is not in general feasible. The mthree package54 was utilized to implement MEM for our simulations. We note there are alternative techniques that do make such assumptions on the nature of the readout error, such as Twirled Readout Error Extinction (TREX), which leverages the idea of twirled measurements55.

Circuit tiling

Noise is not uniform across the qubits of a quantum processor, hence one will observe considerable variance in the results when executing the same circuit on different parts of the chip; to mitigate this, we execute many replica circuits across the chip and average over the results, which has the added benefit of increasing the effective shot-yield. We depict our circuit parallelization scheme in Fig. 5 for the 27-qubit Falcon architecture, which extends similarly to the 127-qubit Eagle device, similar to our other work56. One may view this as instance of ensemble averaging, often employed when computational models exhibit severe sensitivity to the initial conditions.

This noise-averaging process results in improved stability of the final energy estimates, especially when used in combination with DD and MEM as introduced above. This is particularly desirable if one wishes to make inferences from the behaviour of these estimates under some noise amplification procedure, which is precisely the case for ZNE, introduced in the following section. When performing regression, any uncertainty in the extrapolation data will propagate through to the inferred values and thus increase the variance of the final energy estimate. This has also been observed when applying the error mitigation technique of Randomized Compilation (RC)57 in combination with ZNE, where it is argued that small amounts of coherent noise lead to substantial errors58. While RC converts coherent error into stochastic Pauli noise by implementing the target unitary in different ways, one might draw an analogy with the parallelization scheme presented here. Indeed, due to inconsistency in the noise channels for local qubit clusters, the unitary performed in each sub-circuit will differ and may explain the stable noise amplification observed in the following section, ultimately leading to reliable extrapolation and lower variance in the final energy estimate.

Zero-noise extrapolation

Zero-Noise Extrapolation (ZNE), also referred to as Richardson Extrapolation, is a heuristic that amplifies noise arising from some selection of gates in the quantum circuit, obtaining noise-scaled energy estimates that one may use to extrapolate to the hypothetical point of ‘zero-noise’59,60,61,62,63,64,65,66,67. ZNE is sensitive to the way in which one chooses to perform the noise amplification; some works choose to amplify noise in the temporal domain through gate stretching, requiring pulse-level control of the hardware60,62, while others adopt discrete approaches such as unitary folding63 where identities I = U†U are injected into the circuit. In all cases care must be taken to ensure circuit transpilation does not perform any internal circuit optimizations that would lead to unpredictable noise scaling.

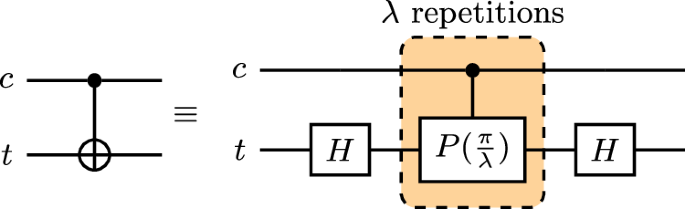

For this experiment, we adopt the strategy used in our previous work19 to decompose each CNOT operation into a product over its roots, where we may increase the number (lambda in {mathbb{N}}) of factors in the decomposition

as in Fig. 6. The control and target qubits are denoted c and t, respectively, noting also that there are Hadamard gates Hadt between consecutive CPhase gates that cancel, leaving only those at the beginning and end. Given the IBM native gateset, each CPhase gate will need to be transpiled back into a pair of CNOTs and three Rz rotations; thus, each CNOT gate is replaced with 2λ CNOT and 3λ + 2 single-qubit gates through this noise amplification procedure.

A root-product decomposition of the CNOT gate into CPhase gates, repeated application of which is counteracted with reduced rotation angles.

Previously, we experimented with a local unitary folding scheme in which we inserted CNOT pairs after each CNOT in the circuit, resulting in each being replaced with 2λ + 1 CNOT gates, compared with the 2λ encountered in our CPhase approach. In order for λ → 0 to probe the zero-CNOT-noise regime, we needed to offset the noise amplification/gain factors in the extrapolation to account for the additional + 1 CNOT of the former. By contrast, we find the CPhase decomposition to avoid the necessity of this gain offset, making for cleaner regression.

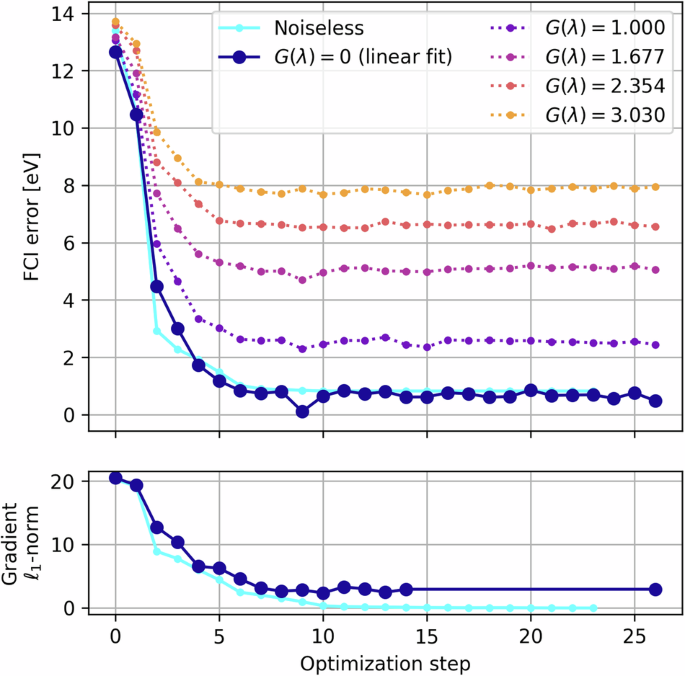

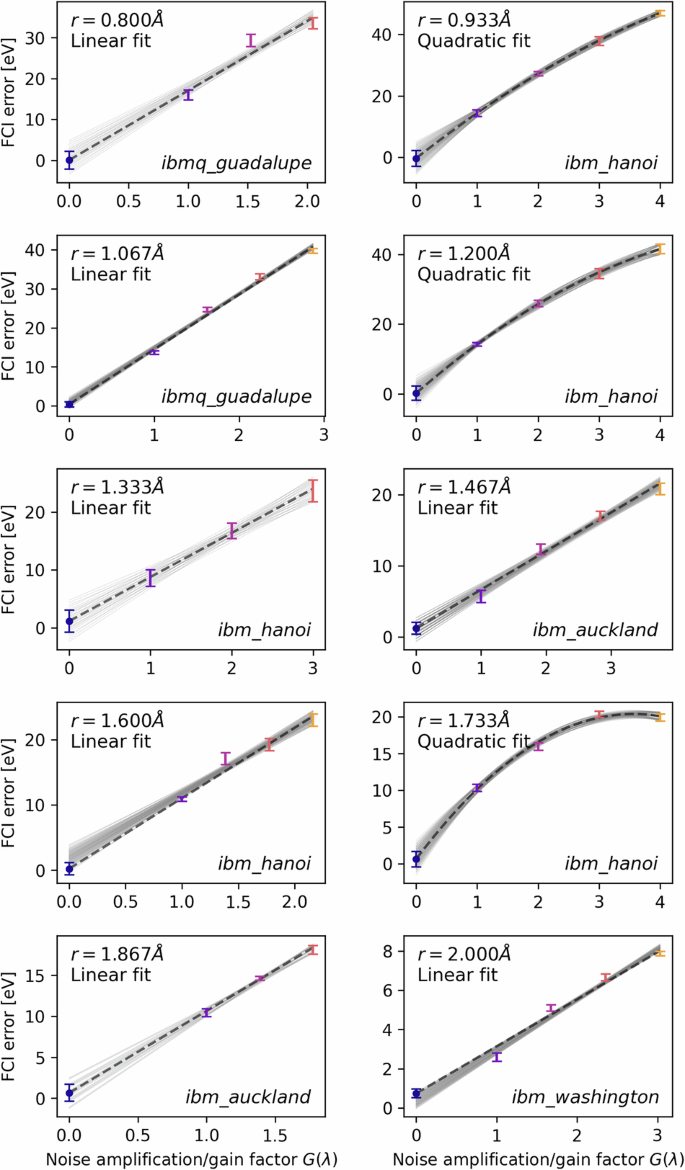

After retrieving the noise-amplified results from the quantum device, the noise amplification/gain factors G(λ) are calibrated using the one- and two-qubit gate error data extracted from the hardware at the time of execution; this is why the extrapolation data do not lie on integer values in Figs. 7 and 8. For more reliable extrapolation, we employ inverse variance weighted least squares regression (linear or quadratic), so that highly varying data points are penalized in the fitting procedure; the variances here are obtained from the converged VQE data, as opposed to the statistical bootstrapping procedure we used in our previous ZNE work19. In Fig. 7 we present a full VQE routine executed on the ibm_washington system, complete with the noise amplified data leading to the final extrapolated estimate. In Fig. 8 we present the noise extrapolation for each of the ten bond lengths simulated on the IBM hardware.

We also include a noiseless routine for comparison and note the partial derivatives converge on zero in the noiseless simulation, while they are prevented from doing so in the noisy case indicated by non-zero gradient ℓ1-norm, despite the optimizer having converged on the ground state energy.

Standard deviations are taken over the converged data in VQE in order to use weighted least squares in the linear/quadratic regression. We also plot the spread of possible extrapolation curves given the variance of each individual noise-amplified estimate. The noise amplification factors themselves are calibrated using one- and two-qubit gate error data extracted from the hardware at the time of execution.

Software and hardware implementation details

All of the conventional quantum chemistry techniques used within this work were facilitated by PySCF 68. Hamiltonian construction began with a Restricted Open-Shell Hartree-Fock calculation, before building second-quantized fermionic operators in OpenFermion69 that were subsequently mapped onto qubits via the Jordan-Wigner transformation70. The resulting operators were then converted into Symmer objects in order to leverage the included qubit subspace functionality33; we projected each Hamiltonian onto a 5-qubit subspace before running our VQE simulations.

Ansatz circuits were constructed using the Hardware-Aware ADAPT-VQE algorithm of the section Ansatz Construction, with the optimized circuits given explicitly in SI. We extracted Qubit-Wise Commuting (QWC) cliques from the Hamiltonians using graph-colouring functionality in NetworkX44, with the explicit decompositions provided in SI. We performed 5000 circuit shots for every QWC clique per expectation value calculation and the classical optimizer in each VQE routine was the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm71. Gradients were calculated in hardware via the parameter shift rule42. The simulations were executed on 15 of the available 16 qubits on Falcon r4P QPU ibmq_guadalupe (3 ansatz circuit tilings), 25 out of 27 qubits on Falcon r5.11 QPUs ibm_hanoi, ibm_auckland (5 ansatz circuit tilings) and 125 of the 127 qubits on the Eagle r1 QPU ibm_washington (25 ansatz circuit tilings). Each VQE workload was submitted to the IBM Quantum service as a Qiskit Runtime job.

Responses