Integrated photonic programmable random matrix generator with minimal active components

Introduction

The rapid advancements in photonics fabrication techniques and materials science have enabled the deployment of photonic integrated circuits compatible with telecommunication wavelengths in compact on-chip form factors1,2,3. This allows exploiting the properties of light to perform computational tasks with reduced power consumption, increased bandwidth, and improved reliability. The ability to manipulate light on chip-scale platforms has sparked a plethora of applications across various fields, including telecommunications4, optical neural networks5,6 and machine learning7, sensing8, imaging9, and quantum computing10,11. Indeed, extensive research has been devoted to deploying programmable photonic integrated circuits capable of real-time tuning. The idea of such devices was first introduced by Reck et al.12 for free-space propagation using arrays of beam splitters and phase shifters, which paved the way for compact on-chip solutions based on meshes of Mach-Zehnder interferometers (MZI)2,13,14,15,16,17, as well as recirculating meshes18,19,20, and multi-plane light conversion and multiport waveguide arrays21,22,23,24,25,26.

Recently, interlaced architectures that represent arbitrary unitary N × N matrices have been explored as alternative candidates to conventional MZI meshes23,24,25, where arrays of phase shifters and passive mixing layers are interlaced one after another. This approach intertwines N + 2 passive and N + 1 active layers of optical elements and has demonstrated high flexibility. Specifically, the passive layer responsible for mixing light propagation across all waveguides does not need to follow any specific design as long as its corresponding transfer matrix satisfies a density criterion25. The active elements lie in a layer separated from the passive one, allowing for more flexibility in the phase elements, which can be implemented through microheaters27,28, phase-change materials29, or other technologies.

Along different lines, the implementation of random unitary matrices by purely optical means has found exciting applications30, such as optical encryption in free-space settings31,32 and through metasurfaces33,34. Harnessing the random transmission matrix of light in disordered waveguide arrays allows for encoding high-dimensional images into their lower-dimensional representations9,35. This property has proven useful in random disordered fibers, where the inherent Anderson localization renders highly localized modes used for high-fidelity transport of intensity patterns and images36,37,38,39,40,41. Furthermore, random photonic devices have been shown to be an excellent resource for generating operations akin to Haar-random matrices, a fundamental task in boson sampling for quantum computing42,43.

The present work introduces a programmable, compact, and simple-to-fabricate photonic chip designed to randomize light signals injected into its input. Such a process is achieved by exploiting general interlaced architectures discussed in the literature24,25 and reducing the corresponding number of elements to an effective minimum without compromising its functionality. Indeed, numerical experiments reveal that only two active layers are required to perform the randomization process with high accuracy. Here, the output randomness is inherited from the random distributions assigned to the phase elements; the quality of the random output is evaluated by comparing it with the typical profile of white noise signals. Although the fabricated chip randomizes both the real and imaginary parts of the input signal, we present a relatively simple scheme in which the random patterns are still measurable with conventional power measurements. The chip capabilities are extended by demultiplexing large signals into smaller sizes without jeopardizing the quality of the random output. Furthermore, a specific application is introduced, where the chip can be used as an all-optical encryption device whose decryption process can be assessed through an equivalent device, the existence of which is guaranteed by the unitary nature of the randomization device.

Results

Random architecture model

Photonic integrated circuits (PICs) are rapidly becoming an attractive solution for optical computing applications. Their versatility enables the design of both programmable units25 and integrated task-specific operations44,45. Particularly, unitary architectures based on interlaced layers of passive and active optical components have become a common design solution, for they allow for the representation of arbitrary unitary matrices (universality) and have been shown to be resilient to manufacturing errors24,46. An experimental realization has been reported47, and further statistical methods for phase retrieval based on intensity measurements are discussed in the literature48.

The fundamental operational principle lies in the propagation of guided modes through waveguides, \(\mathbf{E}(\mathbf{r})=\mathcal{E}(\mathbf{r}_{\perp})e^{i(\omega t-\beta \mathbf{r}_{\parallel})}\hat{e}_{\perp}\), with \(\mathcal{E}(\mathbf{r}_{\perp})\) the normalized guided-mode amplitude, \(\mathbf{r}_{\parallel}\) and \(\mathbf{r}_{\perp}\) the position vectors in the directions parallel and perpendicular to the propagation, respectively, \(\hat{e}_{\perp}\) the unit vector in the perpendicular direction, and β the corresponding mode propagation constant49 (see Supplementary Material S1 for more details). The electric field of an N-port device composed of single-mode waveguides, such as the one illustrated in Fig. 1a, is written as the complex-valued vector \(\mathbf{x}=(x_{1},\ldots ,x_{N})\mathcal{E}(\mathbf{r}_{\perp})\), with \(x_{i}\in \mathbb{C}\) carrying information about the intensity and phase of the propagating light. Henceforth, to simplify the notation, the electric field propagating through the device is simply written as \(\mathbf{x}\equiv (x_{1},\ldots ,x_{N})\in \mathbb{C}^{N}\).

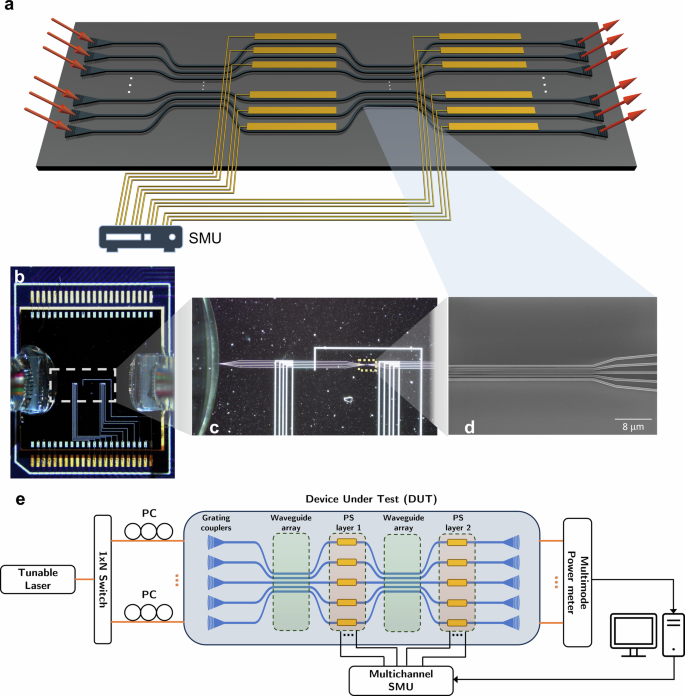

a PIC interlaced structure for M = 2 layers. The input optical signal x is fed into the PIC, and the randomization phases are programmed by the SMU controller. The processed optical output signal is z. For completeness, the fully packaged fabricated chip (b), a microscope image of the photonic circuit area (c), and a SEM capture of the waveguide array section (d) are illustrated. e Experimental setup and operation of the randomization device. Here, the programmable photonic integrated circuit performing the randomization is set as the device under test (DUT). The phase shifters are the programmable elements of the device, which are externally and individually controlled by a multichannel source measure unit (SMU). The processed light at the output grating couplers is collected through a multiport power meter.

In general, universal interlaced architectures are composed of M1 layers of passive mixing components and M2 layers of active elements24,25. Particularly, a universal N-port unitary device requires M1 = N + 2 passive and M2 = N + 1 active layers to render any arbitrary unitary optical operation. In turn, for randomization tasks, we require only that the output vectors show traces of randomness regardless of the nature of the injected input signal, and thus, not all layers might be needed. That is, we seek the minimum number of layers M1 and M2 so that the output resembles a white noise signal. Indeed, as pointed out in ref. 25, the intermediate passive layers need not take any specific form, and layers described by dense transmission matrices render the desired functionality.

In the present design, the M-layer (M1 = M2 = M) unitary interlaced structure \(\mathcal{U}\in U(N)\) is built from dense passive layers \(F(\alpha_{m})=e^{i\alpha_{m}H}\), which govern the wave evolution of light and are described by the coupled-mode theory approach50,51. Here, H is a tridiagonal matrix with components \(H_{n,n+1}=H_{n+1,n}=\kappa_{n}\) for n ∈ {1, …, N − 1}, and \(\kappa_{n}\) is the coupling parameter between neighboring waveguides. The device is operated by preparing an arbitrary input state \(\mathbf{x}\in \mathbb{C}^{N}\), the input signal, which is subsequently randomized through the unitary transformation

\[\mathbf{z}=\mathcal{U}\mathbf{x},\qquad \mathcal{U}=P^{(M)}F(\alpha_{M})\cdots P^{(1)}F(\alpha_{1}), \tag{1}\]

where \(P^{(j)}=\mathrm{diag}(e^{i\phi_{1}^{(j)}},\ldots ,e^{i\phi_{N}^{(j)}})\) are unitary diagonal matrices characterizing the programmable phase-mask layers, whereas \(\alpha_{j}\) are the coupling lengths for each waveguide array, for j ∈ {1, …, M}.

The randomization process can be achieved with high accuracy by incorporating only two active layers of phase shifters, M = 2, and no substantial improvement is observed when more layers are included (see discussion below). This effectively reduces the overall size of the final architecture, rendering a low-footprint solution that requires a minimum number of control elements and, thus, is less prone to operational errors. The coupling coefficients defining the waveguide array can be chosen as those of the DFrFT operation using the Jx lattice24,52, a homogeneous lattice53, or any random lattice that fulfills the density criterion posed by the Goldilocks principle discussed in ref. 25 (see Supplementary Materials S2 for examples of dense matrices).
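The construction described above can be sketched numerically. The snippet below is a minimal illustration under stated assumptions, not the authors' implementation: the Jx couplings are taken as \(\kappa_{n}=\tfrac{1}{2}\sqrt{n(N-n)}\), the coupling length is fixed to α = π/2, the layers are ordered with each phase mask applied after its mixing layer, and the function names are hypothetical.

```python
import numpy as np

def jx_hamiltonian(n_ports):
    """Tridiagonal H with assumed J_x couplings kappa_n = sqrt(n (N - n)) / 2."""
    n = np.arange(1, n_ports)
    kappa = 0.5 * np.sqrt(n * (n_ports - n))
    return np.diag(kappa, 1) + np.diag(kappa, -1)

def mixing_layer(hamiltonian, alpha):
    """F(alpha) = exp(i alpha H), computed from the eigendecomposition of the real symmetric H."""
    evals, evecs = np.linalg.eigh(hamiltonian)
    return (evecs * np.exp(1j * alpha * evals)) @ evecs.T

def interlaced_unitary(hamiltonian, alphas, phases):
    """U = P^(M) F(alpha_M) ... P^(1) F(alpha_1); this layer ordering is an assumption."""
    u = np.eye(hamiltonian.shape[0], dtype=complex)
    for alpha, phi in zip(alphas, phases):
        u = np.diag(np.exp(1j * phi)) @ mixing_layer(hamiltonian, alpha) @ u
    return u

rng = np.random.default_rng(0)
N, M = 5, 2                                        # 5-port device with two active layers
H = jx_hamiltonian(N)
alphas = np.full(M, np.pi / 2)                     # assumed coupling lengths
phases = rng.uniform(-np.pi, np.pi, size=(M, N))   # random phase masks
U = interlaced_unitary(H, alphas, phases)

x = np.array([1, 0, 0, 1, 0], dtype=complex)       # sparse input: light in ports 1 and 4
z = U @ x                                          # randomized complex output
```

Because every factor is unitary, the product preserves the total power of the input regardless of the phases drawn.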

Experimental setup and measurements

The PIC performing the randomization operation is fabricated on a silicon-on-insulator (SOI) platform (see Methods for details). The design relies on coupled waveguides to perform the mixing layer operation and a layer of metal heaters producing the desired phase shift using conventional thermo-optic effects28. TE grating couplers are used to externally couple the PIC to the light input source and at the output for the data collection stage. Furthermore, a mechanical polarization controller (PC) is attached to the injection fiber, which is tuned so that maximum power is coupled to the PIC. The device under test (DUT) is the randomization PIC depicted in Fig. 1a, fed by an N × 1 network switch that splits the quasi-TE0 mode into the desired inputs that encode the optical signal. The heaters producing the phase shift are electronically controlled by a multichannel source measure unit, providing independent control currents of up to 10 mA to each metal heater. Lastly, the output modes are gathered from the output grating couplers and collected at a multiport power meter.

The proposed PIC design is flexible enough to be manufactured in commercial foundries. For the current experimental run, the PIC was designed as a 5 × 5 unitary device (5-port device) in the form of a fully electrically and optically packaged chip (see Fig. 1b–d). The packaging allows for easy and minimum-error light coupling and light-detection processes. Figure 1e summarizes the current experimental setup.

Manufacturing highly dense photonic chips presents challenges due to thermal and optical crosstalk, which become significant design problems when scaling up photonic units. To address this, we reduce the required number of ports of the proposed PIC by demultiplexing the input signal into lower-dimensional vectors, which are then sequentially fed at the input ports. Each input sequence is randomized using phases drawn from either a normal or a uniform distribution. This results in independent random outputs. Finally, the sequentially randomized outputs are multiplexed back into a higher-dimensional signal. The benefit of this design choice is twofold: it helps mitigate errors and reduces the device footprint.

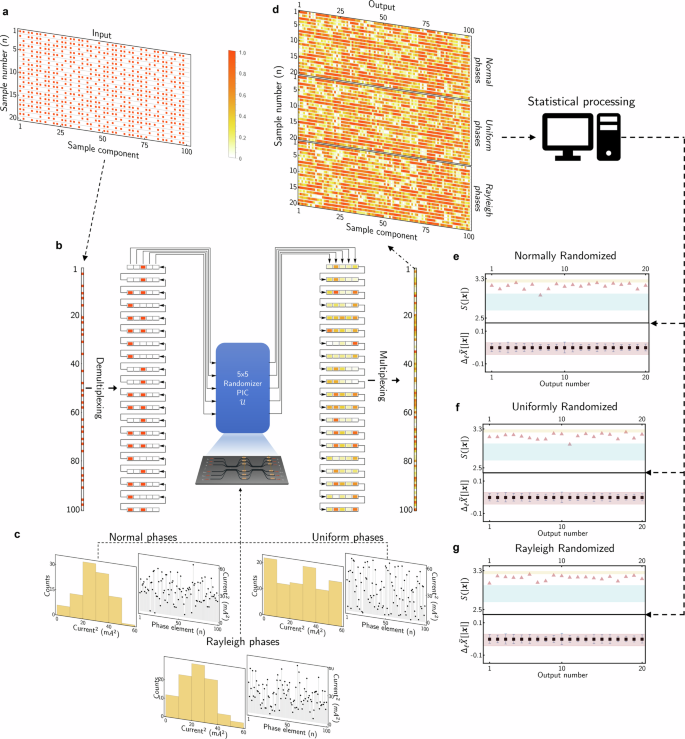

Thus, any input signal \(\mathbf{x}\in \mathbb{R}^{100}\) is demultiplexed into a sequence of twenty 5-dimensional vectors, \(\hat{\mathbf{x}}\in \mathbb{R}^{20\times 5}\). Particularly, we consider highly sparse 100-dimensional vectors, \(\mathbf{x}\in \mathbb{R}^{100}\), whose components are either zero or one so that at least one component with a value of one exists in every demultiplexed sequence. In our experiment, the zero and one values denote the cases when no light is injected and when light is coupled to the corresponding grating coupler, respectively. The distribution of zeros and ones is randomly selected for each sequence in the demultiplexed signal. Such sparse signals are chosen for two reasons: they can be implemented straightforwardly with the current setup, and they are poor random signals on their own. The latter implies that any random footprint found at the output of the PIC cannot be attributed to randomness in the input signal (see analysis below). Since the PIC output is gathered through a power meter, any phase information is washed out during power measurements. Thus, the real and imaginary parts of the complex-valued randomized output are not accessible through power detection schemes. Still, traces of randomness can be detected, since the amplitudes recovered from power measurements of complex-valued white noise (normally distributed signals) follow a Rayleigh distribution instead54.
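The demultiplexing step amounts to a reshape of the 100-dimensional signal into 20 rows of 5 ports. The following sketch illustrates this under assumptions that are not taken from the experiment: the probability `p_on` of a port being lit and the helper names are hypothetical.

```python
import numpy as np

def sparse_binary_signal(n_seq, n_ports, rng, p_on=0.3):
    """Random 0/1 signal with at least one 1 in every n_ports-long sequence.

    p_on (probability that a port is lit) is an illustrative assumption.
    """
    blocks = (rng.random((n_seq, n_ports)) < p_on).astype(int)
    rows = np.flatnonzero(blocks.sum(axis=1) == 0)
    # Switch on one randomly chosen port in every all-zero sequence.
    blocks[rows, rng.integers(0, n_ports, size=rows.size)] = 1
    return blocks.reshape(-1)

def demux(x, n_ports):
    """Split a long signal into consecutive n_ports-dimensional sequences."""
    return x.reshape(-1, n_ports)

def mux(blocks):
    """Concatenate the sequences back into one long signal."""
    return blocks.reshape(-1)

rng = np.random.default_rng(1)
x = sparse_binary_signal(20, 5, rng)   # 100-dimensional sparse input
x_hat = demux(x, 5)                    # 20 sequences fed one at a time to the 5-port PIC
x_rec = mux(x_hat)                     # multiplexing restores the original ordering
```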

The set of 20 sparse input signals used in the present experiment, the demultiplexed lower-dimensional signals, and the corresponding measurements at the PIC output are illustrated in Fig. 2a–b. The phase change produced by thermo-optic phase shifters is proportional to the temperature change, which is, in turn, proportional to the current squared or electrical power28. Thus, three experimental runs are performed, where the currents in the SMU are programmed in such a way that the corresponding current-squared values (phases) follow normal, uniform, and Rayleigh distributions (Fig. 2c). The current-squared values are randomly picked from the interval (0, 64) mA² according to each distribution. The upper current limit of 8 mA has been fixed to avoid overheating the chip, and it also corresponds to the approximate value at which a full 2π phase rotation is achieved. The pre-established set of sparse inputs \(\mathbf{x}^{(n)}\) is fed into the PIC and optically processed according to the current-squared distributions under consideration, rendering the randomized optical signal that is ultimately detected and recorded by the multiport power meter. Since the signal was initially demultiplexed, the optical output is sequentially gathered in packages of five, the total number of ports, and normalized with respect to the total power in each measurement sequence. The final signal \(\mathbf{x}_{\mathrm{out}}^{(n)}\) is then produced by multiplexing all the collected sequences and normalizing once again with respect to the maximum so that \(x_{\mathrm{out},p}^{(n)}\in (0,1]\). The resulting randomized optical signals are shown in Fig. 2d for all the different current distributions.
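The mapping from heater currents to phases and the two-stage normalization of the measured powers can be sketched as follows. This is only an illustration: the distribution parameters, the clipping used to stay inside (0, 64) mA², and the assumption that the phase is exactly linear in the current squared with no offset are all hypothetical choices, as are the function names.

```python
import numpy as np

I2_MAX = 64.0  # mA^2 (8 mA), quoted in the text as roughly a full 2*pi rotation

def sample_current_squared(kind, size, rng):
    """Draw current-squared values inside (0, I2_MAX]; parameters are assumptions."""
    if kind == "uniform":
        i2 = rng.uniform(0.0, I2_MAX, size)
    elif kind == "normal":
        i2 = rng.normal(I2_MAX / 2, I2_MAX / 6, size)
    elif kind == "rayleigh":
        i2 = rng.rayleigh(I2_MAX / 4, size)
    else:
        raise ValueError(f"unknown distribution: {kind}")
    return np.clip(i2, 1e-6, I2_MAX)  # crude truncation to the allowed interval

def to_current_and_phase(i2):
    """Heater current (mA) and phase, assuming phase is linear in I^2 with no offset."""
    return np.sqrt(i2), 2 * np.pi * i2 / I2_MAX

def normalize_output(power_blocks):
    """Normalize each 5-port sequence by its total power, multiplex, then divide by the maximum."""
    per_sequence = power_blocks / power_blocks.sum(axis=1, keepdims=True)
    flat = per_sequence.reshape(-1)
    return flat / flat.max()

rng = np.random.default_rng(2)
i2 = sample_current_squared("rayleigh", 5, rng)     # one value per heater in a layer
currents_mA, phases = to_current_and_phase(i2)
fake_powers = rng.random((20, 5)) + 0.1              # placeholder for power-meter readings
x_out = normalize_output(fake_powers)
```

The placeholder array `fake_powers` stands in for measured data and carries no physical meaning.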

a Sequences of testing random pulsed trains \(\mathbf{x}^{(p)}\in \mathbb{R}^{100}\), for p ∈ {1, …, 20}. b The latter are demultiplexed into signals \(\hat{\mathbf{x}}^{(p)}\in \mathbb{R}^{20\times 5}\), which are programmed in the N × 1 switch and injected into the PIC. This produces the randomized demultiplexed signals \(\hat{\mathbf{z}}^{(p)}\). c In this process, three ensembles of random phases are loaded into the phase shifters through the SMU, which correspondingly powers the metal heaters. Such ensembles are shown as histograms and scatter plots, highlighting the normal (left), Rayleigh (center), and uniform (right) distribution profiles. d The output processed signals from the PIC are multiplexed back to the vectors \(\mathbf{z}^{(p)}\in \mathbb{R}^{100}\). e–g These outputs are then post-processed to extract the statistical information for normally and uniformly randomized phase distributions using the entropy S[∣x∣] and autocorrelation difference ΔℓX[∣x∣] criteria. For reference purposes, the typical entropy values for normal and uniform distributions are highlighted in yellow and blue, respectively. Likewise, the typical autocorrelation values for the previous distributions are highlighted in red.

The randomness quality of \(\mathbf{x}_{\mathrm{out}}^{(n)}\) is assessed by analyzing the statistical properties of the intensity distribution. Ideally, white-noise signals are distributed according to normal distributions. Since we are limited to intensity-based measurements, the statistical analysis is restricted to positive-valued signals. We thus focus on the Rayleigh distribution for our analysis, which describes the modulus of a complex variable whose real and imaginary parts are normally distributed, a characteristic more akin to the optical signals under consideration. In turn, uniformly distributed signals are known to maximize the Shannon entropy55 and thus are a handy resource for encryption tasks. Consequently, both the Rayleigh and uniform distributions serve as benchmarks against which the randomness of the randomized optical signals can be assessed.

Autocorrelation and Shannon entropy are two complementary metrics that enable the quantification of signal randomness. Autocorrelation is particularly useful for identifying patterns within signals. Typically, random signals exhibit a flat autocorrelation function, whereas for positive-valued signals it is the derivative of the autocorrelation that tends to flatten out. Conversely, Shannon entropy estimates the uncertainty in a signal; that is, higher uncertainty translates to poorer predictability of the signal features (see Section 2d for a comprehensive analysis of these criteria). The statistical analysis of the randomized optical signals is shown in Fig. 2e, f for all the current distributions under consideration. In the latter figure, the blue-shaded and yellow-shaded areas highlight the regions where normally and uniformly distributed signals are typically found (refer to Section 2d).

The Shannon entropy values of the processed optical signals accumulate in the region between the normal and uniform distributions, with a tendency toward the uniform distribution region. Randomness is further evaluated through the analysis of the autocorrelation differences, which reveals the expected flat profile characteristic of random positive-valued distributions regardless of the current distribution used during the randomization process. Additionally, a quantile-quantile comparison between the optical signals and theoretical distributions is shown in Section S3 of Supplementary Information. The latter is analyzed for a larger dataset of 400 signals, which further reinforces the random character of the processed optical signals, with a tendency toward uniform distributions.

Randomness estimation

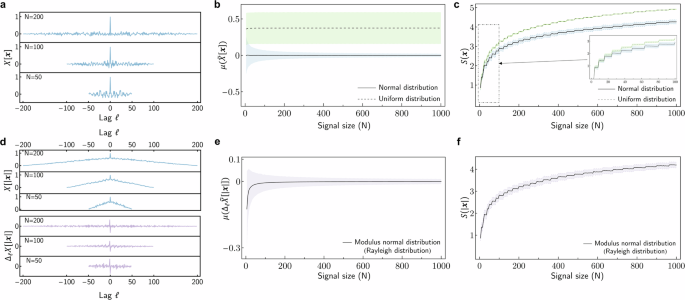

To assess the randomness of the device outputs, some criteria must be established to classify any given signal as random white noise. Although this may be accomplished through several statistical measures, here we focus on the correlation and entropy properties. The use of two different statistical properties allows for ruling out false positives inherent in either autocorrelation or entropy analysis, as discussed below. White noise signals x are known to be uncorrelated with shifted copies of themselves, where the shifts are henceforth called lags and denoted by ℓ. For continuous or infinitely sampled signals, the autocorrelation of white noise signals, \(X[\mathbf{x}]\), becomes a single impulse at the lag ℓ = 0, \(X_{\ell}[\mathbf{x}]=\delta_{\ell ,0}\), with \(\delta_{p,q}\) the Kronecker delta. In turn, for finite discrete signals, the autocorrelation is approximately flat for ℓ ≠ 0 and peaks at unity for ℓ = 0. Indeed, Fig. 3a depicts some typical profiles of \(X[\mathbf{x}]\) for normally distributed signals \(\mathbf{x}\in \mathbb{R}^{N}\).

a Typical autocorrelation profile for white noise signals. b Truncated autocorrelation criterion \(\widetilde{X}[\cdot ]\) and c Shannon entropy (S) for ensembles of normal (blue-shaded) and uniform (purple-shaded) distributions as a function of the distribution size N. d Typical autocorrelation profile (upper panel) and the corresponding finite difference Δℓ (lower panel) of the modulus of white noise signals. The corresponding truncated autocorrelation criterion (e) and Shannon entropy (f) as a function of the distribution size N. In (b–c) and (e–f), 1000 random distributions were generated for each N, from which the mean (solid or dashed line) and standard deviations (shaded area) are computed.

For \(\mathbf{x}\in \mathbb{R}^{N}\), the autocorrelation renders a vector \(\mathbf{X}[\mathbf{x}]\in \mathbb{R}^{2N-1}\), which is symmetric around the lag ℓ = 0; i.e., \(X_{-\ell}[\mathbf{x}]=X_{\ell}[\mathbf{x}]\). For ℓ = 0, the autocorrelation reduces to the squared Euclidean norm of x, \(X_{\ell =0}[\mathbf{x}]=(\mathbf{x},\mathbf{x})=\Vert \mathbf{x}\Vert^{2}\). For normalized signals, the autocorrelation therefore peaks at unity for ℓ = 0. Thus, throughout the manuscript, all signals analyzed by the autocorrelation are normalized beforehand. Given the symmetry of the autocorrelation, we focus exclusively on the positive lags ℓ > 0 and thus define the truncated autocorrelation

\[\widetilde{X}[\mathbf{x}]:=(X_{1}[\mathbf{x}],\ldots ,X_{N-1}[\mathbf{x}]), \tag{2}\]

which contains the minimum relevant statistical information to be processed.
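As a concrete illustration of these definitions, the full and truncated autocorrelations of a real signal can be computed with `numpy.correlate`. The sketch below assumes the unit-norm normalization described in the text; the function names are hypothetical.

```python
import numpy as np

def autocorrelation(x):
    """Full autocorrelation of the unit-norm signal: 2N-1 entries, lag 0 at index N-1."""
    x = np.asarray(x, dtype=float)
    x = x / np.linalg.norm(x)
    return np.correlate(x, x, mode="full")

def truncated_autocorrelation(x):
    """Positive lags only (1, ..., N-1)."""
    full = autocorrelation(x)
    n = (full.size + 1) // 2
    return full[n:]

rng = np.random.default_rng(3)
x = rng.normal(size=64)                 # finite white-noise sample
X = autocorrelation(x)                  # length 127, peak of one at the center
X_trunc = truncated_autocorrelation(x)  # length 63, approximately flat around zero
```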

Further insight into the autocorrelation and entropy estimation can be achieved by considering two specific sets of signals, i.e., signals generated from the normal and uniform distributions. The normal distribution is the desired behavior for the randomization process, which provides a benchmark to compare the outputs of the encryption device. The uniform distribution is used as a reference for the entropy analysis, as it provides the maximum bound for the Shannon entropy. To test the behavior of random signals following these distributions, we consider the ensembles \(\mathcal{S}^{(n);N}=\{\mathbf{x}^{(n);k,N}\}_{k=1}^{1000}\) and \(\mathcal{S}^{(u);N}=\{\mathbf{x}^{(u);k,N}\}_{k=1}^{1000}\) composed of 1000 normal and uniform randomly generated signals, respectively, for different dimensions, N ∈ {5, 10, …, 1000}.

The truncated autocorrelation in Eq. (2) shall approximate a flat function for white noise signals, which, for finite-dimensional signals of size N, is represented by a vector with an approximately null mean, \(\mu (\widetilde{X}[\mathbf{x}])\), and a standard deviation, \(\sigma (\widetilde{X}[\mathbf{x}])\), approaching zero as N → ∞. To illustrate the latter, we compute the mean and standard deviation of the truncated autocorrelation of every element in the ensemble as \(\mathcal{S}_{X;\mu}^{(n);N}:=\{\mu (\widetilde{X}[\mathbf{x}^{(n);k,N}])\}_{k=1}^{1000}\) and \(\mathcal{S}_{X;\sigma}^{(n);N}:=\{\sigma (\widetilde{X}[\mathbf{x}^{(n);k,N}])\}_{k=1}^{1000}\), respectively. In this form, the behavior of the ensemble for each N is revealed by computing the average of \(\mathcal{S}_{X;\mu}^{(n);N}\) to get the main trend and the average of \(\mathcal{S}_{X;\sigma}^{(n);N}\) for the deviations around the main trend. The latter is shown in the blue-shaded area in Fig. 3b, where the expected tendency of the normal distribution is evident. For completeness, the same analysis was carried out for uniformly distributed signals (green-shaded).
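The ensemble statistics described above can be reproduced in a few lines. The sketch below is a reduced version of that procedure under stated assumptions: it uses 200 signals per size instead of 1000 to keep the run time short, only three sizes N, and hypothetical function names.

```python
import numpy as np

def truncated_autocorrelation(x):
    """Positive-lag autocorrelation of the unit-norm signal."""
    x = np.asarray(x, dtype=float)
    x = x / np.linalg.norm(x)
    return np.correlate(x, x, mode="full")[x.size:]

def ensemble_stats(n, n_signals, rng):
    """Ensemble averages of the mean and standard deviation of the truncated autocorrelation."""
    means, stds = [], []
    for _ in range(n_signals):
        xt = truncated_autocorrelation(rng.normal(size=n))
        means.append(xt.mean())
        stds.append(xt.std())
    return float(np.mean(means)), float(np.mean(stds))

rng = np.random.default_rng(4)
# Maps each size N to (average mean, average standard deviation).
stats = {n: ensemble_stats(n, 200, rng) for n in (10, 100, 1000)}
for n, (mu, sigma) in stats.items():
    print(f"N={n:5d}  mean={mu:+.4f}  std={sigma:.4f}")
```

In this sketch the average standard deviation shrinks as N grows, in line with the flat-autocorrelation limit quoted in the text.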

In turn, the Shannon entropy S(x) of a vector x provides a notion of uncertainty for probability distributions (see Methods section). Indeed, although a higher entropy does not necessarily imply higher randomness, one can still define a threshold for the entropy to identify a white-noise signal. For instance, the uniform distribution possesses the highest uncertainty, and thus maximum entropy, among all distributions with compact support55. On the other hand, for Kronecker-delta-like distributions, the entropy is minimum (null) as certainty is absolute. Thus, the entropy of a given white noise signal shall be lower than that of uniform distributions.
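The two limiting cases mentioned above can be checked with a simple entropy estimator. The exact definition used in the paper is given in its Methods section and is not reproduced here; the sketch below assumes one common convention, in which the normalized moduli \(p_{i}=|x_{i}|/\sum_{j}|x_{j}|\) are treated as a discrete probability distribution.

```python
import numpy as np

def shannon_entropy(x):
    """S = -sum p_i log p_i with p_i = |x_i| / sum_j |x_j| (an assumed convention)."""
    p = np.abs(np.asarray(x))
    p = p / p.sum()
    p = p[p > 0]                      # 0 * log(0) is taken as 0
    return float(-np.sum(p * np.log(p)))

n = 100
delta = np.zeros(n)
delta[20] = 1.0                       # single unit pulse: full certainty
flat = np.ones(n)                     # equal weights: maximal uncertainty

rng = np.random.default_rng(5)
noise = rng.normal(size=n)            # finite white-noise sample

s_delta = shannon_entropy(delta)      # lower bound
s_flat = shannon_entropy(flat)        # upper bound, log(n)
s_noise = shannon_entropy(noise)      # lies between the two bounds
```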

By using the previously introduced random ensembles of normal (\(\mathcal{S}^{(n);N}\)) and uniform (\(\mathcal{S}^{(u);N}\)) distributions, we can perform a statistical analysis based on the mean and standard deviation of the entropy for each ensemble across different signal sizes N. These results are depicted in Fig. 3c, where the solid and dashed curves denote the mean for normal and uniform ensembles, respectively. The shaded areas represent the corresponding standard deviations from the mean value for each distribution. The mean entropy is higher for uniform distributions, which is expected, as elements uniformly distributed are all equally likely and possess larger uncertainty.

Thus, a given signal x is said to be white noise if the mean, \(\mu (\widetilde{X}[\mathbf{x}])\), and standard deviation, \(\sigma (\widetilde{X}[\mathbf{x}])\), of the truncated autocorrelation, as well as the entropy S(x), all lie in the normal distribution regions depicted in Fig. 3b, c for the corresponding size N. Note that if x = (0, …, 1, …, 0), it follows that \(\mu (\widetilde{X}[\mathbf{x}])=\sigma (\widetilde{X}[\mathbf{x}])=0\), which lies in the white-noise region even though x is clearly not a random signal. For this reason, and to rule out false positives, both conditions are enforced before drawing conclusions about the randomness of the signal in question. Note also that, for N ⪅ 15, the regions for normal and uniform distributions are indistinguishable under both the entropy and autocorrelation criteria; thus, white noise is challenging to assess for relatively small signals.

Since the PIC produces complex-valued optical outputs, the white noise criteria should in principle be applied to the real and imaginary parts. In the current experimental setup, power measurements are gathered at the output, corresponding to the modulus squared of the complex electric field, and we thus apply an equivalent criterion to the power. For simplicity and without loss of generality, we focus on the modulus ∣x∣. It is known that if the real and imaginary parts of x are normally distributed with mean zero and standard deviation one, the elements of \(|\mathbf{x}|:=(|x_{1}|,\ldots ,|x_{N}|)\) are distributed according to the Rayleigh distribution with scale parameter one54 (see the upper panel in Fig. 3d). From this, the autocorrelation of ∣x∣ becomes approximately linear in ∣ℓ∣ on each side of ℓ = 0. Thus, the finite difference of the truncated autocorrelation, \(\Delta_{\ell}\widetilde{X}[|\mathbf{x}|]:=(X_{2}-X_{1},\ldots ,X_{N-1}-X_{N-2})\), also renders a flat distribution for ℓ > 0 (see the lower panel in Fig. 3d). In this form, an equivalent criterion can be introduced for ∣x∣ based on \(\Delta_{\ell}\widetilde{X}[|\mathbf{x}|]\) and the entropy S(∣x∣), which are respectively depicted in Fig. 3e–f. The analysis for the modulus of uniform distributions was excluded in the latter figure, as elements of such a distribution are already positive numbers.
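Both statements, the Rayleigh statistics of the modulus and the flattening of the finite difference, can be checked numerically. The sketch below is illustrative only: the sample sizes and the helper name are arbitrary choices, and it uses the standard facts that a Rayleigh variable with unit scale has mean \(\sqrt{\pi /2}\) and second moment 2.

```python
import numpy as np

def truncated_autocorrelation(x):
    """Positive-lag autocorrelation of the unit-norm signal."""
    x = np.asarray(x, dtype=float)
    x = x / np.linalg.norm(x)
    return np.correlate(x, x, mode="full")[x.size:]

rng = np.random.default_rng(6)

# Complex white noise: real and imaginary parts are standard normal.
n_samples = 200_000
x = rng.normal(size=n_samples) + 1j * rng.normal(size=n_samples)
modulus = np.abs(x)                      # Rayleigh distributed with scale one
mean_modulus = modulus.mean()            # close to sqrt(pi / 2)
mean_power = (modulus ** 2).mean()       # close to 2

# Finite difference of the truncated autocorrelation for a shorter record.
n = 1000
xt = truncated_autocorrelation(modulus[:n])
delta = np.diff(xt)                      # (X_2 - X_1, ..., X_{N-1} - X_{N-2})
slope = delta.mean()                     # roughly -pi / (4 n): a small constant offset
```

The near-constant negative slope reflects the linear decay of the autocorrelation of a positive-valued signal; the fluctuations of `delta` around it play the role of the flat profile used in the criterion.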

Device randomness and encryption capabilities

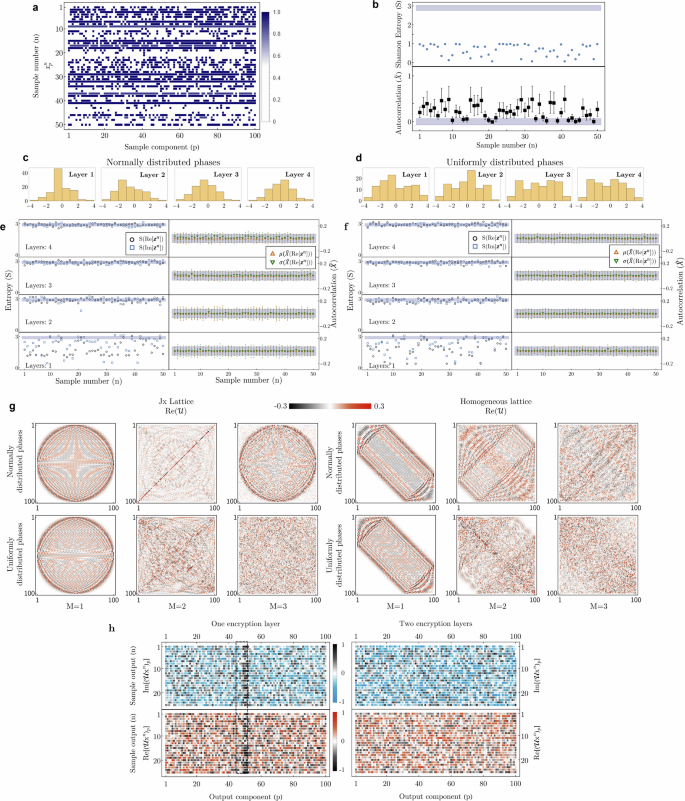

The randomization capabilities of the proposed PIC are tested by first generating a set of input samples \(\{\widetilde{\mathbf{x}}^{n}\}_{n=1}^{50}\), where each sample \(\widetilde{\mathbf{x}}^{n}\in \mathbb{R}^{100}\) is a sparse signal generated from a sequence of randomly placed unit pulses \(\delta_{k,p}\), with \(\delta_{k,p}\) the Kronecker delta and k, p ∈ {1, …, N = 100} (see Fig. 4a). Despite the randomness in the generation of the sparse input signals, they do not show any trace of white-noise behavior. This is verified by testing the entropy and truncated autocorrelation criteria, as shown in Fig. 4b, where the regions where white noise is expected (shaded areas) are highlighted. The entropy of every input sample lies below the expected values for white noise, whereas the mean and standard deviation of the truncated autocorrelation deviate from the expected flat distribution for white noise in most cases.

a Set of 50 randomized pulsed sample signals \(\widetilde{\mathbf{x}}^{n}\). b The corresponding values for the Shannon entropy (upper panel) and autocorrelation bars (lower panel). The shaded area denotes the region where white noise is expected. c–d Histograms of randomly generated phase shifters (encryption keys) taken from the normal (c) and uniform (d) distributions in the interval \((-\pi ,\pi ]\). e–f Entropy and truncated autocorrelation criteria for the samples randomized using normally (e) and uniformly (f) distributed keys. The random process uses M = 1, 2, 3, 4 random-phase layers. g Real part of the transmission matrix \(\mathcal{U}\) in Eq. (1) for M = 1, 2, 3 layers and considering normally (upper panel) and uniformly (lower panel) distributed phases. For illustration, the Jx and homogeneous lattice were used as the mixing layers F. h Real and imaginary parts of the first 25 encrypted sample signals using normal keys combined with one (left) and two (right) encryption layers.

Therefore, a signal is deemed white noise if both the entropy and the truncated autocorrelation lie within the corresponding shaded regions. The need for both criteria simultaneously is best illustrated by sample No. 21, which has a low entropy but a perfectly flat autocorrelation. This is because sample No. 21 is a single unit pulse, whose autocorrelation is easily shown to be flat; the signal is nevertheless not white noise, and the same holds for other samples with a similar pattern. Thus, given that none of the samples fulfills the randomness criterion, we rule out the possibility that any trace of randomness eventually found at the PIC output is due to intrinsic randomness in the generation of the input samples.

For the numerical tests performed in this section, the phase elements in each layer of the architecture are independently generated from either a normal or uniform distribution bounded to the interval (−π, π). The corresponding histograms of the random phases used in the encryption process are illustrated in Fig. 4c–d for up to four different layers. The latter allows analyzing the encryption capabilities of the output processed signals \(\widetilde{\mathbf{z}}^{n}=\mathcal{U}\widetilde{\mathbf{x}}^{n}\) by inspecting the white noise behavior. To this end, the entropy and truncated autocorrelation are calculated for each sample output \(\widetilde{\mathbf{z}}^{n}\) using both normal and uniform phase distributions as encryption keys, as illustrated in Fig. 4e–f. Here, the numerical simulations are run considering M = 1, 2, 3, 4 encryption layers to showcase the effects of such added layers. Indeed, the entropy analysis shows that one encryption layer is insufficient to randomize all the input samples, even though the autocorrelation shows an almost flat distribution in each sample. We thus rule out the device with only one phase layer as a potential randomization device.

In turn, when two phase layers are considered, the output of each sample signal produces higher entropy values, with only a few lying outside the region of white noise. Interestingly, the autocorrelation shows a higher standard deviation for outputs encrypted with normally distributed phases than for those encrypted with uniform keys. For more layers (M > 2), both the entropy and autocorrelation criteria show no significant improvement as compared to M = 2 layers. This numerical evidence allows for reducing the PIC size to M = 2 layers without impacting random performance in any significant way. For completeness, the real part of the transfer matrices \(\mathcal{U}\) is depicted in Fig. 4g when sweeping the number of layers M and using the Jx and homogeneous lattices as the passive mixing layers F. In analogy to the previous analysis, normally (upper panels) and uniformly (lower panels) distributed phases are also used here. It is clear that M = 1 layer produces a transfer matrix resembling the original mixing layer F (see Supplementary Material S1), whereas M = 2 layers wash out any such pattern. In both cases, the transfer matrices associated with uniformly distributed phases show a better random pattern than the normally distributed case. Thus, when operated with uniformly distributed phases, the PIC produces a better random output.

The randomization PIC can be further exploited to operate as an encryption device. This is done by treating the input signal as the vector to be encrypted, the phase distribution as a set of encryption keys, and the randomized output as the encrypted vector. In this procedure, the phase distribution has to be recorded and stored for subsequent decryption tasks. Indeed, the encryption process can be inverted, as the transformation operator \(\mathcal{U}\) is unitary. Thus, the encrypted signal \(\mathbf{x}_{\mathrm{out}}\) can be cast back to its original form either by injecting it into the inverse operator \(\mathcal{U}^{\dagger}\) or by reversing the device ports and using the conjugated phases. This inversion can be done only if the original sets of phases \(\phi_{n}^{(m)}\) used to randomize the input signal are known. For more details on the inversion process, see Supplementary Materials S2.
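The encryption and decryption round trip can be illustrated numerically. The sketch below is a simplified model, not the authors' code: it assumes Jx couplings \(\kappa_{n}=\tfrac{1}{2}\sqrt{n(N-n)}\), a coupling length α = π/2, phase masks applied after each mixing layer, and hypothetical function names. Decryption is done here by applying \(\mathcal{U}^{\dagger}\) directly; the port-reversal scheme is not modeled.

```python
import numpy as np

def jx_mixing_layer(n_ports, alpha):
    """F(alpha) = exp(i alpha H) for a tridiagonal H with assumed J_x couplings."""
    n = np.arange(1, n_ports)
    kappa = 0.5 * np.sqrt(n * (n_ports - n))
    h = np.diag(kappa, 1) + np.diag(kappa, -1)
    evals, evecs = np.linalg.eigh(h)
    return (evecs * np.exp(1j * alpha * evals)) @ evecs.T

def randomizer(keys, alpha=np.pi / 2):
    """Unitary built from one phase mask per row of `keys` (assumed layer ordering)."""
    n_ports = keys.shape[1]
    f = jx_mixing_layer(n_ports, alpha)
    u = np.eye(n_ports, dtype=complex)
    for phi in keys:
        u = np.diag(np.exp(1j * phi)) @ f @ u
    return u

rng = np.random.default_rng(7)
N, M = 100, 2
keys = rng.uniform(-np.pi, np.pi, size=(M, N))            # encryption keys
x = (rng.random(N) < 0.1).astype(float)                   # sparse pulse train
x[0] = 1.0                                                # guarantee a nonzero input

U = randomizer(keys)
z = U @ x                                                 # encrypted signal
x_back = U.conj().T @ z                                   # decryption with the right keys

wrong_keys = rng.uniform(-np.pi, np.pi, size=(M, N))
x_wrong = randomizer(wrong_keys).conj().T @ z             # decryption with wrong keys
```

With the correct keys the input is recovered up to numerical precision, whereas an independent set of keys returns another scrambled vector.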

The real and imaginary parts of the first 25 normally encrypted samples are shown in Fig. 4g for one and two encryption layers. Here, one can corroborate that one-layer encryption produces signals whose real and imaginary parts tend to pile up around the middle signal component 50 (dashed rectangle in Fig. 4g), as predicted from the entropy analysis. Indeed, such a tendency vanishes when the second encryption layer is added, producing signals spread across all the components. It is worth remarking that, for power measurements, the second phase layer in the architecture in Fig. 1 does not modify the readout at the power detectors. Thus, we can disregard the latter when performing power measurements. Nevertheless, the proposed PIC is flexible and compatible with phase measurement if the input and data collection stages of the present experimental run are replaced by an optical vector analyzer.

Random passive layers

Although the previous construction has shown a compact encryption device that can be scaled down to two phase layers without jeopardizing the encryption capabilities, it is always desirable to design a circuit with fewer elements. So far, the encryption is stored in the phase elements, whereas the waveguide array is fixed as a well-patterned unitary matrix. Thus, the device randomness can be enhanced by adding disorder into the waveguide arrays. The waveguides cannot be tuned once manufactured, but their pattern strongly affects the output. It has been shown in ref. 25 that random matrices generated from the Haar measure serve as passive layers in universal unitary interlaced architectures. The latter means that such matrices are dense enough to shuffle the elements of an input vector and render it into an arbitrary new one, provided that enough layers are available. For the encryption tasks, the two-layer encryption architecture should work when operated with random Haar matrices instead of a predefined lattice model, provided that the former matrices are dense.

The additional random element, namely the passive unitary layer F, is expected to increase the potential randomness at the encryption device output. To analyze the latter, we add the random symmetric deformation R to the Jx Hamiltonian H so that the perturbed encryption device \(\mathcal{U}(\delta)\) and the evolution through the perturbed waveguide array F(δ) read, respectively, as

where δ is the perturbation strength parameter, and \(R\in\mathbb{C}^{N\times N}\) is a random matrix with elements taken from the normal distribution with mean μ = 0 and standard deviation σ = 1; i.e., \(\mathcal{N}(\mu=0,\sigma=1)\). Without loss of generality, the perturbation strength is considered as δ > 0. If the strength parameter δ ≪ max(κn), the deformation can be considered as a perturbation of the original Jx lattice. For larger δ, the overall effect of R will overcome that of Jx, rendering a random matrix. This is handy, as we can study the effects of small perturbations on the waveguide array and also analyze the encryption capabilities of the device when the passive element is random in nature.

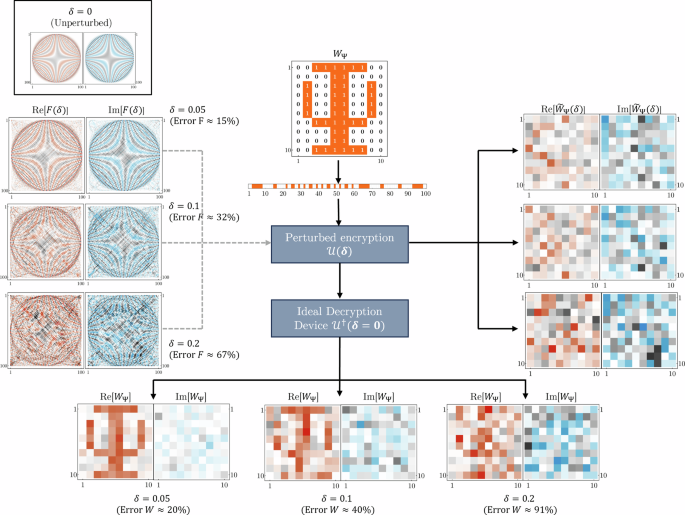

To measure the overall effect of the perturbation parameter δ, it is more convenient to compute the percentage error introduced to F(δ) with respect to the ideal Jx lattice; i.e., \(E_{F}(\delta)=\left(\parallel F(\delta)-F\parallel/\parallel F\parallel\right)\times 100\%\). By considering 100 random perturbations for each δ, one finds that, on average, the perturbations δ = 0.05, 0.1, 0.2 induce errors on F of around EF = 15%, 32%, 67%, respectively. The overall effect of such perturbations on the real and imaginary parts of F(δ) is shown in Fig. 5 (left panel). To test the effects of such perturbations on the encryption and decryption scheme, we consider a multi-stage setup. First, a 10 × 10 pixel image is reshaped into a one-dimensional vector with 100 components, which is encrypted using Eq. (3) for δ = 0.05, 0.1, 0.2, with N = 100. The encrypted vector is then decrypted using the same keys through the unperturbed decryption device \(\mathcal{U}_{enc}^{\dagger}\). Indeed, the original source image is recovered when encrypted using δ = 0, whereas deviations are expected for δ ≠ 0. For δ ≠ 0, the decrypted images show an error due to the defects on the F(δ) layers. For instance, for δ = 0.05 (EF ≈ 15%), the error in decrypting the image is approximately \(E_{\widetilde{W}_{\Psi}}\approx 20\%\). Figure 5 (lower panel) shows the real and imaginary parts of the decrypted images, where it is clear that, for δ = 0.05, the real part still resembles the source image and the large error is due to the imaginary part components. In turn, for δ ≥ 0.1, the source image can no longer be distinguished in the real part of the decrypted images.
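The percentage error of the perturbed passive layer can be estimated numerically along the following lines. This is only a sketch under stated assumptions: the coupling profile in `jx_hamiltonian`, the propagation length π/2, the real symmetric form of R, and the Frobenius norm are all choices made here for illustration, and the function names are hypothetical. The printed values are therefore not expected to match the figures quoted above.

```python
import numpy as np

def jx_hamiltonian(n):
    """Tridiagonal Jx-type coupling matrix, kappa_k = sqrt(k (n - k)) / 2 (assumed form)."""
    k = np.arange(1, n)
    kappa = 0.5 * np.sqrt(k * (n - k))
    return np.diag(kappa, 1) + np.diag(kappa, -1)

def evolve(H, length=np.pi / 2):
    """Unitary exp(-i H length) for a real symmetric H, via its eigendecomposition."""
    w, V = np.linalg.eigh(H)
    return (V * np.exp(-1j * w * length)) @ V.conj().T

def percent_error(F_pert, F_ideal):
    """Relative deviation in percent; np.linalg.norm defaults to the Frobenius norm."""
    return 100 * np.linalg.norm(F_pert - F_ideal) / np.linalg.norm(F_ideal)

rng = np.random.default_rng(1)
N = 100
H = jx_hamiltonian(N)
F0 = evolve(H)                        # unperturbed passive layer

mean_err = {}
for delta in (0.05, 0.1, 0.2):
    errs = []
    for _ in range(20):               # fewer realizations than in the text, for speed
        G = rng.standard_normal((N, N))
        R = (G + G.T) / 2             # real symmetric random deformation (assumption)
        errs.append(percent_error(evolve(H + delta * R), F0))
    mean_err[delta] = float(np.mean(errs))
    print(f"delta = {delta}: mean error = {mean_err[delta]:.1f} %")
```

Because H + δR stays real symmetric, each perturbed layer remains unitary, so the error reflects only the change in the mixing pattern and not any loss.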

(Left panel) Real and imaginary parts of the perturbed DFrFT matrix F(δ) for δ = 0.05, 0.1, 0.2. (Top panel) Testing image W used for encryption with the perturbed DFrFT F(δ). (Right panel) The corresponding encrypted images. (Bottom panel) Decrypted images using an ideal decryption device \(\mathcal{U}^{\dagger}(\delta=0)\).

Discussion

The randomization PIC has been tested under the proposed demultiplexing scheme, showing the expected performance. Among the potential issues present in the experimental run, the thermal heaters might induce undesired effects due to thermal cross-talk and thermal stabilization. The first effect is not relevant, as the phases are randomly drawn from distributions, and thermal cross-talk simply contributes to the overall random pattern. Nevertheless, a given set of pre-programmed phases will reproduce the same behavior in the PIC. This holds as long as thermal stabilization is reached, which is the most critical factor for reproducibility. Thus, the experimental data was gathered after allowing a long enough time window τ between measurements after powering up all the metal heaters. The proposed PIC is flexible enough to be scaled up to a larger number of channels if so required, the design of which would only require that the transfer matrix of the passive mixing layer F fulfills the required density criterion25.

While a single layer of MZIs can be used to independently modulate the amplitude and phase of optical signals in each channel, this approach inevitably leads to power losses through the leakage channel of the MZI, a requirement to produce amplitude modulation. The proposed PIC design overcomes this issue due to the unitary nature of the waveguide arrays used as the passive mixing layers, which produce the required interference that ultimately steers the amplitude modulation without resorting to lossy solutions. This renders a lossless and relatively compact design that depends only on two layers of active elements regardless of the size N of the optical signal.

The proposed PIC successfully demonstrated the capability to generate the necessary random pattern and encryption features using a two-layer design. Although adding a third layer does not significantly improve the white-noise properties of the encrypted signals, the extra key combinations used in the encryption process make the output more difficult to reverse-engineer without prior knowledge of the keys. In this regard, the proposed design can be adjusted depending on whether compactness or security is the final goal. There are reports in the literature of all-optical encryption devices in free-space configurations using random phase masks31,56. The latter include a two-lens configuration combined with two statistically independent white-noise phase planes, creating an encrypted image. The device introduced in the present work provides an equivalent, compact, on-chip solution, which can be further exploited as an optical image encryption technique by following a demultiplexing procedure similar to the one discussed above.

The measurements presented in this work mainly focus on power detection, but this is due to limitations in the experimental setup rather than the performance of the PIC itself. In fact, the proposed PIC is versatile and supports phase measurement if an optical vector analyzer is implemented or further interferometry is performed to extract the output phases. Furthermore, the proposed device can work as a versatile and controllable platform for investigating equivalent random and disordered wave systems36,37, where the disorder can be tuned in real time to induce the desired effects. Recent studies have demonstrated that random photonic devices can serve as an effective tool for producing operations equivalent to Haar-random matrices. This is a crucial requirement for boson sampling in quantum computing tasks42,43. The latter is achieved by changing to other material platforms, such as silicon nitride (Si3N4), which are more suitable for single-photon transport. In this regard, the intrinsic nonlinearity of the waveguide core can induce photon entanglement across a waveguide array57.

Methods

Material platform

The randomization operation in the photonic integrated circuit (PIC) is carried out using a silicon-on-silica platform. A passive layer, denoted as F(α), is implemented using waveguide arrays that, based on the coupled-mode theory, facilitate the unitary wave evolution responsible for mixing a single excitation channel across all the waveguides. The waveguides are constructed with a silicon core (Si) surrounded by a silica cladding (SiO2) with refractive indices of nSi = 3.47 and nSiO2 = 1.4711 at room temperature (293 K). In terms of geometry, we have a waveguide with a transverse rectangular shape featuring 500 nm width and 220 nm thickness. This configuration enables the waveguide to support a fundamental quasi-TE0 mode and a quasi-TM mode when operated at 1550 nm wavelengths. The PIC is specifically designed to operate on the quasi-TE0 mode, and 8-degree TE grating couplers are employed to effectively couple the right mode from the injection fiber into the PIC.

For the 5-channel PIC, the waveguide array is designed to exhibit symmetry around the middle waveguide. The spacing between the outer-most and middle waveguides is 233 nm and 210 nm, respectively, whereas the coupling length is set to 62 μm. The phase shifters are implemented using Ti/W alloy as heaters and are connected to the probing pads using bi-layer TiW/Al electrical traces. The probing pads are wire-bonded to a PCB for electrical access to the phase shifters.

Entropy and autocorrelation estimation

While the current experimental setup is designed exclusively for capturing power measurements, the suggested PIC can acquire phase information by adjusting the data collection stage. This would allow for the collection of complex-valued signals, requiring the application of the white-noise criterion to both the real and imaginary parts, as well as the modulus of the signal, such as the case presented in the main text.

In particular, the ℓ-th component (lag) of the autocorrelation X[x] of a signal x is defined as Xℓ[x] ≔ (Tℓx, x), with Tℓ the translation operator, (x, y) the Euclidean inner product in \(\mathbb{R}^{N}\), and ℓ ∈ {−(N − 1), …, N − 1} the position of the lagged signal. In turn, to compute the Shannon entropy of either component of the signal, \(\mathbf{x}\in\mathbb{R}^{N}\), it is first required to extract and normalize the related histogram \(\{p_{j}\}_{j=1}^{M}\) using \(M=\lfloor\sqrt{N}\rfloor\) bins. This yields the probability distribution from which the Shannon entropy is computed.
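The two estimators can be sketched as follows. The sketch assumes a zero-padded (non-circular) translation operator and a base-2 logarithm in the entropy, neither of which is fixed by the description above; the function names are hypothetical.

```python
import numpy as np

def autocorrelation(x):
    """Return the lags l = -(N-1), ..., N-1 and X_l[x] = (T_l x, x) with a zero-padded shift."""
    x = np.asarray(x, dtype=float)
    n = x.size
    lags = np.arange(-(n - 1), n)
    return lags, np.correlate(x, x, mode="full")

def shannon_entropy(x):
    """Histogram-based Shannon entropy using floor(sqrt(N)) bins (log base 2 assumed)."""
    x = np.asarray(x, dtype=float)
    n_bins = int(np.floor(np.sqrt(x.size)))
    counts, _ = np.histogram(x, bins=n_bins)
    p = counts / counts.sum()         # normalized histogram
    p = p[p > 0]                      # empty bins contribute nothing to the sum
    return -np.sum(p * np.log2(p))

rng = np.random.default_rng(2)
x = rng.standard_normal(100)          # stand-in for one real-valued output signal
lags, acf = autocorrelation(x)
print("zero-lag value:", acf[lags == 0][0])
print("entropy (bits):", shannon_entropy(x))
```

For a complex-valued output, the same functions would be applied separately to the real part, the imaginary part, and the modulus.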

Noise levels

The thermal noise (Johnson-Nyquist noise) generated in resistors represents an unavoidable noise source that could impact the operation of the metal heaters used for phase control. To obtain some insight into the effects of such noise, we consider the RMS thermal noise power for DC signals58, Pn,RMS = 4kBTΔf, where kB stands for the Boltzmann constant, T the absolute resistor temperature, and Δf the bandwidth over which the noise is sampled. In turn, the phase change Δϕ produced in the waveguides is proportional to the power absorbed by the heater Pheater; that is, Δϕ = αPheater with α ≈ 0.12 rad/mW estimated from experimental measurements. From the latter, the phase error produced by thermal noise can be estimated as Δϕthermal = 1.92 × 10⁻⁹ rad, where a 1 GHz sampling bandwidth was used. Thus, these thermal fluctuations ultimately produce negligible effects on the accuracy of the phase control produced through the metal heaters.
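The arithmetic behind this estimate can be checked with a few lines. The resistor temperature is not stated above; T = 290 K is assumed here, and the variable names are hypothetical.

```python
K_B = 1.380649e-23        # Boltzmann constant, J/K
T_RESISTOR = 290.0        # absolute resistor temperature in K (assumed value)
BANDWIDTH = 1e9           # sampling bandwidth in Hz, as quoted in the text
ALPHA = 0.12              # phase change per absorbed heater power, rad/mW

# RMS thermal noise power, P = 4 k_B T df, converted from W to mW.
p_noise_mw = 4 * K_B * T_RESISTOR * BANDWIDTH * 1e3

# Phase error produced if this noise power is absorbed by the heater.
dphi_thermal = ALPHA * p_noise_mw

print(f"noise power: {p_noise_mw:.3e} mW")
print(f"phase error: {dphi_thermal:.2e} rad")
```

Under this temperature assumption the phase error comes out at about 1.92 × 10⁻⁹ rad; a room temperature of 293 K changes only the third significant figure.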

In turn, the source is a tunable semiconductor laser (Santec TSL-570) operating at a wavelength of 1550 nm with an output power of 20 mW. This laser has a fairly narrow linewidth of Δf = 200 kHz (equivalent to Δλ = λ²Δf/c ≈ 1.6 fm at λ = 1550 nm), which corresponds to a phase noise of Sf(0) = 400 kHz. Nevertheless, this is significantly lower than the device bandwidth, which is governed mainly by the bandwidth of the waveguide arrays involved and, in turn, by the frequency dependence of the coupling coefficients. This is shown in Fig. S1d in Supplementary Information S1, illustrating the coupling parameters used in the proposed design over the C-band. In the latter, the inset shows the behavior in the interval 1550 nm ± 1 nm, highlighting the flat dependence over the laser source wavelength, which renders a uniform response over the generated CW output with such a narrow linewidth.

The nominal relative intensity noise (RIN) of this laser is RIN = 〈(ΔP)²〉/P² = −145 dB/Hz, which corresponds to intensity fluctuation ratios of less than ΔP/P ≈ 5 × 10⁻⁵. This is equivalent to ΔP ≈ 1 μW at the input of the device, which translates to a negligible intensity fluctuation at the output that will not impact the measured stable values of the output power. The input power (20 mW) is reduced by the grating couplers at the input and output, the coupling efficiency of which is −7.5 dB per coupler, bringing the optical power down to levels around 600 μW. On the other hand, according to the power meter specifications provided by the manufacturer, the detection resolution is -80 dB (10 nW). The generated optical signal at the output is thus several orders of magnitude larger than the power detector resolution. The noise level from the optical source (SNR) is also several orders of magnitude below the output optical signal level. Thus, the magnitude of the randomized optical output is not affected by the noise levels in any significant form.
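The power budget above can be reproduced with a short calculation. The sketch takes the quoted figures at face value (20 mW input, 7.5 dB loss per grating coupler, a fluctuation ratio of 5 × 10⁻⁵, and the 10 nW detector resolution) and ignores any loss inside the waveguide arrays, which is an assumption; the variable names are hypothetical.

```python
import math

P_IN_MW = 20.0            # laser output power, mW
COUPLER_LOSS_DB = 7.5     # loss per grating coupler, dB
N_COUPLERS = 2            # one input and one output coupler
RIN_RATIO = 5e-5          # quoted upper bound for dP/P
DETECTOR_RES_UW = 0.01    # 10 nW detector resolution expressed in microwatts

def db_to_linear(db):
    """Convert a power ratio in dB to a linear factor."""
    return 10 ** (db / 10)

# Output power after both couplers, in microwatts.
p_out_uw = P_IN_MW * 1e3 * db_to_linear(-COUPLER_LOSS_DB * N_COUPLERS)

# Source intensity fluctuation at the device input, in microwatts.
delta_p_in_uw = RIN_RATIO * P_IN_MW * 1e3

# Orders of magnitude between the output signal and the detector resolution.
orders_above_resolution = math.log10(p_out_uw / DETECTOR_RES_UW)

print(f"output power: {p_out_uw:.0f} uW")
print(f"input fluctuation: {delta_p_in_uw:.1f} uW")
print(f"signal above resolution: {orders_above_resolution:.1f} orders of magnitude")
```

The total coupler loss of 15 dB brings 20 mW down to roughly 630 μW, consistent with the level of around 600 μW quoted above.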

Responses