PID3Net: a deep learning approach for single-shot coherent X-ray diffraction imaging of dynamic phenomena

Introduction

X-ray imaging offers unparalleled insight into the internal structures of materials without damaging them, making it indispensable in materials science and engineering1,2,3. X-ray diffraction, which is central to techniques such as X-ray crystallography, enables detailed studies of crystalline materials at the atomic and molecular levels. Coherent X-ray diffraction imaging (CXDI) extends these capabilities, enabling the visualization of materials, without requiring crystallinity, at a spatial resolution surpassing that of traditional lens-based approaches4,5. Unlike electron and probe microscopy, which typically require extensive sample preparation and special environments and mainly offer excellent surface resolution, CXDI can reveal internal structures under more natural conditions. Thus, CXDI enables non-destructive imaging of both the surface and internal structures of materials in their natural state, with exceptional spatial resolution6.

In CXDI, a well-defined coherent X-ray beam is directed onto a sample, producing a diffraction image that records intensity but not phase7,8,9,10,11,12,13,14. Reconstructing the sample image therefore requires phase retrieval, which recovers the phase information from the measured diffraction image. This inverse problem is typically solved with iterative algorithms that combine forward diffraction simulation with image-reconstruction updates based on error reduction, hybrid input–output (HIO)15, or the difference map16,17,18,19. However, phase retrieval is inherently ambiguous: a single diffraction image containing only intensity information may correspond to multiple possible sample images, which complicates both image reconstruction and evaluation of the reliability of the reconstructed images. Moreover, these difficulties limit real-time applications that are crucial for in-situ and operando experiments20,21.

Scanning CXDI, commonly known as X-ray ptychography22, images extended samples by systematically scanning a coherent beam across them with overlapping illumination positions, so that each section contributes to multiple diffraction images. This redundancy is vital because it provides additional spatial constraints that help verify the accuracy of phase retrieval and improve its convergence. Despite these advantages, iterative phase retrieval algorithms such as the extended ptychographical iterative engine (ePIE)23, which is widely recognized for its high convergence rate and robustness to noise, often struggle without static, overlapping illumination areas and are highly sensitive to parameter selection24. These challenges significantly compromise accuracy and limit the ability to image dynamic phenomena6,25,26.

Scanning CXDI faces broader limitations when imaging dynamic processes with high temporal and spatial resolution. In particular, the speed at which the coherent X-ray beam scans the whole sample to collect diffraction images directly affects the ability to capture fast-evolving phenomena. Scanning CXDI is typically performed in a step-scan manner, in which the beam stops at discrete positions following a predefined scan pattern. On-the-fly scanning has also been developed to speed up the measurement by moving the beam across the sample without stopping while data are collected continuously. In either case, a slow scan may introduce temporal inconsistencies between imaged regions of the sample, whereas ideally every local region would be imaged simultaneously. Conversely, a faster scan shortens the exposure time for each illuminated area, reducing the diffraction signal and lowering image quality. Additionally, the assumption that each section of the sample is consistently illuminated multiple times and contributes multiple diffraction images may not hold when the sample changes during the scan, potentially reducing the accuracy of phase retrieval and degrading the spatial resolution of the reconstructed image.

Several non-scanning CXDI methods, which use single-shot imaging to capture multiple frames, have been proposed to depict dynamic processes with excellent temporal resolution27,28,29,30,31,32. However, these remain at the proof-of-principle stage, and comprehensive studies of the dynamics of actual samples in the hard X-ray region have yet to be undertaken. One challenge in phase retrieval for single-shot CXDI is the lack of additional spatial constraints, which complicates image reconstruction and affects the reliability of the results. Low signal-to-noise ratios (SNR) present another challenge: single-shot CXDI requires short exposure times per frame to capture fast-evolving phenomena, so fewer photons are detected and the measurements are noisier. Recent advances have led to practical methods for single-shot CXDI that can effectively and comprehensively elucidate material dynamics and functions14,33,34.

Deep learning (DL) can be applied to complex imaging problems such as those encountered in holography and phase retrieval, improving accuracy and computational efficiency24,35,36,37,38,39,40,41,42,43,44,45,46. These techniques often face challenges such as accurately reconstructing phase information from intensity measurements and handling the inherent noise and artifacts in the data. Among the notable advances, PtychNet, a convolutional neural network (CNN), enables the direct reconstruction of Fourier ptychography data. Similarly, PtychoNN24 and SSPNet, which are encoder–decoder deep neural network (DNN) architectures, are designed explicitly for single-shot CXDI. These networks streamline imaging by reconstructing sample images directly from diffraction data, without an explicit iterative phase-retrieval step24,47,48. Deep Ptych49 employs generative models to regularize the phase problem and achieves superior reconstruction quality; however, it requires an applicable prior model to be identified. Conversely, prDeep50 optimizes an explicit minimization objective using a generic denoising DNN trained for a specific target data distribution.

Recent advancements in DL for video processing have increased our ability to capture the semantics and context of dynamic scenes51. Leveraging sophisticated neural network architectures, DL methods can extract meaningful temporal variations and patterns from sequential image frames, surpassing the results of traditional image analysis. This capability demonstrates the potential of DL in the CXDI of dynamic phenomena. By analyzing temporal context and correlations in diffraction images and reconstructed images, DL is expected to facilitate high-resolution image reconstructions of dynamic changes within the sample and learn the underlying mechanisms of these phenomena. This learning may, in turn, enhance the quality of phase retrieval and image reconstruction, enabling a reciprocal relationship in which each aspect reinforces the other.

Despite remarkable advances in phase retrieval that benefit from DL, DL-based methods face numerous challenges. A notable issue is their reliance on supervised learning, which requires ground-truth data for training. However, obtaining such data in real-world phase retrieval scenarios is often infeasible, and models trained only on simulated data may reconstruct images unreliably. Several unsupervised DL methods, such as AutoPhaseNN36 and DeepMMSE52, have been proposed to address this issue. These methods do not require ground-truth reconstructed images53 but need appropriate constraints tailored to the optical settings. Nonetheless, they often trade time efficiency for accuracy, which compromises their suitability for in-situ experiments. Incorporating physical insights and mathematical constraints into DL-based methods can enhance their performance on complex problems28,33,54.

This study introduces a DL-based method specifically designed to address the inherent challenges of phase retrieval for single-shot CXDI, aiming to significantly enhance the visualization of local nanostructural dynamics within the sample. This method leverages a physics-informed learning approach, in which the network learns directly from the measured diffraction data without relying on external reference images or human-provided labels. Instead, known physical constraints derived from the experimental setup serve as the guiding principles for training, ensuring that the network’s reconstructions are consistent with both the measured data and the underlying physics. The DL architecture is composed of a measurement-informed refinement block (RB), designed to integrate optical settings and mathematical constraints into the learning process, and a temporal neural network block (TB), designed to capture, through learning, the temporal correlations among the diffraction images and among the corresponding reconstructed images. Additionally, a single-shot CXDI optical system is constructed to compare the proposed method with state-of-the-art methods in real-world experiments.

Results

Dynamic phase retrieval in single-shot CXDI

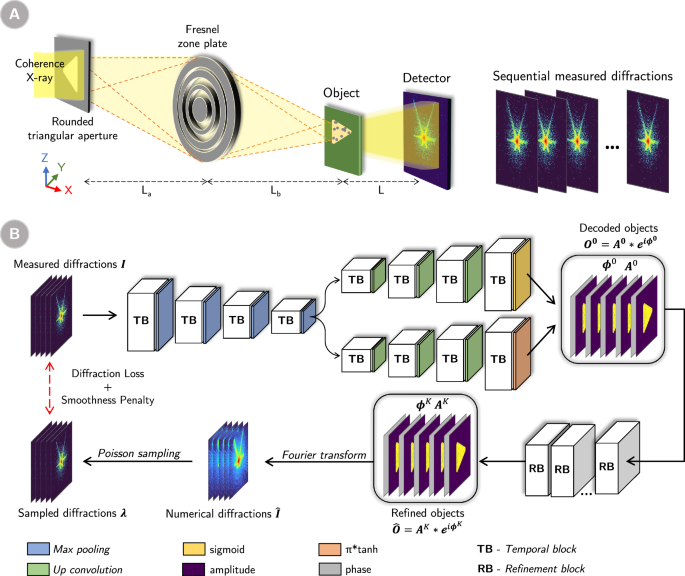

Figure 1A illustrates a single-shot CXDI optical system for imaging dynamic objects14,34. The system uses a monochromatic X-ray beam, which is shaped by a rounded triangular aperture and a Fresnel zone plate (FZP) into a rounded triangular beam that illuminates the sample. The exit wavefield ψ(r) of the sample can be represented as

$$\psi(\mathbf{r}) = P(\mathbf{r})\,O(\mathbf{r}), \qquad (1)$$

where r denotes the real-space coordinate vector and P(r) is the illumination probe function. The complex object function $O(\mathbf{r}) = A(\mathbf{r})\,e^{i\phi(\mathbf{r})}$ is the mathematical representation of the sample in real space and comprises amplitude A(r) and phase ϕ(r) components.

Fig. 1: A Schematic diagram of the single-shot coherent X-ray diffraction imaging (CXDI) optical system, featuring a triangular aperture and a Fresnel zone plate. B Overview of the PID3Net framework for dynamic phase retrieval in single-shot CXDI.

This exit wavefield produces a diffraction pattern in the far field, which a downstream detector records as a two-dimensional intensity image. The diffraction intensity can be expressed as

$$I(\mathbf{q}) = |\Psi(\mathbf{q})|^{2} = \left|\mathcal{F}[\psi(\mathbf{r})]\right|^{2}, \qquad (2)$$

where q denotes the reciprocal-space coordinate vector, Ψ(q) is the wavefield ψ(r) propagated to the detector plane, and $\mathcal{F}$ represents the Fourier transform operator.

In practice, the diffraction intensity I(q) measured on the detector is a two-dimensional image, usually square, denoted as $I \in \mathbb{N}^{m\times m}$, where m is the image size in pixels. The illumination probe function P(r) is a two-dimensional matrix $P \in \mathbb{C}^{m\times m}$ and is assumed constant during the experiment in a fixed optical system with a stable coherent X-ray source. In this study, we adopt the mixed-state reconstruction approach55, which applies a set of illumination probe functions $P^{(k)} \in \mathbb{C}^{m\times m}$ with modes $k \in \{1, 2, \ldots, K\}$ for single-shot CXDI. These probe functions are often estimated in advance via scanning CXDI and are assumed to remain constant. The complex object function O(r) is represented by two two-dimensional matrices, $A \in \mathbb{R}^{m\times m}$ and $\phi \in \mathbb{R}^{m\times m}$, expressing the amplitude and phase, respectively.
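The forward model described above (exit wave ψ = P·O, far-field intensity |F[ψ]|²), extended to the mixed-state probe set, can be sketched in NumPy as below. This is a minimal illustration assuming that the K probe modes add incoherently in intensity (the usual mixed-state assumption) and that the unnormalized `np.fft.fft2` stands in for $\mathcal{F}$; the `mixed_state_intensity` helper, the random object and probes, and the 50-photon peak used to draw integer counts are hypothetical.

```python
import numpy as np

def mixed_state_intensity(A, phi, probes):
    """Far-field intensity of O = A * exp(i*phi) under K probe modes.

    A, phi: (m, m) real arrays; probes: (K, m, m) complex array.
    Modes are summed incoherently: I = sum_k |F[P_k * O]|^2.
    """
    O = A * np.exp(1j * phi)          # complex object function
    psi = probes * O                  # exit wavefield per mode, shape (K, m, m)
    Psi = np.fft.fft2(psi)            # 2D FFT over the last two axes
    return np.sum(np.abs(Psi) ** 2, axis=0)

# Hypothetical example inputs
rng = np.random.default_rng(0)
m, K = 32, 5
A = rng.uniform(0.5, 1.0, size=(m, m))
phi = rng.uniform(-np.pi, np.pi, size=(m, m))
probes = rng.normal(size=(K, m, m)) + 1j * rng.normal(size=(K, m, m))

I_model = mixed_state_intensity(A, phi, probes)        # real-valued, non-negative
I_counts = rng.poisson(I_model / I_model.max() * 50)   # integer counts, as on a detector
```

Checking the total intensity against Parseval's theorem (the unnormalized FFT scales total power by m²) is a quick sanity test of the FFT convention.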

To investigate the dynamic behavior of objects in a sample, we captured the dynamic changes by imaging the sample over N consecutive frames, each recorded with an exposure time Δt. The diffraction intensity image observed at the tth frame is denoted $I_t$. The objective of phase retrieval in a dynamic CXDI experiment is to derive a unique complex object function $O_t$ from $I_t$ for each frame t. The conventional phase retrieval algorithm iteratively refines $O_t$ based on the reciprocal-space constraint. Starting from an initial guess $O_t^{0}$, the estimate of the object function at iteration i+1 is

$$O_t^{i+1} = O_t^{i} + \alpha\,U_t^{i}, \qquad U_t^{i} = \sum_{k=1}^{K} \bar{P}^{(k)}\left(\psi_t^{\prime(k)} - \psi_t^{(k)}\right), \qquad (3)$$

where $\bar{P}^{(k)}$ is the complex conjugate of $P^{(k)}$, the scalar α is a feedback parameter that scales the update $U_t^{i}$ of the object function, and $\psi_t^{(k)} = P^{(k)} O_t^{i}$ is the wavefield of the sample reconstructed at the previous iteration i. Meanwhile, $\psi_t^{\prime(k)}$ is the revision of the wavefield $\psi_t^{(k)}$ that satisfies the constraint in reciprocal space:

$$\psi_t^{\prime(k)} = \mathcal{F}^{-1}\left[\sqrt{I_t}\,\frac{\Psi_t^{(k)}}{\sqrt{\sum_{k'=1}^{K}\left|\Psi_t^{(k')}\right|^{2}}}\right], \qquad (4)$$

where $\Psi_t^{(k)} = \mathcal{F}[\psi_t^{(k)}]$ and $\mathcal{F}^{-1}$ denotes the inverse Fourier transform operator. Through this iterative process, the refinement algorithm is expected to converge to a complex object function $O_t$ that corresponds to $I_t$. Note, however, that the measured diffraction images $I_t$ inherently contain uncertainties and noise arising from the probabilistic nature of photon scattering and detection, particularly when imaging with limited photon flux or short exposure times. As a result, iterative phase retrieval methods that use only the observed intensities may yield multiple plausible solutions for $O_t$: because of these uncertainties and the non-uniqueness of the phase retrieval problem, different solutions can fit the measured diffraction data equally well.
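One iteration of the conventional mixed-state refinement described above can be sketched as follows: rescale every mode's far field so that the incoherent mode sum matches the measured modulus, back-transform, and take a gradient-style step on the object. This is an illustrative sketch assuming an unnormalized update $U = \sum_k \bar{P}^{(k)}(\psi'^{(k)} - \psi^{(k)})$ with a fixed step `alpha`; the normalization used by the cited method33 may differ, and the helper names are hypothetical.

```python
import numpy as np

def modulus_projection(psi, I, eps=1e-12):
    """Rescale the far fields of all K modes so that sum_k |Psi_k|^2 equals I."""
    Psi = np.fft.fft2(psi)                                  # (K, m, m)
    total = np.sqrt(np.sum(np.abs(Psi) ** 2, axis=0))       # incoherent mode sum
    return np.fft.ifft2(Psi * np.sqrt(I) / (total + eps))   # revised exit waves

def update_object(O, probes, I, alpha):
    """One gradient-style object update using the reciprocal-space constraint."""
    psi = probes * O                                        # current exit waves
    psi_new = modulus_projection(psi, I)
    U = np.sum(np.conj(probes) * (psi_new - psi), axis=0)   # update direction
    return O + alpha * U

# Hypothetical data: intensities generated from a known phase object
rng = np.random.default_rng(0)
m, K = 32, 3
probes = rng.normal(size=(K, m, m)) + 1j * rng.normal(size=(K, m, m))
O_true = np.exp(1j * rng.uniform(-1, 1, size=(m, m)))
I = np.sum(np.abs(np.fft.fft2(probes * O_true)) ** 2, axis=0)

O_guess = np.ones((m, m), dtype=complex)
O_next = update_object(O_guess, probes, I, alpha=1e-2)
```

An object that already reproduces the measured intensities is a fixed point of this update, which is one way to see why noise-free data alone cannot distinguish between different objects that fit equally well.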

PID3Net: Physics-informed self-supervised learning network

Borrowing the concept from the iterative refinement algorithm, this study employed a physics-informed self-supervised learning strategy to develop a neural network: the Physics-Informed Deep learning Network for Dynamic Diffraction imaging (PID3Net), tailored to phase retrieval in single-shot CXDI for dynamic object imaging. Figure 1B shows the design of the proposed network.

Integration of physics-informed constraints

Unlike purely data-driven approaches, PID3Net leverages established physical principles and utilizes experimental conditions to guide the network’s optimization. By introducing physics-based priors via the measurement-informed refinement block (RB) and the loss functions, we ensure that our reconstructions remain consistent with the underlying diffraction physics rather than relying solely on learned statistical patterns. The physics-informed learning in PID3Net proceeds as follows:

- Fourier Transformation establishes the fundamental physical relationship between the sample’s complex object function and the measured diffraction intensity. Specifically, the complex object function O(r) is multiplied by a known probe function P(r) and then Fourier-transformed to the detector plane. During training, the network’s output $\hat{O}$ is projected into reciprocal space so that the estimated intensity $\hat{I} = |\mathcal{F}[P\cdot\hat{O}]|^{2}$ aligns with the measured diffraction intensity I.

- Spatiotemporal Smoothness Constraints impose realistic priors that, while not directly derived from physical laws, reflect the expectation that nanostructural changes occur gradually over time. Using total variation (TV) regularization and 3D temporal convolutions, PID3Net incorporates smoothness in both the spatial and temporal dimensions. This promotes more accurate reconstructions by suppressing abrupt changes and noise, thereby better capturing the natural evolution of dynamic samples.

- Reciprocal-Space Constraint ensures consistency with the measured intensities by applying a refinement block that employs forward and inverse Fourier transforms. The refinement process adaptively updates the reconstructed object using learnable parameters. This adaptive refinement integrates spatial and temporal information, keeping reconstructions consistent with reciprocal-space data while adjusting to temporal changes.

- Poisson Loss component incorporates the photon-counting nature and photon statistics of X-ray detection into the training objective. In addition to conventional pixelwise discrepancy metrics such as the mean absolute error (MAE) between the observed and estimated diffraction images, I and $\hat{\mathbf{I}}$, PID3Net also explores a Poisson-based loss function that directly models the photon-counting nature of X-ray detection. Because the training objective is aligned with the statistics of the measured diffraction images, the network’s parameter updates reflect the underlying physical reality more faithfully than generic image-based metrics, so the final reconstructions are statistically grounded and physically meaningful.

By integrating these physics-informed steps, PID3Net guides each backpropagation update toward solutions that satisfy fundamental diffraction principles, coherent illumination conditions, and photon-counting statistics. Consequently, the resulting reconstructions are not only visually plausible but also physically consistent, thereby improving reliability and interpretability even under challenging imaging conditions.

Self-supervised learning network architecture

Given a sequence of T consecutive diffraction intensity images $\mathbf{I} = [I_1, I_2, \ldots, I_T]$, extracted from the N consecutive diffraction intensity images observed in total, the network is designed to simultaneously reconstruct the corresponding set of T object functions $\mathbf{O} = [O_1, O_2, \ldots, O_T]$. The objective is for the diffraction images calculated from these object functions, $\hat{\mathbf{I}} = [\hat{I}_1, \hat{I}_2, \ldots, \hat{I}_T]$, to closely match the experimental diffraction images $\mathbf{I}$.

Consequently, phase retrieval in CXDI experiments is formulated as a gradient-descent optimization problem53 that minimizes the discrepancy between the two sets of diffraction images, $\mathbf{I}$ and $\hat{\mathbf{I}}$. This approach leverages the measured diffraction intensities, known physical constraints, and details of the experimental measurement settings to guide the learning process, thereby bypassing the need for separate ground-truth references of the sample.

To facilitate the convergence of phase retrieval in single-shot CXDI of dynamic objects, we design the network by introducing smoothness constraints in both the spatial and temporal domains. We adopt the spatiotemporal convolution56 (3D-CNN), which is widely applied for video understanding, to enable spatiotemporal feature learning directly from a sequence of frame-level patches. PID3Net first feeds the set of diffraction images $\mathbf{I}$ into encoder–decoder modules comprising one encoder and two decoders (Fig. 1B). These modules use a temporal block (TB), consisting of three 3D-CNN layers, as shown in Fig. 2A. In the encoder, temporal information is encoded at three levels: the diffraction image itself, three adjacent diffraction images, and five adjacent diffraction images. During decoding, images that share information over time are reconstructed through the TB, enhancing the temporal coherence of the objects. The decoders then use the encoded information to produce the real-space amplitude $\mathbf{A}^{0} = [A_1^{0}, A_2^{0}, \ldots, A_T^{0}]$ and phase $\boldsymbol{\phi}^{0} = [\phi_1^{0}, \phi_2^{0}, \ldots, \phi_T^{0}]$ images of the T sequential object functions. Details of the encoder–decoder implementation are provided in the Methods section ‘Encoder-Decoder block’.
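To illustrate how 3D convolutions mix information across adjacent frames, the sketch below implements a zero-padded 3D convolution in NumPy and a toy temporal block with three branches whose temporal kernels span 1, 3, and 5 frames, mirroring the three temporal levels described above. The parallel-branch layout, the 3 × 3 spatial kernels, the ReLU activations, and the summation of branches are assumptions for illustration, not the published TB architecture; in practice a deep-learning framework's 3D convolution layer would be used.

```python
import numpy as np

def conv3d_same(x, w):
    """Zero-padded 'same' 3D cross-correlation (the DL-style 'convolution').

    x: (T, H, W) stack of frames; w: (kt, kh, kw) kernel with odd sizes.
    """
    kt, kh, kw = w.shape
    xp = np.pad(x, ((kt // 2,) * 2, (kh // 2,) * 2, (kw // 2,) * 2))
    T, H, W = x.shape
    out = np.zeros((T, H, W))
    for a in range(kt):
        for b in range(kh):
            for c in range(kw):
                out += w[a, b, c] * xp[a:a + T, b:b + H, c:c + W]
    return out

def toy_temporal_block(x, kernels):
    """Sum of ReLU(3D conv) branches; each branch sees a different temporal window."""
    return sum(np.maximum(conv3d_same(x, w), 0.0) for w in kernels)

rng = np.random.default_rng(0)
T, m = 8, 16
kernels = [rng.normal(size=(kt, 3, 3)) for kt in (1, 3, 5)]  # 1-, 3-, 5-frame windows
x = rng.random((T, m, m))             # stand-in for a stack of T diffraction frames
y = toy_temporal_block(x, kernels)
```

Perturbing the first frame changes only the outputs within two frames of it, because the widest temporal window in this toy block spans five frames.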

Fig. 2: A Temporal block (TB) with 3D-CNN layers for integrating smoothness constraints in both the spatial and temporal domains. B Measurement-informed refinement block (RB) for incorporating optical settings and mathematical constraints.

Phase retrieval often suffers from twin-image ambiguity, translation ambiguity, and sensitivity to initialization, which complicate the convergence of the inversion process57,58. To address these challenges, we integrate optical settings and mathematical constraints via a second block, the measurement-informed refinement block (RB). This block refines the phase $\boldsymbol{\phi}^{0}$ and amplitude $\mathbf{A}^{0}$ using a hybrid approach that combines DL with the iterative process (Fig. 2B).

At each refinement step i+1, the amplitude $\mathbf{A}^{i}$ and phase $\boldsymbol{\phi}^{i}$ from the previous step are first combined into complex object functions $\mathbf{O}^{i} = [O_1^{i}, O_2^{i}, \ldots, O_T^{i}]$, with $O_t^{i} = A_t^{i}\,e^{\mathrm{i}\phi_t^{i}}$. The update is defined using CNN blocks, adopting the update rule of Eq. (3) from the iterative phase retrieval algorithm. However, rather than updating each object function individually, we use the TB layer to learn the updates for all T object functions simultaneously, preserving the overlapping information shared with the other reconstructions in real space (Fig. 2B). We denote by $F_A, F_\phi : \mathbb{R}^{T\times m\times m} \to \mathbb{R}^{T\times m\times m}$ the CNN blocks that determine the revisions of the amplitude and phase, respectively. Each block comprises one TB followed by an output convolution layer of size 1 × 3 × 3. The activation functions of $F_A$ and $F_\phi$ are the sigmoid and π·tanh, which limit the amplitude and phase outputs to [0, 1] and [−π, π], respectively. The updating process is expressed as follows:

where $\mathbf{U}^{i} = [U_1^{i}, U_2^{i}, \ldots, U_T^{i}]$ are the updates of the object functions calculated using Eq. (3), and $\arg(\cdot)$ and $|\cdot|$ denote the argument and modulus of a complex number, respectively. Subsequently, the object functions are updated using mathematical constraints as follows:

where α is a learnable update rate. $F_A$, $F_\phi$, and α are all trained adaptively to regulate how much of the measurement constraint is incorporated into $\mathbf{O}^{i+1}$.
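The sketch below illustrates one refinement step of the kind described above for a stack of T frames: compute the update $U^i$ of Eq. (3) for every frame, pass its modulus and argument through blocks bounded by the sigmoid and π·tanh, and add the result scaled by α. Because the RB update equations are not reproduced here, the way the two block outputs are combined (a sigmoid gate on $|U^i|$ together with a learned phase) is a hypothetical choice, and `toy_block` is a fixed, untrained stand-in for the TB-based networks $F_A$ and $F_\phi$.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def toy_block(x, activation):
    """Hypothetical stand-in for F_A / F_phi: 3-frame temporal average, then activation."""
    xp = np.pad(x, ((1, 1), (0, 0), (0, 0)), mode="edge")
    return activation((xp[:-2] + xp[1:-1] + xp[2:]) / 3.0)

def refine_step(A, phi, probes, I, alpha, eps=1e-12):
    """One RB-style step on (T, m, m) amplitude/phase stacks with (K, m, m) probes."""
    O = A * np.exp(1j * phi)                                      # (T, m, m)
    psi = probes[None] * O[:, None]                               # (T, K, m, m)
    Psi = np.fft.fft2(psi)
    total = np.sqrt(np.sum(np.abs(Psi) ** 2, axis=1, keepdims=True))
    psi_new = np.fft.ifft2(Psi * np.sqrt(I[:, None]) / (total + eps))
    U = np.sum(np.conj(probes)[None] * (psi_new - psi), axis=1)   # Eq. (3)-style update
    gate = toy_block(np.abs(U), sigmoid)                          # amplitude revision in [0, 1]
    dphi = toy_block(np.angle(U), lambda z: np.pi * np.tanh(z))   # phase revision in [-pi, pi]
    O_new = O + alpha * gate * np.abs(U) * np.exp(1j * dphi)      # hypothetical combination
    return np.abs(O_new), np.angle(O_new)

rng = np.random.default_rng(0)
T, K, m = 4, 3, 16
probes = rng.normal(size=(K, m, m)) + 1j * rng.normal(size=(K, m, m))
A_true = rng.uniform(0.5, 1.0, size=(T, m, m))
phi_true = rng.uniform(-1.0, 1.0, size=(T, m, m))
O_true = A_true * np.exp(1j * phi_true)
I = np.sum(np.abs(np.fft.fft2(probes[None] * O_true[:, None])) ** 2, axis=1)

A1, phi1 = refine_step(np.ones((T, m, m)), np.zeros((T, m, m)), probes, I, alpha=1e-2)
```

Scaling the learned revision by $|U^i|$ in this toy version keeps objects that already satisfy the measurement constraint unchanged, while the temporal averaging inside `toy_block` lets each frame's revision depend on its neighbours.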

After K iterations, we obtain the reconstructed object functions $\hat{\mathbf{O}} = \mathbf{O}^{K}$ and derive from them the amplitude $\hat{\mathbf{A}} = |\hat{\mathbf{O}}|$ and phase $\hat{\boldsymbol{\phi}} = \arg(\hat{\mathbf{O}})$. Within DL approaches, the image reconstruction problem can be solved by minimizing the difference between $\mathbf{I}$ and $\hat{\mathbf{I}} = |\mathcal{F}[P\times\hat{\mathbf{O}}]|^{2}$, quantified via the mean absolute error loss:

$$\mathcal{L}_{MAE} = \frac{1}{T m^{2}} \sum_{t=1}^{T} \sum_{\mathbf{q}} \left| I_t(\mathbf{q}) - \hat{I}_t(\mathbf{q}) \right|$$

However, in real experiments, the diffraction images $I_t$ are recorded by photon counting, so the measured values are non-negative integers. By contrast, the estimated diffraction $\hat{I}_t$ consists of real values, which may cause numerical issues in the loss calculation during optimization. This issue must be considered carefully when the diffraction signal is weak owing to a short exposure time. To address it, we design a loss function that measures the difference in detected photon counts per pixel by modeling each pixel with an independent Poisson distribution, which accurately reflects the photon-counting statistics of diffraction measurements59,60. This loss function provides a more robust and statistically grounded model of the experimental data, as follows:

where $\lambda_t$ denotes the number of photons detected in each pixel, obtained by Poisson sampling with rate $\hat{I}_t$, as shown in Fig. 1B.
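Because the Poisson loss itself is not reproduced here, the sketch below uses the standard Poisson negative log-likelihood, one common way to encode photon-counting statistics, alongside the MAE for comparison, and shows how integer counts such as $\lambda_t$ can be drawn with rate $\hat{I}_t$. The exact form of $\mathcal{L}_{PO}$ in PID3Net may differ; the function names and the synthetic weak-signal rates are illustrative assumptions.

```python
import numpy as np

def poisson_nll(counts, rate, eps=1e-8):
    """Mean Poisson negative log-likelihood of integer counts given non-negative rates.

    The rate-independent term log(counts!) is dropped.
    """
    rate = rate + eps                  # avoid log(0) where the model predicts no photons
    return np.mean(rate - counts * np.log(rate))

def mae(counts, rate):
    """Mean absolute error between counts and predicted rates."""
    return np.mean(np.abs(counts - rate))

rng = np.random.default_rng(1)
I_hat = rng.uniform(0.1, 5.0, size=(4, 32, 32))   # weak, short-exposure rates (hypothetical)
I_meas = rng.poisson(I_hat)                        # "measured" photon counts
lam = rng.poisson(I_hat)                           # counts sampled at rate I_hat

print(poisson_nll(I_meas, I_hat), mae(I_meas, I_hat))
```

For a constant predicted rate, the NLL is minimized when the rate equals the mean count, whereas the MAE is minimized at the median count; this difference matters most when counts are small and the distribution is skewed.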

Additionally, to enforce smoothness in both the spatial and temporal domains for the reconstructed images, we employ a 3D total variation loss32,61, $\mathcal{L}_{TV}$, defined as

where $\hat{\mathbf{O}}$ denotes the complex reconstructed objects, of shape T × m × m.
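An anisotropic form of 3D total variation over a T × m × m complex stack, averaging the absolute finite differences along the temporal axis and both spatial axes, can be sketched as follows. The anisotropic form and the mean normalization are assumptions; the definition used in the cited works32,61 may weight or normalize the terms differently.

```python
import numpy as np

def tv3d(O):
    """Anisotropic 3D total variation of a (T, m, m) complex stack."""
    d_t = np.abs(np.diff(O, axis=0)).mean()   # frame-to-frame changes
    d_y = np.abs(np.diff(O, axis=1)).mean()   # vertical neighbours
    d_x = np.abs(np.diff(O, axis=2)).mean()   # horizontal neighbours
    return d_t + d_y + d_x

O_static = np.full((5, 8, 8), 0.3 + 0.4j)     # no spatial or temporal variation
O_step = np.zeros((2, 2, 2), dtype=complex)
O_step[1] = 1j                                # uniform jump between the two frames
```

A uniform jump between frames is penalized only by the temporal term, which is how this kind of penalty discourages abrupt frame-to-frame changes while leaving static regions untouched.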

In summary, our loss function $\mathcal{L}$ comprises two components: the diffraction loss $\mathcal{L}_{diff}$, which minimizes the disparity between the estimated and measured diffraction images, and the smoothness penalty loss $\mathcal{L}_{TV}$. The total loss function $\mathcal{L}$ is formulated as

where the scalar weight β, which balances the two loss components, is learned during training, and $\mathcal{L}_{diff}$ is either $\mathcal{L}_{MAE}$ or $\mathcal{L}_{PO}$. Details of the hyperparameter settings and model training are provided in the Methods sections ‘Encoder-Decoder block’ and ‘Model training’.

Experimental design

We conduct three evaluation experiments to demonstrate the efficacy of PID3Net for phase retrieval in single-shot CXDI of dynamic processes. These experiments involve capturing diffraction intensity images of the sample using an experimental optical system (Fig. 1A) or through numerical simulations under similar optical conditions. Details of the experimental optical system and simulations are provided in Section ‘Setups of CXDI for experiments’. In the first experiment, we examine the efficacy of PID3Net in imaging a moving Ta test chart, a common proof-of-concept sample for evaluating optical systems and phase retrieval analysis. In this experiment, the Ta test chart moves at a fixed velocity of 340 nm s−1 relative to the X-ray beam (Fig. 3A). The diffraction intensity images of the moving Ta test chart are captured on-the-fly using the experimental optical system, and PID3Net is then used to reconstruct the movement of the sample from the measured diffraction intensity images.

Fig. 3: A Schematic of the experimental setup, in which the Ta test chart moves horizontally relative to a fixed X-ray beam. The orange region indicates the illuminated area of the sample. Diffraction intensity images of the moving Ta test chart are captured on-the-fly using the experimental optical system with five probe function modes, which are reconstructed using scanning CXDI. B Measured diffraction intensity images of the moving Ta test chart at eight different frames. The frame index of each image is indicated in the upper row, and the color bar at the top represents the diffraction intensity.

The two subsequent experiments examine the efficacy of PID3Net in imaging the dynamics of gold nanoparticles (AuNPs) dispersed in solution. Observing the motion of AuNPs over a broad spatiotemporal scale is essential because they are widely used to probe the mechanical properties of materials and the rheological properties within living cells. In the first AuNP experiment, we evaluate PID3Net using simulations of moving AuNPs, which provide both amplitude and phase data along with the corresponding diffraction images. This allows us to compare the phase information retrieved by PID3Net directly with the ground truth, enabling an accurate assessment of the method’s efficacy. In the second AuNP experiment, we use a sample of AuNPs dispersed in an aqueous polyvinyl alcohol (PVA) solution and evaluate PID3Net in a real experimental scenario in which the AuNPs move in the solution. We expect the movement of the AuNPs to be captured by single-shot CXDI, with the diffraction images measured directly using the experimental optical system. Table 1 presents the detailed settings of the three experiments.

We assess the efficacy of PID3Net by comparing it with a conventional method33 that uses reciprocal-space constraints and gradient descent, extended to a mixed-state model55 in which multiple probe functions are applied in the optical system. The multi-frame PIE-TV (mf-PIE)32, which introduces a virtual overlapping-frame constraint for solving dynamic CXDI, is also applied for comparison. Hereinafter, we refer to these two methods as mixed-state and mf-PIE. Besides these iterative methods, we compare our method with two state-of-the-art DL-based phase retrieval methods, AutoPhaseNN36 and PtychoNN24. Additionally, we investigate the effectiveness of the Poisson loss $\mathcal{L}_{PO}$ and the MAE loss $\mathcal{L}_{MAE}$ in PID3Net, denoting the resulting models PID3Net-PO and PID3Net-MAE, respectively. The methods are evaluated on three criteria: (1) the discrepancy between the measured diffraction images and those reproduced from the reconstructed sample images, (2) the spatial resolution of the retrieved phase information, and (3) the efficiency of the retrieved phase information for post-analysis, in terms of applicability and accuracy.

To quantify the discrepancies between diffraction images, we use the Rf score62 (Methods section ‘Model training’), a single scalar value that indicates the overall pixel-by-pixel difference between the measured diffraction images and those calculated from the reconstructed sample images. The Rf score is therefore key to assessing how well, on average, the reconstructed sample images reproduce the measured diffraction images. We employ the phase retrieval transfer function (PRTF)7,63 to evaluate the spatial resolution of the retrieved phase information. The PRTF focuses on diffraction consistency by comparing the reconstructed and measured diffraction patterns in Fourier space. In our simulations, access to the ground-truth objects provides an ideal opportunity for validation, so we also apply Fourier ring correlation (FRC)64 to compare the reconstructed objects directly with the ground truth. Together, these two comparisons provide a more comprehensive evaluation of reconstruction accuracy.
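The Fourier ring correlation between a reconstruction and a ground-truth image can be computed as sketched below: correlate the two Fourier transforms over rings of equal spatial frequency and normalize by the ring energies. This follows the standard FRC definition; the one-pixel ring width, the restriction to square real images, and the `fourier_ring_correlation` helper are illustrative choices, and a resolution threshold must be chosen separately.

```python
import numpy as np

def fourier_ring_correlation(img1, img2):
    """FRC between two square, equally sized real images, one value per 1-pixel ring."""
    F1 = np.fft.fftshift(np.fft.fft2(img1))
    F2 = np.fft.fftshift(np.fft.fft2(img2))
    m = img1.shape[0]
    c = m // 2                                   # zero-frequency index after fftshift
    yy, xx = np.indices(img1.shape)
    ring_index = np.rint(np.hypot(yy - c, xx - c)).astype(int)
    frc = np.zeros(c)
    for k in range(c):                           # rings below the Nyquist radius
        ring = ring_index == k
        num = np.real(np.sum(F1[ring] * np.conj(F2[ring])))
        den = np.sqrt(np.sum(np.abs(F1[ring]) ** 2) * np.sum(np.abs(F2[ring]) ** 2))
        frc[k] = num / den if den > 0 else 0.0
    return frc

rng = np.random.default_rng(0)
truth = rng.random((64, 64))                     # hypothetical ground-truth image
noisy = truth + 0.5 * rng.normal(size=truth.shape)
frc_curve = fourier_ring_correlation(noisy, truth)
```

Identical images give an FRC of 1 in every ring, and added noise lowers the curve, most strongly at high spatial frequencies where the signal is weakest.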

Additionally, when the ground truth of the sample is available, as in the simulation scenario, the efficiency of the retrieved phase information is quantified using the structural similarity index measure (SSIM)32,65, a single scalar value that indicates the similarity between the retrieved phase information and that calculated from the ground truth. To quantitatively evaluate our method in real experimental scenarios, where sample images are reconstructed from diffraction images measured in actual X-ray diffraction experiments, we verify the fidelity of the reconstructed patterns by referring to ground-truth information about the samples and their motion. This information includes the shape and velocity of the sample in the Ta test chart experiment and the distinguishability between particles and solution in the experiment with AuNPs dispersed in PVA solution34.
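For reference, the SSIM combines luminance, contrast, and structure comparisons. The sketch below evaluates the SSIM formula once over the whole image, with the conventional constants $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$, where L is the data range (2π for phase images). This single-window version is a simplification of the standard SSIM, which averages the same expression over local, typically Gaussian-weighted, windows, so its values will differ from library implementations.

```python
import numpy as np

def global_ssim(x, y, data_range):
    """Single-window SSIM of two equally sized real images."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )

rng = np.random.default_rng(0)
phase_true = rng.uniform(-np.pi, np.pi, size=(64, 64))   # hypothetical ground-truth phase
phase_rec = phase_true + 0.3 * rng.normal(size=phase_true.shape)
score = global_ssim(phase_rec, phase_true, data_range=2 * np.pi)
```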

Phase retrieval for movement of Ta test chart

In this section, we discuss the first proof-of-concept experiment, which is conducted to evaluate the performance of PID3Net in phase retrieval for the moving Ta test chart. Before the main experiment, a preliminary study14 is performed to determine the appropriate probe function for reconstructing the phase image of a static tantalum (Ta) test chart. This study employs the mixed-state method within the framework of scanning CXDI (Fig. 3A), with the Ta test chart held stationary and an exposure time of 10 s at each scan position. In the main experiment, the Ta test chart is moved, and continuous single-shot CXDI measurements are collected to produce a video of diffraction images, with an exposure time of 7 ms per frame. Figure 3B shows selected frames between the first and the 1400th frame.
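The preset motion implies a small per-frame displacement: 340 nm s−1 × 7 ms ≈ 2.4 nm per frame. One simple way to estimate velocity from reconstructed phase images, sketched below, is to measure the integer-pixel shift between two frames separated by a lag using phase correlation and convert it with the pixel size and exposure time. The 10 nm pixel size, the 30-frame lag, the synthetic 7-pixel shift, and the helper names are hypothetical; the velocity analysis in this study may use a different estimator.

```python
import numpy as np

V_PRESET_NM_S = 340.0   # preset stage velocity (from the text)
DT_S = 7e-3             # exposure time per frame (from the text)
PIXEL_NM = 10.0         # hypothetical reconstruction pixel size

def phase_correlation_shift(a, b):
    """Integer (row, col) shift s such that b is approximately np.roll(a, s, axis=(0, 1))."""
    R = np.fft.fft2(b) * np.conj(np.fft.fft2(a))
    R /= np.abs(R) + 1e-12                  # keep only the phase difference
    corr = np.abs(np.fft.ifft2(R))          # peaks at the relative shift
    peak = np.array(np.unravel_index(np.argmax(corr), corr.shape))
    size = np.array(a.shape)
    wrap = peak > size // 2
    peak[wrap] -= size[wrap]                # map wrapped indices to signed shifts
    return peak

def velocity_nm_s(shift_px, lag_frames):
    """Convert a pixel shift over lag_frames frames into a velocity in nm/s."""
    return shift_px * PIXEL_NM / (lag_frames * DT_S)

per_frame_nm = V_PRESET_NM_S * DT_S         # about 2.4 nm per frame

rng = np.random.default_rng(0)
frame_a = rng.random((64, 64))
frame_b = np.roll(frame_a, (0, 7), axis=(0, 1))   # horizontal motion by 7 pixels
shift = phase_correlation_shift(frame_a, frame_b)
v_est = velocity_nm_s(shift[1], lag_frames=30)
```

With the assumed 10 nm pixels, consecutive frames differ by only about a quarter of a pixel, so comparing frames separated by tens of frames, or using sub-pixel registration, is needed for a meaningful estimate.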

Figure 4A presents the phase information retrieved from the diffraction images using the mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO methods. At first glance, the patterns and line-absorber shapes of the Ta test chart in the phase images reconstructed by PID3Net-PO and PID3Net-MAE are sharper and more stable over time than those retrieved by mixed-state or mf-PIE, as further illustrated in Supplementary Movie 1. The phase information retrieved using AutoPhaseNN and PtychoNN is also shown in this movie and in Supplementary Fig. 1; however, these reconstructions are less clear and less stable than those obtained with PID3Net-PO and PID3Net-MAE.

A Phase information was retrieved from diffraction intensity images of the moving Ta test chart with a 7 ms exposure time per frame, using the mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO methods. The frame index of each image is indicated in the top row. B Magnified views of the regions enclosed by green squares at the 400th frame, along with profiles of two circular arcs. The horizontal mark at the middle of each arc indicates its zero position. C Analysis of the phase profiles of the two circular arcs in the retrieved phase information from the measured diffraction intensity image at the 400th frame, monitoring phase shifts at different positions along the curved lines. D Phase retrieval transfer function (PRTF) analysis of the phase images reconstructed using the four phase retrieval methods. Dashed horizontal lines indicate the spatial resolutions at which the PRTF value falls below 1/e, marking the threshold for reliable phase retrieval. E Distribution of the estimated velocity from the acquired phase images over the first 400 frames. The dashed line represents the preset velocity of 340 nm s–1, and the white bar indicates the median of the distribution.

Next, to evaluate the spatial resolution of the images reconstructed by each phase retrieval method, phase profiles along two circular arcs on the Ta test chart are analyzed for each frame. Figure 4B shows an enlarged view of the phase information retrieved from the measured diffraction image at the 400th frame, highlighting the two arcs. The red arc crosses 100-nm-wide patterns, whereas the blue arc crosses 200-nm-wide patterns. These patterns consist of transmitted and absorbed regions, represented by areas with positive and negative phase shifts, respectively. Figure 4C plots the phase retrieved by the four methods along the two arcs. In the Ta test chart, the patterns are arranged periodically along each arc: absorbed and transmitted regions have equal widths along a given arc, but the widths differ between the two arcs. Both PID3Net-MAE and PID3Net-PO reconstruct the patterns crossed by the two arcs, faithfully reflecting the ground-truth shape of the Ta test chart. In contrast, the patterns are not clearly visible in the phase information retrieved by the mixed-state and mf-PIE methods. Like the iterative methods, the other DL methods, AutoPhaseNN and PtychoNN, do not reconstruct the patterns accurately (Supplementary Fig. 2B). Additionally, PID3Net-PO yields a more stable and smoother periodic phase transition than PID3Net-MAE. The pattern widths estimated from the PID3Net-PO reconstructions are approximately 120 nm and 210 nm for the red and blue arcs, respectively, in good agreement with the corresponding widths on the Ta test chart.
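The arc-profile analysis can be sketched in Python with NumPy. This is an illustrative sketch, not the authors' analysis code: the arc center, radius, and angular range are hypothetical pixel-unit parameters, the profile is sampled with bilinear interpolation, and the pattern width is assumed to be the mean spacing between sign changes of the phase, that is, between boundaries of transmitted (positive) and absorbed (negative) regions. Converting the width from pixels to nanometers requires the real-space pixel size of the reconstruction.

```python
import numpy as np


def arc_profile(image, center, radius, theta0, theta1, n_samples=200):
    """Sample a 2D image along a circular arc using bilinear interpolation.

    center is (row, col) in pixels and angles are in radians.
    Returns (arc_length_px, values), with arc length measured from theta0.
    """
    theta = np.linspace(theta0, theta1, n_samples)
    rows = center[0] + radius * np.sin(theta)
    cols = center[1] + radius * np.cos(theta)
    r0 = np.clip(np.floor(rows).astype(int), 0, image.shape[0] - 2)
    c0 = np.clip(np.floor(cols).astype(int), 0, image.shape[1] - 2)
    dr, dc = rows - r0, cols - c0
    values = ((1 - dr) * (1 - dc) * image[r0, c0]
              + (1 - dr) * dc * image[r0, c0 + 1]
              + dr * (1 - dc) * image[r0 + 1, c0]
              + dr * dc * image[r0 + 1, c0 + 1])
    return radius * (theta - theta0), values


def mean_segment_width(arc_length, values):
    """Mean arc length between consecutive sign changes of the phase profile."""
    crossings = np.nonzero(np.diff(values > 0))[0]
    if crossings.size < 2:
        return float("nan")
    return float(np.mean(np.diff(arc_length[crossings])))


# Synthetic check: 16 alternating +/- phase sectors around pixel (64, 64).
yy, xx = np.mgrid[0:128, 0:128]
sectors = (np.pi / 2) * np.sign(np.sin(8 * np.arctan2(yy - 64, xx - 64)))
s, v = arc_profile(sectors, center=(64, 64), radius=50, theta0=0.1, theta1=2.9)
print(mean_segment_width(s, v))  # near 50 * pi / 8, i.e. about 19.6 px
```

On a real reconstruction, noise near zero phase can create spurious sign changes, so smoothing the sampled profile before counting crossings may be necessary.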
These results quantitatively demonstrate that PID3Net-PO and PID3Net-MAE retrieve phase information with at least twice the spatial resolution of the iterative methods (mixed-state and mf-PIE) and of the DL methods AutoPhaseNN and PtychoNN.

To evaluate the reliability of the proposed methods in scenarios where, unlike the Ta test chart, ground-truth information about the sample is unavailable, we assessed how accurately the reconstructed sample images reproduce the observed diffraction images. This assessment is crucial for determining the practical applicability of the proposed methods. On average, the diffraction images reproduced from the mixed-state reconstructions show the smallest discrepancy from the measured diffraction images, with an Rf score of 0.84. PID3Net-MAE and PID3Net-PO score almost the same, at 0.84 and 0.85, respectively. The other DL-based methods score slightly higher, at 0.87 for both AutoPhaseNN36 and PtychoNN24. The diffraction images reproduced from the mf-PIE reconstructions exhibit the largest discrepancies, with an Rf score of 0.91.
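The Rf comparison can be sketched as follows. The sketch assumes an Rf form commonly used in CXDI: for each frame, the summed absolute difference between measured and calculated diffraction amplitudes is normalized by the summed measured amplitude, and the result is averaged over the N frames. Whether PID3Net uses exactly this normalization is an assumption. The helper `intensity_from_object` (a hypothetical name) computes far-field intensities under the mixed-state model by summing |FFT(P_k O)|² incoherently over probe modes P_k.

```python
import numpy as np


def rf_score(measured, calculated):
    """Assumed Rf form, averaged over N frames:

    Rf = (1/N) * sum_n [ sum_q |sqrt(I_obs) - sqrt(I_calc)| / sum_q sqrt(I_obs) ]
    measured, calculated: non-negative intensities of shape (N, H, W).
    """
    a_obs = np.sqrt(np.clip(measured, 0, None))
    a_calc = np.sqrt(np.clip(calculated, 0, None))
    per_frame = np.abs(a_obs - a_calc).sum(axis=(1, 2)) / a_obs.sum(axis=(1, 2))
    return float(per_frame.mean())


def intensity_from_object(obj, probes):
    """Hypothetical mixed-state forward model: I = sum_k |FFT(P_k * O)|^2.

    obj: complex object (H, W); probes: probe modes (K, H, W).
    """
    exit_waves = probes * obj[None]
    return (np.abs(np.fft.fft2(exit_waves)) ** 2).sum(axis=0)


rng = np.random.default_rng(0)
obj = np.exp(1j * rng.uniform(-1.0, 1.0, (16, 16)))
probes = np.stack([np.ones((16, 16)), 0.1 * np.ones((16, 16))])
calculated = intensity_from_object(obj, probes)[None]
measured = rng.poisson(calculated).astype(float)  # Poisson-noisy "measurement"
print(rf_score(measured, calculated))
```

A lower score means the calculated intensities agree better with the measurement; as the text notes, this agreement alone does not certify that the retrieved phase is correct.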

Figure 4D presents the PRTF indices, which evaluate how well the reconstructed diffraction patterns match the experimentally measured diffraction patterns across spatial frequencies, for the four methods: mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO. For each method, we calculate the average of the PRTF indices across all diffraction images in the entire video of the Ta test chart’s motion, providing a partial assessment of the fidelity of phase retrieval. While the PRTF reflects the ability to recover spatial features in Fourier space, it does not fully account for noise-induced inconsistencies or artifacts, which can inflate high-frequency PRTF values and obscure actual reconstruction fidelity66.

The PRTF indices for the mixed-state method and PID3Net-MAE are comparable and suggest better recovery of spatial features than the remaining methods across all spatial frequencies. Both methods achieve a full-period recovery limit of approximately 200 nm. The results for PID3Net-PO suggest a slightly reduced recovery ability, with a limit of approximately 230 nm. Conversely, mf-PIE exhibits the lowest recovery ability, with a spatial feature limit of approximately 280 nm, indicating reduced reliability for finer details. A similar analysis for phase images reconstructed using other DL methods is presented in Supplementary Fig. 2C. PtychoNN shows slightly worse performance than PID3Net-PO, while AutoPhaseNN achieves a recovery limit comparable to the mixed-state method and PID3Net-MAE, at approximately 200 nm.
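One common way to compute a PRTF curve and its 1/e resolution cut-off is sketched below. It is an assumed variant, not necessarily the one behind Fig. 4D: `recon_fields` holds J complex far-field reconstructions (for example, from repeated runs or adjacent frames), their coherent average is compared with the measured amplitude sqrt(I), both are averaged over rings of integer pixel radius, and the full-period resolution is 1/q at the first ring that falls below 1/e. The frequency step `dq` per pixel is a hypothetical input that depends on the detector geometry.

```python
import numpy as np


def ring_average(values, n_rings):
    """Average a centered 2D array over rings of integer pixel radius 0 .. n_rings - 1."""
    h, w = values.shape
    yy, xx = np.indices((h, w))
    rings = np.hypot(yy - h // 2, xx - w // 2).astype(int).ravel()
    sums = np.bincount(rings, weights=values.ravel(), minlength=n_rings)[:n_rings]
    counts = np.bincount(rings, minlength=n_rings)[:n_rings]
    return sums / np.maximum(counts, 1)


def prtf(recon_fields, measured_intensity, n_rings):
    """Ring-averaged |mean_j F_j(q)| divided by ring-averaged sqrt(I_meas(q)).

    recon_fields: (J, H, W) complex far fields with the zero frequency centered.
    measured_intensity: (H, W) measured diffraction intensity, also centered.
    """
    coherent_amp = np.abs(recon_fields.mean(axis=0))
    measured_amp = np.sqrt(np.clip(measured_intensity, 0, None))
    return ring_average(coherent_amp, n_rings) / np.maximum(
        ring_average(measured_amp, n_rings), 1e-12)


def resolution_at_threshold(curve, dq, threshold=1 / np.e):
    """Full-period resolution 1 / q at the first ring where curve < threshold."""
    below = np.nonzero(curve < threshold)[0]
    if below.size == 0 or below[0] == 0:
        return None
    return 1.0 / (below[0] * dq)
```

Reconstructions whose phases agree across runs keep the coherent average close to the measured amplitude (PRTF near 1), while inconsistent phases at high q average out and pull the curve below the threshold.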

Although the Rf scores and PRTF analysis quantify the overall similarity between the reconstructed and measured diffraction images across spatial frequencies, interpreting them in terms of reconstruction quality can be challenging, especially under noisy or low signal-to-noise ratio conditions. Grainy artifacts, often exacerbated by noise, can introduce inconsistencies in the reconstruction and produce misleadingly high PRTF values at certain spatial frequencies, falsely suggesting improved resolution66. This phenomenon is investigated further in Supplementary Section III.

In addition to matching the measured diffraction images, phase retrieval methods must also accurately reconstruct phase information that captures the dynamic behaviors of the sample. In our method, the temporal block (TB) is specifically designed to retrieve phase information from adjacent frames in a sequence of diffraction images, leveraging temporal correlations to improve robustness to noise and ensure consistent depiction of dynamic behaviors. This approach contrasts with traditional methods that independently retrieve phase information for each frame, often neglecting temporal coherence critical for dynamic reconstruction.

We further evaluated how well the phase information retrieved by our methods depicts the motion of the Ta test chart. We applied cross-correlation to pairs of adjacent phase images retrieved by the six methods (mixed-state, mf-PIE, AutoPhaseNN, PtychoNN, PID3Net-MAE, and PID3Net-PO) and tracked the number of shifted pixels to estimate the velocity of the Ta test chart. As shown in Fig. 4E, the average velocities estimated from the mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO reconstructions are close to the velocity set in the experiment. In contrast, the velocities derived from the other DL methods, AutoPhaseNN and PtychoNN, are lower than the actual velocity (Supplementary Fig. 2D). Notably, the instantaneous velocities estimated from the PID3Net-MAE and PID3Net-PO reconstructions are more stable, with smaller variances, than those estimated from the mixed-state and mf-PIE reconstructions. These results indicate that PID3Net learns to reconstruct phase information consistent with the dynamic behavior of the sample, in which the Ta test chart moves horizontally relative to the X-ray beam from left to right at a fixed velocity.
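The velocity estimate from adjacent phase images can be sketched with FFT-based cross-correlation: the peak of the circular cross-correlation gives the shift between two frames, and a three-point parabolic fit around the peak refines it to sub-pixel precision. Sub-pixel refinement matters here because, at 340 nm s−1 and 7 ms per frame, the chart moves only about 2.4 nm between frames. The function names and the real-space pixel size `pixel_nm` are hypothetical; this is a sketch, not the authors' implementation.

```python
import numpy as np


def estimate_shift(frame_a, frame_b):
    """Return (dy, dx) such that frame_b is approximately frame_a shifted by (dy, dx) px.

    Uses circular FFT cross-correlation plus a 3-point parabolic fit per axis.
    """
    a = frame_a - frame_a.mean()
    b = frame_b - frame_b.mean()
    xcorr = np.fft.ifft2(np.conj(np.fft.fft2(a)) * np.fft.fft2(b)).real
    h, w = xcorr.shape
    iy, ix = np.unravel_index(np.argmax(xcorr), xcorr.shape)

    def vertex(c_minus, c0, c_plus):
        # Offset of the parabola vertex through (-1, c_minus), (0, c0), (1, c_plus).
        denom = c_minus - 2.0 * c0 + c_plus
        return 0.0 if denom == 0 else 0.5 * (c_minus - c_plus) / denom

    dy = iy + vertex(xcorr[(iy - 1) % h, ix], xcorr[iy, ix], xcorr[(iy + 1) % h, ix])
    dx = ix + vertex(xcorr[iy, (ix - 1) % w], xcorr[iy, ix], xcorr[iy, (ix + 1) % w])
    # Peaks past the midpoint correspond to negative (wrapped-around) shifts.
    if dy > h / 2:
        dy -= h
    if dx > w / 2:
        dx -= w
    return float(dy), float(dx)


def velocity_nm_per_s(shift_px, pixel_nm, dt_s):
    """Convert a per-frame shift in pixels to a velocity in nm/s."""
    return shift_px * pixel_nm / dt_s
```

Applied to a sequence of reconstructed phase images, the horizontal component `estimate_shift(p0, p1)[1]` for each adjacent pair, converted with `velocity_nm_per_s`, gives a distribution of instantaneous velocities like the one summarized in Fig. 4E.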

The preceding results show that our proposed method can reconstruct high-quality images of the moving Ta test chart by adding constraints that enforce smoothness in both the spatial and temporal domains. These high-quality reconstructions may be attributed to the capacity of the DL method to capture the underlying mechanisms of the sample's dynamic behavior, thereby addressing both random noise in the observed diffraction images and the multiple-solution problem of phase retrieval. To test this capacity, we simulated a hypothetical movement of the Ta test chart by rearranging the order of the consecutive measured diffraction images. In this test, the Ta test chart repeatedly moves from left to right over 400 frames and then back to the left over the subsequent 200 frames, instead of moving in only one direction as in the actual experiment. The PID3Net-PO model, trained on the experimental dataset featuring movement in only one direction, successfully retrieved high-resolution images for the simulated movement with changes in direction (Supplementary Movie 2) without retraining. This finding suggests that the DL method holds promise for capturing the dynamic behavior of a sample moving horizontally relative to the X-ray beam, and thus for producing high-quality image reconstructions.
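The frame reordering used for this test can be expressed as an index sequence over the measured stack. The sketch assumes one plausible reading of the procedure: play 400 frames forward, step back through the previous 200 frames in reverse, then continue forward from there, so that consecutive indices always differ by exactly one frame. The function name and the exact turning rule are hypothetical.

```python
def back_and_forth_indices(n_frames, forward=400, backward=200):
    """Hypothetical reordering: `forward` frames left-to-right, then `backward`
    frames right-to-left, repeated while a full forward segment still fits."""
    order = []
    pos = 0
    while pos + forward <= n_frames:
        end = pos + forward - 1
        order.extend(range(pos, end + 1))                     # left -> right
        order.extend(range(end - 1, end - backward - 1, -1))  # right -> left
        pos = end - backward + 1                              # resume moving right
    return order


order = back_and_forth_indices(1000)
print(len(order), order[398:402])
```

For a NumPy stack of shape (frames, H, W), `stack[back_and_forth_indices(len(stack))]` yields the reordered sequence, which can then be fed to the trained model in windows of T consecutive frames.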

In summary, our method yielded high-quality images that accurately captured the movement of the Ta test chart, surpassing the iterative and DL methods. The iterative methods somewhat captured the dynamic behavior of the moving Ta test chart but resulted in lower-quality images, while the other DL methods failed to portray the sample’s dynamic behavior accurately. Consequently, in the forthcoming evaluation experiments, we compared our method with only two iterative methods: mixed-state and mf-PIE. Besides accurately reconstructing high-quality images of the Ta test chart, our method is less time-intensive than iterative methods. As shown in Supplementary Table II, our method is four times faster than the mixed-state method and thirty-two times faster than the mf-PIE method in training, while the inference time of our method is thousands of times faster, making it highly suitable for practical deployment.

Phase retrieval for simulated movement of AuNP in solution

In the second evaluation experiment, we examine the efficacy of PID3Net in retrieving phase information for the motion of AuNPs in solution from simulated diffraction images. Numerical simulations provide a controlled setting with known ground truth, allowing an accurate assessment of our method's efficacy before it is applied to actual experimental data.

Figure 5A shows the schematic of the simulation for the AuNPs dispersed in the solution and a simulated diffraction image. This simulation uses four modes of the numerical probe function, which are reconstructed using scanning CXDI34. Figure 5B presents the phase information retrieved from the simulated diffraction images using the mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO methods. PID3Net outperforms the other methods, particularly in the contrast between the AuNPs and the background, which allows the particle shapes to be captured more accurately from the retrieved phase information.

A Schematic of the simulation setup for the AuNPs dispersed in solution, along with a simulated diffraction image. The diffraction images were accumulated over a 100 ms exposure time using four-mode probe functions, which were reconstructed via scanning CXDI. B Amplitude (top) and phase (bottom) information in the first frame were reconstructed using the mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO methods. C Fourier ring correlation (FRC) analysis of the reconstructed and simulated phase images, and PRTF analysis of the reconstructed and simulated diffraction images using the four phase retrieval methods. The dashed line indicates the threshold value of 1/e and the corresponding spatial frequency.

At first glance, the distinction between AuNPs and the solution in the phase images reconstructed by PID3Net-PO and PID3Net-MAE appears sharper and more stable over time than in those retrieved by mixed-state or mf-PIE, as further illustrated in Supplementary Movie 3. In particular, PID3Net-PO and PID3Net-MAE retrieve finer details and more rounded edges, which better match the ground truth of the simulation and are crucial for interpreting and analyzing the motion of the AuNPs. Additionally, PID3Net-PO shows potential for accurately capturing amplitude information, which is essential for characterizing the intensity distribution of the sample. However, reconstructing amplitude at short exposure times remains challenging in practice for all methods.

Table 2 presents a quantitative evaluation of the phase retrieval accuracy of the four methods based on differences in both diffraction and phase images. PID3Net-MAE achieves the lowest Rf score, 0.69, indicating that the diffraction images calculated from its reconstructions are closest to the simulated diffraction images. However, PID3Net-PO, despite a slightly higher Rf score, reconstructs the phase information closest to the ground truth of the simulation, as evidenced by the highest SSIM score (0.95) among all methods. The mixed-state method achieves an Rf score comparable to those of our methods, but its phase reconstructions deviate from the ground truth, as evidenced by its significantly lower SSIM score. Conversely, mf-PIE has the worst Rf score, 0.82, yet its SSIM score is much higher than that of the mixed-state method. These results again suggest that a better Rf score does not guarantee better phase information, owing to the multiple-solution problem of phase retrieval.

Furthermore, the spatial resolution of the retrieved phase images is assessed quantitatively using both FRC and PRTF analyses (Fig. 5C). The FRC curves for the phase images from PID3Net-MAE and PID3Net-PO lie above those of the other methods at all spatial frequencies. Notably, the spatial resolutions of the phase images retrieved by PID3Net-MAE and PID3Net-PO reach 185 nm and 175 nm, respectively. In comparison, the reliable spatial resolutions for mixed-state and mf-PIE are limited to 305 nm and 315 nm, respectively. However, in the PRTF analysis of the reconstructed diffraction images, the mixed-state method and PID3Net-MAE achieve slightly better spatial resolution, approximately 140 nm, whereas PID3Net-PO and mf-PIE reach 150 nm and 250 nm, respectively.
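FRC compares two images ring by ring in Fourier space: for each ring of integer radius, the real part of the summed cross-spectrum F1·F2* is normalized by the geometric mean of the two rings' powers. The sketch below assumes square images and the 1/e threshold used in Fig. 5C; `first_below` and `full_period_nm` are hypothetical helpers, the latter converting a ring index k of an n-pixel image with real-space pixel size p into the full-period resolution n·p/k.

```python
import numpy as np


def frc(img1, img2):
    """Fourier ring correlation per integer-radius ring (rings 0 .. min(H, W)//2 - 1)."""
    f1 = np.fft.fftshift(np.fft.fft2(img1))
    f2 = np.fft.fftshift(np.fft.fft2(img2))
    h, w = img1.shape
    yy, xx = np.indices((h, w))
    rings = np.hypot(yy - h // 2, xx - w // 2).astype(int).ravel()
    n = min(h, w) // 2

    def ring_sum(values):
        return np.bincount(rings, weights=values.ravel(), minlength=n)[:n]

    cross = ring_sum((f1 * np.conj(f2)).real)
    power = ring_sum(np.abs(f1) ** 2) * ring_sum(np.abs(f2) ** 2)
    return cross / np.sqrt(np.maximum(power, 1e-30))


def first_below(curve, threshold=1 / np.e):
    """Index of the first ring whose value drops below the threshold, or None."""
    idx = np.nonzero(curve < threshold)[0]
    return int(idx[0]) if idx.size else None


def full_period_nm(ring, n_pixels, pixel_nm):
    """Full-period resolution for ring index `ring` of an n_pixels-wide image."""
    return n_pixels * pixel_nm / ring
```

Comparing a reconstructed phase image against the simulated ground truth with `frc`, then passing the first ring below 1/e to `full_period_nm`, gives a resolution estimate of the kind quoted above; unlike PRTF, this requires the ground truth and therefore applies only to simulations.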

To further investigate the differences between PRTF and FRC behaviors in our proposed method, we conduct four additional simulation experiments by varying the intensity of the probe light while keeping the simulation conditions for the particles constant. These simulations are designed to mimic the trade-off between signal-to-noise ratio (SNR) and temporal resolution commonly encountered in real experiments. The PRTF analysis across these different incident photon flux levels revealed that, although PRTF scores remained relatively consistent across the examined phase reconstruction methods, the actual reconstruction quality varied significantly. Our proposed method produces sharper and clearer reconstructions compared to other methods, as confirmed by both visual inspection and FRC measurements against the ground truth (Supplementary Figs. 5 and 6). The comparison with the Ta test chart data and these findings further suggest that a high PRTF score does not necessarily guarantee better reconstruction quality, particularly in noisy and low-SNR measurement scenarios, such as those encountered in dynamic imaging experiments with short exposure times. All experimental settings and results are detailed in Supplementary Section III.

In summary, these statistical results underscore the efficacy of PID3Net in accurately retrieving phase information for simulated AuNP motion with high spatial resolution, compared with the two iterative phase retrieval methods. These promising outcomes suggest that PID3Net is applicable to real experimental scenarios, where diffraction images are measured with an experimental optical system rather than simulated. Additionally, this experiment shows that while the Rf and PRTF metrics indicate how consistent a reconstruction is with the observed diffraction images, the SSIM and FRC metrics offer a more direct measure of the fidelity of the reconstructed image. This distinction underscores the importance of considering both types of metrics when evaluating phase retrieval methods.

Phase retrieval for the experimental movement of AuNPs in the PVA solution

The previous evaluation, based on numerical simulations of nanoparticle motion, demonstrated the efficacy of PID3Net in phase retrieval for the dynamic behavior of AuNPs in solution. Statistical analyses against ground-truth images and prior knowledge of the AuNPs' dynamics, such as known velocities, showed that PID3Net enables high-resolution image reconstruction and aids in understanding the mechanisms of AuNP motion in solution. In the third experiment, we apply the proposed method to measured diffraction images of an actual sample, colloidal AuNPs34 approximately 150 nm in size moving in a 4.5 wt% polyvinyl alcohol (PVA) solution, to assess its efficacy under real experimental conditions.

Figure 6A shows images reconstructed from one-second-exposure diffraction images across five frames using mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO. The images reconstructed by mixed-state and mf-PIE appear blurred and noisy, indicating that particle positions and contrast are poorly resolved. In contrast, PID3Net-PO clearly reproduces the particles' positions and contrast, yielding smoother reconstructions with markedly reduced noise. However, phase recovery with PID3Net-MAE is less successful, yielding images with diminished contrast and increased blurriness. We attribute this to its MAE loss, which averages pixel losses across the image and can smooth out critical details. For a more detailed visualization of these results, refer to Supplementary Movie 4. The Rf scores for mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO are 0.33, 0.62, 0.49, and 0.53, respectively. Moreover, the PRTF curves for mixed-state and PID3Net-MAE are comparable and lie above those of the other two methods across spatial resolutions (Fig. 6B). The full-period spatial resolution limits for mixed-state and PID3Net-MAE are approximately 80 nm, whereas those for PID3Net-PO and mf-PIE are 160 nm and 220 nm, respectively.

A Phase information was retrieved from experimentally measured diffraction images of AuNPs dispersed in PVA solution with a one-second exposure time per frame. Phase images were reconstructed using the mixed-state, mf-PIE, PID3Net-MAE, and PID3Net-PO methods. The rightmost images show magnified views of the regions enclosed by red squares in the 2000th frame. B PRTF analysis of the reconstructed phase images using the four phase retrieval methods. The dashed line indicates the threshold value of 1/e and the corresponding spatial frequency. C Distributions of entropies for phase images reconstructed using the four methods. D Pixel intensity distributions of the particle and solution regions in the reconstructed phase images.

We further evaluated quantitatively how well the phase information retrieved by our methods captures the movement of the AuNPs in the PVA solution. Figure 6C shows the distribution of entropy for the reconstructed phase images, which quantifies the variability of pixel values within each image. Lower entropy corresponds to more ordered, less complex phase distributions, which suggests higher reconstruction fidelity with fewer artifacts. Notably, PID3Net-PO exhibits lower entropy than PID3Net-MAE, and significantly lower entropy than the mixed-state and mf-PIE methods, highlighting the enhanced contrast and superior noise reduction of PID3Net-PO reconstructions.
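The entropy metric can be sketched as the Shannon entropy (in bits) of the pixel-value histogram of each phase image. The bin count (256) and the value range [−π, π], chosen to match the π·tanh phase output, are assumptions rather than parameters stated in the text.

```python
import numpy as np


def image_entropy(phase_image, bins=256, value_range=(-np.pi, np.pi)):
    """Shannon entropy (bits) of the histogram of pixel values in a phase image."""
    counts, _ = np.histogram(phase_image, bins=bins, range=value_range)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log2(p)).sum())


rng = np.random.default_rng(0)
noisy = rng.uniform(-np.pi, np.pi, (128, 128))       # artifact-like noise
two_level = np.where(np.arange(128 * 128).reshape(128, 128) % 2 == 0, -1.0, 1.0)
print(image_entropy(noisy), image_entropy(two_level))
```

Noise spreads pixel values over many bins and raises the entropy toward log2(256) = 8 bits, whereas a clean two-level image scores 1 bit, which is why lower entropy is read as fewer artifacts.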

Additionally, an adaptive threshold filter34 is employed to assess how well each method supports accurate detection of gold particles. Using the same settings for all methods to separate the particle signal from noise and the background (solution), the images reconstructed by PID3Net-PO show a markedly clearer separation between the particle and PVA solution distributions, as illustrated in Fig. 6D. Supplementary Section IV presents a detailed analysis of the diameter distribution of the particles detected using our algorithm, building upon our previous work34. In that work, using X-ray photon correlation spectroscopy (XPCS), we measured the diffusion coefficient D_XPCS to be 7550 ± 850 nm² s−1. Based on this diffusion coefficient, we estimate that the particles move at an average speed of approximately 50 nm s−1. The mean diameter of the particles detected using PID3Net-PO is approximately 200 nm, whereas the fabricated particles are designed to have a diameter of 150 nm. This difference agrees with the expectation from our XPCS analysis, corresponding to a radial displacement of approximately 50 nm during the one-second exposure time.
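A generic adaptive threshold illustrates the particle-detection step: each pixel is compared with the mean of its local window plus an offset, connected foreground pixels are grouped into particles, and each particle's diameter is estimated as that of an equal-area disk. This is not the filter of ref. 34; the window half-width, offset, minimum area, and pixel size are hypothetical parameters, and only NumPy and the standard library are used.

```python
import numpy as np
from collections import deque


def local_mean(img, half):
    """Mean over a (2*half+1)^2 window via an integral image (reflective edges)."""
    k = 2 * half + 1
    h, w = img.shape
    padded = np.pad(img, half, mode="reflect")
    s = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    window_sum = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return window_sum / k**2


def adaptive_threshold(img, half=10, offset=0.1):
    """Foreground where a pixel exceeds its local mean by more than `offset`."""
    return img > local_mean(img, half) + offset


def label_components(mask):
    """4-connected component labeling by breadth-first search; returns (labels, count)."""
    labels = np.zeros(mask.shape, dtype=int)
    h, w = mask.shape
    count = 0
    for r, c in zip(*np.nonzero(mask)):
        if labels[r, c]:
            continue
        count += 1
        labels[r, c] = count
        queue = deque([(r, c)])
        while queue:
            y, x = queue.popleft()
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = count
                    queue.append((ny, nx))
    return labels, count


def equivalent_diameters(labels, count, pixel_nm, min_area=4):
    """Diameter (nm) of the equal-area disk for each component of at least min_area pixels."""
    areas = np.bincount(labels.ravel(), minlength=count + 1)[1:]
    areas = areas[areas >= min_area]
    return 2.0 * np.sqrt(areas / np.pi) * pixel_nm
```

If particles appear with a negative phase relative to the solution, the image should be negated before thresholding. Motion blur during a long exposure enlarges the detected areas, consistent with the diameter overestimate discussed above.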

Although further analyses are required to fully comprehend the nanoscale dynamics of each specific material, the results obtained from all three evaluation experiments underscore the efficacy of the PID3Net method. The temporal self-consistent learning approach employed in both Fourier and real spaces offers crucial support for improving phase-retrieval quality, providing valuable insights for further development of phase-retrieval methods in CXDI to depict the dynamic process of the sample.

Discussion

PID3Net is a DL architecture specifically engineered to address the phase retrieval challenges of CXDI in depicting dynamic processes of the sample. The network uses a physics-informed learning strategy to reconstruct complex sample structures from sequential diffraction images. Its key strength lies in applying 3D convolutional layers to temporal sequences of diffraction images, which enables the model to learn representations of the diffraction images self-consistently and thereby capture intricate features present in experimental data. PID3Net then integrates these learned representations to perform inverse reconstruction of sample images via deep dynamic diffraction imaging. This approach yields accurate, high-fidelity reconstructions even in the presence of dynamic variations and significant noise.

However, DL models have limitations compared with traditional iterative reconstruction methods. Whereas iterative methods can work effectively with a single data point, DL models typically require substantial data for training and convergence. The availability and quality of training data, the complexity of the imaging system, and the computational resources required for training are important considerations. Despite these challenges, the successful implementation of PID3Net represents an advance in coherent X-ray imaging.

Future studies could integrate physical models and prior knowledge into the DL framework to reduce training data requirements and improve generalizability. Advances in transfer learning could also allow models trained on data from one type of material to be adapted to other materials, improving the versatility and applicability of DL methods in CXDI. Furthermore, combining numerical simulation with physics-informed DL in a reinforcement learning framework could enhance the model's ability to learn and adapt to dynamic changes in real time, improving phase retrieval performance and robustness.

PID3Net is a valuable tool for various scientific and industrial applications owing to its ability to generate accurate reconstructions in real time and to handle dynamic changes in sample position efficiently. The development of PID3Net will enhance our understanding of material systems at the nanoscale, potentially transforming nanomaterial research and development.

Methods

Encoder-Decoder block

In the PID3Net architecture, the input is a stack of T consecutive diffraction images of shape T × H × W, and TBs with different kernel sizes along the temporal dimension are introduced. The encoder block comprises four TB layers, each followed by max pooling. We denote a convolution kernel as F × K × W × W, where F is the number of filters, and K and W are the kernel sizes in the temporal and spatial dimensions, respectively. As shown in Fig. 2A, one TB consists of three CNN layers, F × 1 × 3 × 3 + (F/2) × 3 × 3 × 3 + (F/2) × 5 × 3 × 3, which accumulate information in both the spatial and temporal dimensions to encode the input diffraction images. Following each TB layer, a 1 × 2 × 2 max-pooling layer halves the spatial size of the image while preserving the temporal dimension. Two separate decoder branches then decode the amplitude and phase information. In these branches, TB layers are combined with 1 × 2 × 2 transposed convolution layers to reconstruct the details of the sample shape; instead of simple nearest-neighbor interpolation, the transposed convolution learns the upsampling adaptively. Finally, a 1 × 3 × 3 convolution layer produces the output of each decoder branch, with sigmoid and π·tanh activation functions for the amplitude and phase outputs, respectively. The model parameters and architecture details are presented in Supplementary Table I. The impact of the TB and RB layers is analyzed in Supplementary Section II.
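The shape bookkeeping of the encoder can be checked with a short NumPy sketch: a 1 × 2 × 2 max pool halves H and W while leaving T untouched, and a temporal kernel of size K keeps T fixed only with "same" padding of (K − 1)/2 frames. The 128 × 128 input size and the padding choice are assumptions for illustration; the actual architecture details are given in Supplementary Table I, and this sketch is not the PID3Net implementation.

```python
import numpy as np


def maxpool_1x2x2(x):
    """1 x 2 x 2 max pooling on a (C, T, H, W) array: halves H and W, keeps T."""
    c, t, h, w = x.shape
    if h % 2 or w % 2:
        raise ValueError("H and W must be even")
    return x.reshape(c, t, h // 2, 2, w // 2, 2).max(axis=(3, 5))


def conv_out_len(length, kernel, pad, stride=1):
    """Output length of a convolution along one axis (e.g. the temporal axis)."""
    return (length + 2 * pad - kernel) // stride + 1


def encoder_shapes(t, h, w, n_levels=4):
    """(T, H, W) after each TB + 1x2x2 max-pool stage, assuming 'same' padding
    so that the convolutions themselves keep T, H, and W unchanged."""
    shapes = []
    for _ in range(n_levels):
        h, w = h // 2, w // 2
        shapes.append((t, h, w))
    return shapes


# With T = 5, temporal kernels K = 1, 3, 5 with padding (K - 1) // 2 all keep 5 frames.
print([conv_out_len(5, k, (k - 1) // 2) for k in (1, 3, 5)])  # [5, 5, 5]
print(encoder_shapes(5, 128, 128))  # [(5, 64, 64), (5, 32, 32), (5, 16, 16), (5, 8, 8)]
```

Keeping T fixed through the encoder is what lets each decoder output frame stay aligned with its input diffraction frame.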

Model training

For phase retrieval, PID3Net functions as a physics-informed learning model, minimizing the difference between the measured diffraction image and the intensity image estimated by the network. The consistency of the reconstructions with the observed diffraction datasets during phase retrieval is monitored using the Rf factor, defined as follows:

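where N is the number of diffraction images in the datasets. The consistency between the reconstructed image ŷ and the ground-truth image y in simulation scenarios is evaluated using the SSIM score, defined as:

$$\mathrm{SSIM}(y,\hat{y})=\frac{(2\mu_{y}\mu_{\hat{y}}+C_1)(2\sigma_{y\hat{y}}+C_2)}{(\mu_{y}^{2}+\mu_{\hat{y}}^{2}+C_1)(\sigma_{y}^{2}+\sigma_{\hat{y}}^{2}+C_2)},$$

where μ and σ² denote means and variances, σ_{yŷ} is the covariance of y and ŷ, and C1 = (0.01L)² and C2 = (0.03L)², with L the dynamic range of the pixel values, are the constants of the standard SSIM implementation65.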
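A single-window SSIM can be written directly from the standard formula. Standard implementations compute SSIM over local (typically Gaussian-weighted 11 × 11) windows and average the resulting map, so the global version below is a simplification. It assumes the usual constants K1 = 0.01 and K2 = 0.03 and a data range L = 2π for phase images in [−π, π].

```python
import numpy as np


def ssim_global(y, y_hat, data_range, k1=0.01, k2=0.03):
    """Single-window SSIM between two images (no local averaging)."""
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_y, mu_h = y.mean(), y_hat.mean()
    var_y, var_h = y.var(), y_hat.var()
    cov = ((y - mu_y) * (y_hat - mu_h)).mean()
    return float((2 * mu_y * mu_h + c1) * (2 * cov + c2)
                 / ((mu_y**2 + mu_h**2 + c1) * (var_y + var_h + c2)))


rng = np.random.default_rng(0)
truth = rng.uniform(-np.pi, np.pi, (32, 32))
recon = truth + rng.normal(0, 0.3, truth.shape)  # hypothetical noisy reconstruction
print(ssim_global(truth, recon, data_range=2 * np.pi))
```

SSIM equals 1 only for identical images and decreases as noise or structural differences grow, which is why it complements Rf when the ground truth is known.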

The model is trained with the Adam optimizer on a single Tesla A100-PCIe graphics processing unit with 40 GB of memory. The learning rate is set to 0.001 and the batch size to eight. The network is trained for 20 and 50 epochs on the test chart and AuNP datasets, respectively. Remarkably, PID3Net has fewer than one million parameters. In this study, the number of sequential images used for learning (T) is set to five for all datasets. The number of iteration updates in the RB layer is set to five as a performance–speed trade-off. For the iterative reconstruction methods, the number of iterations is chosen such that the Rf score saturates and no longer decreases.

Setups of CXDI for experiments

The illumination in our single-shot CXDI setup is the image of a triangular aperture formed by the zone plate (Fig. 1). The sharpness of this illumination depends on the quality of the zone plate, which may affect the reconstruction quality of the proposed PID3Net method. Using a zone plate improves reconstruction image quality by eliminating parasitic scattering from the mask's inner walls. Conversely, using a mask without a zone plate increases the incident X-ray intensity, because zone plates typically have low diffraction efficiency, and thus improves the signal-to-noise ratio of the diffraction data.

Placing the mask closer to the sample could improve illumination sharpness and reconstruction quality but also introduces challenges related to scattering, alignment, and sample positioning. Optimal mask placement depends on the specific experimental requirements and sample characteristics. A detailed investigation of the effects of mask placement, and of the trade-offs between using a zone plate and a mask alone, on the performance of PID3Net is an interesting topic for future research but is beyond the scope of the current study.

Experimental CXDI

The experimental setup used a 5 keV monochromatic incident X-ray beam shaped by a triangular aperture (side length: 10 μm). The triangular apertures are fabricated by focused ion beam processing of a 15-μm-thick platinum foil polished on both sides. A Fresnel zone plate (FZP) is installed to demagnify the triangular aperture by a factor of two, producing a triangular X-ray beam approximately 5 μm per side that illuminates the sample. To suppress X-ray scattering in air, all optical elements, the sample, and the detector are enclosed in a vacuum chamber maintained below 1 Pa. The temperature increase owing to X-ray absorption in the solution is estimated to be less than 0.2 K s−1. Because heat is dissipated by convection in the solution, the temperature increase due to X-ray irradiation is considered negligible.

In the first experiment, the probe functions are reconstructed using the mixed-state method14,57 via scanning CXDI with an exposure time of 10 s at each scan position. The sample is scanned over 15 × 15 overlapping fields of view, separated by 500 nm in the horizontal and vertical directions. As shown in Fig. 3A, the probe function is decomposed into five orthogonal modes. All modes show the image of the triangular aperture, reduced to half size by the FZP, with the first mode containing 89.6 % of all photons. The intensities of the five probe modes are distributed as follows: 89.6, 4.4, 2.4, and 1.7 %. Subsequently, the Ta test chart is continuously translated across the same X-ray beam for single-shot CXDI. During these translations, diffraction images from the illuminated area are recorded continuously at 7 ms intervals for 15 s while the chart moves at 340 nm s−1. The incident photon flux on the sample surface is maintained at 10^7 photons s−1, resulting in a dynamic range of 10^4.2 photons pixel−1 in these diffraction intensity images. The diffraction intensity images are recorded using an in-vacuum pixelated detector (CITIUS detector)67,68 with a pixel size of 72.6 μm, positioned 3.30 m downstream from the sample.
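The detector geometry fixes how detector pixels map to spatial frequency. Under the small-angle approximation, one pixel spans Δq = p/(λz), with λ [nm] ≈ 1.23984/E [keV], and a full-period feature size d appears at a radius of (1/d)/Δq pixels from the beam center. The sketch evaluates this for the Ta experiment (72.6 μm pixels, 3.30 m, 5 keV), together with the per-frame displacement of the chart; these are numbers derived from the stated settings, not values reported by the authors.

```python
HC_KEV_NM = 1.23984  # h * c in keV * nm


def q_step_inv_nm(pixel_um, distance_m, energy_kev):
    """Spatial-frequency step per detector pixel (small-angle): p / (lambda * z), in 1/nm."""
    wavelength_m = HC_KEV_NM / energy_kev * 1e-9
    return (pixel_um * 1e-6) / (wavelength_m * distance_m) * 1e-9  # 1/m -> 1/nm


def detector_radius_px(full_period_nm, dq_inv_nm):
    """Pixels from the beam center at which the spatial frequency 1 / full_period_nm falls."""
    return (1.0 / full_period_nm) / dq_inv_nm


def per_frame_displacement_nm(velocity_nm_s, frame_interval_s):
    """Distance the sample travels between consecutive frames."""
    return velocity_nm_s * frame_interval_s


dq_ta = q_step_inv_nm(72.6, 3.30, 5.0)  # CITIUS geometry, Ta test chart
print(f"dq = {dq_ta:.3e} nm^-1")
print(f"200 nm full period at r = {detector_radius_px(200.0, dq_ta):.1f} px")
print(f"{per_frame_displacement_nm(340.0, 0.007):.2f} nm per 7 ms frame")
```

With these numbers, one pixel corresponds to Δq ≈ 8.87 × 10^−5 nm^−1, a 200 nm full period lies about 56 pixels from the beam center, and the chart moves about 2.38 nm between 7 ms frames (roughly 2143 frames over 15 s).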

In the third experiment, we reused the four probe modes introduced in our previous study of gold nanoparticle dynamics in solution34. These probe functions are reconstructed through scanning CXDI; the four mutually orthogonal modes account for 90.9, 5.4, 2.2, and 1.5 % of all photons in a single exposure. We used gold for the probe particles because of its chemical inertness, biocompatibility, and resistance to deformation, which make it safe for biological systems and suitable for studying mechanical stress and strain in materials and cells. The AuNPs are fabricated with a diameter of 150 nm. During the experiment, we continuously recorded diffraction images from the illuminated area with an exposure time of 1 s. A constant incident photon flux of approximately 3 × 10^6 photons s−1 is maintained on the sample surface, resulting in a dynamic range of 10^5.1 photons pixel−1 in the diffraction intensity images. The diffraction intensity images are recorded using an in-vacuum pixelated detector (EIGER 1M, Dectris) with a pixel size of 75 μm, positioned 3.14 m downstream from the sample.

Simulation of CXDI

For the second experiment, a wave-optical simulation of the illumination optics is conducted using an off-axis FZP configuration under the specified experimental conditions. This simulation is used to evaluate the efficacy of our proposed phase retrieval method in imaging the motion of AuNPs before applying it to a real scenario, so all simulation settings are aligned with those of the actual experiment described above. AuNPs are simulated with a diameter of 300 nm, and their number is chosen so that they occupy a fraction of 0.1 of the simulated area. The simulation uses an optical configuration with a photon energy of 5 keV and a photon flux of 3 × 10^6 photons s−1, similar to the actual experiment. The diffraction intensity images are generated as consecutive frames with an exposure time of 100 ms and a dynamic range of 10^4.1 photons pixel−1. Photon-counting noise following Poisson statistics is added to the diffraction images. In our previous study34, the average velocity of AuNPs in the actual experiment was reported as approximately 200 nm s−1, estimated using X-ray photon correlation spectroscopy (XPCS). Consistent with these observations, a controlled particle velocity of approximately 200 nm s−1 is applied in the simulation.
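The simulated data pipeline can be sketched as follows. Disks standing in for AuNPs are given a uniform phase shift, each particle steps a fixed distance in a random direction every frame, the far-field intensity is summed incoherently over probe modes and scaled to the photon count per exposure (3 × 10^6 photons s−1 × 0.1 s = 3 × 10^5 photons), and Poisson noise is applied. The flat-top probe modes in the test, the disk phase value, the random-walk motion model, the pixel size, and the reading of "0.1 ratio" as an area fraction are hypothetical placeholders; the paper's probes come from the wave-optical and scanning-CXDI reconstructions described above.

```python
import numpy as np


def disk_phase(size, centers_px, radius_px, phase_shift):
    """Phase map with a uniform phase shift inside each disk (overlaps are not added)."""
    yy, xx = np.indices((size, size))
    phase = np.zeros((size, size))
    for cy, cx in centers_px:
        phase[(yy - cy) ** 2 + (xx - cx) ** 2 <= radius_px**2] = phase_shift
    return phase


def simulate_frames(probes, n_frames, pixel_nm=20.0, diameter_nm=300.0,
                    area_fraction=0.1, speed_nm_s=200.0, dt_s=0.1,
                    flux_per_s=3e6, phase_shift=0.5, seed=0):
    """Poisson-noisy diffraction frames of disks moving at a fixed speed.

    probes: (K, N, N) probe modes; intensities are summed incoherently over K.
    Returns (frames, true_phases), each of shape (n_frames, N, N).
    """
    rng = np.random.default_rng(seed)
    size = probes.shape[-1]
    radius_px = diameter_nm / 2 / pixel_nm
    n_particles = max(1, int(round(area_fraction * size**2 / (np.pi * radius_px**2))))
    pos = rng.uniform(0, size, (n_particles, 2))
    step_px = speed_nm_s * dt_s / pixel_nm
    photons_per_frame = flux_per_s * dt_s
    frames, phases = [], []
    for _ in range(n_frames):
        phase = disk_phase(size, pos, radius_px, phase_shift)
        exit_waves = probes * np.exp(1j * phase)[None]
        far_field = np.fft.fftshift(np.fft.fft2(exit_waves), axes=(-2, -1))
        intensity = (np.abs(far_field) ** 2).sum(axis=0)
        intensity *= photons_per_frame / intensity.sum()
        frames.append(rng.poisson(intensity))
        phases.append(phase)
        # Random direction each frame, fixed step length, periodic boundaries.
        angles = rng.uniform(0, 2 * np.pi, n_particles)
        pos = (pos + step_px * np.stack([np.sin(angles), np.cos(angles)], axis=1)) % size
    return np.array(frames), np.array(phases)
```

Because the true phase maps are returned alongside the noisy frames, SSIM and FRC against the ground truth can be computed directly, which is the advantage of the simulated experiment.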

Responses