Probabilistic machine learning for battery health diagnostics and prognostics—review and perspectives

Introduction

Lithium-ion (Li-ion) batteries have witnessed growing adoption in consumer electronics, electric vehicles (EVs), and grid energy storage systems, largely owing to their excellent energy density and power output. However, continued usage and adverse operating environment drive irreversible chemical reactions and material morphology changes, leading to gradual but inevitable degradation of battery capacity and power over time. Consequently, accurately estimating the state of health (SOH) of Li-ion batteries and predicting their future degradation is crucial to optimizing every part of the battery life cycle—from research and development, to manufacturing and validation, deployment in the field, and reuse and recycling1.

Some of the earliest research into Li-ion battery health diagnostics and prognostics focusd on mathematical modeling of the capacity fade during cycle aging tests2,3,4. Notably, Bloom et al.4 found that the cell capacity could be aptly captured by a modified Arrhenius relationship, which is generally used to describe the rate of a chemical reaction. This capacity fade model excelled in extrapolating battery performance to new, untested conditions, providing great utility for design and engineering. Not long after, researchers began experimenting with empirical and semi-empirical mathematical models for battery capacity fade modeling to gain better accuracy. In the work of Spotnitz5, researchers developed a semi-empirical model for Li-ion battery capacity fade considering reversible and irreversible capacity loss due to solid-electrolyte interphase (SEI) growth on the graphite anode in the cells. Similar work by Broussely et al.6 proposed an empirical quadratic equation to model the capacity fade of NMC/Gr Li-ion cells during long-term storage. The quadratic model was primarily developed to capture the effect of SEI growth from electrode-electrolyte reactions during storage. Later, Liaw et al.7 demonstrated that empirical models could be used to extrapolate cell resistance increase with thermal aging to update the parameters of a simple equivalent circuit model for capacity estimation. Since these initial seminal works, many more advanced empirical and semi-empirical models have been developed8,9,10.

The great success of empirical and semi-empirical models soon led researchers to investigate alternative methods of modeling battery aging from experimental data. Saha et al.11 were some of the first to use a machine learning (ML) algorithm as part of a framework to model battery capacity fade and predict remaining useful life. The researchers used a relevance vector machine (RVM) (see the section “Relevance vector machine”) to model the exponential growth observed in the cell’s internal resistance with aging. The RVM was used to predict future resistance parameters for an equivalent circuit model that was then used to predict cell capacity. Altogether, the RVM was shown to do an exceptional job at rejecting outliers from the dataset and providing good uncertainty estimates with its predictions. This approach inspired others to further investigate ways of using ML models for battery health diagnostics and prognostics12,13,14.

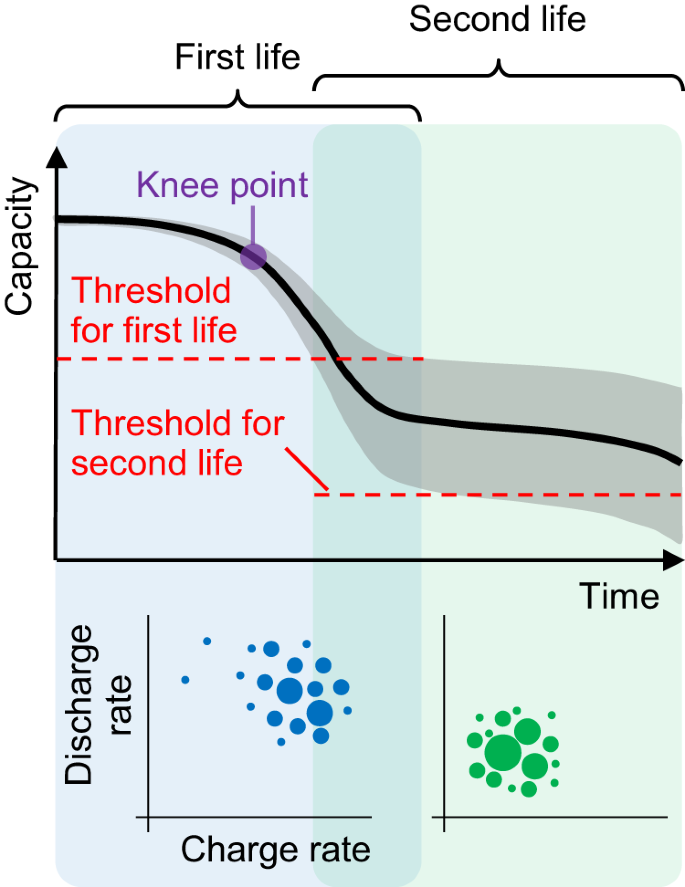

Over the past decade, the use of ML for battery health diagnostics and prognostics has expanded substantially. The rapid growth can be attributed in part to the recent advances in ML and deep learning technology, like open-source ML software and datasets, that enable easier modeling of complex data15. Well-studied applications of ML for battery health diagnostics and prognostics include battery performance simulation and state estimation (primarily state-of-charge (SOC) and power estimation)16,17,18,19, SOH estimation and capacity grading20,21,22, and capacity forecasting and remaining useful life (RUL) prediction23,24,25. Newer, emerging battery prognostic problems include early lifetime prediction26,27, knee point prediction28, capacity trajectory prediction from early aging data29,30, and initial works investigating the applicability of existing diagnostic and prognostic models to battery aging data collected from the field31,32,33.

Despite these significant research efforts on battery health diagnostics and prognostics, most ML-focused works have yet to incorporate uncertainty quantification systematically. Here, “uncertainty” refers to the predictive uncertainty of an ML model, such as a neural network, for a training/test sample point that is ideally associated with how confident the model is when predicting at this point34. The idea is that an ML model does not simply produce an output (e.g., an estimate of a cell’s SOH indicator); it also estimates the uncertainty associated with this prediction to the most accurate extent possible. For example, this predictive uncertainty can be in the form of a standard deviation of a Gaussian-distributed output that describes the spread of the probability distribution around the mean prediction. A spread that is too large indicates that the uncertainty level is high enough for the output not to be trusted. In such cases, a human end user may discard this prediction or provide the ML model with additional information to reduce the predictive uncertainty. Predictive uncertainty can be confused with prediction error. The former comes as an uncertainty estimate by an ML model with uncertainty quantification capability and is thus known; in contrast, the latter is unknown without access to the ground truth. That is why access to predictive uncertainty is important for applications not tolerating large prediction errors well. Ideally, in these applications, we expect predictive uncertainty (known) to be a reliable indicator of prediction error (unknown) on a per-sample basis.

Quantifying predictive uncertainty in ML-based health diagnostics and prognostics becomes especially important given the dynamic and multi-physical nature of Li-ion batteries, where even small variations in manufacturing and testing conditions can significantly change the electrical, thermal, and mechanical performance, resulting in larger cell-to-cell variability35. Furthermore, this inherent cell-to-cell variability becomes even more pronounced as the cells age. Early work by Baumhofer et al.36 investigated the production-caused variation in capacity fade of a group of 48 cells cycled under identical conditions, finding that the lifetimes varied by as much as a few hundred cycles. These results, and many similar studies26,32,35,37, highlight the great need for probabilistic diagnostic and prognostic algorithms that often have to learn from small datasets and extrapolate to the tail-end of the lifetime distribution for a population of cells. Such extrapolations are often associated with large prediction errors, which, although infeasible to quantify without access to the ground truth, can be communicated to the user, to some degree, through high predictive uncertainty and low model confidence. Probabilistic models with properly calibrated uncertainty estimation are paramount for setting warranties on battery-powered devices like consumer electronics and, more recently, EVs, where failing to deliver a promised lifetime due to maintenance/control decision making informed by largely incorrect ML-based diagnostic and prognostic results can cost companies their reputation in addition to the monetary burden associated with honoring warranty repairs.

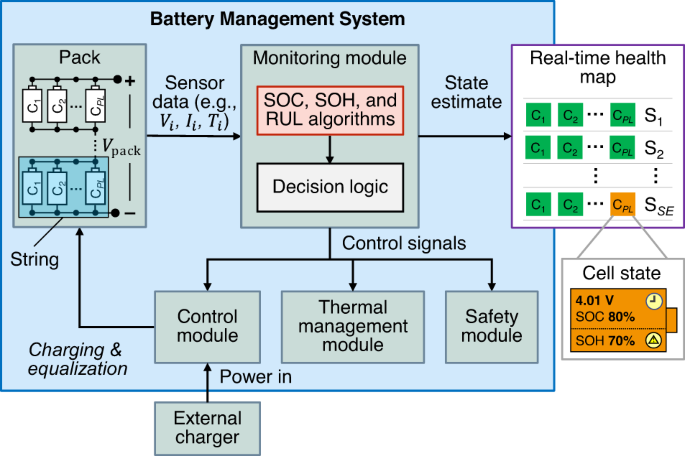

In practice, quantifying diagnostic and prognostic uncertainty is especially important for large battery packs with many modules, where the capacity of a module consisting of serially connected cells will be limited to the capacity of the worst-performing cell. Thus, probabilistic models (like those discussed in the sections “Probabilistic ML techniques and their applications to battery health diagnostics and prognostics”, “Advanced topics in battery health diagnostics and prognostics”, and “Future trends and opportunities”) that can accurately model worst-cell performance through uncertainty estimates made by learning from a limited dataset are crucial to module and pack development. This cell-to-cell variability poses a direct challenge for battery management systems (BMSs) that need to balance cell voltages to maximize the capacity and power availability of a battery pack. In essence, a BMS is an electronic system consisting of hardware, software, and firmware that is responsible for managing the power and health of a rechargeable battery (e.g., a Li-ion battery cell or pack). Figure 1 outlines some key functions of a BMS in an illustrative flowchart. The BMS in the figure is built for a battery pack consisting of SE serially connected strings (or modules), each with PL parallelly connected cells. The BMS takes voltage (V), current (I), and temperature (T) measurements from each cell in the pack at regular intervals (e.g., every 1–5 s) and estimates the SOC and SOH of each cell, both of which cannot be directly measured. As will be detailed in the section “Battery health diagnostic and prognostic problems”, SOH estimation is an important battery diagnostic problem. For situations that require knowing how long each cell/module can be used before replacement, the BMS monitoring module also predicts each cell’s RUL and, in some cases, the cell’s SOH trajectory in future cycles. RUL prediction and SOH trajectory prediction are two well-studied battery prognostic problems of significance, as will be discussed in the section “Battery health diagnostic and prognostic problems”. Most importantly, neglecting uncertainty when predicting cell SOC and SOH may lead the BMS to incorrectly balance cell voltages, ultimately reducing the available capacity and power of the pack. It is worth mentioning that SOH estimation and RUL prediction can be computed in the cloud instead of directly at the BMS device, as the SOH and RUL usually need not be updated in real-time.

This battery pack comprises SE serially connected strings, each of which is composed of PL cells connected in parallel.

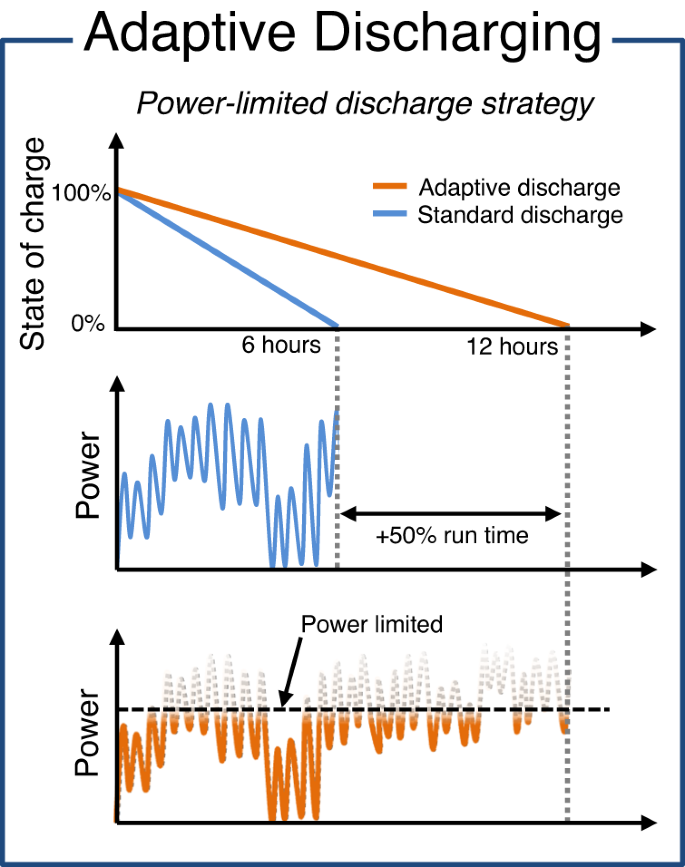

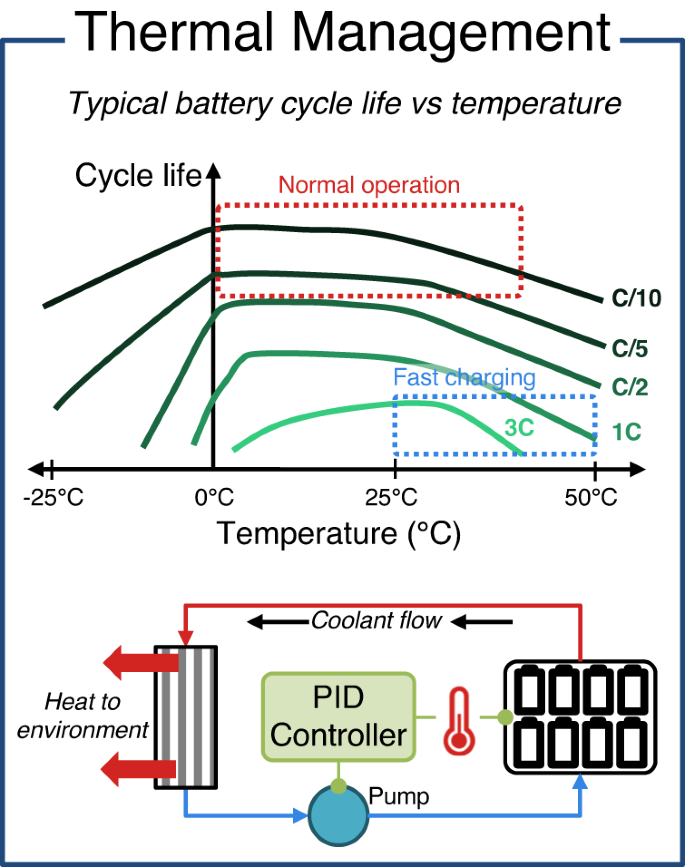

One major advantage of predictive uncertainty quantification for battery maintenance and control is its value in informing BMS actions during operation. For example, if estimates of cell SOC are highly uncertain, the BMS may limit the overall charge power in order to prevent cells from entering over-voltage conditions during charging. However, parameterizing models that can accurately quantify predictive uncertainty is challenging because battery datasets are usually limited in size due to the large expenses required to operate thermal chambers for extended periods. Further, it is difficult to replicate real-world operating conditions in the laboratory, and much care is needed to ensure newly parameterized models can accurately quantify prediction uncertainty on field data (see the section “Diagnostics and prognostics using field data”). The trend of small datasets is likely to continue as cells grow larger in size for automotive and grid storage applications. Large-format and high-capacity (>100 Ah) Li-ion battery cells require even more expensive testing equipment to achieve the high C-rates (>3C for a 100 Ah cell requires >300 A continuous current) necessary for aging cells quickly and studying fast-charging protocols—research that is imperative for lowering the “refueling time” of today’s EVs and accelerating the transition to electrified transportation. With costs for cells and test equipment on the rise, calibrating the predictive accuracy and uncertainty of battery diagnostic and prognostic models prior to deployment becomes critically important. Incorrect control decisions based on erroneous predictions and uncertainty may lead to suboptimal performance, damage to battery cells, and in rare cases, thermal runaway that results in catastrophic product loss and endangers the safety of people nearby.

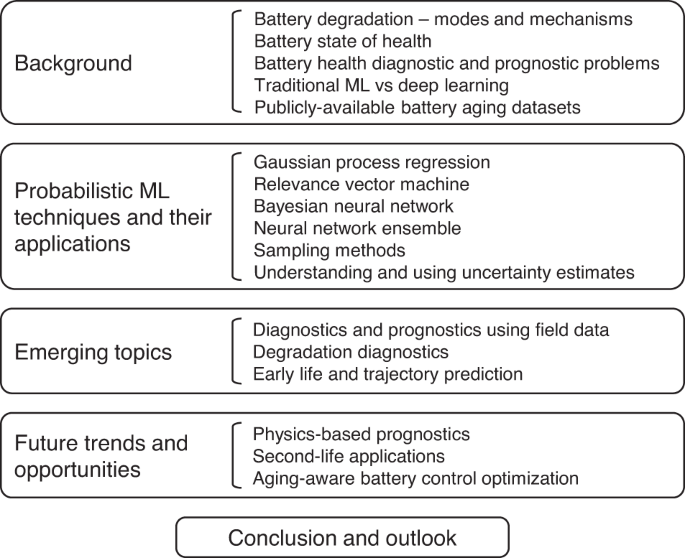

To this end, developing and validating probabilistic battery diagnostic and prognostic models is an essential area of research in the battery community. A handful of reviews on battery health diagnostics/prognostics exist today and can be found here38,39,40,41,42,43,44,45. However, all the reviews to date focus primarily on deterministic ML modeling methods, and do not emphasize existing research that studies probabilistic methods for battery health diagnostics and prognostics. To address this gap, we seek to provide a comprehensive overview of probabilistic modeling and ML for battery health diagnostics and prognostics. After providing an overview of Li-ion battery degradation, we review past and present studies on probabilistic battery health diagnostics and prognostics and discuss their methods, advantages, and limitations in detail. Our review offers unique insights into each of the probabilistic modeling approaches with detailed discussions on the implementation approach and recommendations for future research and development. Figure 2 presents an outline of this review paper. Below are a few key items covered in our review.

-

1.

First, we provide an overview of Li-ion battery degradation, discussing the types, main causes, and resulting effects on cell-level performance and SOH in the sections “Battery degradation—modes and mechanisms” and “Battery state of health”. The classification of battery degradation modes and analysis of their root causes provides relevant background knowledge that motivates the need for battery diagnostic/prognostic models that can estimate cell health and predict future cell degradation. In the section “Battery health diagnostic and prognostic problems”, we provide a high-level overview of six general problems relevant to battery health estimation and life prediction. Additionally, we highlight the pivotal role that publicly available battery aging datasets have played in facilitating existing research in the area (the section “Publicly available battery aging datasets”).

-

2.

Second, we analyze and compare the advantages and limitations of various probabilistic ML techniques and their application to battery health diagnostics and prognostics (the section “RVM applications to battery diagnostics and prognostics SOH estimation”). This section, uniquely focusing on probabilistic techniques for health diagnostics and prognostics, covers both the methodologies of each technique and examples of its applications to SOH estimation, SOH forecasting, and RUL prediction. This particular emphasis on probabilistic ML is a noteworthy feature of this review that sets it apart from existing reviews on battery health diagnostics and prognostics.

-

3.

Third, we delve into three emerging and “newer” topics in battery health diagnostics and prognostics in the section “Advanced topics in battery health diagnostics and prognostics”. Specifically, this section offers unique insights from three researchers actively working on problems related to battery SOH estimation from field data (the section “Diagnostics and prognostics using field data”), degradation diagnostics (the section “Degradation diagnostics”), and early life and trajectory prediction (the section “Early life and trajectory prediction”). This unique coverage of emerging topics further sets our review apart from existing ones.

-

4.

Fourth and finally, we discuss future trends and research opportunities in physics-based prognostics (the section “Physics-based diagnostics and prognostics”), second-life applications for used Li-ion cells (the section “Second-life applications”), and aging-aware battery control optimization (the section “Aging-aware battery control optimization”). This discussion constitutes the final distinctive element of this review, not commonly found in most other reviews.

Outline of sections and subsections.

Our review paper is concluded in the section “Conclusion”, where we also discuss prospects for future research essential to addressing long-standing challenges in battery health diagnostics and prognostics.

It is worth noting that this review focuses primarily on the application of various probabilistic ML and deep learning methods to unique problems (the section “Battery health diagnostic and prognostic problems”) within the field of battery health diagnostics and prognostics. A limitation of this work is that it does not cover specific challenges related to emerging ML topics, such as hybrid modeling, transfer learning, federated learning, and similar ML-focused concepts.

Background

Battery degradation—modes and mechanisms

Battery degradation is a complex and multi-scale process that varies with cell design and is driven by the way a cell is used. Understanding the fundamental mechanisms of Li-ion battery degradation is essential for effectively modeling and designing around it. Typically, researchers and engineers will conduct lab-based aging experiments to study the effects of different operating conditions on cell aging and SOH, which is most often quantified as a cell’s remaining capacity or internal resistance. Periodic reference performance tests (RPTs) are carried out during aging experiments to assess cell capacity and resistance under standard conditions (usually 25 °C) to help isolate the effect of aging on changes in cell capacity and resistance46. Comparing cell SOH measured from RPTs is important because cell capacity and resistance are influenced by temperature, C-rate, voltage limits, among other factors.

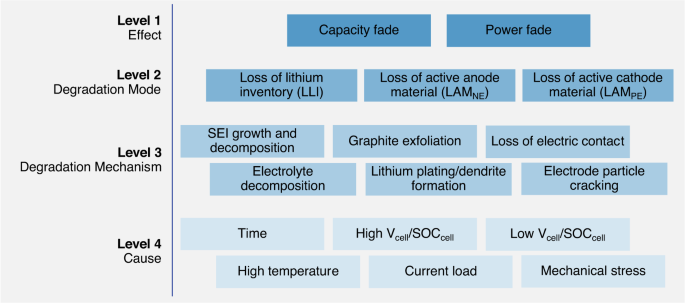

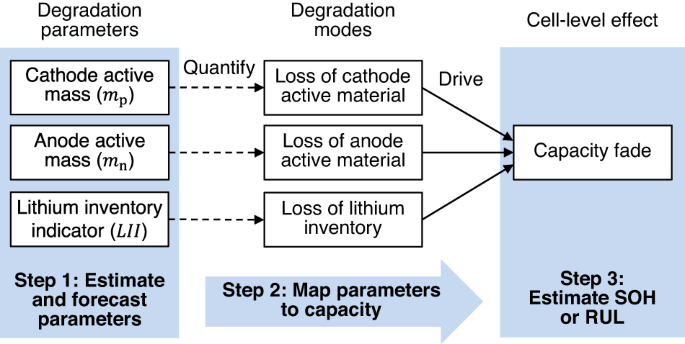

Battery aging tests are used to understand how stressors, like time, temperature, and energy throughput, affect the rate of capacity fade and the progression of internal degradation modes47,48. An overview of battery degradation mechanisms, their corresponding modes, and measurable cell-level effects is shown in Fig. 3. Even without cycling, Li-ion batteries lose capacity over time as internal side reactions occur between the electrolyte and electrode materials. The most prevalent of these side reactions is the formation of the solid electrolyte interphase (SEI) on the graphite anode common in nearly all Li-ion batteries used today49. SEI growth is mainly driven by time, but is also influenced by temperature, cell voltage, and cell load50. Fortunately, the formation of SEI on graphite anodes is entirely expected and well-studied as it plays a large role in determining a battery’s maximum capacity and expected lifetime. It has been widely accepted that capacity fade from the growth of SEI scales with a square-root-of-time (Q(t) = a ⋅ t0.5) relationship9. Further, many researchers have modeled Li-ion battery capacity fade due to SEI formation at various temperatures by scaling the t0.5 term using Arrhenius-like equations that model the influence of temperature on reaction rate51,52. Additionally, SEI formation has been shown to be directly related to the SOC a battery is stored at, where higher voltages generally lead to faster reactions and greater capacity fade. However, next-generation battery designs are pursuing new anode materials and may reduce or even eliminate the use of graphite in the anode altogether, thus introducing new degradation mechanisms that will need to be studied and mitigated.

Figure modified from Thelen et al.81 and based on the original figure in Birkl et al.71 showing the relationship between cell use/environment (Level 4), the corresponding degradation mechanisms (Level 3), their connections to the degradation modes (Level 2), and the resulting capacity/power fade (Level 1).

When a Li-ion battery is cycled, more degradation mechanisms arise in addition to the always-present capacity fade from SEI growth and other side reactions. Often during cycling, the SEI growth rate accelerates because the movement of Li-ions in/out of the electrodes causes repeated swelling and subsequently cracking of the already formed SEI, revealing new sites for SEI to form, and ultimately consuming more lithium in the process51,53,54. Like SEI formation, electrode swelling is expected by battery designers, and is a well-studied degradation mode. Li-ion battery degradation from electrode cracking has been shown to be sensitive to the depth of discharge (DOD) and the C-rate the cell is subjected to—where deeper discharge and faster rates increase the rate of capacity fade26,51,55. Capacity fade driven by electrode cracking during cycling has been diagnosed as a primary driver of cycling-driven capacity fade in Nickel-based battery chemistries like nickel-cobalt-aluminum-oxide (NCA)55 and nickel-manganese-cobalt-oxide (NMC)26,51. In these studies, researchers found that loss of cathode active material (LAMPE) to be a primary contributor to a cell’s capacity fade and was strongly correlated with a cell’s eventual lifetime.

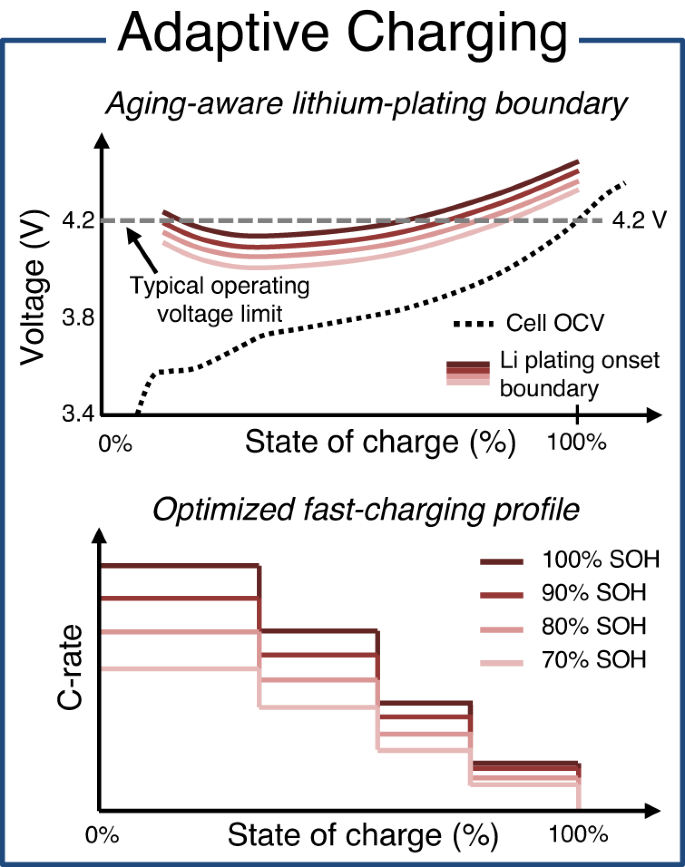

Under more extreme conditions such as cold temperatures (T ≪ 10 °C), high charging C-rates (I ≫ 3C), or the combination of these conditions, intercalation of Li-ions into the anode and cathode are slowed, causing Li-metal to plate onto the surface of the anode instead of intercalate inside it56. Lithium plating poses a great safety risk due to the possibility of a lithium metal dendrite growing large enough to puncture the separator and cause an electrical short circuit. Unlike SEI formation and electrode cracking, lithium plating is not expected to take place inside Li-ion batteries during normal operation. Therefore, much work has been done to detect and model the lithium plating degradation mechanism so that it can be safely mitigated through design and control strategies. However, lithium plating is a dynamic process that is affected by the cell design (energy density), charge rate, temperature, and SOC, making it challenging to detect and quantify. Research by Huang et al.57 demonstrated how differential pressure measurements could be used to detect lithium plating inside cells in real-time during fast charging. Their method holds promise for online monitoring and real-time control of cells operating in the field, but the technology still needs to be demonstrated on the pack level before it might be considered for mass production. Other research by Konz et al.58 demonstrated a method for quickly quantifying the lithium plating limits of a cell using standard battery cyclers by measuring the coulombic inefficiency of the cell after cycling at various C-rates. The method performs sweeps over a series of charge rates and SOC cutoffs to map out the lithium plating limits at the tested temperature. The method provides a cheaper and faster approach to mapping the lithium plating limits and designing an optimal fast-charging protocol using experiments instead of the traditional approach of using an electrochemical model of a cell. Regardless of the strategy employed, modeling and mitigating lithium plating is imperative to ensuring the safe and reliable operation of batteries over their lifetimes. Later we will revisit the topic of lithium plating when discussing emerging strategies for prolonging battery lifetime in the section “Aging-aware battery control optimization”.

With researchers pushing for higher energy densities by introducing new materials into batteries, there will always be new degradation mechanisms that present challenges. Recent efforts to increase the capacity of existing Li-ion battery chemistries, like lithium cobalt oxide (LCO), lithium NCA, lithium NMC, and lithium iron phosphate (LFP), by adding silicon (Si) to the graphite anodes has lead to a field of research devoted to studying silicon-anode technology. However, the high capacity of silicon as an anode material presents its own set of challenges around swelling and cracking. Silicon-anode batteries are notoriously known for swelling as much as 20% their original thickness, posing a unique set of degradation and packaging challenges59. Similarly, high energy density Li-metal batteries pose their own set of unique challenges, mainly related to the reversibility of the metal plating and stripping process on the negative current collector. Likewise, solid-state batteries face challenges related to degradation of the solid electrode/electrolyte interfaces and the materials themselves. On the other hand, low energy density lithium titanium oxide (LTO) anodes are much safer from an abuse perspective, but suffer from extreme gassing which creates bubbles between electrode layers and subsequently delamination which deactivates areas of the electrodes, causing accelerated capacity loss and aging60,61. Less mature batteries, like Li-S and Li-air chemistries face a host of issues with fast capacity fade and poor coulombic efficiency that prevent scaling to production. Readers interested in the challenges surrounding degradation of next-generation silicon anode, Li-metal batteries, solid-state, LTO, LiS, and Li-Air batteries are referred to these reviews on the topics—silicon anode:59,62, Li-metal:63,64, solid-state:65,66, LTO60,61, Li-S67,68, and Li-Air69.

Until these battery chemistries are refined further, applications of battery health diagnostics and prognostics are mainly limited to the laboratory. In light of this, our review primarily focuses on probabilistic ML modeling methods applied to standard Li-ion chemistries. However, it is envisioned that nearly all of the ML-based modeling methods discussed in this paper will be transferable to new battery chemistries to some degree.

Battery state of health

Battery degradation observed during controlled laboratory experiments or normal operation in the field is the result of the interaction and accumulation of various component-level degradation mechanisms like those discussed in the section “Battery degradation—modes and mechanisms”. The most frequently used measures of battery SOH are capacity and resistance because they are directly measurable during aging experiments using periodic RPTs46,70. Resistance and impedance measurements taken at various SOCs are used to quantify the cell’s ability to deliver power and is a crucial battery state for implementing safe management controls. Direct and alternating current (DC and AC) resistance can usually be measured with fast diagnostic pulses (<30 s). However, directly measuring the capacity of cells operating in the field is largely infeasible without significantly interrupting the normal operation of the product to run a long charge/discharge diagnostic test. In practice, the SOH of cells operating in the field must be estimated from the available cell-level electric, thermal, and mechanical data.

More recently, advances in battery modeling and the availability of larger publicly available aging datasets has lead many researchers to further extend the definition of cell SOH to include the three primary degradation modes that drive capacity and power fade: LAMPE, LAMNE, and LLI (see Fig. 3). Together, these three degradation modes capture the combined effect of the individual degradation mechanisms on cell health and provide better insight into the health of the cell’s major components than do capacity and resistance. For example, identifying that the anode is degrading more quickly than the cathode can help with identifying when a knee-point in the cell’s capacity fade trajectory may occur56. Similarly, capacity fade is often complex and path-dependent. For example, the dominant degradation mechanism driving capacity fade during the early life of a battery is typically SEI growth. Later on, other degradation modes, like electrode particle cracking, begin to appear as the cell accumulates more cycles and the electrodes experience repeated swelling and relaxation51. Quantifying cell SOH through the three degradation modes provides more insight into when and to what degree cell degradation is occurring than simply estimating cell capacity.

While quantifying battery SOH through the various component-level degradation modes is useful in the lab, the same methods are not necessarily useful nor viable for cells operating in the field. Relevant metrics of cell SOH for field units like EVs and consumer electronics are primarily focused around quantifying remaining capacity, resistance, impedance, and any risks of thermal runaway, as these impact the user experience the most. Quantifying battery SOH from field data presents a new set of challenges, since the quality and quantity of diagnostic measurements are heavily influenced by user behavior. For example, it is rare that cells will ever complete full DOD cycles in the field due to BMS limits and cell voltage imbalance. Thus, gathering usable data for SOH estimation becomes a real challenge. Later in the section “Diagnostics and prognostics using field data”, we discuss current research focusing on health diagnostics and prognostics from field data.

Battery health diagnostic and prognostic problems

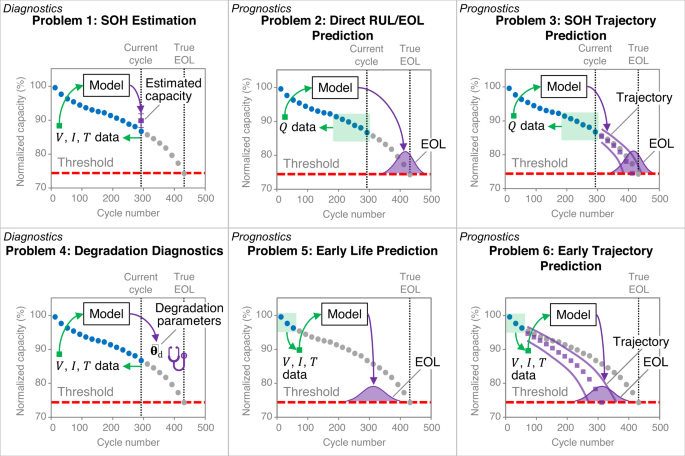

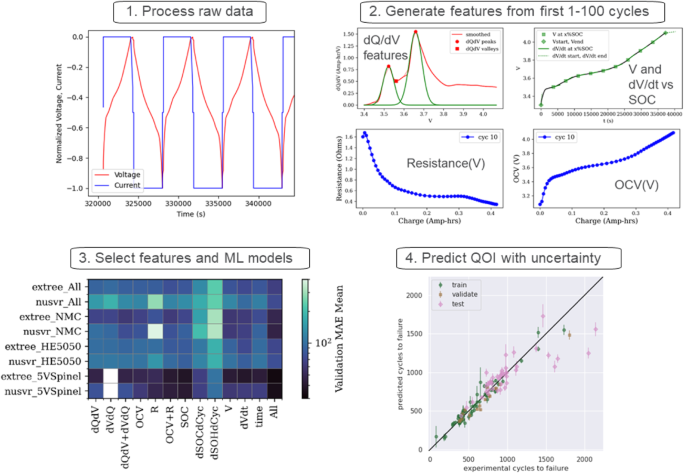

Figure 4 provides an overview of battery diagnostic and prognostic problems where probabilistic ML techniques can be applied to build regressors with uncertainty quantification capability (i.e., the ability of these regressors to quantify the predictive uncertainty in their outputs). We divide the fields of battery health diagnostics and prognostics into six unique problems to highlight the subtle differences in the various research articles published on the topics. Broadly, problems 1 and 4 are classified as diagnostic problems since battery health is estimated at the current cycle. Problems 2, 3, 5, and 6 are classified as prognostic problems since battery health (and/or lifetime) is predicted for future cycles. The six general problems are briefly summarized as follows:

-

Problem 1: SOH estimation Approaches to this first problem aim to estimate the current battery health, often based on voltage, current, and temperature measurements readily available to a BMS. In practice, it comes down to estimating the capacity and resistance, which together determine a battery’s energy and power capabilities. This problem is probably the most extensively studied in the battery diagnostics field, with multiple review papers dedicated to this problem every year.

-

Problem 2: Direct RUL/EOL prediction Approaches targeting this second problem predict the RUL by training an ML model that directly maps a sequence of most recent capacity observations to RUL. These capacity observations can be either actual capacity measurements via coulomb counting on full charge/discharge cycles or capacity estimates by an algorithm. The idea is to feed this sequence of capacity observations to an ML model, which produces an RUL estimate. In other words, this ML model takes a sequence of capacity observations, consisting of the observation at the current cycle and a few recent past cycles, and produces an RUL estimate, for instance, in the form of a probability distribution when a probabilistic ML model is adopted.

-

Problem 3: SOH trajectory prediction Unlike SOH estimation (Problem 1), which centers on inferring current health, this third problem focuses on predicting future capacity and resistance, often by examining the degradation trend over a few most recent cycles and extrapolating this trend. Similar to SOH estimation studies, SOH forecasting studies mostly look at capacity forecasting. A simple and popular approach is to take a sequence of capacity observations at the current and recent past cycle and feed these observations as input into an ML model, which may produce a sequence of probabilistic capacity estimates, for instance, the means and standard deviations of the forecasted capacity observations at the next few cycles that all follow Gaussian distributions. These estimates form a capacity degradation trajectory, based on which an end-of-life (EOL) estimate can be derived as the cycle number when this trajectory down-crosses a predefined capacity threshold (typically 80% of the initial capacity for automotive applications). An RUL estimate can be obtained by subtracting the current cycle number from the EOL estimate. Unlike RUL prediction through SOH forecasting, direct RUL prediction, as discussed in Problem 2, skips the step of capacity forecasting and directly maps a capacity sequence to the RUL.

-

Problem 4: Degradation diagnostics Degradation diagnostics is a subproblem of SOH estimation focused on diagnosing the degradation modes that drive capacity fade and resistance increase71. This subproblem aims to estimate three degradation parameters that measure the degrees of three degradation modes: the loss of active material on the cathode, the loss of active material on the anode, and the loss of lithium inventory. Estimating these three degradation parameters almost always requires access to high-precision voltage and current measurements during a full charge/discharge cycle, but workarounds do exist (see the section “Degradation diagnostics”).

-

Problem 5: Early life prediction This is an emerging prognostic problem where ML models map data from an early life stage to the lifetime (or the EOL cycle). A key step to solving this problem is defining early-life features predictive of the lifetime. A concise review of recent studies attempting to solve this problem will be provided in the section “Early life and trajectory prediction”.

-

Problem 6: Early trajectory prediction This sixth problem is similar to yet more challenging than early life prediction. The added difficulty comes from the need to predict the entire capacity trajectory rather than a single EOL cycle, as done in early life prediction. In addition to early-life features, capacity fade models are also required to produce a sequence of capacity estimates for any range of cycle numbers.

Here, V, I, T, and Q denote voltage, current, temperature, and capacity, respectively, and ({hat{{{{boldsymbol{theta }}}}}}_{{{{rm{d}}}}}) denotes an estimated vector of three degradation parameters defined to quantify three degradation modes.

Traditional ML vs. deep learning

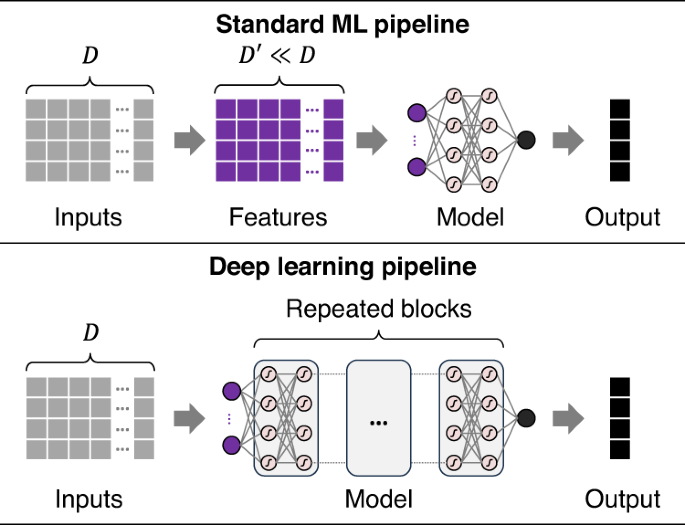

Over the past decade, hundreds, if not thousands, of data-driven approaches have been created for battery health diagnostics and prognostics. These existing approaches can be broadly categorized as traditional ML and deep learning. Figure 5 illustrates the key difference between these two categories. Traditional ML requires manually defining and extracting hand-crafted features. ML models are then built to approximate the often highly nonlinear relationship between these input features and the output (or target). Examples of traditional ML algorithms for building these models include regularized linear regressions (e.g., ridge regression, lasso, and elastic net), support vector machines, RVMs, Gaussian process regression (GPR) or kriging, random forests, Bayesian linear regression, gradient boosting machines (e.g., XGBoost and light gradient-boosting machines), k-nearest neighbors, and shallow neural networks.

Here, a typical traditional ML pipeline requires engineers to manually identify (D{prime}) features from a D-dimensional raw input, also known as feature extraction. In contrast, a typical deep learning pipeline does not need manual feature extraction and can automatically learn features with predictive power from data.

An input to a traditional ML model can be formulated from voltage and current measurements during a partial charge cycle. This input can be (1) a vector of features extracted from voltage vs. time (V vs. t) and current vs. time (I vs. t) curves13,14,72, (2) a vector of features extracted from an incremental capacity vs. voltage (dQ/dV vs. V) curve73,74, (3) a vector of features extracted from a differential voltage vs. capacity (dV/dQ vs. Q) curve75, or (4) any combination of these three vectors76. The output can be capacity or resistance for SOH estimation or EOL/RUL for health prognostics. The performance of ML models highly depends on the collective predictive power of these manually extracted features. Additionally, the same set of features that works well on a specific battery chemistry and application often does not transfer to a different chemistry or application. Thus, when dealing with a new chemistry or application, one has to repeat the tedious and time-consuming process of manual feature extraction.

Unlike traditional ML, deep learning can automatically learn high-level abstract features of predictive power from large volumes of data. An obvious benefit is that manual feature extraction is no longer needed and is replaced by “feature learning”. However, deep learning approaches have two well-known limitations. First, deep neural network models are more prone to overfitting data than shallower neural network models, especially when a training dataset is small (e.g., a few tens to hundreds of training samples). Given the time- and resource-demanding aging tests, most battery health diagnostics and prognostics applications reside in the small data regime77. Aging data available for model training are even more limited in an early research and development stage where lifetime prognostics may be applied to accelerate materials design78 or charging protocol optimization79. As a result, a deep learning model built for battery diagnostics/prognostics may produce high accuracy on the dataset this model was trained on but may generalize poorly to “unseen” test samples that could fall outside of the training data distribution. These out-of-training-distribution test samples are often called out-of-distribution (OOD) samples. A solution to the conflict between what is needed (i.e., big data) and what is available (i.e., small data) is quantifying the predictive uncertainty through probabilistic deep learning34. The uncertainty estimate could serve as a proxy for model confidence, i.e., how confident this model is when making a prediction. The ability to convey model confidence is crucial for safety-critical battery applications, where SOH/lifetime predictions with large errors and no warnings are simply unacceptable. Second, deep learning models are inherently “black-box” models whose predictions do not come naturally with an interpretation. A direct consequence is that it is almost impossible to understand why a deep learning model predicts a certain outcome and whether this prediction is reasonable and complies with physics or domain knowledge. Although efforts have been made to achieve varying degrees of interpretability mostly through post-processing80, deep learning models are still harder to interpret than simpler traditional ML models, some of which are inherently interpretable77.

Traditional ML models are likely to perform better on small training sets (N < 1000) than deep learning models. It is not surprising to see battery aging datasets with less than 100 cells tested to their EOL48. In such cases, a training set may consist of only N < 100 input-output pairs. Simple ML methods such as the elastic net, a regularized linear regression method, random forests (RFs), and Bayesian linear regression are probably the best choices27,77,81. Deep learning approaches, such as deep neural networks, are well-suited for applications where (1) it is reasonably feasible to run large-scale aging test campaigns to generate large training sets (N ≫ 1000) and (2) model interpretability is not a primary concern.

Publicly available battery aging datasets

Publicly available battery aging datasets have enabled a majority of the research in the battery diagnostics and prognostics field. The University of Maryland’s Center for Advanced Life Cycle Engineering (CALCE)82,83,84 and the National Aeronautics and Space Administration’s (NASA)85,86 were among the first organizations to publish publicly available battery aging datasets with greater than 20 cells. The initial work by the team at NASA focused on using an unscented Kalman filter to estimate internal battery states, namely max discharge capacity and internal resistance, and electrochemical model parameters over the course of the cells’ lifetime86. Notably, the battery cells in the NASA dataset were subjected to randomized discharge current loads, making the dataset more similar to real-world battery operation and making it more challenging to estimate the cells’ SOH and predict their RUL. The researchers at CALCE first demonstrated RUL prediction on their dataset by using an empirical battery degradation model where the parameters are initialized using Dempster-Shafer theory and updated online using recursive Bayesian filtering82. The model was shown to provide accurate non-parametric predictions of battery RUL by evaluating the many Bayesian-filtered model parameters.

While the battery aging datasets from NASA and CALCE were undoubtedly influential for their time, the trend as of late has been to test more batteries under more operating conditions so that modern machine and deep learning models can be applied48. A more recent battery aging dataset from Stanford, MIT, and Toyota Research Institute was used to study the problem of early lifetime prediction (see the section “Early life and trajectory prediction”)27. The researchers then used the early lifetime prediction model in a close-loop optimization algorithm to speed up the process of experimentally searching for a fast charging protocol that maximized a cell’s cycle life79. Similar work by a team of researchers at Argonne National Laboratory used a diverse dataset composed of 300 pouch cells with six unique battery cathode chemistries to study the role of battery chemistry and feature selection in early life prediction87. Other large datasets include the one from Sandia National Laboratory that was used to study commercial 18650-size NMC-, NCA-, and LFP-Gr cells under different operating conditions88 and the dataset from Oxford89 used to study the path-dependency of battery degradation.

A relatively new dataset from the collaborators at Stanford, MIT, the Toyota Research Institute, and the SLAC National Accelerator Laboratory consists of more than 360 21,700-size automotive cells taken from a newly purchased 2019 Tesla Model 3 to study aging under a wide range of cycling conditions55. Another large dataset made available this year is the dataset from a research collaboration between Iowa State University (ISU) and Iowa Lakes Community College that contains 251 Li-ion cells cycled under 63 unique cycling conditions26. Both large aging datasets were curated specifically to study ML-based approaches to battery health prognostics and the role of feature generation and engineering in battery lifetime prediction.

Recently, there has been a push to demonstrate battery diagnostic and prognostic algorithms that work on modules and packs operating in the field. One approach to do this is to replicate real-world operating conditions in cell-level laboratory aging experiments. Pozzato et al.90 cycled NMC/Gr+Si 21700 format cylindrical cells using a typical EV discharge profile while periodically characterizing cell health with RPTs. Similarly, Moy et al.91 cycled 31 cells using synthetically generated autonomous EV discharge profiles based on real-world driving telemetry data. While the datasets are still useful for studying battery degradation under more realistic conditions, they are still synthetic in nature and conducted on cells, making it difficult to understand how the study results translate to real-world packs and modules.

Research into module and pack based battery aging is becoming more prevalent. She et al.92 examined telemetry data from electric city buses operated in Beijing, China, finding that incremental capacity features extracted from the voltage readings during constant current charging were predictive of battery health, but changed drastically with the changing seasons (summer, fall, winter, spring) in the city. Similarly, Pozzato et al.31 looked at real-world EV data from an Audi E-tron. The team found that DC resistance measured during braking and acceleration along with charging impedance were good predictors of battery SOH. But unfortunately, neither of the aforementioned datasets were made publicly available, and to the best of our knowledge, no other publicly available battery module/pack aging datasets exist.

Publicly available battery aging datasets will continue to play a large role in furthering research in the area of battery diagnostics and prognostics by enabling those without access to battery testers to study battery aging and diagnostic and prognostic modeling. Websites like Battery Archive are important for sharing and disseminating battery aging data to a wider audience. Additionally, industry-academia collaboration will be key for gathering and disseminating real-world battery module/pack aging data. Access to real-world battery pack aging data will be crucial for studying and developing diagnostic and prognostic algorithms that can work beyond the lab. The next big leap in the battery health diagnostics and prognostics research community will be to understand how models built using lab data perform in the field.

Probabilistic ML techniques and their applications to battery health diagnostics and prognostics

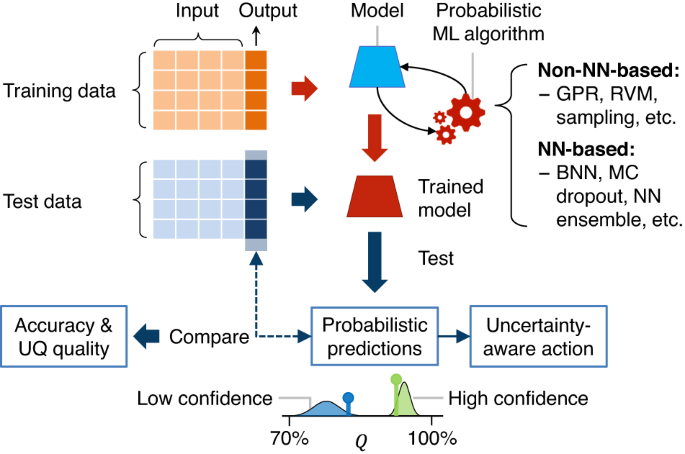

This section introduces a handful of probabilistic ML/deep learning methods for building reliable probabilistic ML pipelines for battery state estimation (see an illustrative flowchart in Fig. 6 in the context of capacity estimation). Following the introduction of each probabilistic ML method, we review the state-of-the-art in applying this method to solve the first three problems (Problems 1–3) on battery diagnostics/prognostics shown in Fig. 4, i.e., SOH estimation, capacity forecasting, and RUL prediction. The other three problems (Problems 4–6) are emerging and will be discussed in “RVM applications to battery diagnostics and prognostics SOH estimation”.

Among the probabilistic ML algorithms listed here, “GPR” stands for Gaussian process regression (the section “Gaussian process regression”); “RVM” stands for relevance vector machine (the section “Relevance vector machine”); “sampling stands for sampling methods (the section “Sampling methods”); “BNN” stands for Bayesian neural network (the section “Bayesian neural network”); “MC dropout” stands for Monte Carlo dropout (the section “Bayesian neural network”); and “NN ensemble” stands for neural network ensemble (the section “Neural network ensemble”).

As one proceeds through this section, one will notice that it goes beyond merely addressing the theoretical and battery application aspects of each probabilistic ML technique covered. It also includes specific algorithm examples (e.g., Figs. 7–12) and offers a tutorial-style description of the algorithmic procedures. Our aim is to present easily digestible materials, particularly for newcomers in this field, such as fresh Ph.D. students eager to grasp the fundamentals of probabilistic battery diagnostics and prognostics. We also note that there exists a broader spectrum of probabilistic ML methods beyond those discussed in this paper (e.g., see Nemani et al.34); we aim in this paper to highlight a select few that have been most prominently used in, and generally applicable, to the type of problems encountered in battery diagnostics and prognostics.

Here, the 95% prediction interval (denoted as “PI” in the legend) represented by the shaded area is computed as μ* ± 2σ*.

Before we introduce the probabilistic ML methods, it is meaningful to walk through some key steps when applying probabilistic ML to battery state estimation. As a representative example, Fig. 6 provides a graphical overview of these key steps for capacity estimation. Similar steps can be expected when solving other problems on battery diagnostics and prognostics.

-

This pipeline starts with defining an ML model’s input and output. For example, when dealing with capacity estimation by traditional ML models, the input could consist of predictive features extracted from the voltage (V), current (I), and temperature (T) measurements during a partial charge cycle, and the output would be the full capacity (Q) of the cell at that cycle.

-

The next step is defining a training and test dataset. An important consideration is that the test dataset should include a decent number (e.g., ≥30%) of OOD samples to evaluate the generalization performance of a trained ML model. Furthermore, one should avoid randomly assigning samples from the same cell to both training and test datasets. In most cases, all samples from one cell should be exclusively assigned to a training or test dataset. Again, this treatment ensures that the test dataset serves the purpose of evaluating how well a trained ML model generalizes to samples outside of the dataset the model has been trained on.

-

The third step is selecting a probabilistic ML algorithm. This section covers three non-neural-network-based algorithms, GPR (the section “Gaussian process regression”), RVM (the section “Relevance vector machine”), and sampling methods (the section “Sampling methods”), and two neural-network-based algorithms, BNN (the section “Bayesian neural network”) and neural network ensemble (the section “Neural network ensemble”). Selecting a probabilistic ML algorithm requires assessing several criteria to ensure the algorithm’s suitability for a specific use case. Several key criteria are listed as follows (see Nemani et al.34 for further details): (1) prediction accuracy (e.g., evaluated by comparing mean predictions with ground truth on a validation dataset spit out of a training dataset), (2) quality of uncertainty quantification (i.e., the algorithm’s ability to produce accurate uncertainty estimates), (3) computational efficiency (an important factor in applications where real-time or near-real-time diagnostics/prognostics may be required), (4) scalability (i.e., the algorithm’s ability to train models based on large volumes of datasets, i.e., in the big data regime), and (5) robustness (i.e., the algorithm’s ability to maintain performance in the presence of outliers, high noise, and adversarial variations in the input data). These criteria may become conflicting objectives that need to be weighed based on the needs and wants of a specific diagnostic/prognostic use case. It is often desirable to experiment with a suite of algorithms and choose one (standalone) or multiple (hybrid) algorithms for a specific use case.

-

After selecting the algorithm, one feeds the training data, some observed input-output pairs, into the algorithm. This algorithm then generates a mathematical model that infers something about the underlying process that generated the training data. Using the trained model, one can make probabilistic capacity estimations for cells or their cycle numbers the model has not seen before. Each capacity estimate can be expressed as a probability distribution of a certain type (e.g., Gaussian, log-normal, or exponential) or an empirical probability distribution.

-

If one has access to the ground truth for the test data, one can compare the capacity estimates with the observations to derive prediction accuracy metrics, such as the root-mean-square error (RMSE) and mean absolute percentage error, and uncertainty quantification quality metrics, such as the expected calibration error, Area Under the Sparsification Error curve (AUSE), and negative log-likelihood (NLL).

Gaussian process regression

Gaussian process regression methodology

Gaussian process regression (GPR), also known as kriging, is a principled, probabilistic method for learning an unknown function f from a given set of training data comprising N input vectors, ({left{{{{{bf{x}}}}}_{i}right}}_{i = 1,ldots ,N}), and N targets, ({left{{y}_{i}right}}_{i = 1,ldots ,N}). Here, ({{{{bf{x}}}}}_{i}in {{mathbb{R}}}^{D}) is the D-dimensional input feature vector of the i-th training sample, and ({y}_{i}in {mathbb{R}}) is the corresponding one-dimensional output, i.e., a noise-free or noisy observation of f at xi. The regression model learned by GPR is non-parametric because this model does not have a predefined functional form. It is common to assume the so-called Gaussian observation model where each observation yi is an addition of the true function value f(xi) and a zero-mean Gaussian noise εn:

where ({varepsilon }_{i} sim {{{mathcal{N}}}}(0,{sigma }_{varepsilon }^{2})). GPR starts by placing a Gaussian process prior on the unknown function f, i.e., (f({{{bf{x}}}}) sim {{{mathcal{GP}}}}(m({{{bf{x}}}}),k({{{bf{x}}}},{{{{bf{x}}}}}^{{prime} }))), where m(⋅) is the mean function and k(⋅,⋅) is the kernel, also known as the covariance function evaluated at x and ({{{{bf{x}}}}}^{{prime} })93. For example, if we assemble the N function values into a vector, ({{{bf{f}}}}={[f({{{{bf{x}}}}}_{1}),ldots ,f({{{{bf{x}}}}}_{N})]}^{{{{rm{T}}}}}), this vector follows a multivariate (N-dimensional) Gaussian distribution:

where ({{{bf{X}}}}={[{{{{bf{x}}}}}_{1},ldots ,{{{{bf{x}}}}}_{N}]}^{{{{rm{T}}}}}in {{mathbb{R}}}^{Ntimes D}) is a matrix assembly of the N training input points, m(X) is a vector of the mean values at these input points, and ({{{{bf{K}}}}}_{{{{bf{X}}}},{{{bf{X}}}}}in {{mathbb{R}}}^{Ntimes N}) is a covariance matrix that takes the following form:

Nonlinearity in the model arises from the kernel k(⋅,⋅), which models the covariance between function values at two different input vectors. In practice, we can choose a kernel from many candidates. For example, the probably most popular kernel is the squared exponential kernel, also known as the radial basis function (RBF) and the Gaussian kernel. The squared exponential kernel can be expressed as

where σf is the signal amplitude, ∥⋅∥ is the L2 norm or the Euclidean norm, the square of which (({sigma }_{f}^{2})) defines the signal variance, and l is the length scale. The signal variance (({sigma }_{f}^{2})) sets the upper limit of the variance and covariance for the Gaussian process prior (see the covariance matrix in Eq. (2); the length scale l determines how smooth the approximate function appears (the smaller the length scale, the more rapidly the function changes). These two kernel parameters are two hyperparameters of the GPR model, which, together with the noise standard deviation σε, need to be optimized during GPR model training. The squared exponential kernel has been widely used as it is simple and captures a function’s stationary and isotropic (dimension-dependent) behavior. Another popular choice is the RBF kernel with automatic relevance determination (ARD)94, which assigns N different length-scale parameters to the N dimensions rather than using the same parameter as is done by the standard RBF kernel. The resulting RBF-ARD kernel can capture dimension-dependent patterns in the covariance structure.

For notational convenience, we denote the collection of training input-output pairs as a training set, ({{{mathcal{D}}}}=left{left({{{{bf{x}}}}}_{1},{y}_{1}right),ldots ,left({{{{bf{x}}}}}_{N},{y}_{N}right)right}), and write the N noisy observations as a vector, ({{{bf{y}}}}={[{y}_{1},ldots ,{y}_{N}]}^{{{{rm{T}}}}}in {{mathbb{R}}}^{N}). For a new, unseen input point x*, the predictive distribution of the corresponding observation y* can be derived based on the conditional distribution of a multivariate Gaussian as the following:

and

where (k({{{bf{X}}}},{{{{bf{x}}}}}_{* })={[k({{{{bf{x}}}}}_{1},{{{{bf{x}}}}}_{* }),ldots ,k({{{{bf{x}}}}}_{N},{{{{bf{x}}}}}_{* })]}^{{{{rm{T}}}}}), denoting a vector of N cross covariances between X and x*.

GPR is a probabilistic ML method most well-known for its distance-aware uncertainty quantification capability. This capability is illustrated in Fig. 7, where a simple sine function is adopted to generate synthetic data after adding zero-mean Gaussian noise. Two observations can be made from this figure. First, high epistemic uncertainty due to a lack of data is associated with test points far from the eight training points. The GPR model seems to produce predictive uncertainty estimates that properly capture the high epistemic uncertainty at these OOD test points. Second, as a test point deviates from the training data distribution (e.g., when x starts to become larger than 4), the predictive uncertainty first increases due to the distance-aware property of GPR and then saturates to a maximum constant (i.e., ({sigma }_{* }^{2},approx, {sigma }_{f}^{2}+{sigma }_{varepsilon }^{2})). The above briefly overviews the math behind GPR and its uncertainty quantification capability. Our recent tutorial on uncertainty quantification of ML models34 provides a more detailed explanation of GPR.

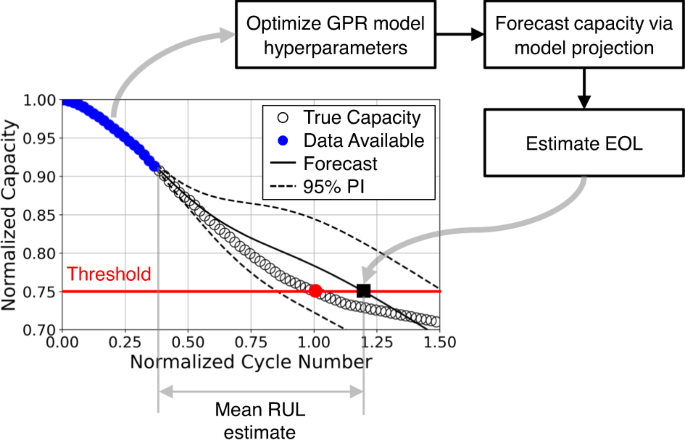

Figure 8 shows how GPR operates to forecast the capacity of future cycles probabilistically at a specific charge/discharge cycle. We first train a GPR model based on the available capacity data (blue points). This GPR model uses an empirical capacity fade model as the prior mean function to capture the known fade trend. The model training optimizes the GPR model’s hyperparameters by maximizing the likelihood of observing the capacity data. Intuitively, we fit a GPR regression model to available capacity data and use this model to make predictions for future cycles without capacity data. Because GPR is a probabilistic ML technique, the predictions are in the form of a mean curve, the solid line, and a 95% prediction interval, the dashed lines. So, in the next step, we forecast capacity beyond the current cycle using the trained GPR model, making predictions outside our data distribution. We then estimate the mean EOL when the mean prediction curve down-crosses a predefined capacity threshold or EOL limit. The black square is the mean prediction. We can imagine having a prediction interval around this mean, representing the uncertainty of our EOL prediction. We are often interested in knowing the RUL, i.e., the number of remaining cycles till the EOL limit. Our RUL estimate can be obtained by simply subtracting the current cycle number from the predicted EOL. Since this device was cycled to its EOL, we have the entire capacity trajectory and true EOL. We can compare the prediction and truth to know how well our GPR model does.

Here, the GPR model is fit to available data and extrpolated to forecast capacity and EOL.

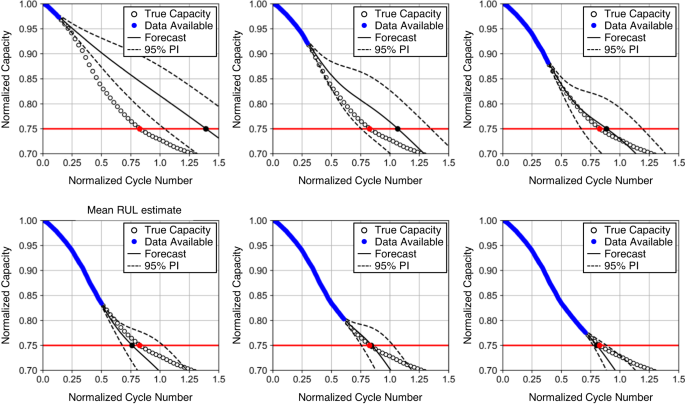

Now imagine we repeat the prediction steps in Fig. 8 at every cycle, as the battery is used in the field. Figure 9 shows how the prediction evolves from an early-life cycle to a late-life cycle. This figure panel shows six snapshots of probabilistic capacity forecasting by GPR at six different cycles. As we move along the cycle axis, we have more and more capacity data to train a GPR model and our prediction horizon till the EOL becomes shorter and shorter. As a result, the EOL and RUL predictions become more and more accurate. These predictions converge to the ground truth at around halfway through the lifetime. Also, We can see the prediction interval for the EOL, in general, gets narrower with time, indicating reduced predictive uncertainty, which is also what we expect to see.

Here, the GPR model is fit to available date and extrapolated to EOL. The various lengths of data available change the projected trajectory.

GPR applications to battery diagnostics and prognostics

SOH estimation

Two notable efforts applying GPR to SOH estimation were made almost simultaneously72,95. Both studies extracted features from the raw voltage vs. time (V vs. t) curve acquired from a charge cycle. Richardson et al.72 used the time differences between several equispaced voltage values and their minimum as the input features to a GPR model. These time differences were computed based on a segment of a full charge curve (after smoothing) within its constant-current (CC) portion. Yang et al.95 took a different feature engineering path by extracting time and slop features from a full charge curve consisting of both the CC and constant-voltage portions. It is noted that earlier studies on SOH estimation investigated similar features extracted from a charge curve starting at a partially discharged state13,96. Both studies72,95 evaluated their algorithms on a battery aging dataset from the NASA Ames Prognostics Center of Excellence Data Set Repository (e.g., the Randomized Battery Usage dataset86 used in ref. 72.

These two early applications of GPR to SOH estimation reported two unique properties of GPR: nonparametric regression, making the regression model self-adaptable to data complexity, and uncertainty estimation under a Bayesian framework and with distance awareness, enabling principled quantification of predictive uncertainty and reliable detection of OOD samples. Additionally, GPR is known for its minimal overfitting risk due to using a Bayesian probabilistic framework. These desirable properties may have driven many later studies that investigated the applicability of GPR to SOH estimation when only partial charge curves are available. Two examples of such investigations examined features extracted from the incremental capacity vs. voltage curve during partial charge73 and features extracted from the capacity vs. voltage curve during partial charge97. As discussed next, GPR can extrapolate reasonably well when a prior mean function is properly defined. However, GPR only operates well on small datasets and has limited scalability to bigger datasets34.

SOH forecasting and RUL prediction

The first application of GPR in the battery diagnostics and prognostics literature was SOH forecasting, not SOH estimation. It was reported in a comparative study on resistance and capacity forecasting led by a group of researchers at NASA’s Ames Research Center98. This study compared two regression techniques, polynomial regression and GPR, and one state estimation technique, particle filtering, in forecasting resistance and capacity. This comparative study was an extended version of the probably first-ever publication on battery diagnostics/prognostics, led by the same group of researchers11, which used a combination of RVM and particle filtering for capacity forecasting. SOH forecasting using GPR has an ~10-year longer history than SOH estimation using GPR. After the first application of GPR to SOH forecasting in the late 2000s, two notable studies attempted to improve the extrapolation performance of GPR, essential to long-term SOH forecasting, by using explicit prior mean functions99,100. Note that the default option for the prior mean function is either zero or a non-zero constant93. An empirical capacity fade model could be used as an explicit mean function, allowing the GPR model to capture the trend of degradation encoded in the capacity fade model100,101.

All the above studies on SOH forecasting assume that use conditions (e.g., charge and discharge C-rates and temperature) are time-invariant. This assumption may not hold in many real-world applications. A more realistic scenario is that these use conditions vary randomly over time but approximately follow a duty cycle pattern. As a follow-up to their earlier study on SOH forecasting102, Richardson et al. defined a capacity transition model to predict the capacity change during each usage period following a load pattern. A GPR model was built to approximate the relationship between features extracted from a load pattern (input) and the capacity change within this usage period (output). The outcome was the ability to forecast capacity probabilistically under time-varying use conditions. Two more recent studies also examined capacity forecasting under time-varying use conditions, specifically in cases where future charge and discharge C-rates vary significantly with cycle103,104. Similar to the study by Richardson et al.102, these two more recent studies also consider future use conditions when designing the input to an ML model. Specifically, they used charge and discharge currents in future cycles as part of the ML model input. The difference is that these two studies additionally incorporated the current or recent cell state into the model input. The cell state was characterized by either (1) a combination of features from electrochemical impedance spectroscopy (EIS) measurements in the current cycle and those from voltage and current measurements in the current and some past cycles103 or (2) only features from historical voltage and current measurements104. GPR was not used as the ML algorithm in either study. Instead, an ensemble of XGBoost regressors was used by Jones et al.103 to quantify forecasting uncertainty, while uncertainty quantification was not considered by Lu et al.104. Overall, it is interesting to see both studies focused on features with physically meaningful connections to future degradation when designing the ML model input. In fact, formulating a meaningful forecasting problem and designing highly predictive input features should be the centerpiece of almost any data-driven SOH forecasting effort.

Finally, it is worth noting that multiple probabilistic ML models can be combined to form a hybrid model for SOH forecasting or RUL prediction. In what follows, we briefly discuss three examples of hybrid modeling involving GPR.

-

The first example is the delta learning approach employed by Thelen et al. in their study on battery RUL prediction101. The basic idea of this approach is correcting initial RUL predictions by a GPR capacity forecasting model with a data-driven ML model. The prior mean function of the GPR model was explicitly designed to be an empirical capacity fade model. The approach was demonstrated on three open-source datasets, a simulated dataset, and one proprietary dataset. Initial RUL predictions by GPR capacity forecasting models were considerably under-confident as compared to the GPR delta learning approach with a GPR capacity forecasting model (predictor) and a GPR RUL error correction model (corrector), which was well calibrated on the original dataset. In contrast, the random forest delta learning approach using a probabilistic random forest model as the corrector was over-confident on the original dataset, but exhibited better calibration than the GPR delta learning approach on the simulated OOD dataset.

-

Another example is the use of a co-kriging model to forecast capacity degradation by combining two data sources: (1) a high-fidelity source consisting of the capacity measurements from the test cell (whose capacity trajectory beyond the current cycle needs to be predicted) up to the current cycle and (2) a low-fidelity source comprising capacity measurements from other cells of the same or a similar design105. Similar to the delta learning approach studied by Thelen et al.101, this second study attempted to build a corrective GPR model to compensate for the deviation of an initial GPR model built based on low-fidelity data to depict an “average” degradation trajectory.

-

The third example is an effort to modify vanilla GPR models by incorporating electrochemical and empirical knowledge of capacity degradation (i.e., the dependencies of capacity degradation on two cycling condition variables, named temperature and depth of discharge)106. These two dependencies were captured through the Arrhenius law (temperature) and a polynomial equation (depth of discharge), respectively, and encoded as a compositional covariance function (or kernel) within GPR. Unlike the first two examples, which are purely data-driven, this third example attempted to integrate some physics of degradation into the GPR formulation, which can be treated as a physics-informed probabilistic ML approach.

Relevance vector machine

Relevance vector machine methodology

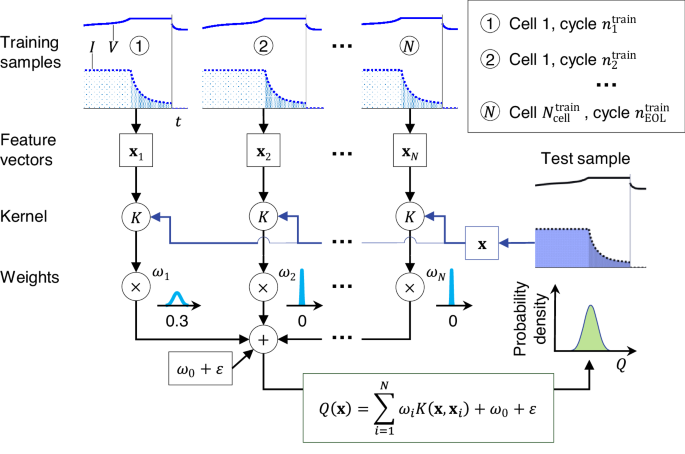

Suppose we have access to a set of training samples, each sample consisting of an input–output pair, (xi, yi), i = 1, ⋯ , N, where ({{{{bf{x}}}}}_{i}in {{mathbb{R}}}^{D}) is the D-dimensional input features of the i-th training sample, ({y}_{i}in {mathbb{R}}) is the corresponding output (also known as the target or the observation of the state of interest), and N is the number of samples. We are interested in learning a one-to-one mapping from the input (feature) space to the output (state) space. Similar to GPR, RVM also assumes that the observations are samples from a Gaussian observation model. Unlike GPR, which does not assume any functional form of this mapping, RVM approximates this mapping as a parametric, linear kernel regression function107. This regression function takes the following form:

where x is an input feature vector whose target may be unknown and needs to be inferred, K(x, xi) is a kernel function comparing the test input x with each training input xi, ωi is the kernel weight measuring the importance (or relevance) of the ith training sample, ω0 is a bias term, and ε is a zero-mean Gaussian noise, i.e., (varepsilon sim {{{mathcal{N}}}}(0,{sigma }_{varepsilon }^{2})). The bias term and N kernel weights form a (N + 1)-element weight vector, written as ({{{boldsymbol{omega }}}}={[{omega }_{0},{omega }_{1},ldots ,{omega }_{N}]}^{{{{rm{T}}}}}). If we define a design vector consisting of a constant of one and the N kernel functions, i.e., ({{{boldsymbol{phi }}}}={[K({{{bf{x}}}},{{{{bf{x}}}}}_{1}),ldots ,K({{{bf{x}}}},{{{{bf{x}}}}}_{N})]}^{{{{rm{T}}}}}), we can rewrite Eq. (7) in a convenient vector form, y(x) = ωTϕ + ε. The original RVM formulation follows a hierarchical Bayesian procedure by assuming the (N + 1) weights follow a zero-mean Gaussian prior, whose inverted variances, denoted as ({left{{alpha }_{i}right}}_{i ,=, 0,cdots ,N}), and the inverted noise variance, ({sigma }_{varepsilon }^{-2}), all follow Gamma distributions (hyperpriors).

Training the model in Eq. (7) using sparse Bayesian learning estimates the posterior of the weight vector ω and noise variance ({sigma }_{varepsilon }^{2}) via iterative optimization107. In practice, the posterior for most weights becomes highly peaked at zero, effectively “pruning” the corresponding kernel functions from the trained model. The remaining training samples with non-zero weights are known as relevance vectors, typically accounting for a very small portion of the training set (e.g., 5−20%). This unique attribute of sparsity makes the RVM attractive both in terms of generalization performance and test-time efficiency.

Figure 10 illustrates battery capacity estimation by a trained RVM model based on features extracted from voltage and current measurements during partial charge13. This SOH estimator possesses two desirable attributes: (1) statistical estimation, i.e., instead of providing a mere point estimate for the SOH parameter, this estimator generates a probability distribution as the parameter estimate; (2) sparsity, i.e., the estimator selectively utilizes only a small subset of feature vectors from the training dataset, known as relevance vectors, for real-time health inference (see, for example, the extreme posterior peakness at zero for ω2 and ωN). These two attributes offer several advantages for online health inference: (1) statistical estimation enables concurrent estimation of the parameter while quantifying the associated uncertainty, and (2) sparsity enhances the computational efficiency of online inference.

Example ML pipeline for capacity estimation using RVM trained on voltage-time and current-time data during cell charging. Figure adapted from Fig. 2 in Hu et al.13

RVM applications to battery diagnostics and prognostics

SOH estimation

As shown in Fig. 10, RVM can be applied to estimate battery capacity from features extracted from readily available voltage and current measurements. Such applications were first attempted in two studies, one focusing on implantable-grade LCO cells13 and the other focusing on NMC cells108. In the former study13, five characteristic features, some correlated strongly with capacity, were extracted from a test cell’s voltage vs. time and current vs. time curves at a specific charge cycle that started at a partially discharged state. These features were then fed as input (e.g., x in Fig. 10) into a trained RVM regression model that produced as output a Gaussian-distributed capacity estimate (e.g., Q in Fig. 10). The sparsity property of RVM made this regression model much smaller than a full-scale model. For example, each cross-validation trial used only <4% of training samples as the relevance vectors to build the final regression model, improving the computational efficiency and generalization of online capacity estimation.

In the later study108, a feature of predictive power was found to be the sample entropy of a short voltage sequence (time series) measured during a hybrid pulse power characterization test. This feature was concatenated with temperature to form the input vector to an RVM regression model, which outputs a Gaussian-distributed capacity estimate. It is interesting to see the inclusion of use condition parameters (e.g., temperature as reported in Hu et al.108) in the input of a data-driven ML model. Such a treatment builds condition awareness into the ML model, making it applicable under varying use conditions. Similar approaches have been taken in studies on capacity forecasting, as discussed in the section “GPR applications to battery diagnostics and prognostics”.

It is also widely accepted that a “one-ML-method-fits-all” approach does not work in battery diagnostics and prognostics. In some applications, one ML method may perform better than another regarding prediction accuracy. But, in other applications, accuracy comparisons between these two methods may look very different. Some limited efforts have been made to benchmark different ML methods for SOH estimation. An example is a comparative study on four data-driven ML methods, namely linear regression, support vector machine, RVM, and GPR, using features extracted from capacity vs. voltage curves during discharge109. These features included the standard deviations of the discharge capacity (Q) and cycle-to-cycle discharge capacity difference (ΔQ), calculated, respectively, from a measured sample of the discharge capacity vs. voltage curve at the current cycle (Q(V)) and a measured sample of the discharge capacity difference (between the current cycle and a fixed early cycle) vs. voltage curve (ΔQ(V)).

Like most other studies on battery diagnostics and prognostics, the above comparison exclusively focused on prediction accuracy by looking at error metrics such as RMSE and maximum absolute error. Few research or benchmarking efforts were made to study the quality of uncertainty quantification, i.e., how well an estimate of a model’s predictive uncertainty (known) on a test sample reflects the model’s prediction error (unknown) on this sample34. Additionally, we see that most studies worked with small datasets from limited numbers (mostly <100) of cells. In the small data regime, examining predictive uncertainty is even more important than in the big data regime, simply because (1) small training datasets possess limited representativeness of real-world scenarios, and (2) ML models may generalize poorly to OOD data. Although these existing studies reported high accuracy on small, carefully crafted test datasets mostly acquired from laboratory testing, these accuracy numbers are unlikely to generalize to real-world applications where we would expect wider ranges of and more complex use conditions, higher cell-to-cell variability, and larger measurement noise.

SOH forecasting and RUL prediction

The first application of RVM to battery prognostics was pioneered by a group of researchers at NASA’s Ames Research Center11. The same group of researchers also led the first application of GPR to battery prognostics98, as discussed in the section “RVM applications to battery diagnostics and prognostics SOH estimation”. The role RVM served was identifying mean regression curves on a charge transfer resistance vs. time dataset and electrolyte resistance vs. time dataset, both acquired from EIS. Each mean regression curve was then fitted to a simple two-parameter exponential model to obtain an estimate of the two model parameters. This estimate was treated as an initial (t = 0) estimate of the exponential model parameters in a discrete-time state-space model, solved using particle filters for capacity forecasting and RUL prediction. RUL prediction results were shown as an empirical probability distribution that became narrower and more centered at the true RUL as the cycle number where the prediction was made increased. Such plots later became a standard way to visualize results by probabilistic RUL prediction methods82,110,111,112.

Two later, more direct applications of RVM to battery prognostics were explored with the formulation of two vastly different approaches12,113. Wang et al. performed RVM regression on the capacity vs. cycle number data available to a test cell whose future capacity and RUL were unknown and then fitted a three-parameter variant of the well-known double exponential capacity fade model82 to only the capacity (Q) and cycle number (t) data of the relevance vectors12. Capacity forecasting and RUL prediction were achieved by extrapolating the fitted capacity fade model to a predefined EOL limit. It is important to note that, similar to the first application98, the RVM regression model, fitted to an SOH vs. cycle number dataset, was not directly used for capacity forecasting. More specifically, the forecasting was not done by extrapolating the RVM regression model, unlike the capacity forecasting studies using GPR models with empirical fade models as the “built-in” prior mean functions, as discussed in “RVM applications to battery diagnostics and prognostics SOH estimation”. Li et al. took a different approach by formulating capacity forecasting as a time series prediction problem113. RVM was employed to map the current and several past cycles’ capacity observations to the next cycle’s capacity observation, enabling one-step-ahead prediction. Capacity observations at cycles beyond the next cycle were predicted via iterative one-step-ahead prediction (i.e., marching over time). Again, capacity forecasting was not achieved by extrapolating an RVM regression model fitted to capacity vs. cycle number data. It suggests that simply extrapolating a data-driven regression model without consideration of the capacity fade trend may not yield reliable capacity forecasting, especially for long-term forecasting.

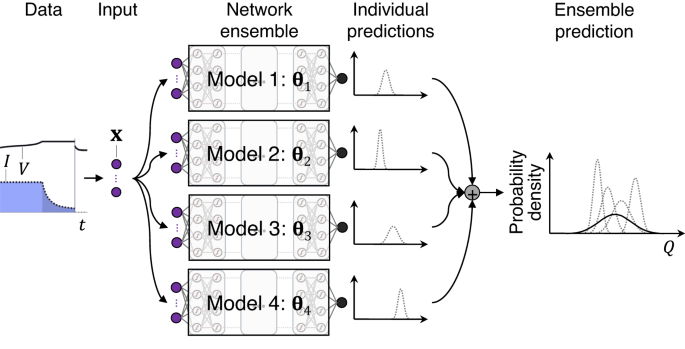

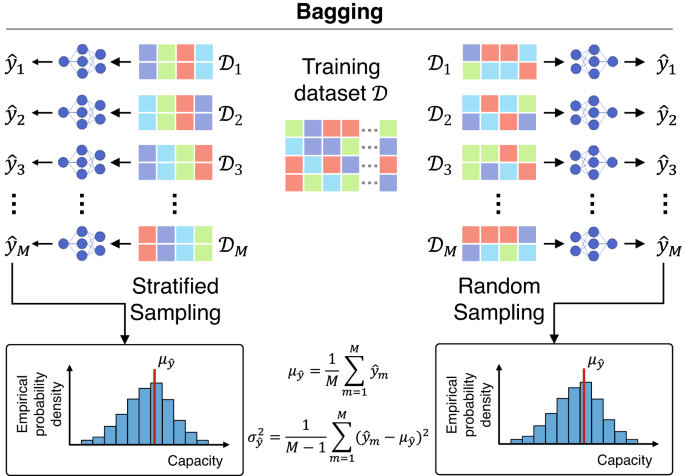

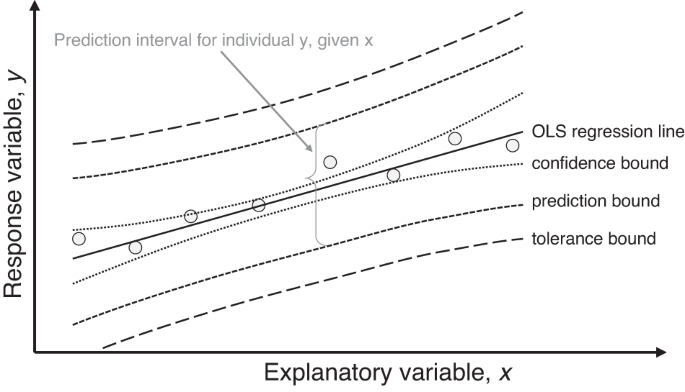

Bayesian neural network

Bayesian neural network methodology

A neural network fNN makes a prediction for an output variable at an input feature: (hat{y}={f}_{{{{rm{NN}}}}}({{{bf{x}}}};{{{boldsymbol{theta }}}})), where θ denotes all tunable parameters of the neural network (e.g., the neural network weight and bias terms). Given training samples (xi, yi), i = 1, ⋯ , N, the neural network training process seeks to set θ = θ* that minimizes a loss function, commonly the mean squared error:

This optimization problem is typically solved via gradient-based algorithms such as stochastic gradient descent114,115 or Adam116. The resulting θ* is single-valued, and subsequent new prediction using this trained neural network would also be single-valued as well: ({hat{y}}_{{{{rm{new}}}}}={f}_{{{{rm{NN}}}}}({{{{bf{x}}}}}_{{{{rm{new}}}}};{{{{boldsymbol{theta }}}}}^{* })).