Progressive enhancement and restoration for mural images under low-light and defective conditions based on multi-receptive field strategy

Introduction

Murals, created on walls through drawing, sculpture, or other painting methods, represent one of the earliest forms of human art. Over the centuries, Chinese mural painting has grown into a vast collection of immense artistic, cultural, and historical value, and these murals are a treasured embodiment of Chinese civilization. Their structural and textural information inspires modern painting techniques and artistic styles. Scholars analyze it to understand the aesthetics, daily life, religion, architecture, and folklore of ancient societies1. However, natural aging, biological deterioration, and man-made pollution have caused cracks, partial detachment, and mold growth in many ancient murals, and the damage is ongoing. Manual restoration of murals requires a great deal of specialized knowledge and is slow. Moreover, hand-painting on an original mural is irreversible, so any incorrect restoration can leave permanent defects2.

Recently, the computer-aided conservation of ancient murals has attracted significant attention, and digital restoration methods have proven to be both more efficient and reversible3. There is therefore an urgent need to apply efficient deep learning-based digital image restoration techniques to murals. The aim is to repair their damage as thoroughly as possible without physical intervention and to record the information they contain4. Unlike conventional image restoration problems, the restoration of ancient murals faces the following challenges.

(1) Murals are often photographed in dimly lit environments such as tombs and temples. Camera flashes and continuous lighting can cause irreversible damage, so they are prohibited near murals. The resulting low-light images have low brightness and low pixel values, and existing image restoration networks produce inadequate results on them. The challenge is to strengthen the network's ability to extract features from low-light images and restore defects. The result should retain its historical and cultural significance while being easier to view.

(2) The characteristics of mural data make training difficult. Because of the unique colors and textures of murals, directly applying models pre-trained on other scenes is ineffective. Training a mural-specific network, on the other hand, requires extensive labeled data, which is scarce. Moreover, murals exhibit many defect types with random distributions, and traditional restoration networks adapt poorly to them.

(3) Murals vary widely in color and are often very large, whereas traditional image restoration networks typically handle fixed image sizes and struggle with large images.
Many studies have applied traditional image restoration techniques to murals. However, no effective deep learning network in the field of mural restoration yet balances low-light enhancement with defect restoration for very large ancient murals.

We divide the mural restoration task into two components: enhancement and defect restoration. In our preliminary research, we found no existing study that effectively integrates these two components for mural restoration. We therefore review general methods for image enhancement and for defect restoration separately.

Early image enhancement methods adjusted image brightness and contrast through histogram equalization5. Histogram equalization is computationally cheap, but it is sensitive to image noise. It also handles complex cases poorly, such as regions with large local contrast differences.

Deep learning models can overcome these deficiencies. Trained on large amounts of data, they generalize and adapt better and can capture local image features, so they can handle enhancement tasks of different types and complexity. Lore et al.6 pioneered the use of deep architectures for low-light image enhancement. Zhang et al.7, inspired by Retinex theory, constructed a deep neural network to reconstruct reflectance and illumination maps. Wang et al.8 introduced intermediate illumination and proposed a new end-to-end network. Yang et al.9 proposed a deep recursive band network that applies adversarial learning to recover low-light images. Zhang et al.10 proposed a maximum entropy-based Retinex model to estimate illumination and reflectance.

Applying these enhancement networks to low-light mural images does improve their overall visual quality. The defects, however, remain, and scholars still find it difficult to extract valuable information from incomplete murals. This is the most urgent problem in mural restoration.
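For reference, global histogram equalization as described above can be sketched in a few lines of NumPy. This is a generic textbook version for 8-bit grayscale images and is not part of MER; `equalize_hist` is our own name.

Every pixel is remapped through the cumulative histogram of the whole image, so equal input values always receive equal outputs. This is why the method cannot adapt to regions with very different local contrast, and why it tends to stretch noise in dark areas along with the signal.

```python
import numpy as np

def equalize_hist(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image (textbook sketch)."""
    if img.dtype != np.uint8:
        raise ValueError("expected a uint8 image")
    hist = np.bincount(img.ravel(), minlength=256)   # pixel count per intensity level
    cdf = np.cumsum(hist)                            # cumulative distribution
    cdf_min = cdf[np.nonzero(cdf)[0][0]]             # CDF value of the darkest occupied level
    total = img.size
    if total == cdf_min:                             # constant image: nothing to stretch
        return img.copy()
    # Stretch the CDF linearly onto the full [0, 255] range and use it as a lookup table.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]
```

For example, `equalize_hist(np.array([[10, 10], [20, 30]], dtype=np.uint8))` spreads the three occupied levels to 0, 128 and 255.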

Existing image defect restoration methods fall broadly into traditional methods and deep learning-based methods.

Traditional image restoration often relied on diffusion techniques. Studies11,12,13,14 reconstructed missing areas by diffusing information from the surrounding regions into the gaps. Bertalmio et al.11 were the first to apply anisotropic diffusion iteratively for image restoration, enhancing clarity. However, because diffusion methods use only the information near the missing area, they struggle with large gaps.

Patch-based methods, in contrast, can recover larger regions15,16,17. They fill gaps by copying similar patches from neighboring image regions18,19. A. A. Efros and T. K. Leung20 pioneered the patch-based approach, synthesizing textures in gaps by reusing local textures from the input image. To reduce the time cost of patch matching, Barnes et al.21 proposed a randomized nearest-neighbor patch-matching algorithm. However, patch-based methods depend on the surrounding content to restore damaged areas. If the surrounding texture is dissimilar or too complex, the desired results cannot be obtained.
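To make the diffusion idea concrete, here is a minimal isotropic-diffusion inpainting sketch in NumPy. It is a simplified stand-in (plain heat-equation smoothing restricted to the hole), not the anisotropic scheme of Bertalmio et al. The names `diffuse_inpaint` and `iters` are illustrative.

Each iteration replaces every missing pixel by the mean of its four neighbors, while known pixels stay fixed. Information therefore seeps inward from the hole boundary. The center of a large hole receives only heavily averaged values, which is why such methods blur large gaps.

```python
import numpy as np

def diffuse_inpaint(img: np.ndarray, mask: np.ndarray, iters: int = 500) -> np.ndarray:
    """Fill pixels where mask is True by iterative 4-neighbor averaging.

    img:  2-D float array (one channel); values inside the hole are ignored.
    mask: 2-D bool array, True marks missing pixels.
    """
    out = img.astype(float).copy()
    out[mask] = out[~mask].mean()              # neutral initial guess for the hole
    for _ in range(iters):
        padded = np.pad(out, 1, mode="edge")   # replicate image borders
        avg = (padded[:-2, 1:-1] + padded[2:, 1:-1] +    # up and down neighbors
               padded[1:-1, :-2] + padded[1:-1, 2:]) / 4.0  # left and right neighbors
        out[mask] = avg[mask]                  # update only the missing pixels
    return out
```

On a small hole inside a smooth intensity ramp, this recovers the missing values almost exactly. On a large hole, or across a sharp edge, the same averaging produces the blur described above.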

Deep learning has become a popular and effective option for image restoration. These methods can be broadly categorized as progressive, structure-guided, attention-based, convolution-based, and diversified restoration.

Progressive approaches restore the missing regions step by step. Yu et al.22 used a coarse-to-fine strategy: a simple dilated convolutional network makes a coarse prediction, and a second network takes that prediction as input and predicts a finer result. Zhu et al.23 proposed a coarse-to-fine cascade refinement architecture. Li et al.24 used a part-to-full strategy in which the easier parts are solved first. Their results then serve as additional information that progressively strengthens the constraints for solving the harder parts.

Structure-guided restoration relies on the structure of the known regions; Yang et al.25 used a gradient-guided restoration strategy.

Attention-based strategies borrow the concept of human attention to improve the accuracy and efficiency of restoration and the retention of semantic information. Yu et al.22 used a contextual attention approach. Chen et al.26 proposed a network that incorporates a multiscale feature module and an improved attention module. Zeng et al.27 used an attention transfer strategy and proposed a pyramid contextual encoder. The encoder progressively learns high-level semantic feature maps by attending to region affinity and transfers the learned attention to neighboring higher-resolution feature maps. Chen et al.28 proposed an image restoration model based on partial multiscale channel attention and residual networks.

Convolution-based restoration uses masks to indicate the missing regions within the convolution operation.
Xie et al.29 used a bidirectional convolution-based strategy and proposed learnable attention maps that adapt efficiently to irregular holes and their propagation through convolutional layers.

Diversified restoration methods aim to generate multiple visually plausible results. Zheng et al.30 proposed a framework with two parallel paths that can generate multiple different solutions with reasonable content for a single masked input. Chen et al.31 proposed a method that combines multilevel information compensation with U-Net. Zhang et al.32 used a semantic prior learner and a fully context-aware image generator to restore defective images.

However, most of these methods target portraits, street scenes, and similar images, and focus on the quality of the restored regions. Murals have very different colors and textures, and their application scenarios have unique characteristics. Because flash photography can damage artifacts, it is banned, so captured images are often low-light. This makes it extremely difficult for a model to extract features and restore them. Moreover, the defect types are varied and unusual, and corresponding masks must be generated to describe the defective areas that need to be restored. These challenges make it difficult to obtain satisfactory results when the above networks are applied directly to defective murals.

Several methods address murals specifically. Cao et al.33 used generative adversarial networks to restore missing mural regions and introduced global and local discriminators to evaluate the restored images. Lv et al.34 separated the contour-line pixel regions from the content pixel regions of a mural image by connecting two U-Net-based generators. Merizzi et al.35 used the Deep Image Prior method to restore defective murals. Li et al.36 proposed a line-drawing-guided progressive mural restoration method. Song et al.37 proposed AGAN, an autoencoder-based generative adversarial network for restoring images of Dunhuang wall paintings.

These methods perform well in structural reconstruction and color correction under conventional conditions. However, none of them accounts for the fact that defective murals are, in practice, captured under low-light conditions. Consequently, even when they fill in the defective areas well, the restored murals remain difficult to observe.

In this paper, we address the two main challenges of real mural restoration scenarios, low light and defects, simultaneously. We use a two-stage framework for batch restoration of ancient murals in archeological settings. We propose the MER (Mural Enhance and Restoration) network, designed to restore defective murals captured under low-light conditions and to produce high-quality restoration results. The two-stage strategy is particularly suited to low-light mural images. Their low pixel values make texture and structural features hard to extract, which hampers contextual learning and restoration by neural networks.

Our network comprises two main stages: a low-light image enhancement stage and a defect restoration stage.

The first stage uses weight-sharing cascaded illumination learning and a self-calibration module to enhance low-light images. This improves clarity and aids the recognition of structure and texture, which in turn enables the second-stage restoration network to extract texture and structural features effectively.

In the second stage, the brightness-enhanced image is processed by restoration networks with different receptive fields, which restore both global and local defects. Discriminators and a combination of loss functions make the restorations more realistic and improve overall image quality, so that texture and structure are easier for humans to perceive.

The contributions of this article are as follows:

-

We propose the MER, a two-stage mural restoration model that effectively handles the restoration of defects while considering the impact of low-light imaging, resulting in higher-quality restoration of ancient murals.

-

We apply low-light simulation and image-splitting strategies to expand the dataset effectively; the splitting strategy improves both training and restoration outcomes.

-

We design a gradient-based strategy for detecting multiple kinds of defect areas. It identifies the regions requiring restoration in real-world scenarios and broadens the applicability of the model.

-

We provide a freely available web server with the pre-trained MER model as its backend, so that end users can restore mural images online.

Methodology

We designed our work around the specific requirements of mural restoration applications.

First, we provide a straightforward, outlier-based method for generating masks that describe mural defects.

Second, to address the limited lighting under which mural images are captured, we designed a light enhancement network tailored to murals. It improves the visibility of the murals and also helps the defect restoration network extract features.

Third, we employ a multi-receptive field method to restore the defective regions, taking the unique characteristics of murals into account.

Each method is described in detail in the following sections.

Flaw finding

In practical applications, defective ancient murals must be analyzed to locate the flawed regions that the defect restoration network should repair. For this purpose, we design a simple yet effective strategy for describing the defective regions.

First, we compute the pixel gradients of the mural image. If the color of a defective region does not belong to the original image, the boundary of that region can be obtained by screening outliers in the pixel gradient. The threshold \(G_{th}\) for gradient outliers is defined as follows:

where \(G_{avg}\) is the mean of the pixel gradient, \(\sigma_{G}\) is its standard deviation, and \(\lambda_{G}\) is the gradient threshold parameter, which was set to 3 in our experiments.
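These definitions point to the familiar mean-plus-\(\lambda\)-standard-deviations outlier rule. As an assumed form, and not a transcription of the authors' equation, the gradient threshold would read

$$G_{th} = G_{avg} + \lambda_{G}\,\sigma_{G}$$

With \(\lambda_{G} = 3\), pixels whose gradient lies more than three standard deviations above the mean are treated as candidate defect boundaries.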

After obtaining the boundary of the defective region, we compute the pixel values along it. We assume that defect pixels have similar values that are outliers relative to the rest of the mural. The threshold \(P_{th}\) for pixel outliers is therefore defined as follows:
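Assume a mean-plus-\(\lambda\)-standard-deviations outlier rule applied to the pixel values sampled on the detected boundary. Under that assumption (our reading, not the authors' printed equation), the threshold would take the form

$$P_{th} = P_{avg} + \lambda_{P}\,\sigma_{P}$$

Pixels brighter than \(P_{th}\) are then marked as defective.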

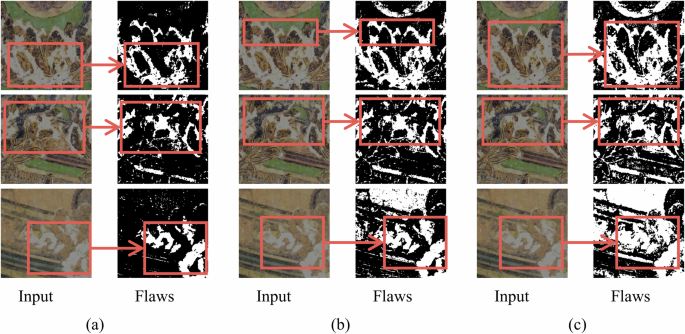

where \(P_{avg}\) is the mean of the pixel values at the edge of the defect, \(\sigma_{P}\) is their standard deviation, and \(\lambda_{P}\) is the pixel-value threshold parameter for the defective region, which was set to 2 in our experiments. Examples of mask generation for other parameter combinations are shown in Fig. 1.

a Defect markers obtained using the image gradient method with \(\lambda_{G} = 4\), \(\lambda_{P} = 3\). b Defect markers obtained using the image gradient method with \(\lambda_{G} = 4\), \(\lambda_{P} = 2\). c Defect markers obtained using the image gradient method with \(\lambda_{G} = 5\), \(\lambda_{P} = 1.5\).

Finally, we process the mural image channel by channel, mark the regions whose pixel values exceed the threshold \(P_{th}\) as defective, and generate the defect description map (mask) from them.
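The three flaw-finding steps can be sketched in NumPy as follows. This is an illustrative reading of the text, not the authors' code. The assumptions are:

- both thresholds use the mean-plus-\(\lambda\sigma\) form;
- the pixel gradient is the magnitude of `np.gradient` on the channel mean;
- \(P_{th}\) is computed separately for each channel;
- a pixel is marked defective if any channel exceeds its threshold.

The function name `find_flaws` is hypothetical.

```python
import numpy as np

def find_flaws(img: np.ndarray, lam_g: float = 3.0, lam_p: float = 2.0) -> np.ndarray:
    """Return a binary defect mask (1 = missing pixel) for an H x W x C image in [0, 1].

    Assumed thresholds: G_th = G_avg + lam_g * sigma_G and
    P_th = P_avg + lam_p * sigma_P (per channel, over boundary pixels).
    """
    img = img.astype(float)
    gray = img.mean(axis=2)
    gy, gx = np.gradient(gray)                # finite differences along rows and columns
    grad = np.hypot(gx, gy)                   # gradient magnitude

    # Step 1: gradient outliers approximate the boundaries of defects.
    g_th = grad.mean() + lam_g * grad.std()
    boundary = grad > g_th
    if not boundary.any():
        return np.zeros(gray.shape, dtype=np.uint8)

    # Step 2: statistics of the pixel values on those boundaries, per channel.
    edge_px = img[boundary]                   # shape (n_boundary, C)
    p_th = edge_px.mean(axis=0) + lam_p * edge_px.std(axis=0)

    # Step 3: pixels above the threshold in any channel are marked defective.
    mask = (img > p_th).any(axis=2)
    return mask.astype(np.uint8)
```

On a texture-free toy image, such as a flat background with one bright square, the boundary samples are half defect and half background. In that case a smaller \(\lambda_{P}\) than the paper's value of 2 is needed to isolate the square. On real murals the boundary statistics also include the mural's own edges, which is presumably why larger values work there.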

Low-light image enhancement stage

Murals are typically found indoors, in grottoes, tombs, temples, and similar sites. Real mural conservation sites therefore tend to be dimly lit, and the captured mural images are low-light. Low-light images have poor visibility: even after the defective areas are restored, the most valuable texture information in the murals remains hard to discern. To recover valuable mural images, we design a low-light image enhancement network.

Classical Retinex theory relates the low-light observation \(y\) to the desired clear image \(z\) through \(y = z \otimes x\). Here \(x\) is the illumination component, the core optimization target of low-light enhancement. According to Retinex theory, a good estimate of the illumination yields good enhancement results.

The illumination and the low-light observation of a mural are often similar or linearly related, and the limited dataset calls for a small number of model parameters. We therefore follow work38 and use an illumination estimation network \(\mathcal{H}_{\theta}\) with shared structure and parameters to form the enhancement module \(E\), where \(\theta\) denotes learnable parameters. To make the outputs of different rounds of progressive optimization converge to the same state, we adopt a network \(\mathrm{K}_{\vartheta}\) with learnable parameters \(\vartheta\). It forms a self-calibrating module \(S\), which progressively corrects the input of the enhancement module in each round. The progressive optimization is formulated as follows:

where \(k = t + 1\), \(v^{t}\) is the transformed input in round \(t\) of the progressive process, \(u^{t}\) is the residual in round \(t\), and \(x^{t}\) is the illumination in round \(t\), with \(t = 0, 1, \ldots\). The operators \(\otimes\) and \(\oslash\) denote element-wise multiplication and division, respectively.
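The progressive optimization in the cited work38 (SCI) can be written with the symbols defined above as shown below. This is our reconstruction from that work, assuming MER adopts it unchanged, with \(s^{t}\) denoting the output of \(\mathrm{K}_{\vartheta}\) and \(v^{0} = y\) (that is, \(s^{0} = 0\)):

$$
\begin{aligned}
E:&\quad u^{t} = \mathcal{H}_{\theta}(v^{t}), \qquad x^{k} = v^{t} + u^{t},\\
S:&\quad z^{k} = y \oslash x^{k}, \qquad s^{k} = \mathrm{K}_{\vartheta}(z^{k}), \qquad v^{k} = y + s^{k}.
\end{aligned}
$$

Under this reading, \(z^{k}\) is the enhanced image produced in round \(t\), and the next round starts from the recalibrated input \(v^{k}\).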

To train the low-light image enhancement network, we follow works39,40 and use an unsupervised loss function \(\mathcal{L}_{E}\), defined as:

where:

- \(T\) is the total number of enhancement rounds, set to 6 in our experiments;
- \(N\) is the total number of pixels;
- \(s^{t-1}\) is the output of \(\mathrm{K}_{\vartheta}\);
- \(i\) indexes the \(i\)-th pixel;
- \(c\) is an image channel in the YUV color space;
- \(\sigma = 0.1\) is the standard deviation of the Gaussian kernel;
- \(\alpha\) and \(\beta\) are weights, set to 1.5 and 1 in our experiments.
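A NumPy sketch of such an unsupervised loss follows. It assumes the fidelity-plus-smoothness form used in SCI, which has two terms:

- a squared fidelity term that pulls each illumination estimate \(x^{t}\) toward \(y + s^{t-1}\);
- an edge-aware smoothness term whose weights are Gaussian in the YUV differences of the input.

Horizontal and vertical neighbor pairs stand in for the neighborhood, and reductions average over pixels, playing the role of \(N\). BT.601 YUV coefficients are used for the color conversion. These choices and the function names are our assumptions.

```python
import numpy as np

# BT.601 RGB -> YUV matrix (assumed color conversion).
_RGB2YUV = np.array([[0.299, 0.587, 0.114],
                     [-0.14713, -0.28886, 0.436],
                     [0.615, -0.51499, -0.10001]])

def smoothness(x: np.ndarray, y_yuv: np.ndarray, sigma: float = 0.1) -> float:
    """Edge-aware smoothness over vertical and horizontal neighbor pairs of an H x W x 3 map."""
    total = 0.0
    for axis in (0, 1):
        dx = np.abs(np.diff(x, axis=axis)).sum(axis=2)       # |x_i - x_j| summed over channels
        dy2 = (np.diff(y_yuv, axis=axis) ** 2).sum(axis=2)   # sum_c (y_ic - y_jc)^2
        w = np.exp(-dy2 / (2 * sigma ** 2))                  # Gaussian affinity weights
        total += (w * dx).mean()
    return total

def enhancement_loss(y, illums, calibs, alpha=1.5, beta=1.0, sigma=0.1):
    """L_E = alpha * fidelity + beta * smoothness, summed over the T rounds.

    y:      low-light input, H x W x 3 in [0, 1]
    illums: list of T illumination maps x^1 .. x^T
    calibs: list of T calibration maps s^0 .. s^(T-1), with s^0 = 0
    """
    y_yuv = y @ _RGB2YUV.T
    fid = sum(np.mean((x - (y + s)) ** 2) for x, s in zip(illums, calibs))
    smo = sum(smoothness(x, y_yuv, sigma) for x in illums)
    return alpha * fid + beta * smo
```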

Defect restoration stage

The final output of the low-light image enhancement stage serves as the input \(I_{in}\) of the image restoration network, which restores the defective areas. Previous work has shown that networks with a larger receptive field better restore overall structure and large color blocks. Networks with a smaller receptive field, by contrast, excel at restoring detailed local textures. Inspired by work41, we use networks with different receptive fields for distinct restoration tasks, following a coarse-to-fine strategy. (The receptive field of a neuron is the set of input pixels that are path-connected to it.) This choice has the following advantages for mural restoration:

(1) Murals usually contain delicate textures and complex patterns. The multi-receptive field strategy lets the model capture mural features at different scales and integrate as much of the information in the image as possible. The model first uses a network with a large receptive field to restore the global structure. It then uses networks with a small receptive field, together with other components, to restore local details. Each restoration sub-stage focuses on a specific goal, yielding a more detailed and natural step-by-step result.

(2) Because murals exhibit many types and sizes of damage, multiple receptive fields make the model more robust to different defect patterns.

To roughly recover the overall structure of the damaged mural, we first feed the mural \(I_{in}\) into a coarse defect restoration network \(\mathrm{Net}_{C}\) with a large receptive field. It is accompanied by a binary mask \(M\) describing the missing regions, where 0 denotes a valid pixel and 1 a missing pixel. During training, \(M\) is generated randomly; in real applications, it is produced automatically by our flaw-finding method.

\(\mathrm{Net}_{C}\) includes eight downsampling and upsampling operations and uses long skip connections between the encoder and decoder to recover information lost during downsampling. The output of \(\mathrm{Net}_{C}\) is \(I_{out}^{C}\).

We also incorporate a patch-based discriminator with spectral normalization42 to make the restoration more realistic. The discriminator takes the real mural and the restored mural as inputs and outputs a two-dimensional feature map in \(\mathbb{R}^{32\times 32}\). Each element of this map distinguishes real from generated content. The merged image and the pixel-level loss of the coarse restoration are expressed as follows:

The adversarial losses are defined as follows:

where:

- \(M_{r}\) is the inverted mask (i.e., 0 becomes 1 and 1 becomes 0);
- \(I_{mer}^{C}\) is the merged image;
- \(I_{out}^{C}\) is the output of \(\mathrm{Net}_{C}\);
- \(I_{gt}\) is the ground-truth mural corresponding to the input;
- \(\mathcal{L}_{r}^{C}\) is the pixel-wise reconstruction loss of the coarse defect restoration network;
- \(\mathcal{L}_{G}^{C}\) is its adversarial generation loss;
- \(\mathcal{L}_{D}\) is the discriminator loss;
- \(\odot\) denotes the element-wise product;
- sum(\(M\)) is the number of non-zero elements in \(M\).
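As an illustration only, here is one plausible NumPy reading of these definitions:

- the merged image keeps the known pixels of \(I_{in}\) (via \(M_{r} = 1 - M\)) and takes \(I_{out}^{C}\) inside the holes;
- the reconstruction term is an L1 error over the hole region, normalized by sum(\(M\));
- the adversarial terms use the hinge formulation commonly paired with spectrally normalized patch discriminators.

The normalization and the hinge choice are our assumptions, not taken from the paper. `d_real` and `d_fake` stand for the 32 × 32 discriminator maps.

```python
import numpy as np

def merge(i_in, i_out, m):
    """I_mer = I_in * M_r + I_out * M, with M_r = 1 - M (1 marks a missing pixel)."""
    m_r = 1.0 - m
    return i_in * m_r + i_out * m

def recon_loss(i_out, i_gt, m):
    """L1 error inside the holes, normalized by sum(M) (assumed form)."""
    return np.abs((i_out - i_gt) * m).sum() / max(m.sum(), 1.0)

def d_hinge_loss(d_real, d_fake):
    """Discriminator hinge loss averaged over the 32 x 32 patch map."""
    return np.maximum(0.0, 1.0 - d_real).mean() + np.maximum(0.0, 1.0 + d_fake).mean()

def g_hinge_loss(d_fake):
    """Generator adversarial loss: push the patch scores of restored images up."""
    return -d_fake.mean()
```

Here `m` has shape H × W × 1 so that it broadcasts over the color channels of H × W × 3 images.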

After the coarse restoration, the local structure and texture of the mural must be restored in finer detail. We feed the coarsely restored mural into a local restoration network \(\mathrm{Net}_{L}\) with a small receptive field. This network consists of two downsampling operations, four residual blocks, and two upsampling operations. It processes local regions of the input image in a sliding-window manner, which better restores the local information of the mural. An attention module is also used in this step.

The pixel-wise reconstruction loss of this step, \(\mathcal{L}_{r}^{L}\), is computed in the same way as \(\mathcal{L}_{r}^{C}\), except that \(I_{out}^{C}\) is replaced by the output \(I_{out}^{L}\) of \(\mathrm{Net}_{L}\) in Eq. (8). Likewise, \(I_{mer}^{L}\) is merged in the same way as \(I_{mer}^{C}\), with \(I_{out}^{C}\) replaced by \(I_{out}^{L}\) in Eq. (7).

Following works43,44, we use a total variation loss \(\mathcal{L}_{tv}^{L}\) to smooth neighboring pixels in the hole region. We add a perceptual loss \(\mathcal{L}_{per}^{L}\)45 and a style loss \(\mathcal{L}_{sty}^{L}\)46, both computed in the feature space of VGG-1647. Combining these with \(\mathcal{L}_{r}^{L}\) into the objective \(\mathcal{L}_{L}\) yields better recovery. The added pixel-level loss function is defined as follows:

The loss function based on feature space computation can be defined as follows:

where \(\mathcal{F}_{i}\) is the feature map of the \(i\)-th layer of the pre-trained VGG-16 network and \(\mathcal{G}_{i} = \mathcal{F}_{i}(\cdot)\,\mathcal{F}_{i}(\cdot)^{\mathrm{T}}\) is the Gram matrix36, with \(i \in \{5, 10, 17\}\).

Finally, the total loss function of this restoration step is defined as Eq. (14), where \(\lambda_{tv}\), \(\lambda_{per}\), and \(\lambda_{sty}\) are weight parameters, set to 0.1, 0.05, and 120 in our experiments, respectively. Quantitative experiments on the loss weights are shown in Supplementary Data Table S1.
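For concreteness, here is a NumPy sketch of the added terms under common definitions from the cited works:

- an anisotropic total-variation term over neighbor pairs that touch the hole;
- a perceptual L1 distance between feature maps;
- a style distance between Gram matrices.

Feature maps are passed in as arrays of shape C × H × W, standing in for VGG-16 layers 5, 10 and 17. The Gram normalization by C·H·W, the TV form, and all function names are our assumptions; the default weights are the values reported above.

```python
import numpy as np

def tv_loss(img, m):
    """Mean absolute difference between neighboring pixels, for pairs touching the hole.

    img: H x W x C image, m: H x W x 1 mask (1 = hole).
    """
    dv = np.abs(img[1:] - img[:-1]) * np.maximum(m[1:], m[:-1])
    dh = np.abs(img[:, 1:] - img[:, :-1]) * np.maximum(m[:, 1:], m[:, :-1])
    return dv.mean() + dh.mean()

def gram(f):
    """Normalized Gram matrix of a C x H x W feature map."""
    c, h, w = f.shape
    flat = f.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)

def perceptual_loss(feats_out, feats_gt):
    """L1 distance between corresponding feature maps, summed over layers."""
    return sum(np.abs(a - b).mean() for a, b in zip(feats_out, feats_gt))

def style_loss(feats_out, feats_gt):
    """L1 distance between corresponding Gram matrices, summed over layers."""
    return sum(np.abs(gram(a) - gram(b)).mean() for a, b in zip(feats_out, feats_gt))

def local_loss(l_r, img, m, feats_out, feats_gt, lam_tv=0.1, lam_per=0.05, lam_sty=120.0):
    """L_L = L_r + lam_tv * L_tv + lam_per * L_per + lam_sty * L_sty."""
    return (l_r + lam_tv * tv_loss(img, m)
            + lam_per * perceptual_loss(feats_out, feats_gt)
            + lam_sty * style_loss(feats_out, feats_gt))
```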

After the local refinement of texture and structure, the mural shows better texture restoration. To improve the overall visual effect, we feed the output of the local refinement network into the global refinement network \(\mathrm{Net}_{G}\). This network adds three attention modules in front of the decoder of the \(\mathrm{Net}_{C}\) architecture. Consider the feature map \(F \in \mathbb{R}^{C\times HW}\) and the feature vectors \(F_{i}\) and \(F_{j}\) at positions \(i\) and \(j\). Their affinity \(s_{i,j}\), an entry of the matrix \(S \in \mathbb{R}^{HW\times HW}\), is computed as:

Next, we compute the weighted average of \(F\), \(\bar{F} = F S \in \mathbb{R}^{C\times HW}\), by matrix multiplication. Finally, we concatenate \(F\) and \(\bar{F}\) and apply a 1 × 1 convolutional layer to restore the original channel count of \(F\). This attention mechanism further improves the restoration.
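The attention block described above can be sketched in NumPy as follows. We assume the affinity is a softmax-normalized dot product, \(s_{i,j} = \exp(F_i^{\mathrm{T}} F_j) / \sum_{i} \exp(F_i^{\mathrm{T}} F_j)\). Each column of \(S\) then sums to one, and \(\bar{F} = FS\) is a weighted average. The 1 × 1 convolution is written as a matrix `w` of shape \(C \times 2C\). Both choices and the name `attention_block` are illustrative.

```python
import numpy as np

def attention_block(feat: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Non-local attention on a C x H x W map followed by a 1x1 conv back to C channels."""
    c, h, wd = feat.shape
    f = feat.reshape(c, h * wd)                   # F in R^{C x HW}
    logits = f.T @ f                              # F_i^T F_j for all position pairs
    logits -= logits.max(axis=0, keepdims=True)   # numerical stability for the softmax
    s = np.exp(logits)
    s /= s.sum(axis=0, keepdims=True)             # softmax over i: each column of S sums to 1
    f_bar = f @ s                                 # weighted average F_bar = F S, R^{C x HW}
    cat = np.concatenate([f, f_bar], axis=0)      # channel concatenation, R^{2C x HW}
    out = w @ cat                                 # 1x1 conv == the same linear map at every position
    return out.reshape(c, h, wd)
```

A 1 × 1 convolution applies the same linear map to every spatial position, which is why a single matrix product over the flattened map suffices here.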

The structure and processing flow of the MER model are shown in Fig. 2. The training loss \(\mathcal{L}_{G}\) of \(\mathrm{Net}_{G}\) is defined in the same way as \(\mathcal{L}_{L}\), with \(I_{out}^{L}\) replaced by \(I_{out}^{G}\). The training loss of the whole image restoration network is then the sum of the losses of the three restoration subnetworks and the GAN losses: \(\mathcal{L}_{R} = \mathcal{L}_{r}^{C} + \mathcal{L}_{G}^{C} + \mathcal{L}_{D} + \mathcal{L}_{L} + \mathcal{L}_{G}\).

The mural to be restored is first divided into 256 × 256-pixel sub-images, which are fed sequentially into the restoration model, and the corresponding defect masks are generated automatically. Murals that are captured in low light and contain defects are first light-enhanced. The output of the enhancement network is then fed, together with the mask, into the defect restoration network, following the image merging in Eq. (7). After all sub-images have been enhanced and restored, they are stitched together to give the final result.

In Fig. 2, the violet block in the local refinement network (\(\mathrm{Net}_{L}\)) denotes a two-layer residual block. The three green blocks in the global refinement network (\(\mathrm{Net}_{G}\)) denote attention blocks at resolutions of 16 × 16, 32 × 32, and 64 × 64, respectively.
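The split-process-stitch procedure can be sketched as below. The paper does not say how borders that are not multiples of 256 are handled. Here we assume edge padding up to a multiple of the tile size, followed by cropping after stitching. `restore_tiled` and `process` are hypothetical names; `process` stands in for the full enhancement and restoration pipeline applied to one tile.

```python
import numpy as np
from typing import Callable

def restore_tiled(img: np.ndarray, process: Callable[[np.ndarray], np.ndarray],
                  tile: int = 256) -> np.ndarray:
    """Split an H x W x C image into tile x tile blocks, process each, and stitch them back."""
    h, w = img.shape[:2]
    ph, pw = (-h) % tile, (-w) % tile             # padding needed to reach a multiple of tile
    padded = np.pad(img, ((0, ph), (0, pw), (0, 0)), mode="edge")
    out = np.empty_like(padded)
    for r in range(0, padded.shape[0], tile):     # visit tiles in row-major order
        for c in range(0, padded.shape[1], tile):
            out[r:r + tile, c:c + tile] = process(padded[r:r + tile, c:c + tile])
    return out[:h, :w]                            # drop the padding again
```

With `process` set to the identity, the function returns the input unchanged, which is a quick check that tiling and stitching are lossless.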

Algorithm 1

Restoration of a single mural image using the MER model

Input: low-light mural image \(\mathbb{R}\) containing defects

Output: mural image \(\mathbb{R}'\) restored by the MER model

1 Split \(\mathbb{R}\) in sequence into a set \(D\) of \(N\) square sub-images of 256 × 256 pixels. Initialize the MER model and load the pre-trained weights

2 for each sub-image \(y\) in \(D\) do

3  \(v^{1} = y\)

4  for \(t = 1\) to \(6\) do

5   \(u^{t} = \mathcal{H}_{\theta}(v^{t})\)

6   \(z^{t} = y \oslash u^{t}\)

7   \(v^{t+1} = \mathrm{K}_{\vartheta}(z^{t}) + y\)

8  end for

9  \(I_{in} = z^{6}\)

10  \(M\) = MaskGeneration(\(I_{in}\))

11  \(I_{mer}^{C} = I_{in} \odot (1 - M) + M\)

12  \(I_{mer}^{L} = I_{in} \odot (1 - M) + \mathrm{Net}_{C}(I_{mer}^{C}) \odot M\)

13  \(I_{mer}^{G} = I_{in} \odot (1 - M) + \mathrm{Net}_{L}(I_{mer}^{L}) \odot M\)

14  \(I_{out}^{G} = I_{in} \odot (1 - M) + \mathrm{Net}_{G}(I_{mer}^{G}) \odot M\) ➩ \(I_{out}^{G}\) is the restoration result of \(y\)

15 end for

16 Stitch the restoration results of the sub-images together in sequence to obtain the final result
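A compact Python rendering of the per-sub-image loop in Algorithm 1 is given below. The networks are passed in as plain callables. `h_theta`, `k_vartheta`, `net_c`, `net_l`, `net_g` and `make_mask` are hypothetical stand-ins for \(\mathcal{H}_{\theta}\), \(\mathrm{K}_{\vartheta}\), the three restoration networks and the flaw-finding step.

The enhancement loop follows the listed updates for all six rounds: estimate \(u^{t}\), divide it out to obtain \(z^{t}\), and recalibrate the next input \(v\). It passes the last \(z\) on as \(I_{in}\). This reading of the loop, and the small epsilon that guards the division, are our assumptions.

```python
import numpy as np
from typing import Callable

Array = np.ndarray
Net = Callable[[Array], Array]

def mer_restore_tile(y: Array, h_theta: Net, k_vartheta: Net, make_mask: Net,
                     net_c: Net, net_l: Net, net_g: Net, rounds: int = 6,
                     eps: float = 1e-4) -> Array:
    """Enhance a low-light tile y, then restore its defects coarse-to-fine."""
    v = y
    z = y
    for _ in range(rounds):
        u = h_theta(v)                  # estimated illumination
        z = y / np.maximum(u, eps)      # Retinex: clear image = observation / illumination
        v = k_vartheta(z) + y           # self-calibrated input for the next round
    i_in = z                            # enhanced image fed to the restoration stage

    m = make_mask(i_in)                 # 1 = defective pixel, shape H x W x 1
    keep = i_in * (1 - m)               # known pixels are always preserved
    i_mer_c = keep + m                  # holes filled with 1 before the coarse stage
    i_mer_l = keep + net_c(i_mer_c) * m
    i_mer_g = keep + net_l(i_mer_l) * m
    return keep + net_g(i_mer_g) * m
```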

Parameter updating strategy

Our two-stage strategy pursues two goals at once: restoring defective ancient murals captured under low-light conditions and making them easier to observe. Because the output of the low-light enhancement phase is the input of the restoration phase, the two phases are coupled. During training, an optimizer adjusts the network parameters based on the loss to minimize the difference between the generated output and the target.

If both phases updated their parameters simultaneously, the input to the restoration phase would be unstable, because the enhancement network's parameters keep changing. This would make the restoration stage harder to learn. In addition, the two phases differ in complexity and in the number of training samples needed to optimize them.

We therefore use an alternating parameter update strategy. In each epoch, we divide the dataset into subsets of 60 samples. The first 6 samples of each subset are used to optimize the enhancement network; during this phase, the parameters of the restoration network are frozen and only the low-light enhancement network is updated. The remaining 54 samples of each subset are used to optimize the defect restoration network while the parameters of the enhancement network are frozen.

The loss function of the whole MER model, \(\mathcal{L}_{MER}\), can be expressed as follows:

where \(\lambda_{R}\) is set to 1 in the restoration optimization phase and 0 in the enhancement optimization phase, and \(\lambda_{E}\) is set to 0 in the restoration optimization phase and 1 in the enhancement optimization phase.
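The alternating schedule and the phase weights can be expressed as a small helper. Given the definitions of \(\lambda_{E}\) and \(\lambda_{R}\), we read the total loss as \(\mathcal{L}_{MER} = \lambda_{E}\mathcal{L}_{E} + \lambda_{R}\mathcal{L}_{R}\). This form, the function names, and selecting the phase from a sample's position within the epoch are our assumptions.

```python
from typing import Tuple

def phase_weights(sample_idx: int, subset: int = 60,
                  enhance_n: int = 6) -> Tuple[float, float]:
    """Return (lambda_E, lambda_R) for the sample at position sample_idx in an epoch.

    Within every subset of `subset` samples, the first `enhance_n` update only the
    enhancement network; the rest update only the restoration network.
    """
    if sample_idx % subset < enhance_n:
        return 1.0, 0.0      # enhancement phase: restoration network frozen
    return 0.0, 1.0          # restoration phase: enhancement network frozen

def mer_loss(loss_e: float, loss_r: float, sample_idx: int) -> float:
    """L_MER = lambda_E * L_E + lambda_R * L_R under the assumed form."""
    lam_e, lam_r = phase_weights(sample_idx)
    return lam_e * loss_e + lam_r * loss_r
```

With the reported batch size of 6, each subset corresponds to one enhancement batch followed by nine restoration batches. In a framework such as PyTorch, freezing would typically be done by stepping only the optimizer of the active network.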

Experiments

This section begins with an overview of the mural restoration outcomes achieved using the MER model. Subsequently, we detail the specific experiments, covering the dataset, experimental setup, and evaluation metrics. The visual outcomes and metric data from these experiments demonstrate the MER model’s effective mural restoration capabilities.

Experimental settings

Dataset

In order to achieve the best possible mural restoration results, we collect data on different styles of murals and expand the dataset.

Mural data

We first collected images of famous Chinese murals. These include the murals of Guangsheng Temple in Hongdong, the water-and-land murals of Princess Temple in Fanchi, and the murals of Yongle Palace in Ruicheng.

Murals are typically located on the interior walls of tombs, grottoes, temples, and palaces, where strong lighting is restricted to protect them. Many ancient murals are therefore scanned and preserved under dark imaging conditions, which makes the image data hard to observe. To mimic the low brightness of real-world data collection, we artificially reduced the brightness of the collected images to 55%, 37%, and 12% of the original.

Additionally, to enrich the dataset in a way that matches the final restoration strategy, we cropped the three resulting low-brightness datasets into 256 × 256-pixel sub-images. This yielded 123,299 low-light sub-images for training and 4581 for testing. Examples of the data processing are shown in Supplementary Data Fig. S2.

Mask data

A survey of the causes of defects in ancient murals from various regions revealed common factors: stains, corrosion, peeling, mold, and cracks. To mimic real-world scenarios closely, we generated masks for the identified defect types:

- Dusk masks, generated by a random walk with random positions and shapes, simulate corrosion defects.
- Jelly masks, created with a morphological erosion operation, simulate edge defects around Dusk masks46.
- Droplet masks, created from randomly scattered points, simulate peeling defects as well as dispersed mold spots and liquid splashes.
- Block masks, generated from large circular blocks, mimic mural stains.
- Line masks, created from random straight lines, also simulate stains.

Cracks and scratches are simulated according to the real application scenario. Examples of masks that simulate different kinds of defects are shown in Supplementary Data Fig. S4. To replicate the diversity of defects in real scenarios, we generated masks covering 5% to 50% of the image area. Masks of various shapes and sizes were used for both training and testing.
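A minimal random-walk mask generator in the spirit of the Dusk masks, with a target coverage in the 5% to 50% range, might look like this. The step rule (4-connected moves with a square brush), the brush size, and the name `random_walk_mask` are our assumptions, not the authors' generator. The erosion step used for Jelly masks is not shown.

```python
import numpy as np

def random_walk_mask(size: int = 256, coverage: float = 0.2, brush: int = 5,
                     seed: int = 0) -> np.ndarray:
    """Binary mask (1 = simulated defect) grown by a random walk until `coverage` is reached."""
    if not 0.0 < coverage < 1.0:
        raise ValueError("coverage must be in (0, 1)")
    rng = np.random.default_rng(seed)
    mask = np.zeros((size, size), dtype=np.uint8)
    r, c = rng.integers(0, size, 2)               # random starting position
    steps = np.array([(-1, 0), (1, 0), (0, -1), (0, 1)])
    half = brush // 2
    while mask.mean() < coverage:
        # Stamp a square brush centered on the current position (clipped at the border).
        mask[max(r - half, 0):r + half + 1, max(c - half, 0):c + half + 1] = 1
        dr, dc = steps[rng.integers(4)]
        r = int(np.clip(r + dr * brush, 0, size - 1))   # move one brush width
        c = int(np.clip(c + dc * brush, 0, size - 1))
    return mask
```

Because the walk stops as soon as the target is met, the final coverage exceeds `coverage` by at most one brush stamp.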

Experimental environment

The experiments were run on an NVIDIA TITAN RTX GPU. We train our network with the Adam optimizer and a batch size of 6. The learning rate is initialized to 0.0001, kept constant for the first 100 epochs, and then linearly decayed to zero over the next 100 epochs.
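A minimal sketch of this schedule, assuming the two training periods of 100 are epochs and that the decay reaches zero at the final epoch; `learning_rate` is a hypothetical helper, not part of the MER code.

```python
def learning_rate(epoch, base_lr=1e-4, constant_epochs=100, decay_epochs=100):
    """Constant LR for `constant_epochs`, then linear decay to zero (0-indexed epochs)."""
    if epoch < constant_epochs:
        return base_lr
    # Fraction of the decay phase completed after this epoch.
    progress = (epoch - constant_epochs + 1) / decay_epochs
    return base_lr * max(0.0, 1.0 - progress)
```

In PyTorch, the same curve could be attached to Adam through `torch.optim.lr_scheduler.LambdaLR`, passing `lambda e: learning_rate(e) / 1e-4` as the multiplicative factor.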

Metrics

In our experiments, we use three widely used metrics to evaluate the restoration model by comparing the original (ground-truth) image with the output the model produces from the low-luminance input: Peak Signal-to-Noise Ratio (PSNR), which measures pixel-level distortion; Structural Similarity (SSIM), which combines comparisons of luminance, contrast, and structure into a single similarity score; and Learned Perceptual Image Patch Similarity (LPIPS), a deep-learning-based metric that approximates perceptual similarity as judged by humans.
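The two closed-form metrics can be sketched in NumPy as follows. `psnr` follows the standard definition PSNR = 10 log10(MAX² / MSE) for 8-bit images; `global_ssim` is a simplified single-window variant of SSIM (the standard metric averages the same formula over local Gaussian windows), and LPIPS is omitted because it requires a pretrained deep network. For reported numbers, library implementations such as `skimage.metrics.peak_signal_noise_ratio` and `skimage.metrics.structural_similarity` from scikit-image are the safer choice.

```python
import numpy as np

def psnr(reference, restored, data_range=255.0):
    """Peak signal-to-noise ratio in dB; higher is better."""
    diff = reference.astype(np.float64) - restored.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))

def global_ssim(reference, restored, data_range=255.0):
    """SSIM computed over the whole image as a single window (simplified)."""
    x = reference.astype(np.float64)
    y = restored.astype(np.float64)
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)
```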

Comparison with state-of-the-art relevant methodologies

In our research, we found a lack of effective open-source projects that accomplish both light enhancement and defect restoration in the field of mural restoration. We therefore focus on the quality of defect restoration; the flowchart of our algorithm is shown in Supplementary Data Fig. S5. We select seven representative state-of-the-art related methods for comparison: MADF23, LG41, PEN27, RFR22, MuralNet36, AGAN37, and SCI38, of which MuralNet36 and AGAN37 are recent works designed specifically for mural restoration. We perform experiments on images at three light intensities with three different masking-area ranges and make both quantitative and qualitative comparisons.

Qualitative comparisons are shown in Fig. 3, which presents the visual outcomes of our method alongside SCI38, LG41, MADF23, PEN27, and RFR22 under three low-light conditions and three masking-area ranges. The SCI38 network only brightens low-light images without restoring defects, so SCI38 alone cannot recover the content of defective images captured in low light. Moreover, the colors of images processed with SCI38's pre-trained model deviate significantly from the ground truth, which highlights the murals' distinctive color style. Turning to the other models, PEN27 struggles with larger masked areas, leaving holes and producing markedly worse results, which indicates that it is not suited to restoring extensive defects. MADF23 outperforms PEN27 overall but falls short in accurately restoring textures in localized defect areas. Similarly, RFR22 restores poorly in mural regions with large pixel-gradient variations. LG41 achieves a better overall visual result, yet its brightness and colors diverge considerably from the ground truth. Although LG41, MADF23, PEN27, and RFR22 fill in the defective areas, their restored images at low brightness fail to reveal detailed textures and structures, which is especially evident when the ground truth's brightness is reduced to 12%. Consequently, these restoration networks cannot be applied directly to real-world ancient mural restoration. Furthermore, the line-drawing guidance of MuralNet36 cannot properly assist restoration under low-light conditions, even though it is a restoration network designed for murals. Similarly, AGAN37 produces unbalanced restoration results and does not handle low-light conditions effectively.

Comparison of our method with SCI38, LG41, MADF23, PEN27, RFR22, MuralNet36, SPN32, and AGAN37 at three low-luminance levels and with three different masking-area ranges.

In contrast, our proposed MER effectively restores defective regions, improves image brightness via the enhancement network, and ensures textures and structures are discernible to the human eye. At the first two brightness levels, our restoration results closely resemble the ground truth, whereas the outcomes from other networks appear blurred. At the lowest brightness level, the content in images restored by other networks becomes indiscernible, while MER’s restoration allows clear observation of image content.

For quantitative evaluation, we test three groups of low-light murals, one per light intensity, each containing 1527 test images, and apply random masks from three different area ranges to each group. We use three metrics, LPIPS (↓), PSNR (↑), and SSIM (↑), to measure the difference between each model's output and the ground truth. The box-and-whisker plots of these metrics in Fig. 4 show that the images restored by our method surpass those of the other related methods on all test datasets. Additional test results are shown in Supplementary Data Fig. S3. Based on these findings, we conclude that the proposed method achieves strong results in restoring defective murals under low-light conditions.

a The input luminance is 55% of the ground truth. b The input luminance is 12% of the ground truth. For LPIPS, lower is better; for PSNR and SSIM, higher is better.

Performance evaluation experiments on MER

Enhancement performance

We compare the average pixel values of the three sets of low-brightness images with those of the brightness-enhanced images produced by the brightness-enhancement stage of MER. The results in Table 1 show that MER significantly improves the brightness of the input images and yields sharper images.

Defects restoration performance

We collected a dataset of Michelangelo frescoes and tested restoration on it using the MER model pre-trained on Chinese mural images. The results in Fig. 5 show that MER restores the defects imposed on the Michelangelo frescoes under low light well, even though these images were not used in training. More test results are shown in Supplementary Data Figs. S1 and S6 and Table S3. We also test the restoration performance of our method under different types of masks. Figure 6 shows that MER effectively restores the structure and texture of murals under masks simulating various realistic defects, demonstrating the model's robustness to different defect types. This supports using MER to restore ancient murals with various defect types in real-world scenarios. Additionally, Table 2 compares MER's restoration results across different datasets and shows that the performance metrics remain stable across masking-area ranges, indicating strong robustness.

a Raw refers to the original image of an ancient mural painting. b Low light and masked refers to the low-light and defective mural image obtained by reducing the brightness of the original image to 50% and adding random masking to simulate the real archeological scene. c Restored refers to the image after being restored by the MER network.

a Restoration example with a dusk-style mask. b Restoration example with a jelly-style mask. c Restoration example with a droplet-style mask. d Restoration example with a block-style mask. e Restoration example with a line-style mask.

Ablation studies

To demonstrate the advantage of MER's two-stage strategy, we conduct ablation experiments comparing single-stage processing with two-stage processing. As Table 3 shows, using either the luminance-enhancement stage or the restoration stage alone is insufficient: both yield worse metrics than the two-stage strategy. We further analyze a 128 × 128 pixel region of an experimental image; its pixel-value distributions are shown in Fig. 7, where the distribution produced by the two-stage strategy aligns more closely with the ground truth.
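Fig. 7 compares the distributions visually. One way to put a number on "aligns more closely" is the L1 distance between normalized pixel histograms, sketched below. The central location of the 128 × 128 patch and the choice of L1 distance are assumptions for illustration; they are not the analysis used for Fig. 7.

```python
import numpy as np

def center_patch(img, size=128):
    """Crop a size x size patch from the image centre (patch location is assumed)."""
    h, w = img.shape[:2]
    y, x = (h - size) // 2, (w - size) // 2
    return img[y:y + size, x:x + size]

def intensity_distribution(patch, bins=256):
    """Normalized histogram of 8-bit pixel values, all channels pooled."""
    hist, _ = np.histogram(patch, bins=bins, range=(0, 256))
    return hist / hist.sum()

def distribution_distance(a, b):
    """L1 distance between pixel distributions: 0 means identical, 2 means disjoint."""
    return float(np.abs(intensity_distribution(a) - intensity_distribution(b)).sum())
```

Computing `distribution_distance` between the ground-truth patch and the patch from each ablation setting gives one score per setting; a lower score means the output's pixel distribution is closer to the ground truth.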

Pixel distribution of an experimental image under the different ablation settings.

Computational complexity of the MER

Computational information about the model is given in Supplementary Data Table S2. We select several representative networks and compare their processing times on low-luminance, defective mural images as an indicator of model complexity. First, we compare the processing speeds of various defect-restoration models on images with 37% brightness and masks covering 20–35% of the area; the results are presented in Fig. 8. MER's processing speed is both fast and stable across batches. According to Table 4, the model processes a single input image in under 0.03 s, enabling rapid restoration. The model's moderate computational complexity therefore allows efficient batch processing of scanned data at real archeological sites.
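A simple way to measure average per-image processing time is sketched below. `seconds_per_image` and the warm-up count are hypothetical. When the model runs on a GPU with PyTorch, `model_fn` should call `torch.cuda.synchronize()` before returning, because CUDA kernels launch asynchronously and would otherwise be under-timed.

```python
import time

def seconds_per_image(model_fn, images, warmup=2):
    """Average wall-clock seconds per image; warm-up calls are not timed."""
    if not images:
        raise ValueError("need at least one image")
    for img in images[:warmup]:
        model_fn(img)  # warm-up: lazy initialization, caching, etc.
    start = time.perf_counter()
    for img in images:
        model_fn(img)
    return (time.perf_counter() - start) / len(images)
```

The returned average can be compared directly with the per-image figure in Table 4.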

The figure shows the processing time for two randomized data batches, where the experimental batch in (a) is 1560 mural images and the experimental batch in (b) is 400 mural images.

Real application scenarios

In real ancient mural restoration scenarios, the acquired mural images are often large, low-light, and defective. Therefore, when applying MER to real ancient mural restoration, large images should first be split into 256 × 256 pixel subimages for input into the restoration network. It is also necessary to identify the defects in each subimage. We assume that defects share similar characteristics: their colors and shapes differ from the original image content, producing significant gradient variation at defect boundaries. In this experiment, a gradient operation is applied to the mural, and the mean and standard deviation of the gradient are used to identify outliers across the image, which are treated as defect boundaries. We then compute the pixel values at the defect boundaries and use their mean and standard deviation to determine the range of pixel values for the defective area, assuming that the defect exhibits similar outlier pixel values. This process yields a defect marker map for each subimage. After each subimage and its defect marker map are fed into the network for restoration, all subimages are reassembled to produce the restored ancient mural image. Alternatively, this detection step can be replaced by manual labeling to achieve similar restoration results.
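The pipeline described above (tiling, gradient-based defect detection, per-tile restoration, reassembly) can be sketched with NumPy. This is a hypothetical sketch: the thresholds `k_grad`, `k_out`, and `k_range`, the edge padding of partial tiles, and the step that keeps only boundary pixels whose intensity is itself an image-wide outlier (so that intact pixels on the other side of an edge do not widen the defect's value range) are our assumptions. `restore_fn` stands in for the MER network.

```python
import numpy as np

TILE = 256  # subimage size fed to the network

def split_tiles(img, tile=TILE):
    """Edge-pad to a multiple of `tile`, then cut row-major tile x tile subimages."""
    h, w = img.shape[:2]
    pad = ((0, -h % tile), (0, -w % tile)) + ((0, 0),) * (img.ndim - 2)
    padded = np.pad(img, pad, mode="edge")
    H, W = padded.shape[:2]
    tiles = [padded[y:y + tile, x:x + tile]
             for y in range(0, H, tile) for x in range(0, W, tile)]
    return tiles, (h, w), (H, W)

def merge_tiles(tiles, orig_hw, padded_hw, tile=TILE):
    """Inverse of split_tiles: place tiles back and crop away the padding."""
    H, W = padded_hw
    out = np.empty((H, W) + tiles[0].shape[2:], dtype=tiles[0].dtype)
    it = iter(tiles)
    for y in range(0, H, tile):
        for x in range(0, W, tile):
            out[y:y + tile, x:x + tile] = next(it)
    h, w = orig_hw
    return out[:h, :w]

def defect_marker_map(gray, k_grad=3.0, k_out=2.0, k_range=2.0):
    """Return a uint8 map with 1 where a pixel is judged defective."""
    img = gray.astype(np.float64)
    gy, gx = np.gradient(img)
    grad = np.hypot(gx, gy)
    # Gradient outliers are taken as defect boundaries.
    boundary = grad > grad.mean() + k_grad * grad.std()
    # Keep boundary pixels on the defect side: intensity outliers for the image.
    outlier = np.abs(img - img.mean()) > k_out * img.std()
    seeds = img[boundary & outlier]
    if seeds.size == 0:
        return np.zeros(img.shape, dtype=np.uint8)
    # Pixels within mean +/- k_range * std of the seeds are marked as defective.
    lo = seeds.mean() - k_range * seeds.std()
    hi = seeds.mean() + k_range * seeds.std()
    return ((img >= lo) & (img <= hi)).astype(np.uint8)

def restore_mural(image, restore_fn):
    """Tile, detect defects per tile, restore with restore_fn(tile, mask), reassemble."""
    tiles, hw, padded_hw = split_tiles(image)
    restored = []
    for t in tiles:
        gray = t.mean(axis=2) if t.ndim == 3 else t
        restored.append(restore_fn(t, defect_marker_map(gray)))
    return merge_tiles(restored, hw, padded_hw)
```

Replacing `defect_marker_map` with a manually labeled mask leaves the tiling and reassembly unchanged, which mirrors the manual-labeling alternative mentioned above.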

To make MER accessible, we further developed a web server that runs MER on the backend using the Flask framework and made it freely available. The server uses pre-trained MER models and allows users to upload mural images for experimentation. We provide pages for low-light and defect simulation, so users can upload images of intact murals for simulation experiments. If a user's input image is already low-light and defective, it can be restored after its defects are labeled. The interface of our web server is shown in Fig. 9.

Users can adjust the brightness of the mural to be restored on the Brightness Adjustment Page and can draw or add random defects on the Defect Generation Page. We also provide an option to find defective regions automatically and form a mask using the gradient method. After these preparatory steps, users obtain MER's restored result on the Image Processing Page, where the outputs of the different stages are presented in order.

Conclusions

In the conservation of ancient murals, the murals are often large and irregular and suffer from unpredictable defects. Scholars often use cameras to capture the valuable content of ancient murals for preservation. However, these images are often low-light and defective, making it difficult to observe the murals' coherent texture and structure directly. To address this issue, we introduce MER, a two-stage restoration network with the ability to automatically detect defective areas in murals. The first stage enhances the brightness of low-light mural images for improved visibility. The second stage employs a coarse-to-fine strategy to restore defective areas, producing images with complete texture and structure. Moreover, to overcome the large size of ancient murals and the small size of available datasets, we apply artificial low-light processing and a strategy that crops images into subimages. To improve the applicability of MER, we use various masks that simulate realistic application scenarios. Extensive experimental results demonstrate the effectiveness of the proposed method.

However, our work is dedicated to proposing a restoration method for low-light mural images with defects, and only a simple gradient-based calculation is used in the application to obtain a mask describing the defective regions. If better restoration of defective areas is needed, manual labeling is essential for accurately covering the defective regions.

Responses