Protocol for evaluation of iTEST, a novel blended intervention to enhance introspective accuracy in psychotic disorders

Introduction

Psychotic disorders (i.e., schizophrenia and schizoaffective disorder) affect approximately 1.5% of the population and cause the most substantial disability of any psychiatric disorder [1,2,3]. A growing body of literature spanning cognitive science, educational research, and, more recently, mental health links inaccurate judgments of one's own performance, or poor Introspective Accuracy (IA), with diminished real-world functioning in psychotic disorders [4,5,6,7,8]. IA is indexed by the discrepancy between objective performance and the subjective estimate of that performance, with larger discrepancies indicating poorer IA [5, 7]. IA impairments span psychiatric illnesses, yet aberrant IA appears to be more common and pronounced in psychotic disorders [6, 9] and most frequently manifests as overconfidence in one's abilities [10]. However, no existing interventions directly target IA. Here, we present a protocol for the initial phase of a new blended digital health intervention, Improving Thinking through Everyday Self-Assessment Training (iTEST), which aims to improve IA among people with psychotic disorders.

Treatments targeting IA are limited. Existing interventions target decision-making biases associated with psychotic symptoms and treat other outcomes as secondary [11, 12]. IA, as defined in this protocol, is distinct from other domains of self-awareness such as clinical insight, which concerns beliefs about the illness and its treatment, and metacognition, whose interventions emphasize reducing cognitive errors such as jumping to conclusions. Unlike these domains, IA concerns the personalized evaluation of one’s own skills and abilities and is measured objectively as the discrepancy between subjective estimates and actual performance. iTEST is the first intervention to specifically target IA in people with psychotic disorders. Prior research suggests that IA is malleable and that IA skills can transfer across domains [13, 14]. For example, in an experiment in healthy adults, training that involved repeated judgments of correctness improved IA on the trained task, and the gains transferred to a novel task [15]. Education research among healthy adults indicates that interventions that improve task-based judgments of performance also improve learning [16, 17]. Other studies have found that improvements in task-based judgments extend to functional pursuits, such as employment searches [18, 19].

The iTEST intervention is an attempt to translate basic science findings into clinical use. iTEST is a blended intervention that couples automated, remotely delivered mobile training in IA with personalized coaching in applying improved IA to everyday behaviors. The task-based components of iTEST build on previously validated mobile cognitive tasks of verbal learning and facial emotion recognition: the Mobile Variable Difficulty List Memory Test (VLMT) [20] and the Mobile Electronic Test of Emotional Recognition (METER) [21]. These tasks were selected because studies have shown that average performance on them remains stable over time, that they correlate with in-lab performance on tests of the same constructs in people with psychotic disorders, and that they are associated with functional outcomes [22]. The verbal memory and facial emotion recognition domains were chosen because they represent core cognitive functions that are frequently impaired in psychotic disorders and have direct implications for daily functioning. Memory deficits substantially affect occupational and social outcomes [23], while emotion recognition is fundamental to social cognition and interpersonal relationships [24]. Additionally, performance on these tasks has been linked to motivation, a key factor in functional recovery [25]. Finally, we have validated both tasks in mobile formats against in-lab measures in the study population, which provides a basis from which to develop iTEST [26, 27]. After each task, participants judge their performance, and this judgment is compared with their actual performance; task difficulty increases as IA skills improve. Coaching in iTEST focuses on supporting the translation of improved IA to functional goals that are meaningful to participants. Coaching is also intended to help participants use compensatory strategies to achieve their goals and apply balanced thinking when approaching goal steps.

The evaluation of iTEST follows the NIMH’s Experimental Therapeutics paradigm. Supported by the NIMH’s two-phase R61/R33 grant mechanism, the first phase of iTEST (R61) is an open trial to evaluate the intervention’s feasibility, acceptability, and impact on IA. Here, impact is defined as IA improvement on both trained and untrained tasks, the latter serving as an indicator of generalization. Because iTEST is novel, a secondary aim of the R61 phase is to establish an optimal “dose” of the intervention by evaluating impact at 8, 12, and 16 weeks post-baseline and selecting the shortest duration at which improvements are seen.

Materials and Methods

Setting and regulatory oversight

Participants are recruited from two study sites: the University of California San Diego (UCSD) and The University of Texas at Dallas (UTD). Recruitment settings include medical centers, public mental health clinics, local community clinics, and non-profit organizations. Recruitment methods include flyers, word of mouth, clinician referrals, and online advertisements. The UCSD Institutional Review Board (IRB) approved the clinical trial, and the two-site study uses a SMART IRB agreement [28] under which UTD relies on UCSD’s IRB. Before providing written informed consent, each participant completes the University of California San Diego Brief Assessment of Capacity to Consent to confirm decisional capacity [29]. The trial is registered at ClinicalTrials.gov (NCT05899348).

Participant eligibility

Inclusion criteria are: (1) voluntary informed consent to participate and capacity to consent; (2) age 18 to 65 years; (3) DSM-5 diagnosis of schizophrenia or schizoaffective disorder based on the Structured Clinical Interview for DSM-5 Disorders (SCID-5; First et al., 2015) and available medical record review; (4) ≥6th grade reading level on the Wide Range Achievement Test-4 (WRAT-4) Reading subtest [30]; (5) no hospitalizations or medication class changes in the two months prior to enrollment, determined by best-estimate history with information from medical records; and (6) availability of an informant, either a clinician with at least monthly contact (staff member, case manager, or other mental health clinician) or a close associate (family member or friend) with at least biweekly contact.

Exclusion criteria are: (1) greater than moderate disorganization on the PANSS (P2-Disorganization item >5), given demonstrated difficulties with adherence to and engagement in blended interventions [31]; (2) DSM-5 moderate or severe substance use disorder in the past three months based on the SCID-5; (3) a required level of care that interferes with outpatient therapy (e.g., hospitalization or severe medical illness); (4) inability to interact with a smartphone (e.g., due to visual impairment); and (5) lack of functional impairment, operationalized as full-time employment and/or financial responsibility for one's household.

Rationale for inclusion/exclusion criteria: We considered a broader transdiagnostic focus, but our preliminary data from direct comparisons strongly suggest that people with psychotic disorders have significantly more profound deficits in IA [32], notably in responsiveness to feedback [33], than people with bipolar disorder. We also considered screening for impairment in IA; however, IA screening is not currently clinically translatable.

Timeline

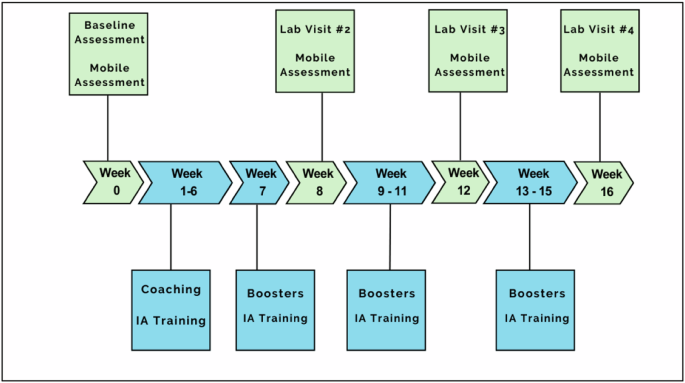

Figure 1 depicts the timeline for a participant in the study. After eligibility screening, participants complete a baseline assessment (see Assessments and measures below). Following the baseline visit, participants meet their coach and receive a tutorial on iTEST mobile procedures. They then complete a 6-day mobile baseline assessment of IA before beginning the 16-week training program outlined below. During weeks 1–6, participants meet weekly with their coach for 60 minutes and complete mobile training, which includes IA feedback, 6 days per week. Subsequent training weeks (8–16) also include mobile training with feedback 6 days per week, along with biweekly 15-minute phone check-ins with the coach. Three follow-up in-lab assessment visits (at 8, 12, and 16 weeks), each accompanied by a mobile assessment as at baseline, are interspersed throughout the program.

Participation Timeline.

Intervention overview

iTEST was designed to contain the 4 elements linked with increased efficacy in cognitive remediation: trained coaching, repeated cognitive tasks, coaching in cognitive strategies, and a focus on translating skills to everyday functional tasks [34]. For the cognitive tasks, participants complete word memorization (VLMT) and facial expression recognition (METER) tasks on their smartphones 6 days a week, with each session lasting 10–15 minutes. At the end of each task, participants are asked how many items they believe they answered correctly, and this estimate is compared with their actual score to index IA. Our web-based, smartphone-compatible software is built around our previously validated versions of NeuroUX’s VLMT and METER tasks [21, 35]. Participants have a 6-hour window to initiate their daily training and receive 2 reminders during that window. If they miss 2 consecutive days, study staff contact them for problem-solving and motivational support.
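The daily window and the missed-day rule can be expressed as a small check. The sketch below is a hypothetical illustration, not the NeuroUX implementation: it assumes each scheduled training day is stored as a pair of the time the 6-hour window opened and the time (if any) the participant started the session, and it flags a participant for staff contact once the two most recent scheduled days were both missed. The names `started_in_window` and `needs_staff_contact` are invented for this example.

```python
from datetime import datetime, timedelta
from typing import Optional

WINDOW = timedelta(hours=6)  # participants have 6 hours to start daily training


def started_in_window(window_open: datetime, started: Optional[datetime]) -> bool:
    """True if the session was initiated within the daily 6-hour window."""
    return started is not None and window_open <= started < window_open + WINDOW


def needs_staff_contact(days: list[tuple[datetime, Optional[datetime]]],
                        max_missed: int = 2) -> bool:
    """Flag for outreach when the last `max_missed` scheduled days were all missed.

    `days` is a chronological list of (window_open, started_at) pairs, one per
    scheduled training day (hypothetical data layout; rest days are omitted).
    """
    if len(days) < max_missed:
        return False
    recent = days[-max_missed:]
    return not any(started_in_window(w, s) for w, s in recent)
```

Counting only scheduled days means a participant's chosen rest day does not count toward the two-day threshold, which is one reasonable reading of the rule.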

The training task stimuli are drawn from a large normed corpus so that no stimulus is repeated over the trial. Training is designed to support incremental improvement in IA and, following best practices in cognitive remediation programs, provides more information and support in the initial phases [36]. Training consists of 3 levels of difficulty, each with 5 sub-levels (1-1, 1-2, and so on through 3-5), that progressively require more competency from participants. Both the VLMT and METER tasks are used at all levels, and difficulty is manipulated by increasing the number of stimuli. At each level, training includes 3 scaffolding steps. The first is task awareness and orientation, in which participants learn to correctly identify the number of stimuli (words or faces) presented. The second is trial-by-trial feedback: participants receive feedback on the correctness of each response to inform their overall estimate of the number correct. The third directly addresses IA by asking participants to estimate how many questions they answered correctly. These global performance estimates are compared with actual performance to yield an IA value.

As participants progress through the sub-levels and demonstrate increased competency, these scaffolding steps are systematically removed. Sub-level 1 includes questions and feedback about the number of stimuli, item-by-item correctness feedback, and feedback on IA (e.g., “You said that you got 4 answers correct, but you got 5 answers correct”). Sub-level 2 drops the question about the number of stimuli and provides only item-by-item feedback and feedback on IA. In sub-level 3, participants evaluate their item-by-item correctness themselves instead of having this information provided automatically. Sub-level 4 asks participants to evaluate their item-by-item correctness but provides no feedback on these individual judgments. In sub-level 5, participants make only an overall judgment of their performance at the end of the task.
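The fading of scaffolding across sub-levels can be summarized as a lookup table. This is a minimal sketch of one reading of the description above, not the iTEST configuration: the field names are hypothetical, and feedback on item-level judgments at sub-level 3 is inferred from the statement that sub-level 4 is the first to withhold it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scaffolding:
    asks_stimulus_count: bool     # "How many words/faces were shown?" plus feedback
    shows_item_feedback: bool     # app reports whether each response was correct
    asks_item_judgments: bool     # participant judges each response's correctness
    item_judgment_feedback: bool  # feedback on those item-level judgments
    asks_global_estimate: bool    # "How many did you get correct?" (the IA judgment)


# Sub-level -> scaffolding. Field order: count question, item feedback,
# item judgments, feedback on item judgments, global estimate.
SCAFFOLDING = {
    1: Scaffolding(True, True, False, False, True),
    2: Scaffolding(False, True, False, False, True),
    3: Scaffolding(False, False, True, True, True),
    4: Scaffolding(False, False, True, False, True),
    5: Scaffolding(False, False, False, False, True),
}


def ia_feedback_message(estimate: int, actual: int) -> str:
    """Global IA feedback phrased like the example given in the text."""
    joiner = "and" if estimate == actual else "but"
    return (f"You said that you got {estimate} answers correct, "
            f"{joiner} you got {actual} answers correct.")
```

Keeping the scaffolding in data rather than branching logic makes it easy to check, sub-level by sub-level, that each step removes exactly one support.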

Advancing from each sub-level requires participants to correctly identify the number of stimuli on 3 consecutive days. Advancing to the next difficulty level requires 3 consecutive days of IA estimates within ±1 of actual performance (i.e., an absolute error of 0 or 1), indicating accurate IA. Participants complete all sub-levels at each level of difficulty.
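The advancement rules can be sketched as a small state machine. This is an illustration under stated assumptions, not the iTEST code: `record_day` and `Progress` are hypothetical names, and the caller is assumed to decide whether a given day "qualified" (the sub-level criterion within a level, and the ±1 IA criterion from `ia_accurate` at sub-level 5 before moving up a level). A missed or failed day resets the streak.

```python
from dataclasses import dataclass

DAYS_REQUIRED = 3   # consecutive qualifying days needed to advance
N_SUBLEVELS = 5
N_LEVELS = 3


@dataclass
class Progress:
    level: int = 1     # difficulty level, 1-3
    sublevel: int = 1  # sub-level within the level, 1-5
    streak: int = 0    # consecutive qualifying days at the current step


def ia_accurate(estimate: int, actual: int, tolerance: int = 1) -> bool:
    """IA criterion from the text: estimate within +/-1 of the actual score."""
    return abs(estimate - actual) <= tolerance


def record_day(p: Progress, qualified: bool) -> Progress:
    """Update progress in place after one training day and return it.

    `qualified` is the day's pass/fail on the criterion for the current step
    (an assumption about how the two rules combine). Progress stops at 3-5.
    """
    p.streak = p.streak + 1 if qualified else 0
    if p.streak >= DAYS_REQUIRED:
        p.streak = 0
        if p.sublevel < N_SUBLEVELS:
            p.sublevel += 1
        elif p.level < N_LEVELS:
            p.level, p.sublevel = p.level + 1, 1
    return p
```

Under this reading, a participant who qualifies every day needs 15 training days per difficulty level and reaches sub-level 3-5 after 42 days.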

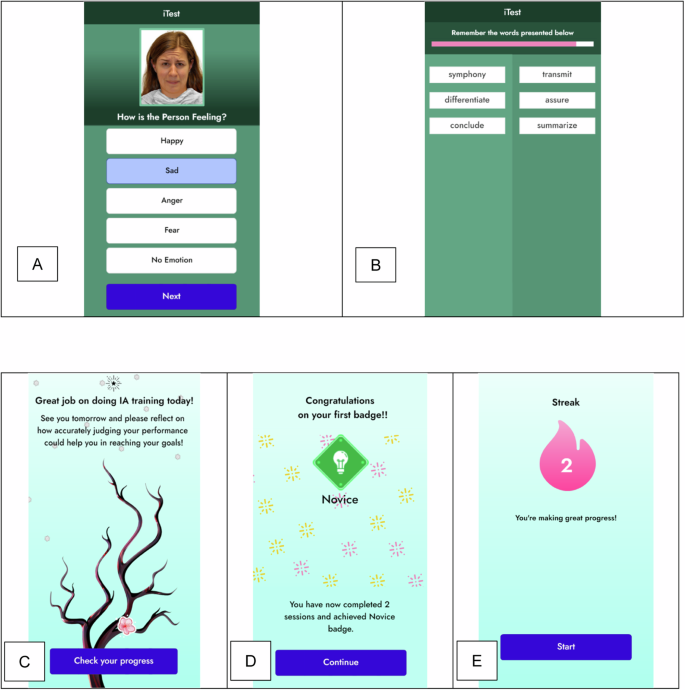

Design elements to sustain engagement: Engagement and motivation are encouraged through rewards, feedback, and gamification elements within iTEST [37]. Encouragement to continue training is provided directly in the app: the home screen shows a cherry blossom tree that grows flowers after each successfully completed training session (see Fig. 2). Streaks motivate completion of the daily mobile tasks, and participants can monitor their progress on the app’s dashboard. Coaches can also view a progress dashboard, which they review with participants during coaching sessions. Each of these design elements, particularly coach involvement, has been linked to better adherence in meta-analyses of mobile interventions [38].

A Stimuli for the METER Social Cognition Task; B Stimuli for the VLMT Verbal Learning Task; C Tree Symbolizing Progress; D Badges Earned for Engagement; E Streaks Accumulated through Serial Engagement.
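The progress indicators described above (flowers, streaks, and a completion summary for the dashboard) reduce to simple counts over daily completion flags. The function below is a hypothetical sketch; the actual app's metrics and their definitions may differ.

```python
from itertools import groupby


def dashboard_summary(completions: list[bool]) -> dict:
    """Summarize daily completion flags (True = session completed), oldest first."""
    # Lengths of every run of consecutive completed sessions.
    run_lengths = [len(list(g)) for done, g in groupby(completions) if done]
    # Current streak: completed sessions counted back from the most recent day.
    current = 0
    for done in reversed(completions):
        if not done:
            break
        current += 1
    return {
        "blossoms": sum(completions),  # one flower per completed session
        "current_streak": current,
        "longest_streak": max(run_lengths, default=0),
        "completion_rate": sum(completions) / len(completions) if completions else 0.0,
    }
```

For example, six scheduled days with one miss on day 4 yield 5 blossoms, a current streak of 2, and a longest streak of 3.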

Coaching

iTEST mobile training is combined with weekly 60-minute coaching sessions in weeks 1–6. In weeks 8–16, the same coach provides biweekly 15-minute phone check-ins. The 60-minute sessions are delivered remotely or in person, according to the participant’s preference. Sessions are manualized (see Coach training and fidelity below), consistent with our prior blended health interventions [39,40,41,42]. The introductory session introduces the rationale for iTEST and includes psychoeducation on IA, a review of the participant’s baseline iTEST scores, a discussion of compensatory strategies in goal-setting, and a personalized goal identification activity [42, 43]. Thereafter, each iTEST coaching session involves: (1) review of and feedback on iTEST adherence and IA scores; (2) psychoeducation on the impact of inaccurate IA on everyday activities; and (3) collaborative training in, selection of, and application of IA-related compensatory strategies to weekly goals. The iTEST coaching materials are adapted from Cognitive Behavioral Social Skills Training (CBSST) [44] and Action-Based Cognitive Remediation [43]. The topic of each coaching session is outlined in Table 1.

Coach training and fidelity: Coaches are bachelor’s- or master’s-level staff with prior experience in clinical interviewing and/or intervention delivery with people with serious mental illnesses. Before the start of the study, coaches completed four two-hour training workshops led by the PIs and an advanced doctoral student clinician, as well as training in safety planning. Coaches then conducted mock sessions that were video-recorded for review. After training, coaches meet weekly for ongoing group supervision. Coaching sessions are audio-recorded for supervision and fidelity ratings, which use an adapted version of the Cognitive Therapy Rating Scale for Psychosis (CTS-Psy) [45].

Dosing framework

Because IA is a novel target, the most efficient dose is unknown. Our dosing framework for iTEST is based on three parameters: (a) duration in weeks, (b) number of trials, and (c) frequency of feedback. We set the starting duration of iTEST at 8 weeks, with training 6 times per week (once per day, ~15 min), each session consisting of 3 rounds of trials and feedback for both the memory and emotion recognition tasks. For comparison, an in-lab IA intervention in healthy adults conducted over 8 weeks included 2160 total training trials and 80 instances of IA feedback (~6 hours in aggregate) [15]. iTEST at 8 weeks equates to 4320 training trials and 188 rounds of feedback (8–10 hours), roughly double the dose of that in-lab intervention [15]. We then extended the program to 16 weeks, with additional evaluations at 12 and 16 weeks. The maximum dose of training at 16 weeks is 75% of the mean training duration reported in a 2020 meta-analysis of computerized cognitive training [46] and ~50% of the average duration of cognitive remediation programs [47].
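The trial-count comparison above can be checked with a few lines of arithmetic. The figures of 4320 and 2160 trials and 6 sessions per week come from the text; the per-session rate is derived from them, and the 12- and 16-week totals are projections that assume the 8-week per-session rate stays constant (an assumption, not a figure reported by the protocol).

```python
WEEKS = 8
SESSIONS_PER_WEEK = 6           # training 6 days per week, once per day
ITEST_TRIALS_8_WEEKS = 4320     # from the text
PRIOR_TRIALS_8_WEEKS = 2160     # in-lab IA intervention in healthy adults [15]

sessions_8_weeks = WEEKS * SESSIONS_PER_WEEK                  # 48 sessions
trials_per_session = ITEST_TRIALS_8_WEEKS / sessions_8_weeks  # 90.0 trials
dose_ratio = ITEST_TRIALS_8_WEEKS / PRIOR_TRIALS_8_WEEKS      # 2.0, i.e., double


def projected_trials(weeks: int) -> float:
    """Total trials at a given duration, assuming the 8-week per-session rate holds."""
    return weeks * SESSIONS_PER_WEEK * trials_per_session


for w in (8, 12, 16):
    print(w, projected_trials(w))
```

Running this prints 4320.0, 6480.0, and 8640.0 trials for 8, 12, and 16 weeks, respectively.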

Assessments and measures

Table 2 details the study assessments, which include evaluations of current psychiatric symptoms (psychotic, mood, and suicidality), IA, and other factors that may influence treatment engagement, such as motivation, sleep, and treatment expectancies. At the baseline visit, demographic and social contextual information (e.g., living situation) is collected and diagnostic evaluations are completed. Medication information, along with sedation/sleepiness side effects assessed with the Karolinska Sleepiness Scale [48], is collected at all assessment points. Two technology-based assessments, the Brief Assessment of Cognition in Schizophrenia-App Version (BACS) [49] and the Virtual Reality Functional Capacity Assessment Tool (VRFCAT) [50], are administered at baseline to assess global cognition and functional capacity, respectively [51, 52]. All participants continue to receive their current mental health treatment from their previously established clinicians.

Measures for targets: In addition to feasibility and acceptability, the go/no-go criteria for advancing to a randomized clinical trial are improvements in IA on both trained and untrained tasks. For trained tasks, participants complete the METER and VLMT on their smartphones during assessment weeks. Unlike the mobile training detailed above, these assessment-week administrations provide no feedback on IA or task performance. The primary outcome is the absolute discrepancy between actual performance (number correct) and the participant’s estimate of it on each trial, averaged across trials nested within sessions and weeks. We included IA on the trained tasks as an outcome because a lack of improvement on these tasks would raise concerns about iTEST’s ability to modify IA and would suggest a need to revise the program.
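As a descriptive illustration of the primary outcome, the absolute discrepancy can be computed per trial and then averaged up the trial, session, and week hierarchy. This is a hedged sketch with a hypothetical record layout `(week, session, estimate, actual)`; the formal analysis uses the multilevel models described under Statistical approach, not these simple means.

```python
from collections import defaultdict
from statistics import mean


def weekly_ia_error(trials):
    """Mean absolute IA discrepancy per week.

    `trials` holds (week, session, estimate, actual) tuples, one per mobile
    trial. Discrepancies are averaged within sessions first, then across
    sessions within each week, mirroring the trial-in-session-in-week nesting.
    """
    by_session = defaultdict(list)
    for week, session, estimate, actual in trials:
        by_session[(week, session)].append(abs(actual - estimate))
    by_week = defaultdict(list)
    for (week, _session), errors in by_session.items():
        by_week[week].append(mean(errors))
    return {week: mean(values) for week, values in sorted(by_week.items())}
```

Lower values indicate more accurate IA, so improvement would appear as a decline in the weekly means across assessment weeks.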

An additional outcome is transfer of training to an untrained task-based measure that is highly sensitive to IA: a modified version of the Metacognitive Wisconsin Card Sorting Test (Metacognitive WCST) [53]. IA on this task is associated with functioning [4], and because the task taps cognitive domains different from those of the training tasks, improvement on it would support the conclusion that iTEST produces generalized improvement in IA. The Metacognitive WCST was developed by Koren (2006) and modified by our group [9, 53]. Its primary outcome measure is the discrepancy between momentary accuracy judgments and the correctness of individual sorts [9].
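A per-sort discrepancy of this kind can be sketched as follows, assuming (as a simplification) that each momentary accuracy judgment is binary ("I think this sort was correct" or not). The modified task may use a different response format, and the function name is hypothetical. Splitting mismatches into over- and underconfidence reflects the overconfidence pattern noted in the Introduction.

```python
def sort_judgment_discrepancy(judged_correct: list[bool],
                              actually_correct: list[bool]) -> dict:
    """Compare per-sort accuracy judgments with actual sort correctness."""
    if len(judged_correct) != len(actually_correct) or not judged_correct:
        raise ValueError("need exactly one judgment per sort")
    pairs = list(zip(judged_correct, actually_correct))
    over = sum(j and not a for j, a in pairs)   # judged correct, was wrong
    under = sum(a and not j for j, a in pairs)  # judged wrong, was correct
    return {"mismatch_rate": (over + under) / len(pairs),
            "overconfident": over,
            "underconfident": under}
```

For instance, judging four sorts as correct, correct, incorrect, correct when only the first was correct gives a mismatch rate of 0.5, all of it overconfident.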

Secondary measures

Functional outcome: The Specific Level of Function (SLOF) scale is a 43-item interview measure of functioning [54]. It will be administered to both participants and their informants as an indicator of the real-world impact of iTEST (e.g., improvements in ratings) and of far transfer (e.g., increased agreement between participants and informants). The scale assesses current functioning, integrated into a single higher-order factor spanning social, everyday, and vocational activities [55]. The SLOF emerged as the most reliable and externally valid scale-based measure of functioning in the team’s prior validation study [56], and informant SLOF ratings were sensitive to short-term functional gains in a study conducted by our research group [57].
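One simple way to quantify participant–informant agreement on the SLOF is the mean absolute item-level difference between the two sets of ratings. This is a hypothetical operationalization for illustration; the protocol does not specify the agreement index, and intraclass correlations or difference scores on the higher-order factor would be alternatives.

```python
from statistics import mean


def slof_agreement(participant: list[int], informant: list[int]) -> float:
    """Mean absolute item-level difference between self and informant SLOF ratings.

    Lower values mean closer agreement; a decrease from baseline would be one
    way to express the increased agreement described in the text.
    """
    if len(participant) != len(informant) or not participant:
        raise ValueError("ratings must cover the same, non-empty set of items")
    return mean(abs(p - i) for p, i in zip(participant, informant))
```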

Exploratory functional outcome: As an objective digital biomarker of mobility pertinent to functioning, we measure GPS-based life space with NeuroUX’s NeuroLogger app (https://www.getneuroux.com/neurologger). Participants can opt out of this exploratory measure and still take part in the trial. As in our prior reports, in which mobility was associated with community functioning [20, 58], the outcomes are median daily distance traveled from home and percentage of location samples taken at home.
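The two mobility outcomes can be derived from raw location samples with great-circle distances. The sketch below makes two labeled assumptions that may differ from the NeuroLogger pipeline and our prior reports: "daily distance from home" is taken as each day's maximum distance from home, and a sample counts as "at home" when it falls within a hypothetical 100 m radius.

```python
import math
from collections import defaultdict
from statistics import median

EARTH_RADIUS_KM = 6371.0088   # mean Earth radius
HOME_RADIUS_KM = 0.1          # hypothetical "at home" threshold (100 m)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def mobility_summary(samples, home):
    """samples: iterable of (day, lat, lon); home: (lat, lon).

    Returns (median across days of the daily maximum distance from home in km,
    percent of samples within HOME_RADIUS_KM of home).
    """
    daily_max = defaultdict(float)
    n = at_home = 0
    for day, lat, lon in samples:
        d = haversine_km(home[0], home[1], lat, lon)
        daily_max[day] = max(daily_max[day], d)
        n += 1
        at_home += d <= HOME_RADIUS_KM
    if n == 0:
        raise ValueError("no GPS samples")
    return median(daily_max.values()), 100 * at_home / n
```

Using the median across days, rather than the mean, limits the influence of occasional long trips on the summary.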

Exploratory mechanistic targets linking change in IA to outcome: We will explore whether iTEST increases the use of compensatory strategies, measured with the Compensatory Cognitive Strategies Scale [59], and motivation, measured with the Motivation and Pleasure (MAP) subscale of the Clinical Assessment Interview for Negative Symptoms (CAINS) [60]. We will also explore defeatist performance beliefs, measured with the Defeatist Performance Attitude Scale (DPAS) [61]; these beliefs predict effort [62] and may improve with more accurate self-awareness of abilities.

Intervention satisfaction: Treatment expectations are measured at baseline with the Credibility/Expectancy Questionnaire (CEQ) [63]. Satisfaction is measured with an adapted version of the Client Satisfaction Questionnaire (CSQ) [64] keyed to both the coaching sessions and the mobile intervention components.

Symptom, safety, and side effect assessments: At each assessment time point, depression and psychotic symptoms are assessed with the Calgary Depression Scale for Schizophrenia (CDSS) [65] and the Positive and Negative Syndrome Scale (PANSS) [31], respectively. We administer the Columbia Suicide Severity Rating Scale (C-SSRS) at each visit to assess suicide risk [66]. Because a change in IA could theoretically lead to adverse experiences in some individuals (e.g., greater awareness leading to depressed mood) [67], we also administer the Negative Effects Questionnaire (NEQ) [68]. The CDSS, PANSS, C-SSRS, and NEQ are administered at each follow-up session.

Data safety monitoring

This study is supervised by an established clinical trials Data Safety Monitoring Board (DSMB) consisting of experts in clinical trials in serious mental illnesses and in biostatistics, along with a patient advocate. The DSMB reviews the project annually.

Statistical approach

We will use three-level Multilevel Structural Equation Modeling (MLSEM) to estimate changes in Introspective Accuracy (IA) across trials (level 1) and/or weeks (level 2) within persons (level 3). Given the relatively large number of random effects, we will use Bayesian rather than maximum likelihood estimation [69]. Although some task or ability domains will not be measured at every trial or every week, specifying trial as level 1 (e.g., for IA on the mobile tasks) and week as level 2 (e.g., for the Metacognitive WCST) will still allow us to estimate changes in IA for these domains. Our models accommodate missing data, and we will evaluate them under different missingness assumptions: missing completely at random (MCAR), missing at random (MAR), and missing not at random (MNAR). We will investigate ceiling effects based on skewness and kurtosis and transform variables as needed.
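The distributional screening step can be sketched with moment-based skewness and excess kurtosis. The cut-offs below (|skewness| > 2, excess kurtosis > 7) are a commonly cited rule of thumb adopted here as an assumption; the protocol does not state which thresholds will trigger a transformation.

```python
from statistics import fmean


def moments(values):
    """Moment-based skewness (g1) and excess kurtosis (g2)."""
    m = fmean(values)
    n = len(values)
    m2 = sum((x - m) ** 2 for x in values) / n
    m3 = sum((x - m) ** 3 for x in values) / n
    m4 = sum((x - m) ** 4 for x in values) / n
    if m2 == 0:
        raise ValueError("constant data: skewness and kurtosis undefined")
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


def needs_transform(values, skew_cut=2.0, kurt_cut=7.0):
    """Flag a variable whose distribution departs strongly from normality.

    The cut-offs are an assumed rule of thumb, not the protocol's criterion.
    """
    g1, g2 = moments(values)
    return abs(g1) > skew_cut or g2 > kurt_cut
```

Because absolute IA discrepancies cannot fall below zero, participants who become highly accurate pile up at 0, which is the kind of bounded, skewed distribution this check is meant to catch.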

Sample size and power analyses: We used the Monte Carlo simulation capabilities of Mplus version 8.452 to perform our power analyses. To determine power to detect changes in IA on the list-learning or emotion recognition tasks equivalent to d = |0.50|, we specified three-level models with 1000 replications each, in which trials (t = 18) were nested within weeks (8, 12, or 16) that were further nested within persons (n = 60, or 48 assuming 20% attrition). This yielded strong power (i.e., > 0.90) across all combinations of sample size and number of weeks. To determine power to detect transfer of training, we again specified three-level models with 1000 replications each, with the same nesting of trials (t = 18) within weeks (8, 12, or 16) within persons (n = 60 or 48). To determine power to detect exploratory predictors of changes in IA and effects of IA change on functional outcomes, we modified the models so that changes in IA were regressed on the predictors, or functional outcomes were regressed on changes in IA. This yielded near-adequate power (≈0.78–0.80) with 48 participants and adequate power (≈0.86–0.88) with 60 participants.
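The logic of Monte Carlo power analysis (simulate many datasets under an assumed effect, test each, and count rejections) can be illustrated without Mplus. The sketch below is a deliberately simplified two-stage approximation, not the three-level Bayesian model used for the reported estimates: it compares only two assessment weeks, uses a normal-approximation test on person-level change scores, and its variance parameters `tau` and `sigma` are hypothetical values chosen for illustration.

```python
import math
import random
from statistics import fmean, stdev

Z_CRIT = 1.959963984540054  # two-sided alpha = .05 (normal approximation)


def delta_for_d(d, tau, sigma, trials):
    """Raw mean change corresponding to a standardized effect d on change scores."""
    return d * math.sqrt(tau ** 2 + 2 * sigma ** 2 / trials)


def simulate_power(n_persons, delta, tau=1.0, sigma=2.0, trials=18,
                   reps=1000, seed=1):
    """Share of simulated studies in which the mean change in IA error is significant.

    Each person has a true change ~ N(delta, tau). The observed change is the
    difference between two assessment-week means of `trials` trial-level IA
    errors with residual SD sigma, so measurement noise has SD sigma*sqrt(2/trials).
    """
    rng = random.Random(seed)
    noise_sd = sigma * math.sqrt(2 / trials)
    hits = 0
    for _ in range(reps):
        changes = [rng.gauss(delta, tau) + rng.gauss(0.0, noise_sd)
                   for _ in range(n_persons)]
        z = fmean(changes) / (stdev(changes) / math.sqrt(n_persons))
        hits += abs(z) > Z_CRIT
    return hits / reps


for n in (48, 60):
    print(n, simulate_power(n, delta_for_d(0.5, 1.0, 2.0, 18)))
```

Setting `delta=0` is a useful sanity check: the rejection rate should then sit near the nominal 0.05.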

Results

To inform development of the iTEST software, we completed pre-implementation alpha testing with participants with psychotic disorders (n = 5 for 5 days; n = 10 for 3 weeks). Alpha testing included 6 days per week of the revised VLMT and METER IA training tasks and aimed to obtain feedback on usability and adherence/engagement. Following alpha testing, we conducted beta testing with participants with psychotic disorders (UTD: n = 5; UCSD: n = 5) to assess the feasibility and usability of the iTEST mobile tasks. Beta participants completed up to 6 days of tasks over one week, with the flexibility to choose one rest day, and did not receive coaching. After 7 days, participants completed the System Usability Scale and provided qualitative feedback. The overall task completion rate was 96.5%. System Usability Scale scores ranged from 72.5 to 100, with a mean of 93.8 (SD 8.0), which is considered excellent and is well above the accepted benchmark of 68 [70]. Qualitative feedback indicated little to no difficulty with the program and high overall satisfaction. As of November 2023, recruitment is in progress, and enrollment in the open trial stage is expected to be completed by April 2025.
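For readers unfamiliar with the System Usability Scale, the reported scores follow its standard scoring rule, sketched below: ten items rated 1–5, odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a 0–100 score. The function name is ours, for illustration.

```python
def sus_score(responses: list[int]) -> float:
    """Standard System Usability Scale score (0-100) from ten 1-5 ratings.

    Odd-numbered items (index 0, 2, ...) contribute rating - 1;
    even-numbered items (index 1, 3, ...) contribute 5 - rating.
    """
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten ratings between 1 and 5")
    total = sum(r - 1 if i % 2 == 0 else 5 - r for i, r in enumerate(responses))
    return total * 2.5
```

A respondent who answers 3 to every item scores 50, so the benchmark of 68 sits well above the scale midpoint.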

Discussion

Previous research indicates that introspective accuracy (IA) is impaired in people with psychotic disorders and is an independent predictor of functional outcomes [4,5,6,7,8]. However, no interventions have been developed and tested to improve IA. Experiments in healthy adults have indicated that IA can be modified and that improvements in one domain can be generalized to untrained tasks. Therefore, IA is a potential intervention target in psychotic disorders. Our trial represents a first step in testing whether IA is malleable in psychotic disorders, and whether improvements in trained domains generalize to untrained tasks. Further, we will assess whether coupling IA training with individualized coaching supports the translation of improved IA abilities to individually meaningful functional goal achievement. Although this trial is focused on a standalone intervention targeting IA, iTEST could be adapted to be coupled with cognitive training programs to expand training transfer and further impact functional outcomes.

Although several mobile interventions have been deployed in psychotic disorders [71], very few incorporate drill-and-practice mobile cognitive training. iTEST employs an app that engages with participants on a daily basis, coupled with coaching in applying IA to recovery goals consistent with elements of more effective cognitive remediation programs [34]. The mobile training experience includes design elements to increase engagement, such as streaks and digital art that grows with user engagement. This format may be useful for deploying cognition-targeted interventions in psychotic disorders.

Our operationalization of IA focuses on real-time, task-based judgments that can be directly compared with actual performance. This differs from approaches that measure IA through general self-ratings of abilities, confidence ratings, metacognitive questionnaires, or comparisons among patient, family, and clinician ratings, where determining accuracy is more complex. While each of these approaches is informative, behavioral tasks provide an objective standard against which to measure self-assessment accuracy, enabling precise quantification of the gap between perceived and actual performance. This methodological clarity is particularly valuable for intervention testing, because it allows us to track changes in accuracy over time and to provide immediate, unambiguous feedback during training.

Further, while IA impairments often manifest as task-specific overconfidence, individuals with psychotic disorders may simultaneously underestimate their broader abilities. iTEST addresses this complexity through mobile training that targets task-specific IA with structured feedback, while coaching addresses broader self-evaluation patterns. This study measures both aspects, through the performance tasks and the Defeatist Performance Attitude Scale (DPAS), respectively.

Because iTEST is a new intervention, our trial follows the NIMH’s experimental therapeutics framework, focusing on the impact on both trained and untrained IA alongside feasibility and acceptability. The design includes an embedded investigation of dosing, with the go/no-go criteria evaluated at 3 follow-up time points (8, 12, and 16 weeks); the earliest “dose” at which the criteria are met would be selected. If the go/no-go criteria are met, the planned next step is a larger, efficacy-focused randomized controlled trial comparing iTEST with a time-equivalent active comparator that employs both mobile assessments and coaching without IA training. That trial would evaluate whether iTEST improves functional outcomes to a greater extent than the active comparator and whether change in IA mediates the effect of iTEST on functional outcomes.

This pilot study of iTEST will provide important information on the malleability of IA in psychotic disorders and on whether blended, mobile, cognition-targeted interventions might be a route to expanding access to treatment. Our findings may pave the way for more personalized and accessible cognitive remediation options, helping to bridge the gap between research and practical application in mental health treatment. Ultimately, this work could help individuals with psychotic disorders achieve better functional outcomes and quality of life.

Citation diversity statement

The authors have attested that they made efforts to be mindful of diversity in selecting the citations used in this article.
