Unsupervised identification of crystal defects from atomistic potential descriptors

Introduction

Machine learning (ML) has transformed the computational modeling of atomic interactions, a cornerstone of molecular dynamics (MD) simulations. Current machine learning potentials (MLPs) allow us to reliably simulate large systems and long timescales with ab initio accuracy1. Beyond the fitting of potential energy surfaces, the development of ML tools has also benefited the analysis of atomistic simulations, providing valuable structural information. For instance, supervised learning approaches based on neural networks have been used to identify different phases in polymorphic systems2,3,4,5,6,7,8 as well as dynamical processes9. However, characterizing local atomic environments of crystal defects is a challenging task10,11,12. Conveniently, the development of MLPs has come along with refined features describing local atomic environments, such as the Smooth Overlap of Atomic Position (SOAP) descriptors13 or the atom-centered symmetry functions14, which are applied in this work. Designing order parameters can be complex and computationally intensive, especially if no structural classification is available in advance. Therefore, unsupervised ML methods have emerged as a promising path towards discovering relevant structural features in complex systems15. Instead of trying to learn the local chemical environment from typically tens to hundreds of dimensions of atomic descriptors, it is common practice in unsupervised methods to, initially, reduce the dimensionality to two or three dimensions. Several methods for dimensionality reduction have been applied in the context of simulations16,17,18,19,20,21. In particular, unsupervised classification tasks have been performed using (i) topological graph order parameters combined with both Principal Component Analysis (PCA)22 and diffusion maps23,24, (ii) Gaussian mixture models together with both PCA25 and neural-network-based autoencoders26,27, (iii) a band structure encoding along with the t-distributed stochastic neighbor embedding (t-SNE)28, (iv) simple three-body features29 jointly with the Uniform Manifold Approximation and Projection (UMAP)30, and (v) SOAP combined with UMAP31,32,33 and the Multidimensional Scaling Algorithm34.

To effectively analyze MD trajectories, it is crucial to employ algorithms that meet three fundamental criteria: (1) minimal computational overhead, which can be achieved by utilizing precomputed atomistic potential descriptors; (2) distinct separation of clusters for simple classification; and (3) robustness without variations in hyperparameters and initialization. Topological graph methods fail to satisfy the requirement of minimal computational overhead due to the additional calculations involved in generating graph-based order parameters22. Furthermore, metric-preserving algorithms such as PCA and autoencoders often produce distributions with overlapping clusters, lacking clear separation, as demonstrated in this work. Graph-based algorithms like t-SNE and UMAP are known to be sensitive to variations in hyperparameters and initialization procedures35,36,37. The novel graph-based algorithm Pairwise Controlled Manifold Approximation Projection (PaCMAP) has been reported to be more robust and superior compared to t-SNE and UMAP in toy problems38 and single-cell transcriptomic data analysis39.

Our study compares three unsupervised dimensionality reduction techniques for identifying crystal defects: PCA, UMAP, and PaCMAP. Our goal is to validate the reported superiority of PaCMAP over the established UMAP method. We maintain consistent hyperparameters across all studies to evaluate their robustness when cluster identities are unknown beforehand. The only exception is the initialization process, which has been shown to be crucial for achieving reliable results. We select two standard benchmark systems: Si and H2O. First, we assess the methods’ ability to classify phases and then focus on locating point defects. For silicon, we also identify surface atoms, whereas for H2O, we detect a hexagonal ice nucleus surrounded by supercooled water, an essential feature for studying ice nucleation40,41. A detailed methodology for PaCMAP is provided, as this algorithm is less well-known than PCA and UMAP. Additionally, we discuss the nonparametric nature of PaCMAP and our approach to addressing it.

Results

To demonstrate the effectiveness of the method, we present results from specific applications. However, the following methodology is general and can be applied across different phases, trajectories, thermodynamic states, and systems.

Silicon phases

Silicon crystallizes in a diamond structure under standard conditions. When subjected to pressure, the diamond structure of silicon transforms into the β-Sn structure at approximately 10 GPa, the orthorhombic structure (space group Imma) at around 13 GPa, and the simple hexagonal structure at around 16 GPa42. High-pressure structures share the same unit cell, where atoms are positioned at (0, 0, 0), (0.5, 0.5, 0.5), (0.5, 0, 0.5 + ν), and (0, 0.5, ν). A ν value of 0.25 corresponds to the β–Sn structure, while the SH structure is characterized by ν = 0.5. The Imma phase provides a continuous transition between these phases. Consequently, the β–Sn and SH phases closely resemble each other, and their differentiation may not always be trivial. It is confirmed by the PCA analysis in Fig. 1a, where these phases overlap, and a clear boundary cannot be unequivocally determined. Similarly, distinguishing the liquid phase from the solid is not achievable through PCA, as the liquid embedding overlaps with other solid phases, as already observed in water43.

Included are silicon phases (a–c), diamond monovacancy (d–f), diamond interstitial (g–i), and diamond surface (j–l). Each color in the panels of the first row comes from a different dataset so we know in advance the ground truth embedding and it is used for testing purposes. The other rows contain a single dataset so one color is used to highlight that no prior information is required. After cluster separation, visual inspection of structures was sufficient to identify the cluster’s underlying meaning. This is possible because atoms in the vicinity must have similar local chemical surroundings, as we have also empirically verified.

Our preliminary experiments revealed that PaCMAP and UMAP highly depend on the initialization process. Specifically, using UMAP’s default spectral initialization resulted in correct clustering but also artificial cluster creation (Fig. S1a, in Supplementary information) and misclassification of parts of the SH phase as β-Sn (Fig. S1b). These results were obtained with identical hyperparameters, only varying the random seed. Similar results are observed with PaCMAP random initialization. To address this instability, we switched to PCA initialization. Following this change, both algorithms successfully separated the liquid phase from the solid phases and clearly distinguished between β-Sn and SH phases, as shown in Fig. 1b, c. The misclassification rates for UMAP and PaCMAP were 0.10% and 0.08%, respectively.

Diamond point defects and surface atoms

The subsequent analysis aims to localize monovacancies and interstitials within silicon’s diamond structure. Monovacancies can be identified by locating the four nearest neighbors that would have formed bonds with the missing atom if it had been present. The PCA plot in Fig. 1d shows two distributions without clear separation, whereas UMAP and PaCMAP clustering in Fig. 1e, f clearly distinguish atoms near monovacancies from those in other regions. Graph-based algorithms accurately identify the four nearest neighbors for 89% of structures, while the remaining cases exhibit variations (3, 5, and 6 neighbors) caused by local distortions introduced by interstitials. Nonetheless, the algorithms successfully locate the monovacancy in all tested cases.

Identifying interstitial atoms is straightforward when their identity remains constant throughout the simulation. However, additional analysis is required if an interstitial atom changes its identity over time. While PCA provides no useful information (Fig. 1g), UMAP and PaCMAP categorize silicon systems with interstitial defects into two interconnected and one separated cluster, as shown in Fig. 1h, i. The largest cluster includes atoms far from the defect, while the middle interconnected cluster comprises atoms near the interstitial. The smallest clusters are composed of atoms not arranged on a diamond lattice. Figure S2 presents examples from the smallest cluster, showing local chemical environments with varying degrees of distortion.

We conclude our silicon analysis by examining (001) diamond surfaces using a relatively small dataset comprising 29 structures. The PCA analysis reveals only overlapping distributions (Fig. 1j). While UMAP divides the local environments into three clusters (Fig. 1k), PaCMAP identifies only two (Fig. 1l). Upon examining one of the surface structures depicted in Fig. S3, we find that the UMAP clusters primarily correspond to bulk atoms, surface atoms, and first-neighbors to surface atoms. PaCMAP misses the first neighbors to surface atoms.

Ice/water classification

The second part of the paper is focused on the differentiation of ice-like and liquid-like water molecules in the context of defects and ice nucleation. It would be expected that classifying disordered and ordered phases would be easier than between similar crystal polymorphs, but this task was more challenging than the previous case of silicon. Mid- to long-range ordering is a critical feature for differentiation and is not primarily addressed by our potential descriptors. As shown in Fig. 2a–c, none of the tested methods distinguishes the water phase from the ice phase. This is due to the greater significance attributed to O-H bonds of water molecules compared to hydrogen bonds in used atomic potential descriptors. When the O-H bond length is fixed, as in the case of certain classical force fields, a complete separation between liquid and solid phases is possible. Tuning the descriptors may improve the classification, however, sets of symmetry functions that perform well for neural network potentials in molecular systems are expected to also perform well for unsupervised classification. In the supplementary information, we include an example of the role of hyperparameters of local atomic descriptors (Fig. S8).

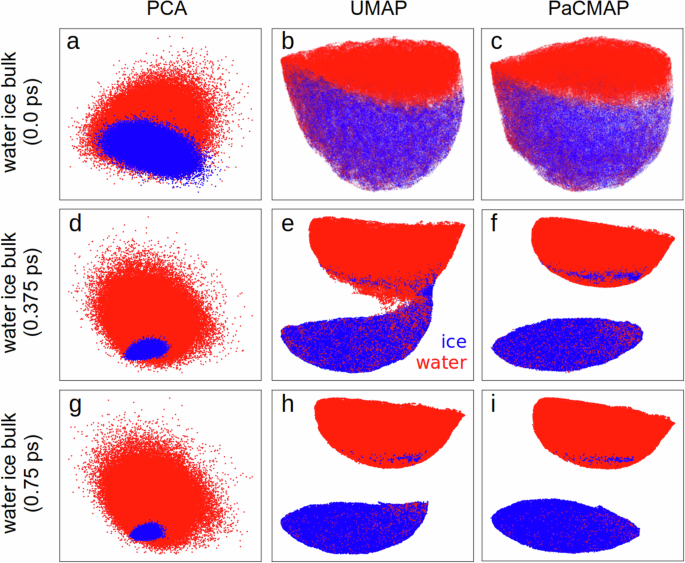

a–c show results without time averaging, (d–f) show results averaged over a 0.375 ps time interval, and (g–i) display results averaged over a 0.75 ps time interval. Since the water and ice phases originated from different datasets, we have distinguished them by color.

To minimize the impact of thermal noise from OH bond vibrations, one can apply a moving average either during the molecular dynamics simulation or afterward to the calculated descriptors. Here, we averaged the descriptors over a time window that was iteratively changed until successful classification. This allows us to separate phases considering that each local environment may have a different decorrelation time. Hence, by iteratively trying wider time windows we ensure that we do not miss relevant environments with shorter characteristic timescales, which are expected to be the limiting factor. PaCMAP achieves complete water and ice phase separation at a moving average interval of 0.375 ps (Fig. 2f), with classification errors of only 1.14% for water and 0.68% for ice. As the moving average length increases, phase differentiation accuracy improves. For instance, a 0.75 ps moving average reduces errors to 0.34% for water and 0.14% for ice. In contrast, UMAP fails to achieve complete cluster separation at 0.375 ps (Fig. 2e) or even at 0.5 ps (Fig. S4). Only with a 0.75 ps moving average does UMAP entirely separate water and ice local environments (Fig. 2h), albeit with a higher classification error for water (0.59%) compared to PaCMAP (0.34%). The classification error for ice remains similar, at 0.14%. The increased classification error for water is primarily due to the probability of water molecules forming local ice-like structures. In contrast, ice molecules remain closely aligned with their crystal lattice structure.

Ice defects

Time averaging has been shown to be essential in order to differentiate between water and ice phases using UMAP. Specifically, a moving interval of at least 0.75 ps is required to separate water and ice phases for UMAP effectively. Therefore, we employed this same interval in subsequent tests to identify molecular monovacancy, interstitial atoms, and ice nuclei in the liquid phase.

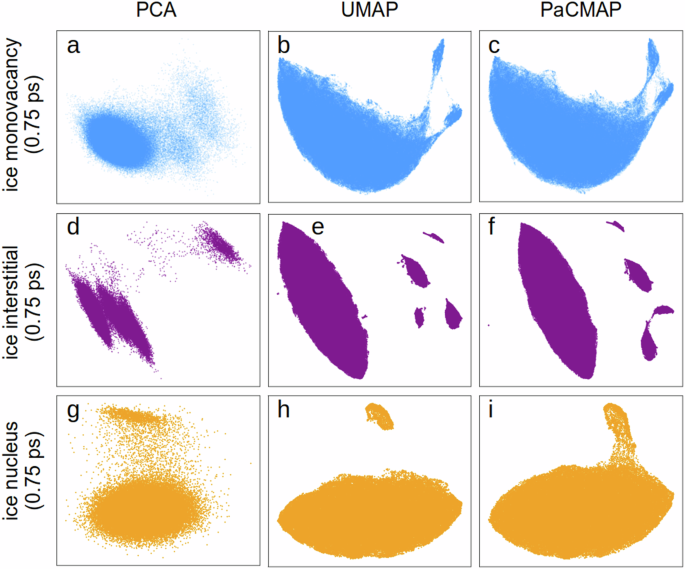

For localizing monovacancies in ice, UMAP proved the most effective for separating clusters (Fig. 3a–c), although complete separation is not achieved. The two emerging clusters correspond to distinct local chemical environments near monovacancy defects, specifically, two oxygen atoms missing a hydrogen donor and two oxygens missing a hydrogen acceptor per structure. These four oxygens near molecular monovacancy enable straightforward tracking of the monovacancy location.

Included cases are the ice monovacancy (a–c) and the ice interstitial (d–f) defects and the rounded ice nucleus in water (g–i), with potential descriptors averaged over a 0.75 ps time interval. For each case study, all atoms originate from the same dataset; therefore, the ground truth clustering is unknown.

In the case of identifying interstitial atoms, even PCA demonstrates sufficient clustering (Fig. 3d). UMAP and PaCMAP not only identify interstitials in 99.3% of structures (top right clusters in Fig. 3e, f) but also their neighbor atoms (three clusters below interstitial cluster). Notably, the small cluster on the left represents a second neighbor to an interstitial atom that has been displaced from its crystal position due to interstitial diffusion.

Ice nucleus in supercooled water

Our previous analysis compared the bulk structures of the water and ice phases. Identifying an ice nucleus within the liquid phase proved more challenging due to its distinct structure, influenced by interfacial stress. The interface is characterized by molecules with intermediate properties between liquid and solid, making it difficult to distinguish between them. To avoid finite-size effects in nucleation studies, the crystalline nucleus was significantly smaller than the surrounding liquid, resulting in an enormous class imbalance among molecules in the three phases (liquid, interface, and nucleus). Even using a moving average of 0.75 ps, only UMAP could effectively differentiate between the liquid and ice nucleus, as illustrated in Fig. 3g–i. While PaCMAP showed a visible cluster forming in the upper right, it is not distinct. The structure contained ~75,000 molecules in the liquid phase and about 2000 molecules in the solid phase, resulting in an enormous class imbalance. Augmenting the dataset with an additional 2000 local environments of bulk ice structures enabled complete cluster separation even for PaCMAP (Fig. S5b). However, only partial overlap with the ice bulk environment is observed in the newly separated cluster. The non-overlapping segment corresponds to interface atoms, as indicated by the periodicity loss at the nucleus’s edge in Fig. S5c.

Discussion

We have demonstrated the applicability of atomistic potential descriptors for unsupervised identification of local atomic environments in silicon and water systems. These descriptors enable both phase classification and the detection of point defects and interfaces. We compared the clustering capabilities of two widely used methods, PCA and UMAP, with a novel approach, PaCMAP. Although PCA provided limited valuable information for phase or defect detection, its initialization served as an effective starting point for both UMAP and PaCMAP. Without PCA initialization, the clustering results of both methods were unstable.

Both UMAP and PaCMAP successfully separated silicon phases and identified diamond point defects. UMAP outperformed PaCMAP in distinguishing the first neighbors of the silicon surface, while PaCMAP only identified the surface atoms. Furthermore, UMAP could identify ice nuclei in water without additional data points, whereas PaCMAP required augmenting the dataset. This sensitivity to class imbalance suggests that PaCMAP may struggle with unbalanced datasets. In contrast, when class imbalance was not an issue, such as distinguishing between water and hexagonal ice, PaCMAP outperformed UMAP. PaCMAP required only a 0.375 ps moving average, whereas UMAP needed twice that time. Although hyperparameter tuning could potentially yield similar results for both methods, our focus was on unsupervised clustering, where the number of clusters is generally unknown a priori.

In conclusion, PaCMAP’s superiority over UMAP has not been confirmed. While PaCMAP excelled in separating the water and ice phases, it struggled with unbalanced datasets. Although UMAP clustering is sensitive to hyperparameter variations, it performs effectively with default settings, except for the initialization process, where the default spectral initialization produces unstable results. Both algorithms perform well overall, and the choice between them should be determined by the specifics of the system under study.

Methods

Workflow

This article demonstrates how atomistic potential descriptors can identify crystal defects, surfaces, interfaces, and phases without supervised learning. While we examine most cases individually to illustrate key concepts, the methodology broadly works across different local environments, trajectories, and chemical elements. In Fig. 4, we show a schematic overview that outlines the general workflow step-by-step.

The workflow begins with a structure dataset and proceeds through dimensionality reduction and clustering analysis. Dotted lines indicate optional feedback paths when clustering is unsuccessful: time averaging of atomic positions or descriptors can be performed, or descriptors may be enhanced, and the process returns to feature standardization if needed. If clusters are identified, the workflow proceeds to classify atomic environments into phases, defect sites, and interface/surface atoms.

PaCMAP algorithm

The overarching objective of PaCMAP is to bring proximate data points within the high-dimensional space into closer proximity within the low-dimensional space, as well as more distant data points in the original space to greater distances within the low-dimensional space. Since it would be computationally demanding to consider all the distances between all atoms with each other during optimization, PaCMAP restricts the number of neighbors for each data point to a finite value. Neighbors are categorized into three types: near pairs (nNP), mid-near pairs (nMN), and further pairs (nFP). Attractive forces are applied to near and mid-near pairs, whereas repulsive forces are exerted on further pairs. The selection of neighbors is a one-time process, and the neighbor pairs remain constant throughout the optimization phase. The whole algorithm unfolds in three steps:

1) To determine near neighbors, an initial step involves the selection of a subset comprising the minimum of either nNP + 50 or the total number of observations (N) nearest neighbors based on Euclidean distance. Subsequently, the nearest neighbors are identified according to the scaled distance metric ({d}_{ij}^{2}=frac{| | {{{bf{x}}}}_{i}-{{{bf{x}}}}_{j}| {| }^{2}}{{sigma }_{i}{sigma }_{j}}) between observation pairs (i, j), where Xi represents atomistic potential descriptors and σi is the average distance between observation i and its Euclidean nearest neighbors falling in the fourth to sixth positions. This scaling is implemented to accommodate potential variations in the magnitudes of neighborhoods across different regions of the feature space. In selecting mid-near pairs, six additional points are randomly sampled (uniformly), and the second nearest point among them is chosen. Lastly, further pairs are determined by randomly selecting nFP additional points.

2) To initialize the low dimension embedding Y, each datapoint i is associated with a 2D vector yi. As PaCMAP is a nonparametric algorithm without an underlying function that generates low-dimension embedding, values are generated by selecting the most important PCA components.

3) Then, with a predefined neighbor list for each datapoint and an initial position in the low-dimensional embedding provided by PCA, the optimization process commences minimizing the loss function by varying yi with the ADAM optimizer44:

where ({tilde{d}}_{ab}=| | {{{bf{y}}}}_{a}-{{{bf{y}}}}_{b}| {| }^{2}+1). The first two terms correspond to the attractive interactions between near and mid-near pairs. The third term represents a repulsive interaction that facilitates cluster separation. The pairs contribute to the loss function with weights determined by the coefficients wNP, wMN, and wFP, collectively constituting the overall loss. These weights are dynamically updated throughout the algorithm as part of the optimization process. The dynamic update of weights follows a specific scheme during different iterations of the optimization process:

-

Iterations 0 to 100: wNP = 2, ({w}_{MN}=1000left(1-frac{t-1}{100}right)+3left(frac{t-1}{100}right)), wFP = 1

-

Iterations 101 to 200: wNP = 3, wMN = 3, wFP = 1

-

Iterations 201 to 450: wNP = 1, wMN = 0, wFP = 1

The optimization process has three distinct phases designed to circumvent local optima. The initial phase focuses on global structure achieved through substantial weighting of mid-near pairs. As this phase progresses, weights on mid-near pairs gradually decrease, facilitating algorithmic focus shifting from global to local structures. The subsequent phase concentrates on enhancing local structure while retaining the global structure acquired in the first phase. Finally, the third phase prioritizes the refinement of local structure by reducing the weight of mid-near pairs to zero, accentuating the role of repulsive forces to separate cluster boundaries more distinctly. Figure S6 provides an example of the optimization process for silicon phases.

Parametric PaCMAP

In this section, we address the most significant limitation of the PaCMAP algorithm: its nonparametric nature. It means that predicting new data points is not directly possible, as the algorithm does not construct a function f(x): Rd → R2 that would map high-dimensional descriptors to a 2D latent space. This limitation can be a considerable constraint in certain real-world applications where the goal is to cluster training data and apply the learned mapping to new, simulated data points. A practical approach involves generating embedding/labels, which are then employed as target for supervised regression or classification tasks. Subsequently, a simple feedforward neural network can be easily trained and rapidly produce predictions for new local environments. To illustrate this approach, we trained a neural network with three hidden layers, each comprising 50 nodes and employing ReLU activation functions for silicon and bulk liquid and ice phases.

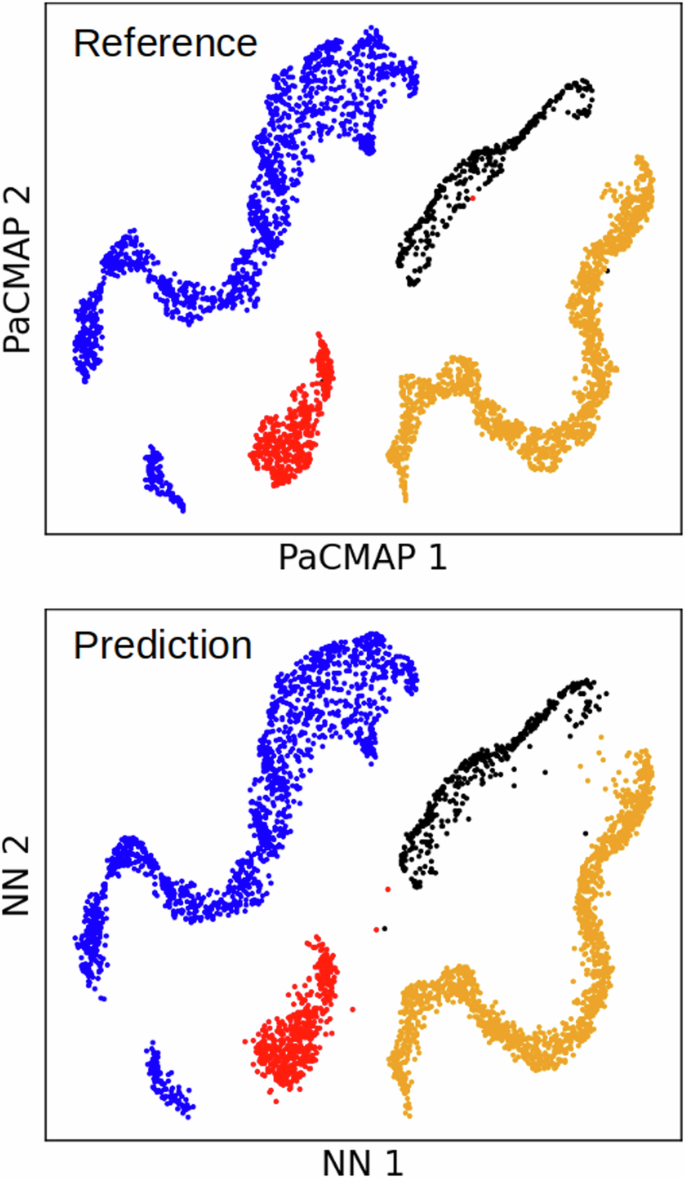

In Fig. 5, we compare the neural network’s predictions with the test reference dataset (PaCMAP mapping), proving visually that the neural network has successfully learned the underlying mapping of PaCMAP. A similar comparison of bulk liquid and ice phases is provided in Fig. S7. However, classification is a more practical approach for real-world applications. Each cluster from the training data would be assigned a class, and a classifier would then predict the class of each cluster directly from the descriptors, eliminating the need for 2D embedding.

Comparison of reference test data (top) and neural network predictions (bottom) for distinguishing between silicon phases. The test dataset contains 4000 local chemical environments.

Datasets, atomistic potential descriptors, and PaCMAP hyperparameters

We have selected two well-known systems for benchmarking unsupervised algorithms: silicon (Si) and water (H2O). In the case of silicon, our focus is on identifying the crystal lattice, point defects, and the surface. Our emphasis for water molecules is on liquid and hexagonal ice distinction and molecular point defects.

The General-Purpose Interatomic Potential for Silicon encompasses 2475 structures, which can be categorized into 23 different structure types45. Not all structure types contain a sufficient number of structures for data-driven analysis. Therefore, we restrict our analysis to distinguishing between diamond, β-Sn, simple hexagon (SH), and liquid phase, but also localization of monovacancies, interstitial positions, and identifying surface atoms.

Water structures include bulk liquid, ice, and a spherical nucleus surrounded by supercooled water. All structures have been generated by running MD simulations with n2p246 – LAMMPS47 using a Behler-Parrinello neural network trained on ab initio data based on the RPBE-D3 zero damping density functional48. Initial ice-Ih structures were generated with GenIce49 and then simulated in the anisotropic NpT ensemble at 250 K and 0 bar for 700 ps. Supercooled water presents prolonged relaxation, so producing equilibrated structures requires either very long simulations or the use of special techniques. Here, we have run a 32 replica parallel tempering simulation at constant 0 bar pressure during 8 ns covering from 211 K up to 335 K. Then, the last structure from the distribution of 250 K was selected and simulated for 2 ns more at constant temperature and pressure (250 K and 0 bar) for production-level data acquisition. To produce the nucleus system, we inserted a perfect spherical ice-Ih nucleus of about 2000 water molecules into a supercooled water configuration, producing 78,856 water molecules. We pre-equilibrated the system using the TIP4P/Ice force field50 via the GROMACS package51. The temperature was set to 250 K, pressure to 0 bar, and the nucleus size barely changed during 500 ps. Then, we switched to the MLP and slightly heated the system towards 260 K for 10 ps. Finally, we have run 2.5 ns in the NpH ensemble for production-level structures at 0 bar. The nucleus remained relatively stable in size, and the average temperature quickly converged to ~255 K. To study the role of hyperparameters in local descriptor space as described in the supplementary information, we use dataset-2 from ref. 52.

We employ Behler-Parinello descriptors (symmetry functions) to encode the local structure information due to their simplicity and widespread applicability14. For silicon, we constructed a descriptor vector comprising 20 radial Gaussian symmetry functions and 15 angular polynomial symmetry functions53 (details are provided in Tables S1 and S2). These symmetry functions are designed generally without leveraging specific knowledge about the bonding54. A cutoff distance of 5 Å was set to prevent self-interaction in smaller supercells within the dataset. For water analysis, we chose well-tested symmetry functions commonly employed in molecular dynamics simulations accelerated by MLPs48 with a cutoff of 6.35 Å. Only oxygen descriptors that include both oxygen-oxygen and oxygen-hydrogen interactions have been utilized in analyses.

Each data point corresponds to an individual atomic environment within the supercell without averaging over the cell or time unless otherwise specified.

Regarding the hyperparameters of the PaCMAP algorithm, we have fixed parameters for all presented cases. This entails nNP = 10, nMN = 5, and nFP = 25 with PCA initialization.

A linear classifier was used for the water system. As silicon phases originate from different datasets, the misclassification rate for each silicon phase was obtained separately using the Local Outlier Factor (LOF) method55.

Software

Production-level molecular dynamics simulations are performed with n2p246 – LAMMPS47. GROMACS51 is used for setting up the ice-Ih nucleus system. Initial configurations of ice are generated with GenIce49. The symmetry functions are evaluated using the n2p2 package46. The structures are rendered using OVITO56. Dimensionality reduction is performed using packages: scikit-learn for PCA57, UMAP58, and PaCMAP38. Misclassification rates were obtained using scikit-learn57.

Neural networks trained on PaCMAP labels are constructed and used for inference with PyTorch59.

Responses